This repo is the implementation of the EMNLP 2023 paper "Revisiting Block-based Quantisation: What is Important for Sub-8-bit LLM Inference?"

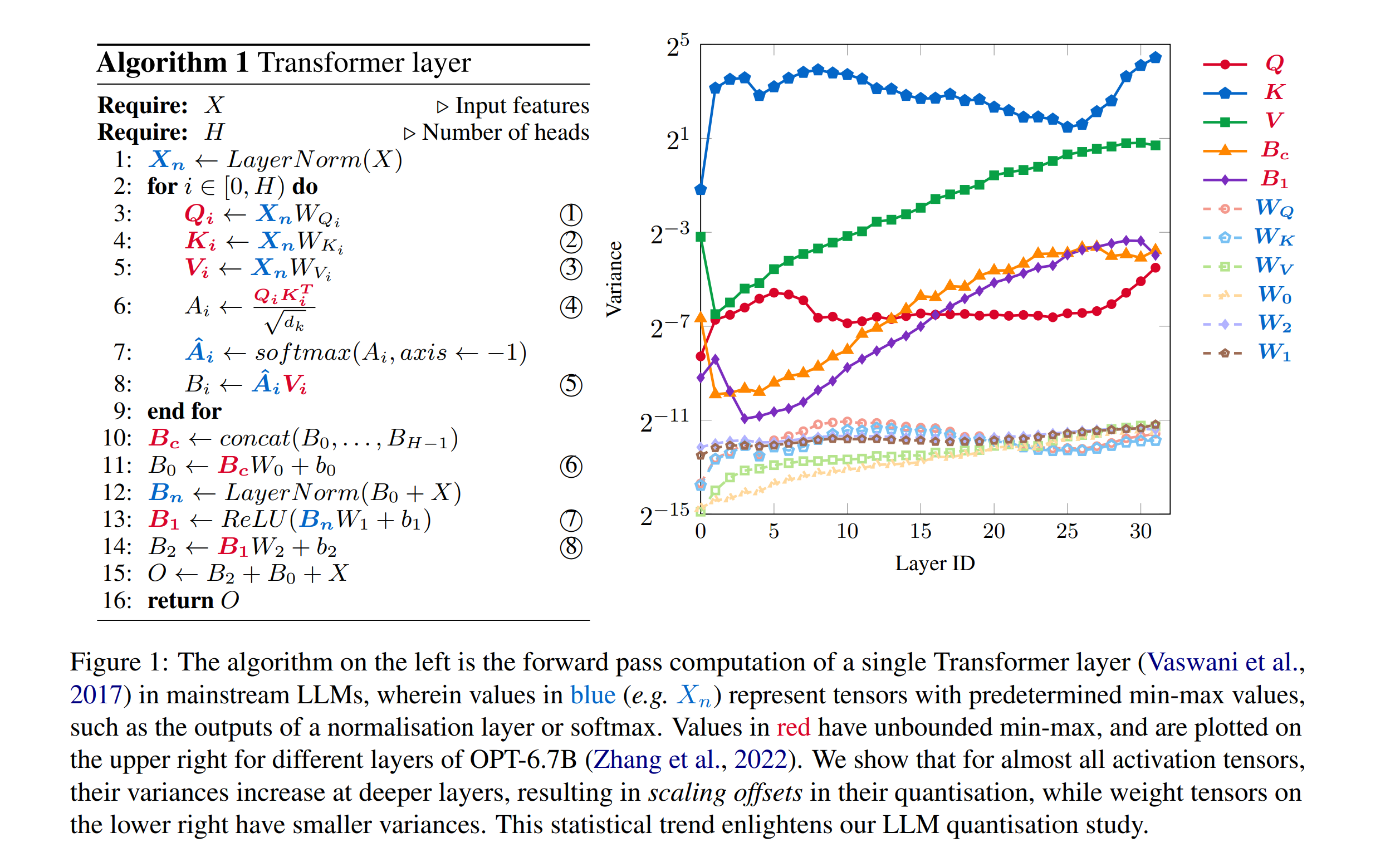

The inference of large language models (LLMs) requires immense computation and memory resources. To curtail these costs, quantisation has emerged as a promising solution, but existing LLM quantisation mainly focuses on 8-bit. In this work, we explore the statistical and learning properties of the LLM layer and attribute the bottleneck of LLM quantisation to numerical scaling offsets. To address this, we adapt block quantisations for LLMs, a family of methods that share scaling factors across packed numbers. Block quantisations efficiently reduce the numerical scaling offsets solely from an arithmetic perspective, without additional treatments in the computational path. Our nearly-lossless quantised 6-bit LLMs achieve a

A Conda environment is recommended. To create one, run:

git clone https://github.com/ChengZhang-98/llm-mixed-q.git

cd llm-mixed-q

git submodule update --init submodules/lm-evaluation-harness

conda env create -f environment.yml

Supported model architectures:

- BERT

- OPT

- Llama

Compatible with HuggingFace Transformers. Checkpoints of the supported model architectures from HuggingFace Transformers can be loaded, quantised, and evaluated.

Supported quantisation (software-emulated):

- Symmetric fixed-point

- Logarithmic

- Minifloat

- De-normalized minifloat (DMF)

- Block Logarithmic (BL)

- Block Floating Point (BFP)

- Block Minifloat (BMF)
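To illustrate the block idea behind formats such as BFP (one scaling factor shared by a group of numbers), here is a minimal software-emulation sketch in plain Python. The function name `bfp_quantise`, the block size, and the mantissa width are assumptions chosen for illustration; they are not taken from this repo's implementation or its experiment configs.

```python
import math


def bfp_quantise(values, block_size=16, mantissa_bits=5):
    """Emulate block floating point: one shared exponent per block.

    Each element keeps a sign plus `mantissa_bits` magnitude bits. The
    exponent is derived from the largest magnitude in the block.
    (Illustrative sketch only, not the repo's quantiser.)
    """
    out = []
    max_mag = 2 ** mantissa_bits - 1  # largest integer mantissa magnitude
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        peak = max(abs(v) for v in block)
        if peak == 0.0:
            out.extend(0.0 for _ in block)
            continue
        # frexp gives peak = m * 2**shared_exp with 0.5 <= m < 1
        shared_exp = math.frexp(peak)[1]
        # Value of one mantissa step under the shared exponent
        scale = 2.0 ** (shared_exp - mantissa_bits)
        for v in block:
            q = round(v / scale)
            q = max(-max_mag, min(max_mag, q))  # clamp to mantissa range
            out.append(q * scale)
    return out
```

In this sketch, a small value that shares a block with a large one can be rounded to zero (for example `0.01` next to `8.0`), while the same value in its own block keeps most of its precision. That effect is why the block size matters.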

Search algorithms (provided by Optuna):

- Random

- TPE

- NSGA-II

- NSGA-III

- QMC
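For intuition about the simplest strategy listed above, random search, the sketch below samples per-layer bit-widths uniformly and keeps the best-scoring configuration. It is a standard-library stand-in rather than Optuna code: `random_search`, `toy_objective`, and the bit-width choices are hypothetical, and the objective is a made-up trade-off, not a measurement from any model.

```python
import random


def random_search(num_layers, choices, objective, n_trials=50, seed=0):
    """Sample per-layer bit-widths uniformly and keep the best-scoring config."""
    rng = random.Random(seed)
    best_cfg, best_score = None, float("-inf")
    for _ in range(n_trials):
        cfg = [rng.choice(choices) for _ in range(num_layers)]
        score = objective(cfg)
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


def toy_objective(cfg):
    # Hypothetical stand-in: a fake "accuracy" that favours wide early
    # layers, minus a fake "cost" proportional to the average bit-width.
    fake_accuracy = sum(bits / (i + 1) for i, bits in enumerate(cfg))
    fake_cost = sum(cfg) / len(cfg)
    return fake_accuracy - 0.5 * fake_cost


best_cfg, best_score = random_search(4, [4, 6, 8], toy_objective, n_trials=200)
```

The other listed strategies (TPE, NSGA-II, NSGA-III, QMC) differ in how the next candidate is proposed, not in this overall sample-and-score loop.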

This example produces the variance plot for Vicuna-7B.

cd llm-mixed-q/experiments/emnlp/section_1/profile_variance

python profile.py

PTQ on Wikitext2

This example evaluates W6A6 BFP Llama models on Wikitext2.

cd llm-mixed-q/experiments/emnlp/section_4.2/perplexity

./group_bfp_6bit.sh
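Judging by the directory name, the metric for this example is perplexity. As a reminder of what that number means, the sketch below computes perplexity from per-token negative log-likelihoods. The function name `perplexity` is an assumption, natural-log NLLs are assumed, and this is not the evaluation code used by the script.

```python
import math


def perplexity(token_nlls):
    """Perplexity = exp(mean negative log-likelihood per token), natural log."""
    if not token_nlls:
        raise ValueError("need at least one token")
    return math.exp(sum(token_nlls) / len(token_nlls))
```

A model that assigns every token a probability of 1/4 has a per-token NLL of `log(4)`, so its perplexity is 4. Lower is better, and a quantised model is typically compared against its full-precision baseline on the same text.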

PTQ on downstream tasks

This example evaluates W6A6 BFP Llama models on downstream tasks in 0-shot prompting style.

cd llm-mixed-q/experiments/emnlp/section_4.2/downstream

./group_bfp_6bit.sh

This example fine-tunes W4A4 BFP OPT-350M on SST-2.

cd llm-mixed-q/experiments/emnlp/section_4.3

./opt_350m_sst2.sh

This example runs a quantisation search for OPT-1.3B on SST-2.

cd llm-mixed-q/experiments/emnlp/section_4.4

# this bash script requires a string tag and a search config toml

./opt_1.3b_sst2.sh dummy-tag llm-mixed-q/experiments/emnlp/configs/search/opt_1.3b_sst2.toml

@article{zhang2023revisiting,

title={Revisiting Block-based Quantisation: What is Important for Sub-8-bit LLM Inference?},

author={Zhang, Cheng and Cheng, Jianyi and Shumailov, Ilia and Constantinides, George A and Zhao, Yiren},

journal={arXiv preprint arXiv:2310.05079},

year={2023}

}