Krishnaswamy Lab, Yale University

This is the official implementation of

Due to certain internal policies, we removed the codebase from public access. However, for the benefit of future researchers, we hereby provide the DSE/DSMI functions.

@inproceedings{DSE2023,

title={Assessing Neural Network Representations During Training Using Noise-Resilient Diffusion Spectral Entropy},

author={Liao, Danqi and Liu, Chen and Christensen, Ben and Tong, Alexander and Huguet, Guillaume and Wolf, Guy and Nickel, Maximilian and Adelstein, Ian and Krishnaswamy, Smita},

booktitle={ICML 2023 Workshop on Topology, Algebra and Geometry in Machine Learning (TAG-ML)},

year={2023},

}

@inproceedings{DSE2024,

title={Assessing neural network representations during training using noise-resilient diffusion spectral entropy},

author={Liao, Danqi and Liu, Chen and Christensen, Benjamin W and Tong, Alexander and Huguet, Guillaume and Wolf, Guy and Nickel, Maximilian and Adelstein, Ian and Krishnaswamy, Smita},

booktitle={2024 58th Annual Conference on Information Sciences and Systems (CISS)},

pages={1--6},

year={2024},

organization={IEEE}

}

Here we present the refactored and reorganized APIs for this project.

api > dse.py > diffusion_spectral_entropy

api > dsmi.py > diffusion_spectral_mutual_information

You can directly run the following lines for built-in unit tests.

python dse.py

python dsmi.py

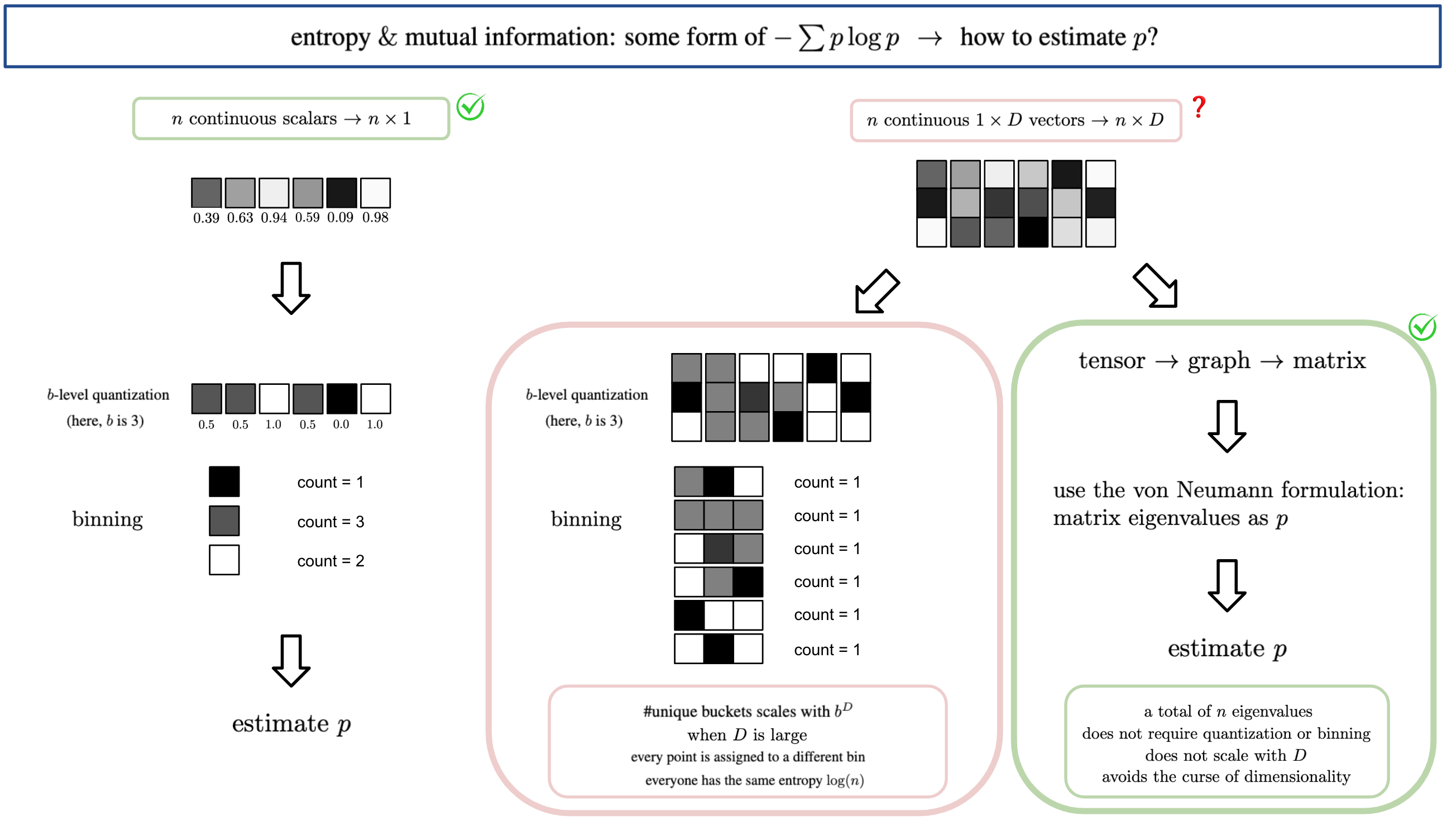

We proposed a framework to measure entropy and mutual information in high-dimensional data, which makes it applicable to modern neural networks.

We can measure, with respect to a given set of data samples, (1) the entropy of the neural representation at a specific layer and (2) the mutual information between a random variable (e.g., model input or output) and the neural representation at a specific layer.

Compared to the classic Shannon formulation using the binning method, e.g., as in the well-known paper "Deep Learning and the Information Bottleneck Principle", our proposed method is more robust and expressive.

Our method involves no binning and hence avoids the curse of dimensionality. Therefore, it works on modern deep neural networks (e.g., ResNet-50), not just on toy models with double-digit layer widths. See "Limitations of the Classic Shannon Entropy and Mutual Information" in our paper for details.

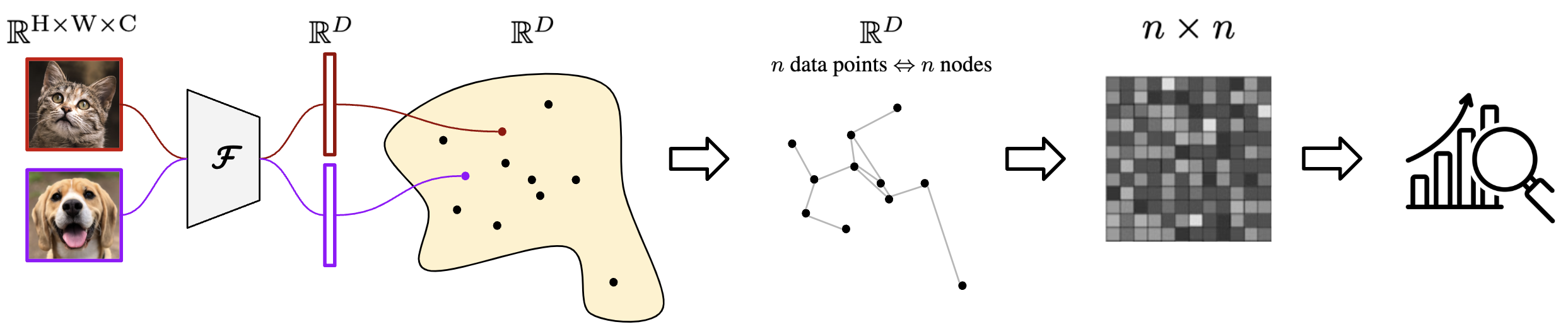

Conceptually, we build a data graph from the neural network representations of all data points in a dataset, and compute the diffusion matrix of the data graph. This matrix is a condensed representation of the diffusion geometry of the neural representation manifold. Our proposed Diffusion Spectral Entropy (DSE) and Diffusion Spectral Mutual Information (DSMI) can be computed from this diffusion matrix.
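The pipeline described above can be illustrated with a minimal NumPy sketch. This is not the code in `dse.py`: the function name `spectral_entropy_sketch`, the plain Gaussian kernel, the simple degree normalization, and the default values of `sigma` and `t` are all assumptions made for illustration, and the entropy is taken over the normalized, $t$-powered eigenvalue magnitudes of the diffusion matrix as we understand the paper's definition.

```python
import numpy as np


def spectral_entropy_sketch(X, sigma=1.0, t=1):
    """Illustrative DSE-style entropy of a point cloud X with shape (n, d).

    Hypothetical helper, not the official API. Assumes a Gaussian kernel
    and a simple degree normalization of the data graph.
    """
    # Pairwise squared Euclidean distances between all data points.
    sq = np.sum(X ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)

    # Gaussian affinities define the data graph.
    K = np.exp(-d2 / (2.0 * sigma ** 2))

    # The row-stochastic diffusion matrix P = D^{-1} K has the same
    # eigenvalues as the symmetric matrix D^{-1/2} K D^{-1/2}, which is
    # numerically safer to diagonalize.
    deg = K.sum(axis=1)
    M = K / np.sqrt(np.outer(deg, deg))

    # Raise eigenvalue magnitudes to the power t (t diffusion steps),
    # normalize them into a distribution, and take its Shannon entropy.
    lam = np.abs(np.linalg.eigvalsh(M)) ** t
    p = lam / lam.sum()
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))
```

In this sketch, a single tight blob yields an entropy close to zero, while several well-separated blobs yield an entropy close to the base-2 logarithm of the number of blobs, because each separated cluster contributes one eigenvalue near 1.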

One important caveat is that the proposed DSE and DSMI are "not conceptually the same as" their classic Shannon counterparts. They are defined differently, and while they maintain the gist of "entropy" and "mutual information" measures, they have their own unique properties. For example, DSE is more sensitive to the underlying dimension and structures (e.g., number of branches or clusters) than to the spread or noise in the data itself, because the noise is contracted to the manifold by raising the diffusion operator to the power of $t$.
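For the mutual information between a representation Z and discrete class labels Y, one natural construction is the entropy of Z minus the class-weighted entropy of Z restricted to each class. The sketch below assumes this conditional form; the names `_entropy_sketch` and `dsmi_sketch`, the Gaussian kernel, and the defaults for `sigma` and `t` are hypothetical and do not reflect the signatures in `dsmi.py`.

```python
import numpy as np


def _entropy_sketch(X, sigma=1.0, t=1):
    """Entropy of the normalized diffusion spectrum (illustrative only)."""
    sq = np.sum(X ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    K = np.exp(-d2 / (2.0 * sigma ** 2))
    deg = K.sum(axis=1)
    # Symmetric conjugate of the row-normalized diffusion matrix.
    M = K / np.sqrt(np.outer(deg, deg))
    lam = np.abs(np.linalg.eigvalsh(M)) ** t
    p = lam / lam.sum()
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))


def dsmi_sketch(Z, y, sigma=1.0, t=1):
    """Assumed form: entropy(Z) - sum_c p(Y=c) * entropy(Z | Y=c)."""
    y = np.asarray(y)
    total = _entropy_sketch(Z, sigma, t)
    conditional = 0.0
    for label in np.unique(y):
        mask = y == label
        # Weight each class-conditional entropy by the class frequency.
        conditional += mask.mean() * _entropy_sketch(Z[mask], sigma, t)
    return total - conditional


# Usage: four separated clusters, labeled either by cluster or arbitrarily.
rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [20.0, 0.0], [0.0, 20.0], [20.0, 20.0]])
Z = np.concatenate([c + rng.normal(0.0, 0.1, (15, 2)) for c in centers])
aligned = np.repeat(np.arange(4), 15)    # label equals cluster index
mismatched = np.tile(np.arange(4), 15)   # label unrelated to cluster
mi_aligned = dsmi_sketch(Z, aligned)
mi_mismatched = dsmi_sketch(Z, mismatched)
```

When the labels follow the cluster structure, each class is a single blob with low entropy, so the estimate is large; when the labels are unrelated to the clusters, each class still spans all clusters and the estimate stays near zero.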

In the theoretical results, we upper- and lower-bounded the proposed DSE and DSMI. More interestingly, we showed that if a data distribution originates as a single Gaussian blob but later evolves into

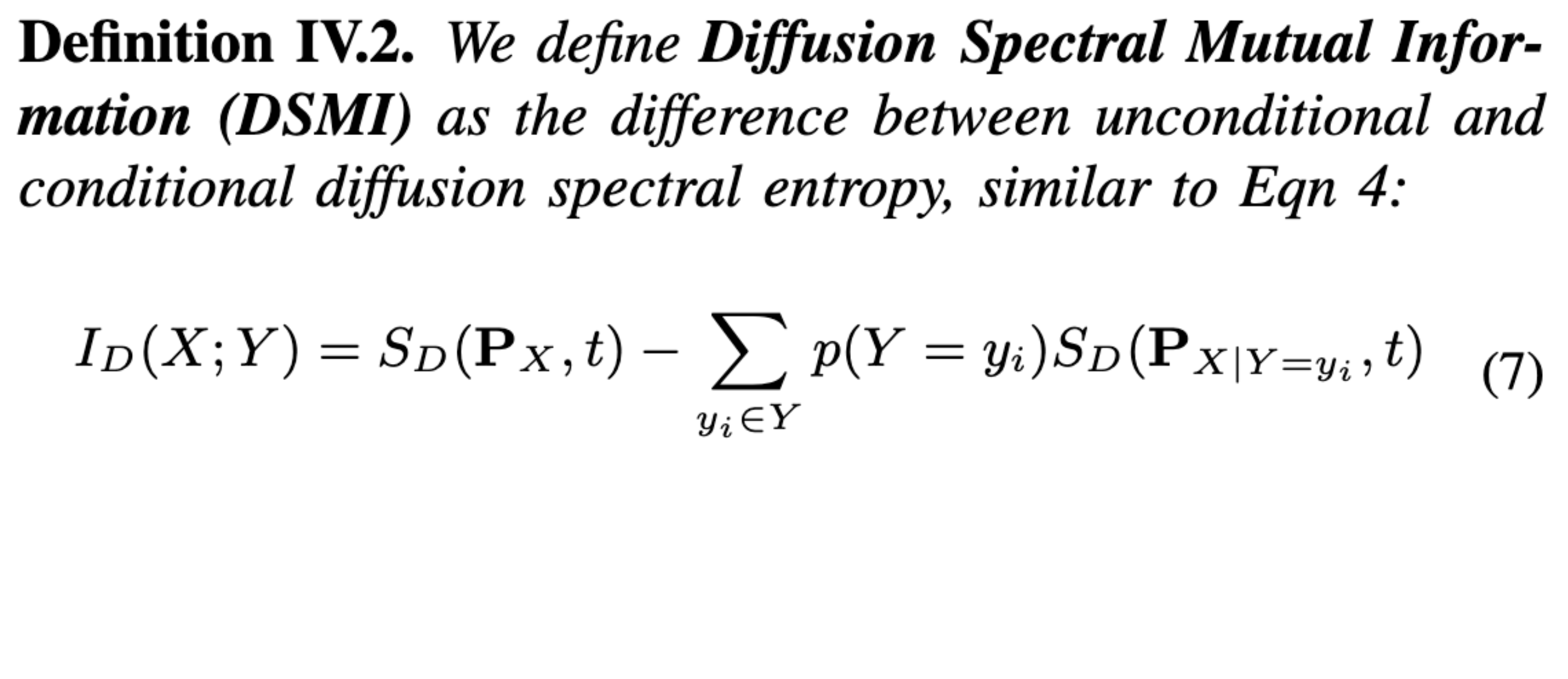

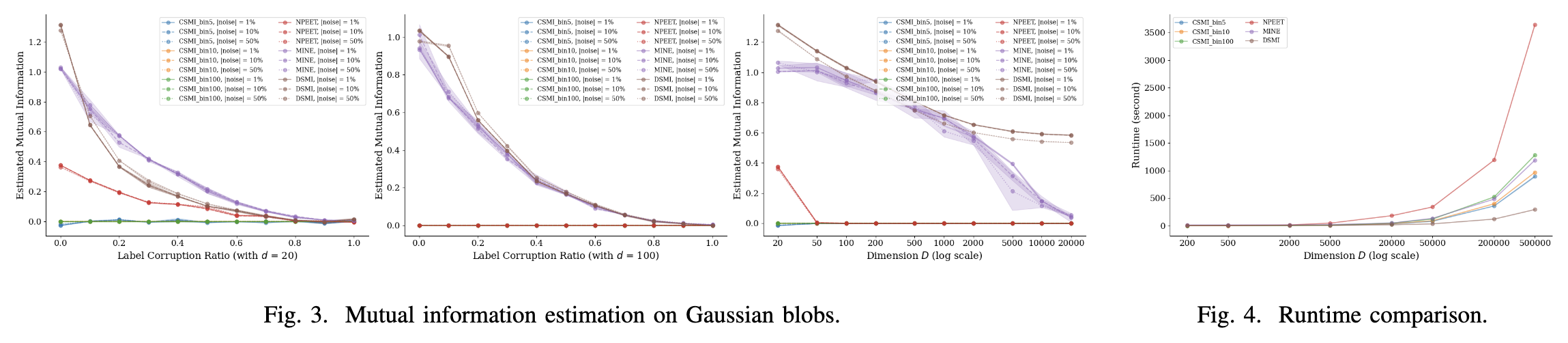

We first use toy experiments to showcase that DSE and DSMI "behave properly" as measures of entropy and mutual information. We also demonstrate that they are more robust to high dimensions than their classic counterparts.

Then, we look at how well DSE and DSMI behave at higher dimensions. In the figure below, we show how DSMI outperforms other mutual information estimators when the dimension is high. In addition, the runtime comparison shows that DSMI scales better with respect to dimension.

Finally, it's time to put them into practice! We use DSE and DSMI to visualize the training dynamics of classification networks with 6 backbones (3 ConvNets and 3 Transformers) under 3 training conditions and 3 random seeds. We evaluate the penultimate layer of the neural network, i.e., the second-to-last layer, which is widely believed to embed a rich representation of the data and is often used for visualization, linear-probing evaluation, etc.

DSE(Z) increases during training. This happens for both generalizable training and overfitting. The former case coincides with our theoretical finding that DSE(Z) should increase as the model learns to separate the data representation into clusters.

DSMI(Z; Y) increases during generalizable training but stays stagnant during overfitting. This is very much expected.

DSMI(Z; X) shows quite intriguing trends. On MNIST, it keeps increasing. On CIFAR-10 and STL-10, it peaks quickly and gradually decreases. Recall that IB [Tishby et al.] suggests that I(Z; X) should decrease, while [Saxe et al. ICLR'18] argues the opposite. We find that both could be correct, since the trend we observe is dataset-dependent. One possibility is that MNIST features are too easy to learn (and perhaps the models all overfit?); we leave this to future exploration.

One may ask, besides just peeking into the training dynamics of neural networks, how can we REALLY use DSE and DSMI? Here come the utility studies.

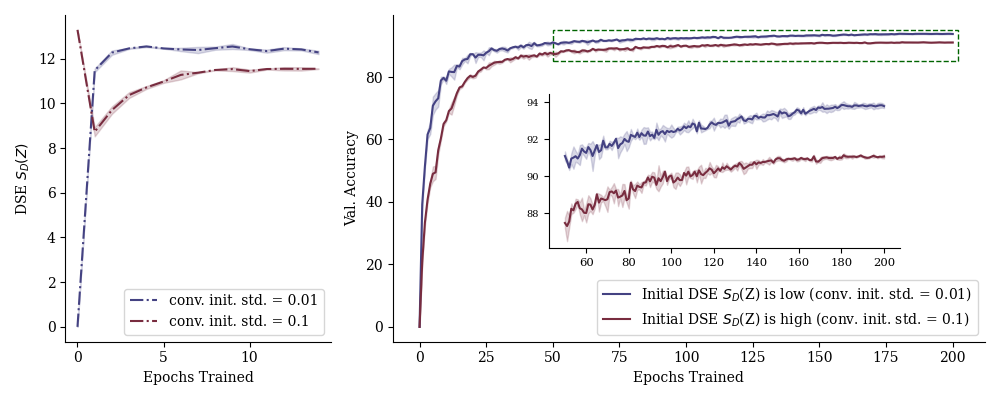

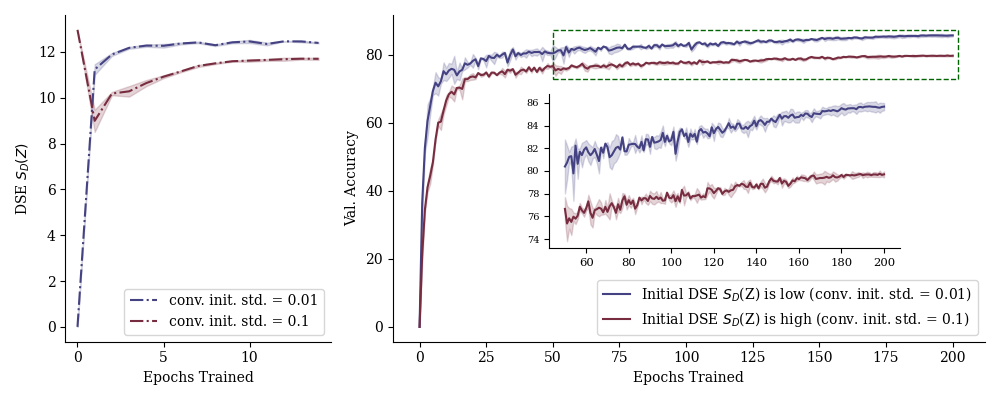

We sought to assess the effects of network initialization in terms of DSE. We were motivated by two observations: (1) the initial DSEs for different models are not always the same despite using the same method for random initialization; (2) if DSE starts low, it grows monotonically; if DSE starts high, it first decreases and then increases.

We found that if we initialize the convolutional layers with weights

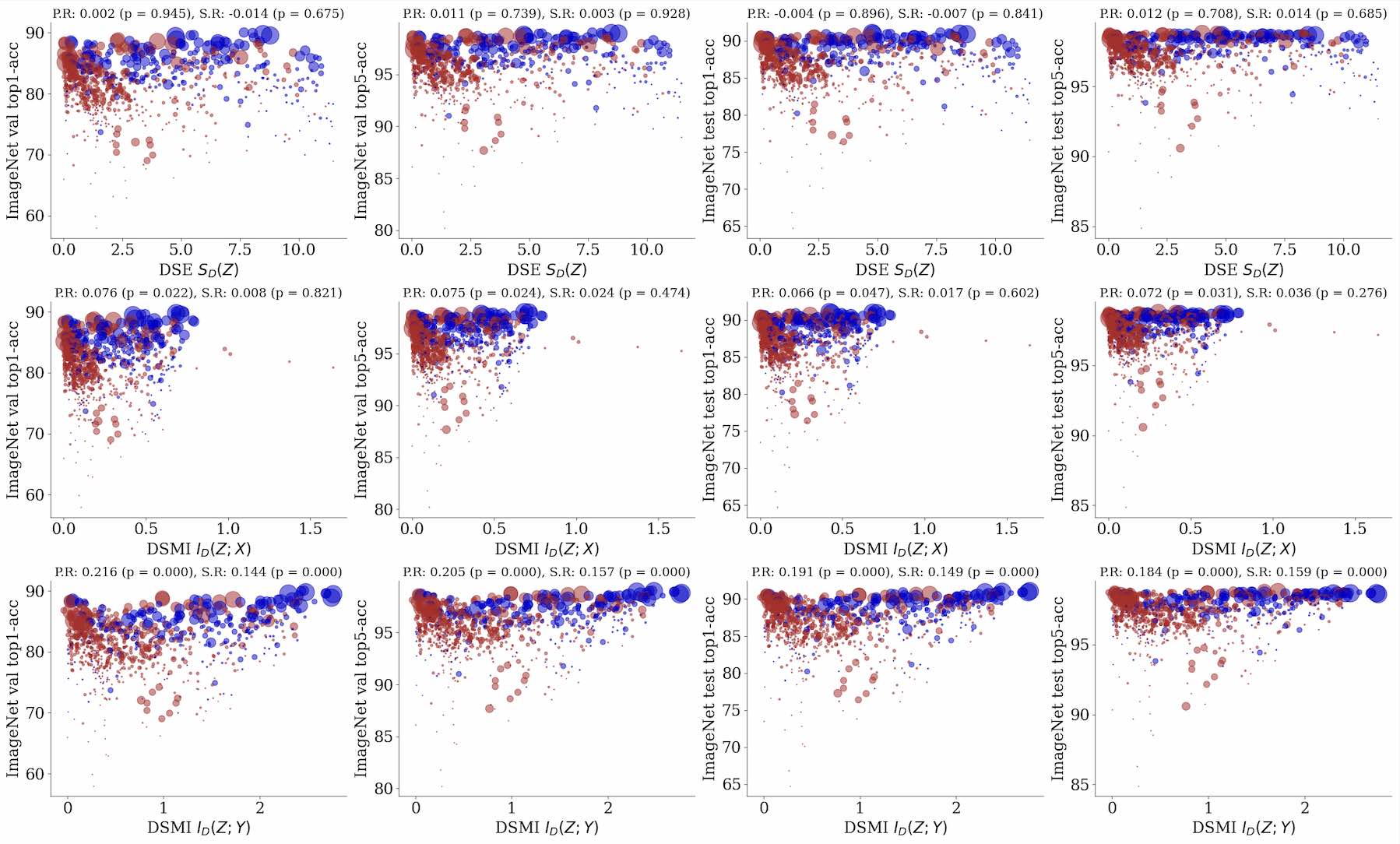

So far, we have monitored DSE and DSMI along the training process of the same model. Now we show how DSMI correlates with downstream classification accuracy across many different pre-trained models. The following result demonstrates the potential of using DSMI to pre-screen potentially competent models for your specialized dataset.

Removed due to internal policies.

We developed the codebase in a miniconda environment and tested it on Python 3.9.13 + PyTorch 1.12.1. We created the conda environment as follows. Some packages may no longer be required.

conda create --name dse pytorch==1.12.1 torchvision==0.13.1 torchaudio==0.12.1 cudatoolkit=11.3 -c pytorch

conda activate dse

conda install -c anaconda scikit-image pillow matplotlib seaborn tqdm

python -m pip install -U giotto-tda

python -m pip install POT torch-optimizer

python -m pip install tinyimagenet

python -m pip install natsort

python -m pip install phate

python -m pip install DiffusionEMD

python -m pip install magic-impute

python -m pip install timm

python -m pip install pytorch-lightning
