By the end of this tutorial you should be able to:

- Write a Dockerfile to build a Flask application

- Run the Flask app using Docker

- Build a Postgres Database in Google Cloud SQL

- Connect to a Google Cloud SQL Database from Flask

- Deploy a Dockerized Flask application using Google Cloud Run

We can set up the application to use environment variables to set the connection to the database.

We can create the following main.py file. Our container will start our Python app from main.py, so we can use our own server software.

main.py

from app import create_app

import os

app = create_app()

if __name__ == "__main__":

    print('starting app!')

    # Read the port from the PORT environment variable (assumed default: 8080)
    app.run(debug=True, host="0.0.0.0", port=int(os.environ.get("PORT", 8080)))

We can also create a file to set up the connection to the database using TCP.

app/db_setup.py

import os

import sqlalchemy


def get_tcp_connection_string():
    db_user = os.environ["DB_USER"]
    db_pass = os.environ["DB_PASS"]
    db_name = os.environ["DB_NAME"]
    db_host = os.environ["DB_HOST"]  # expected as "<hostname>:<port>"

    # Extract host and port from db_host
    host_args = db_host.split(":")
    db_hostname, db_port = host_args[0], int(host_args[1])

    # Equivalent URL:
    # postgresql+psycopg2://<db_user>:<db_pass>@<db_hostname>:<db_port>/<db_name>
    # Note: on SQLAlchemy 1.4 and later, build this with sqlalchemy.engine.url.URL.create(...) instead.
    return sqlalchemy.engine.url.URL(
        drivername="postgresql+psycopg2",
        username=db_user,  # e.g. "my-database-user"
        password=db_pass,  # e.g. "my-database-password"
        host=db_hostname,  # e.g. "127.0.0.1"
        port=db_port,  # e.g. 5432
        database=db_name,  # e.g. "my-database-name"
    )

Then we can use this function in app/__init__.py.

app/__init__.py

from flask import Flask

from flask_sqlalchemy import SQLAlchemy

from flask_migrate import Migrate

import os

from dotenv import load_dotenv

from app.db_setup import get_tcp_connection_string

db = SQLAlchemy()

migrate = Migrate()

load_dotenv()

def create_app(test_config=None):
    app = Flask(__name__)

    app.config["SQLALCHEMY_TRACK_MODIFICATIONS"] = False

    # Get connection to Postgres
    app.config["SQLALCHEMY_DATABASE_URI"] = get_tcp_connection_string()

    # Import models here for Alembic setup
    from app.models.task import Task
    from app.models.goal import Goal

    db.init_app(app)
    migrate.init_app(app, db)

    # Register Blueprints here
    # ...

    return app

In this exercise we modified the app to:

- Start the application in main.py so that our container can later start the server without the flask command.

- Set the database configuration to pull data from environment variables.

  - This way we can have the app use a different connection for different circumstances (production, development, local, on the cloud, etc).
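The DB_HOST convention used in app/db_setup.py packs the host and port into a single value. A minimal sketch of that parsing, with a fallback to the standard Postgres port when no port is given, might look like the following. The helper name split_host_port and the fallback behaviour are assumptions for illustration; the tutorial's own function expects the port to always be present.

```python
DEFAULT_POSTGRES_PORT = 5432  # standard Postgres port


def split_host_port(db_host, default_port=DEFAULT_POSTGRES_PORT):
    """Split "host:port" into (host, port). Hypothetical helper, not part of the app."""
    host, sep, port = db_host.partition(":")
    if not host:
        raise ValueError(f"DB_HOST has no hostname: {db_host!r}")
    # partition() leaves sep empty when the value contains no ":"
    return host, (int(port) if sep and port else default_port)


if __name__ == "__main__":
    print(split_host_port("host.docker.internal:5432"))
    print(split_host_port("127.0.0.1"))
```

Falling back to a default keeps a value such as "127.0.0.1" from raising an IndexError, at the cost of hiding a forgotten port.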

Go to Docker.com and download and install Docker.

To build the container you need a Dockerfile. The Dockerfile will tell Docker how to install all the dependencies for the application and the default way to run the application.

This Dockerfile starts from a pre-made image (Python slim buster in this example) and adds any needed files/configurations.

Dockerfile

# Starter image

FROM python:3.9-slim-buster

# Install OS Dependencies

RUN apt-get update && apt-get install -y build-essential libpq-dev

ENV PYTHONUNBUFFERED True

RUN mkdir /app

ENV APP_HOME /app

WORKDIR $APP_HOME

# Install Python packages from requirements.txt

COPY ./requirements.txt .

RUN pip install -r requirements.txt

RUN pip install gunicorn

COPY . .

EXPOSE 8080

RUN chmod +x ./scripts/*

# Startup command

CMD ["./scripts/entrypoint.sh"]

You can then build a docker image with:

docker build . -t <IMAGE_NAME>

The . indicates the Dockerfile can be found in the current directory. The -t indicates a tag or name you can apply to the image. You can substitute <IMAGE_NAME> with a name you want for the image.

Create the following script file to run the app using gunicorn. Gunicorn (Green Unicorn) is a Python WSGI HTTP server for Unix (which is what the Docker image will run in).

# Make a scripts folder

mkdir scripts

# Create a script file

touch scripts/entrypoint.sh

# Make the script executable

chmod +x scripts/entrypoint.sh

scripts/entrypoint.sh

#!/bin/bash

/usr/local/bin/gunicorn --bind "0.0.0.0:$PORT" --workers 1 --threads 8 --timeout 0 main:app

This script has gunicorn serve the app on all network interfaces (0.0.0.0), using the environment variable PORT for the port number. You can adjust the number of workers and threads to give the app more or less capacity. A timeout of 0 disables gunicorn's worker timeout, so requests never time out. The main:app argument tells gunicorn to load the app object from main.py.
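Gunicorn can also read these options from a Python config file instead of command-line flags. The sketch below mirrors the flags in the entrypoint script; the file name gunicorn.conf.py, the helper gunicorn_settings, and the 8080 fallback are assumptions for illustration and are not part of the original project.

```python
import os


def gunicorn_settings(environ=None):
    """Return the same options the entrypoint script passes on the command line."""
    environ = os.environ if environ is None else environ
    port = environ.get("PORT", "8080")  # assumed fallback; the script requires PORT
    return {
        "bind": f"0.0.0.0:{port}",  # listen on all interfaces inside the container
        "workers": 1,
        "threads": 8,
        "timeout": 0,  # 0 disables gunicorn's worker timeout
    }


# gunicorn picks up module-level names such as bind and workers from a config file
_settings = gunicorn_settings()
bind = _settings["bind"]
workers = _settings["workers"]
threads = _settings["threads"]
timeout = _settings["timeout"]
```

With a file like this, the startup command would shrink to something like gunicorn -c gunicorn.conf.py main:app.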

We can create a script to build the docker image so we don't have to remember the command.

# Create a script file

touch scripts/docker_build.sh

# Make the script executable

chmod +x scripts/docker_build.sh

The content of scripts/docker_build.sh can be the following.

scripts/docker_build.sh

#!/bin/bash

docker build . -t <IMAGE_NAME>

You can then run the script and build the image with: scripts/docker_build.sh

You can run a container from an image by its tag with the docker run command. This will run the app.

docker run -it -p 8080:8080 <IMAGE_NAME>

Just like before, we can create a script to run the container. The script passes environment variables into the container to tell our app how to connect to our database.

scripts/docker_run.sh

#!/bin/bash

docker run --env PORT=8080 \
  --env DB_USER=<DATABASE_USER> \
  --env DB_PASS=<DATABASE_PASSWORD> \
  --env DB_NAME=<DATABASE_NAME> \
  --env DB_HOST="host.docker.internal:5432" \
  -it -p 8080:8080 <IMAGE_NAME>

- DB_USER: The Database User

- DB_PASS: The Database Password

- DB_NAME: The Name of the Database

- DB_HOST: The computer hosting the database and its port, written as host:port (host.docker.internal means the computer running the container)

The -p 8080:8080 option publishes the container's port 8080 on your computer's port 8080, so a browser request to port 8080 is forwarded to the container.

So my script file to run the app may look like:

scripts/docker_run.sh

#!/bin/bash

docker run --env PORT=8080 \
  --env DB_USER=postgres \
  --env DB_PASS="" \
  --env DB_NAME=tasklist_db \
  --env DB_HOST="host.docker.internal:5432" \
  -it -p 8080:8080 task-list-api

We can make the script executable with chmod +x scripts/docker_run.sh

After building the image you can run the app with scripts/docker_run.sh

(venv) ➜ task-list-api git:(gcloud) ✗ ./scripts/docker_run.sh

[2021-08-11 00:14:39 +0000] [8] [INFO] Starting gunicorn 20.1.0

[2021-08-11 00:14:39 +0000] [8] [INFO] Listening at: http://0.0.0.0:8080 (8)

[2021-08-11 00:14:39 +0000] [8] [INFO] Using worker: gthread

[2021-08-11 00:14:39 +0000] [9] [INFO] Booting worker with pid: 9

You can run migrations on your container by adding the command to the end of docker run.

scripts/docker_db_init.sh

#!/bin/bash

docker run --env PORT=8080 \

--env DB_USER=postgres \

--env DB_PASS="" \

--env DB_NAME=tasklist_db \

--env DB_HOST="host.docker.internal:5432" \

-it -p 8080:8080 task-list-api flask db init

scripts/docker_db_migrate.sh

#!/bin/bash

docker run --env PORT=8080 \

--env DB_USER=postgres \

--env DB_PASS="" \

--env DB_NAME=tasklist_db \

--env DB_HOST="host.docker.internal:5432" \

-it -p 8080:8080 task-list-api flask db migrate

scripts/docker_db_upgrade.sh

#!/bin/bash

docker run --env PORT=8080 \

--env DB_USER=postgres \

--env DB_PASS="" \

--env DB_NAME=tasklist_db \

--env DB_HOST="host.docker.internal:5432" \

-it -p 8080:8080 task-list-api flask db upgrade

Run the app and verify that it works.
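The run and migration scripts above differ only in the command added at the end of docker run. As a rough sketch, the shared part could be generated from one place. The helper docker_run_args is hypothetical and only builds the argument list; it does not run Docker itself.

```python
import shlex


def docker_run_args(image, env, command=(), port=8080):
    """Build the argument list for docker run. Hypothetical helper for illustration."""
    args = ["docker", "run"]
    for name, value in env.items():
        args += ["--env", f"{name}={value}"]
    args += ["-it", "-p", f"{port}:{port}", image]
    args += list(command)  # e.g. ["flask", "db", "upgrade"]
    return args


if __name__ == "__main__":
    env = {
        "PORT": "8080",
        "DB_USER": "postgres",
        "DB_PASS": "",
        "DB_NAME": "tasklist_db",
        "DB_HOST": "host.docker.internal:5432",
    }
    commands = ([], ["flask", "db", "init"], ["flask", "db", "migrate"], ["flask", "db", "upgrade"])
    for command in commands:
        # shlex.join quotes each argument so the line can be pasted into a shell
        print(shlex.join(docker_run_args("task-list-api", env, command)))
```

Passing the resulting list to subprocess.run would execute the command; printing it first makes it easy to compare against the scripts.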

Google Cloud is Google's answer to AWS. It's a place where you can run all sorts of things on Google datacenters. You can create an account at https://cloud.google.com . New accounts get $300 free credit for the 1st year (yes you can create new accounts later). You will have to give Google a payment method like a credit card.

We are going to deploy our dockerized Flask app to the cloud using Google Cloud Run and host the database on Google Cloud SQL.

Terms:

- Google Cloud Console - The website where you interact with Google Cloud. You can do pretty much anything with the web interface in Google Cloud.

- Google Cloud CLI - The command line interface (gcloud) you can use to interact with Google Cloud. You can do practically everything with Google Cloud via the terminal, which makes it easier to automate tasks with a script file.

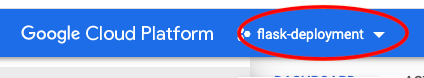

In the Google Cloud Console, create a new project.

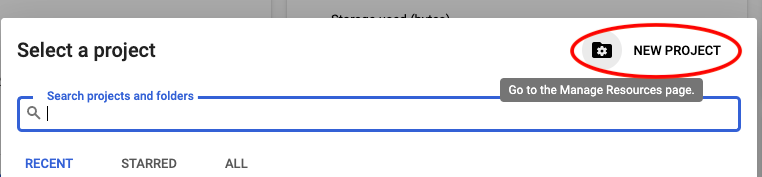

Then give the project a name & click on create. You can ignore organization.

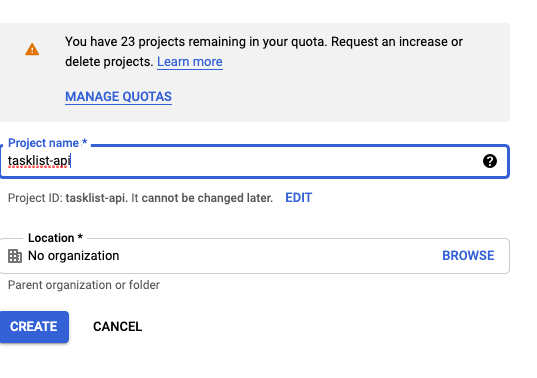

Write down the project name and ID, as you will need them later. You can always find them on the dashboard.

The Google Cloud command-line tools can be installed with Homebrew.

brew install --cask google-cloud-sdk

Then do the following for your shell.

For bash users:

source "$(brew --prefix)/Caskroom/google-cloud-sdk/latest/google-cloud-sdk/path.bash.inc"

source "$(brew --prefix)/Caskroom/google-cloud-sdk/latest/google-cloud-sdk/completion.bash.inc"

For zsh users:

source "$(brew --prefix)/Caskroom/google-cloud-sdk/latest/google-cloud-sdk/path.zsh.inc"

source "$(brew --prefix)/Caskroom/google-cloud-sdk/latest/google-cloud-sdk/completion.zsh.inc"

Then you can set the current gcloud project with the following, substituting <PROJECT_ID> with your current project ID.

gcloud config set project <PROJECT_ID>

You should get the confirmation Updated property [core/project].

From this point on, nothing below is free. These steps will consume Google Cloud credits, or real money if you do not have credits. When you are finished with the deployed instance, shut down your project and delete it to stop spending money.

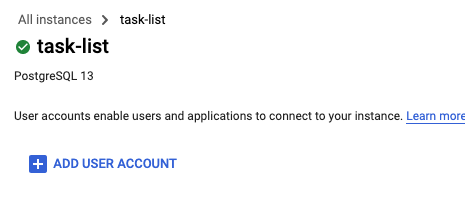

Next, go to SQL in the Google Cloud Console and create a Postgres instance.

You will need to enable the API first. This will take a few minutes.

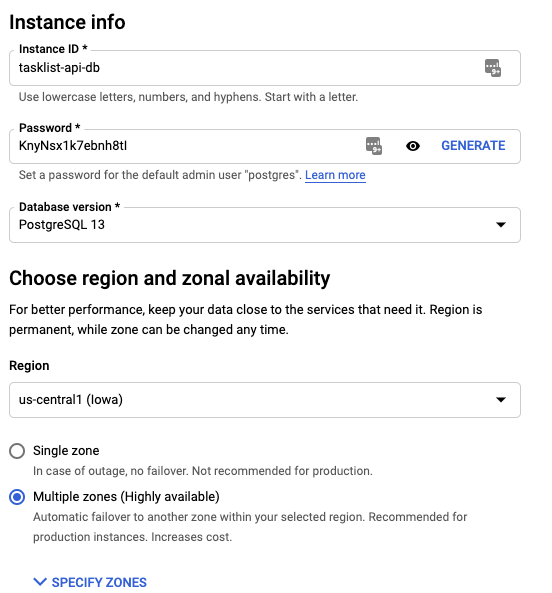

Give the Instance a name (name of the DB server) and pick or generate a password (write down the password).

Also pick an appropriate region. All your servers will be deployed to that region. Write it down.

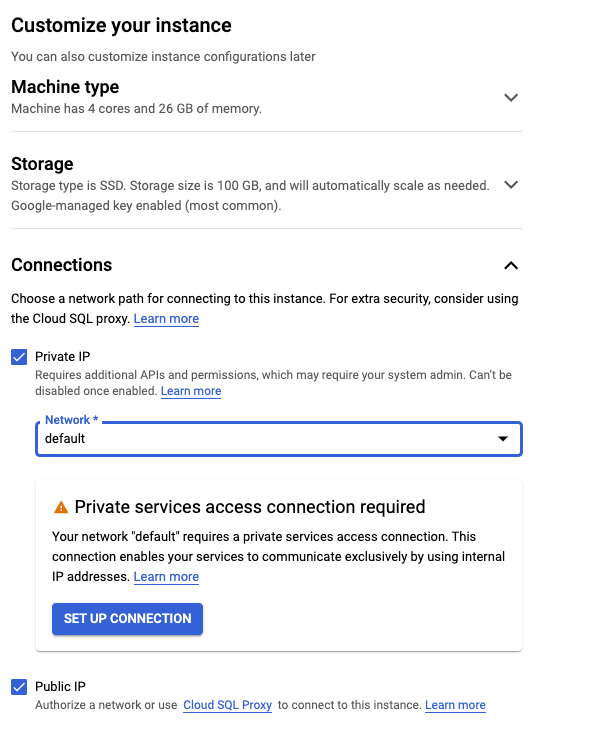

In the Customize your instance section, give the instance both a private and a public IP address.

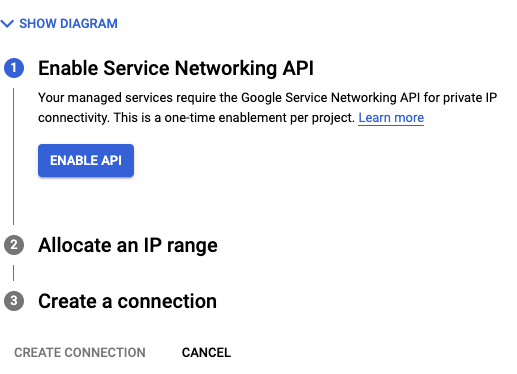

You will need to set up a private services access connection on the default network.

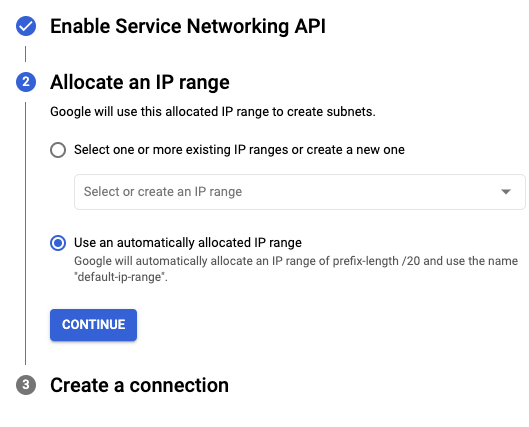

Automatically allocate an IP Range

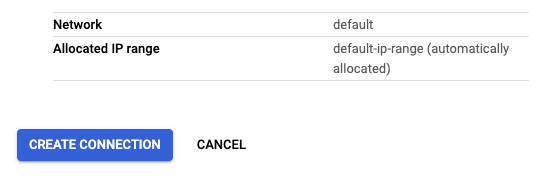

Create the networking service

Then create the instance and go get a coffee. This will take a while ☕️.

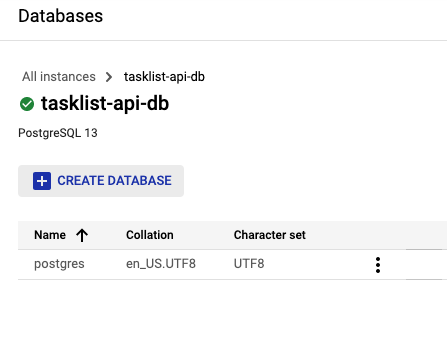

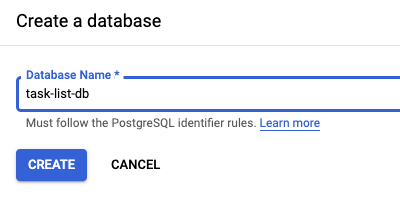

Once the Postgres instance is created, go to Databases and create a database with a name of your choosing. I chose task-list-db.

Click on Databases.

Then create the database.

And name it.

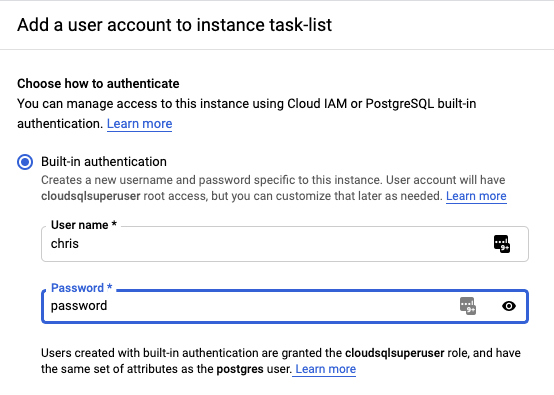

Then go to Users and create a user account. Pick a strong password, i.e. not "password".

Take note of the following:

- On the DB Overview

  - The Connection Name

  - The public IP

  - The private IP

- The Database name you want to use

- The Username you chose

- The Password you chose

These will be very important for... connecting to the database.

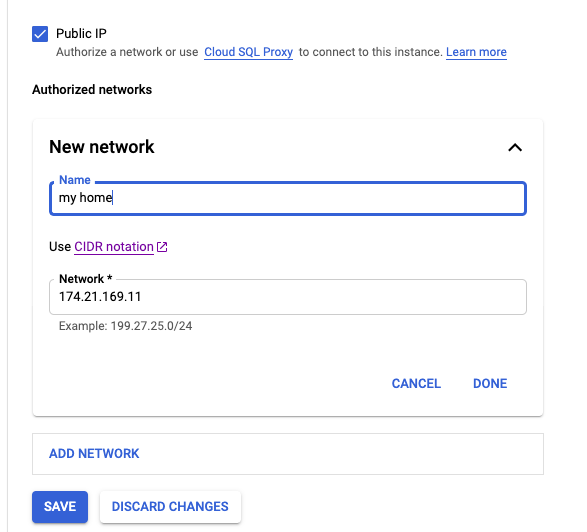

To connect to Cloud SQL from your local computer, you can either use the instance's public IP or use the Cloud SQL Proxy.

In the Google Cloud Console, go to the Connections tab and, under Authorized networks, add your public IP address with any name you want. Click the link for your IP Address.
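Before pointing the app at the public IP, it can help to confirm that the database port is reachable from your machine at all. The following is a small sketch using only the standard library; the function name can_connect is an assumption, and it only checks that a TCP connection opens, not that the username and password are accepted.

```python
import socket


def can_connect(host, port=5432, timeout=3.0):
    """Return True if a TCP connection to host:port opens within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable hostnames
        return False


if __name__ == "__main__":
    # Replace with the public IP noted from the DB Overview page
    print(can_connect("127.0.0.1", 5432))
```

If this returns False for the public IP, the authorized networks entry is a likely place to look first.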

We can run our Docker container against Cloud SQL by adjusting the scripts/docker_run.sh file.

scripts/docker_run.sh

#!/bin/bash

docker run --env PORT=8080 \

--env DB_USER=<DB_USER> \

--env DB_PASS=<DB_PASSWORD> \

--env DB_NAME=<DB_NAME> \

--env DB_HOST="<PUBLIC IP>:5432" \

-it -p 8080:8080 task-list-api

Replace the <> fields with the values for the deployed server in the docker_db_init.sh, docker_db_migrate.sh, docker_db_upgrade.sh, and docker_run.sh files.

Then run:

# if it says the migrations folder exists

# that's no problem

scripts/docker_db_init.sh

scripts/docker_db_migrate.sh

scripts/docker_db_upgrade.sh

Then you should be able to run the app locally and use the Google Cloud SQL Database!

scripts/docker_run.sh

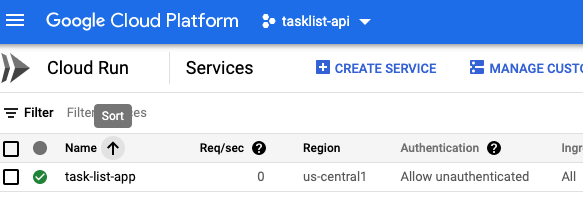

Google Cloud Run is a tool to take a container image (like one produced by Docker) and deploy it into production quickly. It's a good way to deploy apps.

We can build our Docker image on the Google Cloud platform with the command:

gcloud builds submit --tag gcr.io/<PROJECT_ID>/<IMAGE_NAME> . \
  --project <PROJECT_ID>

This command will take the current directory, build an image from it, and save the image in Google's container registry.

Run the command and you will be prompted to turn on the API.

API [cloudbuild.googleapis.com] not enabled on project [989904320255].

Would you like to enable and retry (this will take a few minutes)?

(y/N)?

Press y and watch it build the container.

Google Cloud uses Docker to build your image on its own servers and saves it in the container registry for later use. If you get an error, verify that you filled in the PROJECT_ID and IMAGE_NAME fields.
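Since a leftover placeholder is an easy mistake here, one option is to assemble the image reference in a small script that refuses unfilled fields. This is only a sketch: image_reference and build_command are hypothetical helpers, and the argument list simply mirrors the gcloud builds submit command shown above.

```python
import re

_PLACEHOLDER = re.compile(r"<[^<>]*>")  # matches unfilled fields such as <PROJECT_ID>


def image_reference(project_id, image_name):
    """Return gcr.io/project/image, rejecting values that still hold a placeholder."""
    for label, value in (("PROJECT_ID", project_id), ("IMAGE_NAME", image_name)):
        if not value or _PLACEHOLDER.search(value):
            raise ValueError(f"{label} is not filled in: {value!r}")
    return f"gcr.io/{project_id}/{image_name}"


def build_command(project_id, image_name):
    """Argument list matching the gcloud builds submit command in the text."""
    tag = image_reference(project_id, image_name)
    return ["gcloud", "builds", "submit", "--tag", tag, ".", "--project", project_id]


if __name__ == "__main__":
    print(" ".join(build_command("flask-deployment-322202", "videostore-api")))
```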

We can then take the image and deploy it with the command:

gcloud run deploy <APP_NAME> \
  --image gcr.io/<PROJECT_ID>/<IMAGE_NAME> \
  --platform managed \
  --region <REGION> \
  --allow-unauthenticated \
  --project <PROJECT_ID>

It will prompt you to enable the Cloud Run API. Press y and continue.

API [run.googleapis.com] not enabled on project [989904320255]. Would

you like to enable and retry (this will take a few minutes)? (y/N)?

If you go to the given URL you will get Service Unavailable.

Oh No! What went wrong!

We need to do a couple of things:

- Enable network connectivity

- Add environment variables for

  - DB_USER

  - DB_PASS

  - DB_NAME

  - DB_HOST (host and port together, e.g. <PRIVATE IP>:5432)
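A missing variable surfaces as a KeyError deep inside app startup, which is hard to spot in the logs. As a sketch, a check like the one below could run early in create_app to name exactly what is missing. The helper missing_env_vars and the REQUIRED_VARS tuple are assumptions based on the variables app/db_setup.py reads.

```python
import os

# Variables read by get_tcp_connection_string in app/db_setup.py
REQUIRED_VARS = ("DB_USER", "DB_PASS", "DB_NAME", "DB_HOST")


def missing_env_vars(environ=None, required=REQUIRED_VARS):
    """Return the names of required variables that are absent from environ."""
    environ = os.environ if environ is None else environ
    # Check presence rather than truthiness: an empty DB_PASS is still "set"
    return [name for name in required if name not in environ]


if __name__ == "__main__":
    missing = missing_env_vars()
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("All database environment variables are set.")
```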

To add network connectivity, find the app in Cloud Run. Then click on Edit and Deploy a New Revision.

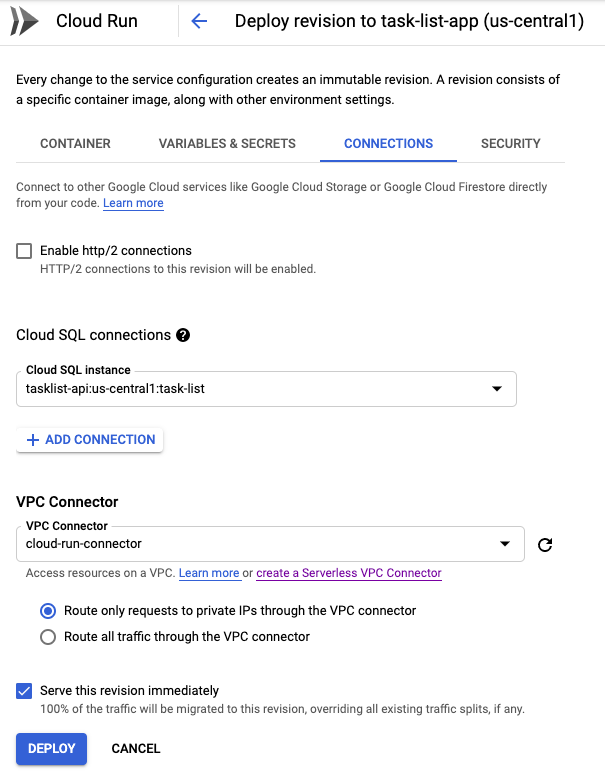

Go to connections and add a Cloud SQL Connector. You will need to ENABLE CLOUD SQL ADMIN API. Do not check the "Enable http/2 connections" box.

Then click on create a Serverless VPC Connector

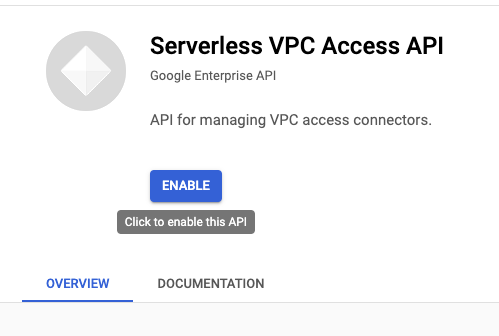

Then enable the API.

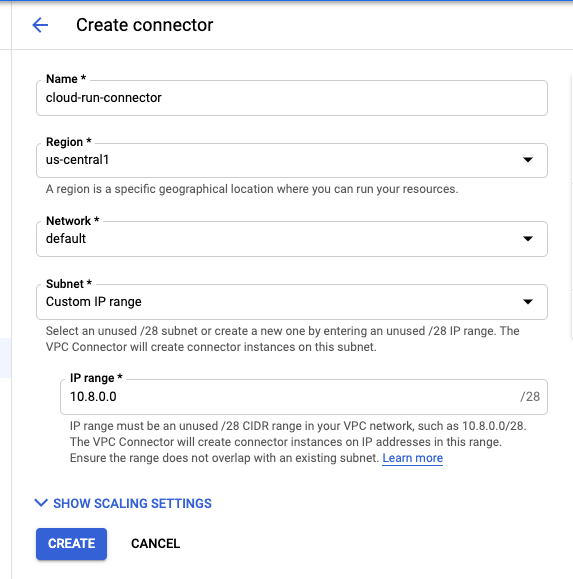

Create a connector. You can select your region, the default network, and a 10.8.0.0 custom IP range.

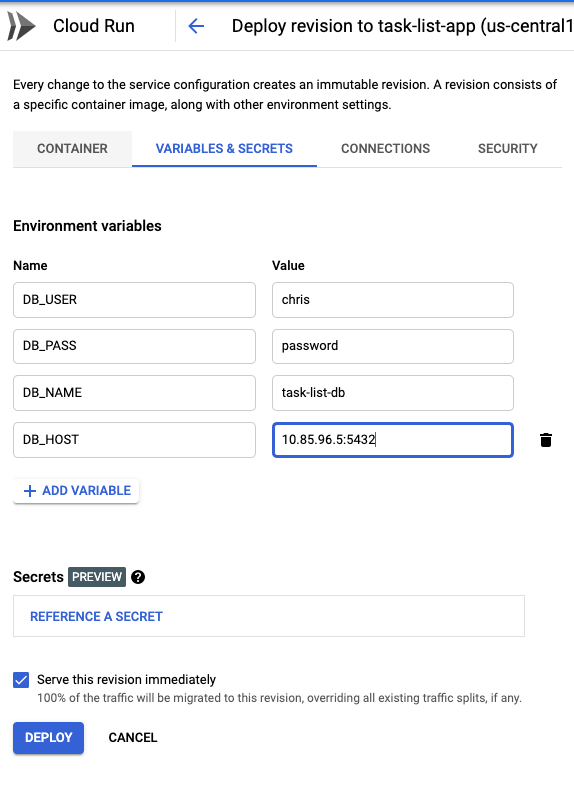

Then go to VARIABLES & SECRETS and add the environment variables and values from your notes earlier. Make sure to use your Cloud SQL instance's private IP address.

Then click on Deploy and wait for it to finish deploying.

build.sh

#!/bin/bash

gcloud builds submit --tag gcr.io/flask-deployment-322202/videostore-api . --project flask-deployment-322202

deploy.sh

#!/bin/bash

gcloud run deploy videostore-api \

--image gcr.io/flask-deployment-322202/videostore-api \

--platform managed \

--region us-central1 \

--allow-unauthenticated \

--project flask-deployment-322202

You should now be deployed to Google Cloud!