This repository contains the code for Global Multi-temporal Cropland Mapping, developed by Penghua Liu.

Pixel-wise cropland extraction is implemented with deep semantic segmentation networks, and the pre-trained (or fine-tuned) model is deployed to Google Drive. We then request GPU resources in Google Colab (Colab) and create sessions that run inference on satellite images using the deployed model. The satellite images are exported from Google Earth Engine (GEE).

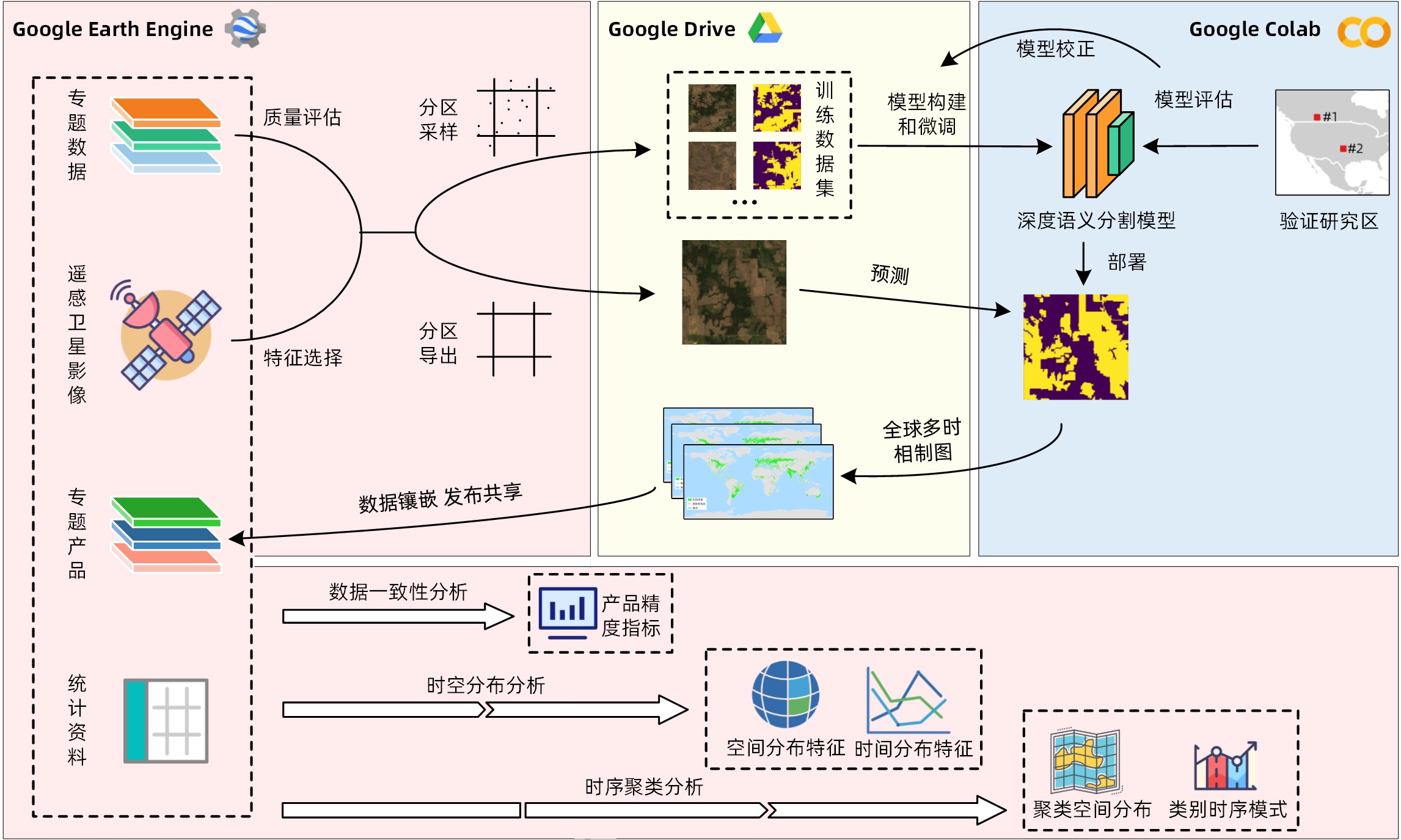

The overall workflow is presented in the following figure.

As shown in the figure above, global cropland mapping has four steps: training data preparation, model training and calibration, model deployment and inference, and evaluation.

Training datasets for semantic segmentation consist of image patches and their pixel-wise, densely labeled images. That is, for an input image with a shape of

To export training samples from GEE, you can run my shared script in GEE here, or copy the code in S1-1 ExportTrainingSamples.js and run it in the GEE code editor.

Note: The exported samples are stored as '*.tfrecord' files to save storage. Each TFRecord file contains all the sample points collected in one grid cell (TFRecord files are limited in size, so a single file cannot hold too much data). To collect as many samples as possible, reduce the grid size.

TensorFlow simplifies data loading by building sequences of TFRecord files. However, extracting the samples for each batch from a TFRecord sequence is very inefficient, so we extract the samples from each grid and convert the TFRecords to '*.h5' files.

You can download the TFRecord samples to your local machine and convert them to '*.h5' files.

S1-2 Preprocess.ipynb provides an interactive notebook that downloads TFRecord files from Google Cloud Storage (GCS) (only needed if you exported the samples to GCS) and converts them to H5 files. A data description table can be obtained by running the Statistic the feature properties to a table cell.

You have to modify the settings to fit your tasks.

Note: Converting TFRecords to H5 files and calculating the feature properties can take some time.

Besides, the provided notebook includes some functions for exploratory data analysis. You can view the distributions of the feature bands and labels, as well as their correlations.
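The kind of exploratory summary described above can be sketched with NumPy alone. This is a minimal illustration, not the notebook's code: the array layout `(N, H, W, C)`, the function name `describe_bands`, and the synthetic data are all assumptions made for the example.

```python
import numpy as np


def describe_bands(images, labels):
    """Summarise each feature band and its correlation with the label.

    Assumes `images` has shape (N, H, W, C) and `labels` has shape
    (N, H, W) with 1 for cropland and 0 otherwise (hypothetical layout).
    """
    n_bands = images.shape[-1]
    flat_x = images.reshape(-1, n_bands).astype(np.float64)
    flat_y = labels.reshape(-1).astype(np.float64)

    rows = []
    for b in range(n_bands):
        band = flat_x[:, b]
        # Pearson correlation between this band and the binary label.
        corr = np.corrcoef(band, flat_y)[0, 1]
        rows.append({
            "band": b,
            "min": band.min(),
            "max": band.max(),
            "mean": band.mean(),
            "std": band.std(),
            "label_corr": corr,
        })
    return rows


# Synthetic stand-in for real samples: 8 patches of 16x16 pixels, 3 bands.
rng = np.random.default_rng(0)
labels = (rng.random((8, 16, 16)) > 0.7).astype(np.uint8)
images = rng.random((8, 16, 16, 3))
images[..., 0] += labels  # make band 0 informative on purpose

table = describe_bands(images, labels)
for row in table:
    print(row)
```

With real data, `images` and `labels` would be read from the converted H5 files instead of being generated, and the rows could be written out as a table.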

Model training and calibration is the core task.

Before training, you have to split the data into a training set and a validation set. Although we treat the positive and negative classes equally, the proportion of positive and negative pixels varies greatly from sample to sample, so the classes are extremely unbalanced. Thus, we have to oversample the positive samples.

Specifically, we reclassify each label image into a class according to its proportion of positive pixels. For example, if a label image has a positive-pixel ratio of 0.23, we reclassify this label image as class 2. The criteria for reclassification can be formulated as

After reclassification, we oversample the samples in the low-frequency classes and create text files that record the train/val filenames.
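The reclassify-then-oversample idea can be sketched in plain Python. The exact reclassification criteria are not reproduced here, so this sketch assumes ten equal-width bins (class = floor of 10 × ratio), which agrees with the 0.23 → 2 example but is only a guess. The function names, sample names, 80/20 split, and output file names are likewise hypothetical, and splitting before oversampling is a choice made in this sketch so that duplicated samples stay in the training list.

```python
import random
import tempfile
from collections import defaultdict
from pathlib import Path


def reclass(positive_ratio, n_bins=10):
    """Map a positive-pixel ratio in [0, 1] to an integer class.

    Assumption: equal-width bins, so a ratio of 0.23 falls in class 2.
    """
    return min(int(positive_ratio * n_bins), n_bins - 1)


def oversample(names_by_class, rng):
    """Repeat samples of rare classes until every class matches the largest."""
    target = max(len(names) for names in names_by_class.values())
    balanced = []
    for names in names_by_class.values():
        balanced.extend(names)
        # Draw extra samples with replacement to fill the gap.
        balanced.extend(rng.choices(names, k=target - len(names)))
    rng.shuffle(balanced)
    return balanced


# Hypothetical sample names paired with their positive-pixel ratios.
rng = random.Random(42)
ratios = {f"sample_{i:03d}.h5": rng.random() ** 3 for i in range(100)}

# Split first so that oversampled duplicates never reach the validation set.
names = sorted(ratios)
rng.shuffle(names)
split = int(0.8 * len(names))
train_names, val_names = names[:split], names[split:]

by_class = defaultdict(list)
for name in train_names:
    by_class[reclass(ratios[name])].append(name)

train_balanced = oversample(by_class, rng)

# One filename per line; the directory and file names are placeholders.
out_dir = Path(tempfile.mkdtemp())
(out_dir / "train.txt").write_text("\n".join(train_balanced))
(out_dir / "val.txt").write_text("\n".join(val_names))
```

After this runs, every class present in the training split appears the same number of times in `train.txt`, while `val.txt` keeps the original distribution.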

The above steps can be achieved by simply running the Split Train/Val Dataset cell in S1-2 Preprocess.ipynb.

You only have to modify the cfgs.py file and then run the S2-2 TrainSegModel.py file.