By Xin Wang, National Institute of Informatics, 2021

I am a new PyTorch user. If you have any suggestions or questions, please email wangxin at nii dot ac dot jp

- 2021-04: Add projects on ASVspoof ./project/03-asvspoof-mega for the paper "A Comparative Study on Recent Neural Spoofing Countermeasures for Synthetic Speech Detection" (on arXiv)

- 2020-04: Update core_scripts and functions

- 2020-11: Update for PyTorch 1.6

- 2020-11: Add preliminary projects on ASVspoof ./project/02-asvspoof

- 2020-08: Add tutorial materials ./tutorials. Most of the materials are Jupyter notebooks and can be run on your laptop using a CPU.

This repository hosts PyTorch code for the following projects:

2.1 Neural waveform model ./project/01-nsf

All NSF projects come with models pre-trained on CMU-arctic (4 speakers) and a one-click demo script for running inference and training.

Generated samples from pre-trained models can be found in ./project/01-nsf/*/__pre_trained/output.

Note that these projects are re-implementations of projects originally based on CURRENNT. All papers published so far used the CURRENNT implementation. Many samples can be found on the NSF homepage.

2.2 ASVspoof project with toy example ./project/04-asvspoof2021-toy

Downloading ASVspoof2019 takes time. Therefore, this project demonstrates how to train and evaluate the ASVspoof model using a toy dataset.

Please try this project before checking the other ASVspoof projects.

A similar project is used for the ASVspoof2021 LFCC-LCNN baseline (https://www.asvspoof.org/), although the LFCC front-end is slightly different.

2.3 Speech anti-spoofing for ASVspoof 2019 LA ./project/03-asvspoof-mega

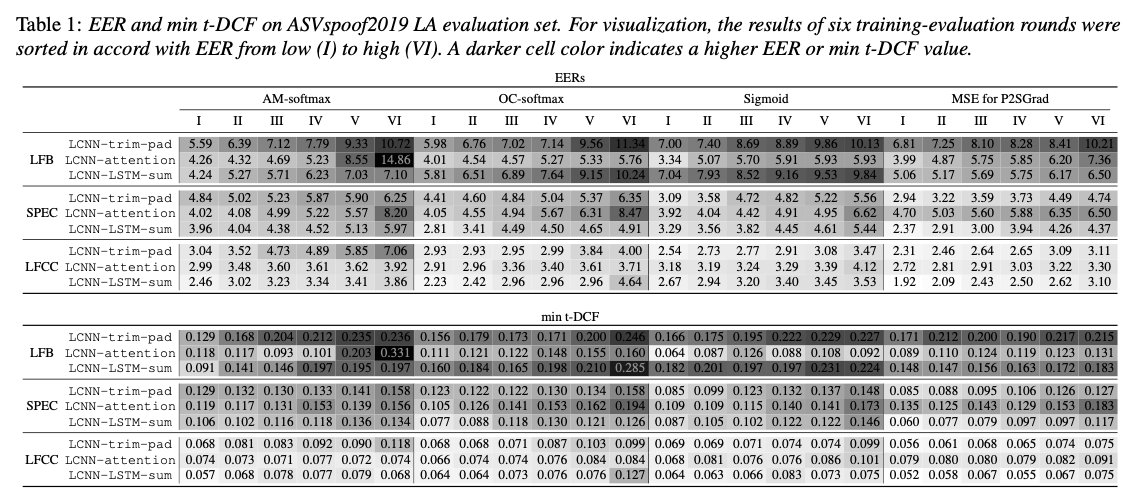

In total, 36 systems were investigated, and each was trained and evaluated in 6 rounds with different random seeds.

Pre-trained models, scores, and training recipes are all available.

To use this project, please do:

- Follow "4. Usage" below to set up the PyTorch environment;

- Download ASVspoof 2019 LA and convert FLAC to WAV;

- Put the evaluation-set waveforms in ./project/03-asvspoof-mega/DATA/asvspoof2019_LA/eval and the other waveforms in DATA/asvspoof2019_LA/train_dev;

- Go to ./project/03-asvspoof-mega and run the script ./project/03-asvspoof-mega/00_demo.sh. It will run the pre-trained models, compute EERs, and train a new model.

Now the script also accepts FLAC as input. Please check ./project/03-asvspoof-mega/README.
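00_demo.sh computes EERs with the scripts in this repository. For readers new to the metric, here is a minimal pure-Python sketch of the equal error rate (EER): the operating point where the rate of rejected bona fide trials equals the rate of accepted spoof trials. It assumes a higher score means "more likely bona fide" and simply sweeps thresholds over the observed scores. The function name is hypothetical and this is not the repository's implementation; tools that interpolate along the ROC/DET curve may give slightly different values.

```python
def compute_eer(bonafide_scores, spoof_scores):
    """Approximate EER by sweeping thresholds over all observed scores.

    Assumes a higher score means "more likely bona fide". Returns (eer, threshold).
    """
    if not bonafide_scores or not spoof_scores:
        raise ValueError("need at least one bona fide and one spoof score")
    best = None
    for threshold in sorted(set(bonafide_scores) | set(spoof_scores)):
        # miss rate: bona fide trials rejected (score below the threshold)
        frr = sum(s < threshold for s in bonafide_scores) / len(bonafide_scores)
        # false alarm rate: spoof trials accepted (score at or above the threshold)
        far = sum(s >= threshold for s in spoof_scores) / len(spoof_scores)
        gap = abs(frr - far)
        if best is None or gap < best[0]:
            # keep the threshold where the two error rates are closest
            best = (gap, (frr + far) / 2.0, threshold)
    return best[1], best[2]
```

For example, `compute_eer([0.9, 0.8, 0.7, 0.3], [0.1, 0.2, 0.4, 0.75])` returns `(0.25, 0.7)`: at threshold 0.7, one of four bona fide trials is rejected and one of four spoof trials is accepted.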

2.4 (Preliminary) speech anti-spoofing ./project/02-asvspoof

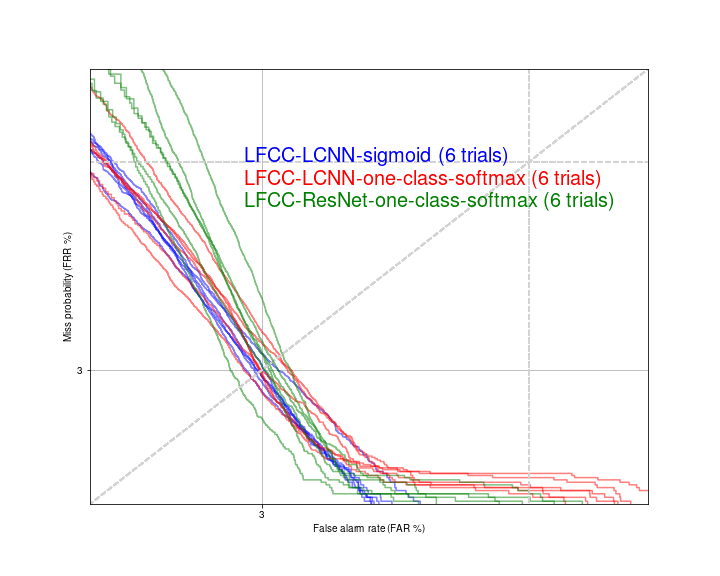

- Baseline LFCC + LCNN-binary-classifier (lfcc-lcnn-sigmoid)

- LFCC + LCNN + angular softmax (lfcc-lcnn-a-softmax)

- LFCC + LCNN + one-class softmax (lfcc-lcnn-ocsoftmax)

- LFCC + ResNet18 + one-class softmax (lfcc-restnet-ocsoftmax)

On ASVspoof2019 LA, the EER is around 3%, and the min-tDCF (legacy version) is around 0.06 to 0.08. I trained each system 6 times on various GPU devices (a single V100 or P100 card), each time with a different random initial seed. The figure above shows the DET curves for these systems.

As you can see, the results vary a lot when simply changing the initial random seed. Even with the same random seed, PyTorch environment, and deterministic algorithms selected, the trained model may differ because of CUDA and the GPU. You are encouraged to run the model multiple times with different random seeds and report the variance of the evaluation results.
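The multi-seed protocol recommended above can be sketched as follows. `train_and_evaluate` is a hypothetical callable (not part of this repository) that receives a seed, seeds Python, NumPy, and PyTorch itself (for example with `torch.manual_seed(seed)`), trains one model, and returns its EER. The sketch only reports the spread across runs.

```python
import statistics


def run_with_seeds(train_and_evaluate, seeds):
    """Train/evaluate once per seed and summarize the EERs across runs.

    train_and_evaluate is a hypothetical callable: it receives a seed, is
    expected to seed Python, NumPy and PyTorch itself, trains one model,
    and returns its EER.
    """
    seeds = list(seeds)
    if len(seeds) < 2:
        raise ValueError("use at least two seeds to estimate the spread")
    eers = [train_and_evaluate(seed) for seed in seeds]
    return {
        "runs": len(eers),
        "mean": statistics.mean(eers),
        "stdev": statistics.stdev(eers),  # sample standard deviation
        "min": min(eers),
        "max": max(eers),
    }
```

Reporting the mean together with the standard deviation (or the min/max range) over, say, 6 seeds makes it clear whether a difference between two systems is larger than the seed-to-seed variation.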

For LCNN, please check (Lavrentyeva 2019); for LFCC, please check (Sahidullah 2015); for one-class softmax in ASVspoof, please check (Zhang 2020).
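LFCC (Sahidullah 2015) follows the MFCC recipe but uses triangular filters spaced linearly in Hz instead of on the mel scale. The NumPy sketch below illustrates that pipeline: framing, Hamming window, power spectrum, linear filterbank, log, and DCT-II. The frame length, shift, FFT size, and filter/cepstrum counts are illustrative assumptions, not the settings of the LFCC front-end used in this repository, and extras such as delta coefficients are omitted.

```python
import numpy as np


def linear_filterbank(num_filters, n_fft, sample_rate):
    """Triangular filters whose edges are equally spaced on a linear Hz axis."""
    num_bins = n_fft // 2 + 1
    bin_freqs = np.linspace(0.0, sample_rate / 2.0, num_bins)  # frequency of each rfft bin
    edges = np.linspace(0.0, sample_rate / 2.0, num_filters + 2)
    fbank = np.zeros((num_filters, num_bins))
    for m in range(num_filters):
        left, center, right = edges[m], edges[m + 1], edges[m + 2]
        rising = (bin_freqs - left) / (center - left)
        falling = (right - bin_freqs) / (right - center)
        fbank[m] = np.clip(np.minimum(rising, falling), 0.0, None)
    return fbank


def dct_ii_matrix(num_ceps, num_filters):
    """Orthonormal DCT-II basis, shape [num_ceps, num_filters]."""
    n = np.arange(num_filters)
    k = np.arange(num_ceps)[:, None]
    basis = np.cos(np.pi / num_filters * (n + 0.5) * k) * np.sqrt(2.0 / num_filters)
    basis[0] /= np.sqrt(2.0)
    return basis


def lfcc(signal, sample_rate=16000, frame_length=320, frame_shift=160,
         n_fft=512, num_filters=20, num_ceps=20):
    """Return LFCCs of shape [num_frames, num_ceps] (illustrative settings)."""
    signal = np.asarray(signal, dtype=np.float64)
    if num_ceps > num_filters or len(signal) < frame_length:
        raise ValueError("need num_ceps <= num_filters and len(signal) >= frame_length")
    # non-centered framing: frame t covers samples [t * shift, t * shift + frame_length)
    num_frames = 1 + (len(signal) - frame_length) // frame_shift
    index = np.arange(frame_length)[None, :] + frame_shift * np.arange(num_frames)[:, None]
    frames = signal[index] * np.hamming(frame_length)
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2
    fbank_energy = power @ linear_filterbank(num_filters, n_fft, sample_rate).T
    log_energy = np.log(fbank_energy + 1e-10)  # small floor avoids log(0)
    return log_energy @ dct_ii_matrix(num_ceps, num_filters).T
```

With these settings, one second of 16 kHz audio gives 99 frames of 20 coefficients. Replacing `linear_filterbank` with a mel-spaced filterbank would turn the same pipeline into MFCC.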

Only a few packages are required:

- Python 3 (tested on Python 3.8)

- PyTorch 1.6 and above (tested on PyTorch 1.6)

- numpy (tested on 1.18.1)

- scipy (tested on 1.4.1)

- torchaudio (tested on 0.6.0)

- librosa (0.8.0) with numba (0.48.0) and mir_eval (0.6) for some music projects

I use miniconda to manage the Python environment. You may use ./env.yml or ./env2.yml to create the environment:

```sh
# create the environment
conda env create -f env.yml

# load the environment (whose name is pytorch-1.6)
conda activate pytorch-1.6
```

Take cyc-noise-nsf as an example:

```sh
# load the Python environment
# (alternatively, you may use env.sh to activate the conda environment)
conda activate pytorch-1.6

# cd into one project
cd project/01-nsf/cyc-noise-nsf-4

# add PYTHONPATH and activate the conda environment
source ../../../env.sh

# run the script
bash 00_demo.sh
```

The printed messages will tell you what is happening. The script may need 1 day or more to finish.

You may also run the job in the background instead of keeping the terminal open while waiting for it:

```sh
# run the script in the background and write all output to log_batch
# (prefix the command with nohup if the job must survive closing the terminal)
bash 00_demo.sh > log_batch 2>&1 &
```

The above steps will download the CMU-arctic data, run waveform generation using a pre-trained model, and train a new model (which may take 1 day or more on an Nvidia V100 GPU).

Here are some details on the data format and project file structure:

- Waveform: 16/32-bit PCM or 32-bit float WAV that can be read by scipy.io.wavfile.read

- Other data: binary, 32-bit float, little-endian (numpy dtype <f4). The data can be read in Python by:

```python
>>> import numpy as np
>>> # for data of shape [N, M]; set filepath and M (the feature dimension) first
>>> datatype = np.dtype(('<f4', (M,)))
>>> with open(filepath, 'rb') as f:
...     data = np.fromfile(f, dtype=datatype)
```

I assume data are stored in C-contiguous format (row-major). There are helper functions in ./core_scripts/data_io/io_tools.py to read and write binary data:

```python
>>> import numpy as np
>>> # core_scripts must be importable, e.g., after `source env.sh` sets PYTHONPATH
>>> import core_scripts.data_io.io_tools as readwrite
>>> # create a float32 data array
>>> data = np.asarray(np.random.randn(5, 3), dtype=np.float32)
>>> # write to './temp.bin' and read it back as data2 (3 = number of columns)
>>> readwrite.f_write_raw_mat(data, './temp.bin')
>>> data2 = readwrite.f_read_raw_mat('./temp.bin', 3)
>>> data - data2  # the result should be all zeros
```
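To inspect such files without importing core_scripts, the NumPy sketch below writes and reads the same layout described above (row-major, little-endian float32, no header). `write_raw_mat` and `read_raw_mat` are hypothetical names; they are not the repository's `f_write_raw_mat`/`f_read_raw_mat`, whose exact behavior may differ.

```python
import numpy as np


def write_raw_mat(data, path):
    """Write an array as raw little-endian float32 values in row-major (C) order."""
    np.ascontiguousarray(data, dtype='<f4').tofile(path)


def read_raw_mat(path, dim):
    """Read raw little-endian float32 values and reshape them to [N, dim]."""
    flat = np.fromfile(path, dtype='<f4')
    if flat.size % dim != 0:
        raise ValueError(f"{path}: {flat.size} values is not a multiple of {dim}")
    return flat.reshape(-1, dim)
```

Because the file carries no shape information, the reader must know the number of columns; a [5, 3] float32 matrix occupies exactly 5 × 3 × 4 = 60 bytes.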

| Directory | Function |
|---|---|
| ./core_scripts | scripts to manage the training process, data I/O, and so on |
| ./core_modules | finished PyTorch modules |
| ./sandbox | new functions and modules to be tested |
| ./project | project directories; each folder corresponds to one model for one dataset |
| ./project/*/*/main.py | script to load data and run training and inference |
| ./project/*/*/model.py | model definition based on PyTorch APIs |
| ./project/*/*/config.py | configurations for training/val/test set data |

The motivation is to separate the training and inference process, the model definition, and the data configuration. For example:

- To define a new model, change model.py only

- To run on a new database, change config.py only

- Input data: 00_demo.sh above will download a data package for the CMU-arctic corpus, including wav (normalized), f0, and Mel-spectrogram. If you want to train the model on your own data, please prepare the input/output data yourself. The CMU-arctic data package (in ./project/DATA after running 00_demo.sh) contains scripts to extract features from 16 kHz waveforms.

- Batch size: the implementation works only for batch size = 1. My previous experiments only used batch size = 1, and I haven't updated the data I/O to load utterances of varying length.

- To 24 kHz: most of my experiments were done on 16 kHz waveforms. If you want to try 24 kHz waveforms, the FIR or sinc digital filters in the model may need to be changed for better performance:

  - in hn-nsf: lp_v, lp_u, hp_v, and hp_u are calculated for the 16 kHz configuration. For a different sampling rate, you may use the online tool http://t-filter.engineerjs.com to get the filter coefficients. In this case, the stop-band of lp_v and lp_u is extended to 12k, while the pass-band of hp_v and hp_u is extended to 12k. The reason is that, no matter what the sampling rate is, the actual formants (in Hz) and spectra of sounds do not change with the sampling rate;

  - in hn-sinc-nsf and cyc-noise-nsf: for the same reason, the cut-off-frequency value (0, 1) should be adjusted. I will try (hidden_feat * 0.2 + uv * 0.4 + 0.3) * 16 / 24 in model.CondModuleHnSincNSF.get_cut_f();
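The 16 / 24 factor follows from how normalized frequencies work: a normalized cut-off is a fraction of the Nyquist frequency (half the sampling rate), so keeping the same cut-off in Hz at a higher sampling rate requires a proportionally smaller normalized value. The sketch below assumes the (0, 1) value is relative to the Nyquist frequency (the ratio is the same if it is relative to the sampling rate itself); `rescale_cutoff` is a hypothetical helper, not a function in this repository.

```python
def rescale_cutoff(cutoff_norm, src_rate=16000, tgt_rate=24000):
    """Convert a normalized cut-off (1.0 == Nyquist) so its value in Hz is unchanged."""
    if not 0.0 < cutoff_norm < 1.0:
        raise ValueError("normalized cut-off must lie in (0, 1)")
    cutoff_hz = cutoff_norm * src_rate / 2.0  # absolute frequency at the source rate
    return cutoff_hz / (tgt_rate / 2.0)       # same Hz, expressed at the target rate
```

For example, a normalized cut-off of 0.9 at 16 kHz (7.2 kHz) becomes 0.6 at 24 kHz, which is exactly 0.9 * 16 / 24.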

There may be more differences from the CURRENNT implementation, but here are the important ones:

- "Batch-normalization": in CURRENNT, "batch-normalization" is conducted along the length of the sequence, i.e., treating each frame as one sample. There is no equivalent implementation in this PyTorch repository;

- No bias in CNN and FF: because of the first point, NSF in this repository uses bias=False for the CNN and feedforward layers in the neural filter blocks, which can help keep the hidden signals around 0;

- Smaller learning rate: because of the first point, the learning rate in this repository is decreased from 0.0003 to a smaller value. Accordingly, more training epochs are used;

- STFT framing/padding: in CURRENNT, the first frame starts from the first sample of a signal; in this PyTorch repository (as in Librosa), the first frame is centered on the first sample of a signal, and the frame is padded with 0;

- (Minor) STFT backward: in CURRENNT, STFT backward follows the steps in this paper; in this PyTorch repository, backward over STFT is done by the PyTorch library.

- ...
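The framing difference can be illustrated by listing where each analysis frame starts. In this pure-Python sketch, the centered case pads `frame_length // 2` zeros on both sides, as described above, so frame t is centered on sample `t * frame_shift`; the non-centered case starts the first frame at sample 0. The function name and the sizes in the usage note are illustrative assumptions.

```python
def frame_starts(num_samples, frame_length, frame_shift, centered):
    """Start index (in the original signal) of every analysis frame.

    centered=False: the first frame starts at sample 0.
    centered=True: the signal is padded with frame_length // 2 zeros on both
    sides, so frame t is centered on sample t * frame_shift; negative start
    indices point into the zero padding.
    """
    pad = frame_length // 2 if centered else 0
    padded_length = num_samples + 2 * pad
    if padded_length < frame_length:
        return []
    num_frames = 1 + (padded_length - frame_length) // frame_shift
    return [t * frame_shift - pad for t in range(num_frames)]
```

With 1000 samples, 400-sample frames, and a 160-sample shift, the non-centered version gives 4 frames starting at [0, 160, 320, 480], while the centered version gives 7 frames starting at [-200, -40, 120, 280, 440, 600, 760]. The two versions therefore differ in both the number of frames and their alignment with the waveform.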

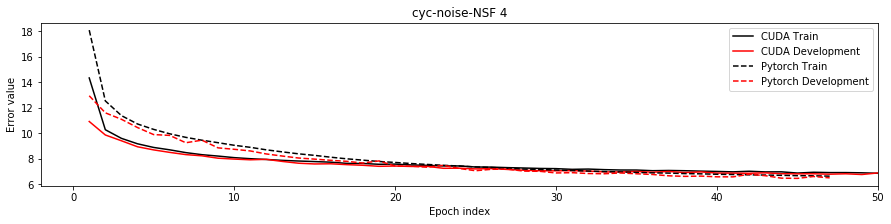

The learning curves look similar to the CURRENNT (cuda) version.

- Xin Wang and Junichi Yamagishi. 2019. Neural Harmonic-plus-Noise Waveform Model with Trainable Maximum Voice Frequency for Text-to-Speech Synthesis. In Proc. SSW, pages 1–6. ISCA, September. http://www.isca-speech.org/archive/SSW_2019/abstracts/SSW10_O_1-1.html

- Xin Wang, Shinji Takaki, and Junichi Yamagishi. 2020. Neural source-filter waveform models for statistical parametric speech synthesis. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 28:402–415. https://ieeexplore.ieee.org/document/8915761/

- Xin Wang and Junichi Yamagishi. 2020. Using Cyclic-noise as source for Neural source-filter waveform model. Accepted, Interspeech.

- Galina Lavrentyeva, Sergey Novoselov, Andzhukaev Tseren, Marina Volkova, Artem Gorlanov, and Alexandr Kozlov. 2019. STC Antispoofing Systems for the ASVspoof2019 Challenge. In Proc. Interspeech, pages 1033–1037.

- You Zhang, Fei Jiang, and Zhiyao Duan. 2020. One-Class Learning towards Generalized Voice Spoofing Detection. arXiv preprint arXiv:2010.13995.

- Md Sahidullah, Tomi Kinnunen, and Cemal Hanilçi. 2015. A Comparison of Features for Synthetic Speech Detection. In Proc. Interspeech, pages 2087–2091.