This repository contains a reference implementation of the algorithms described in our paper.

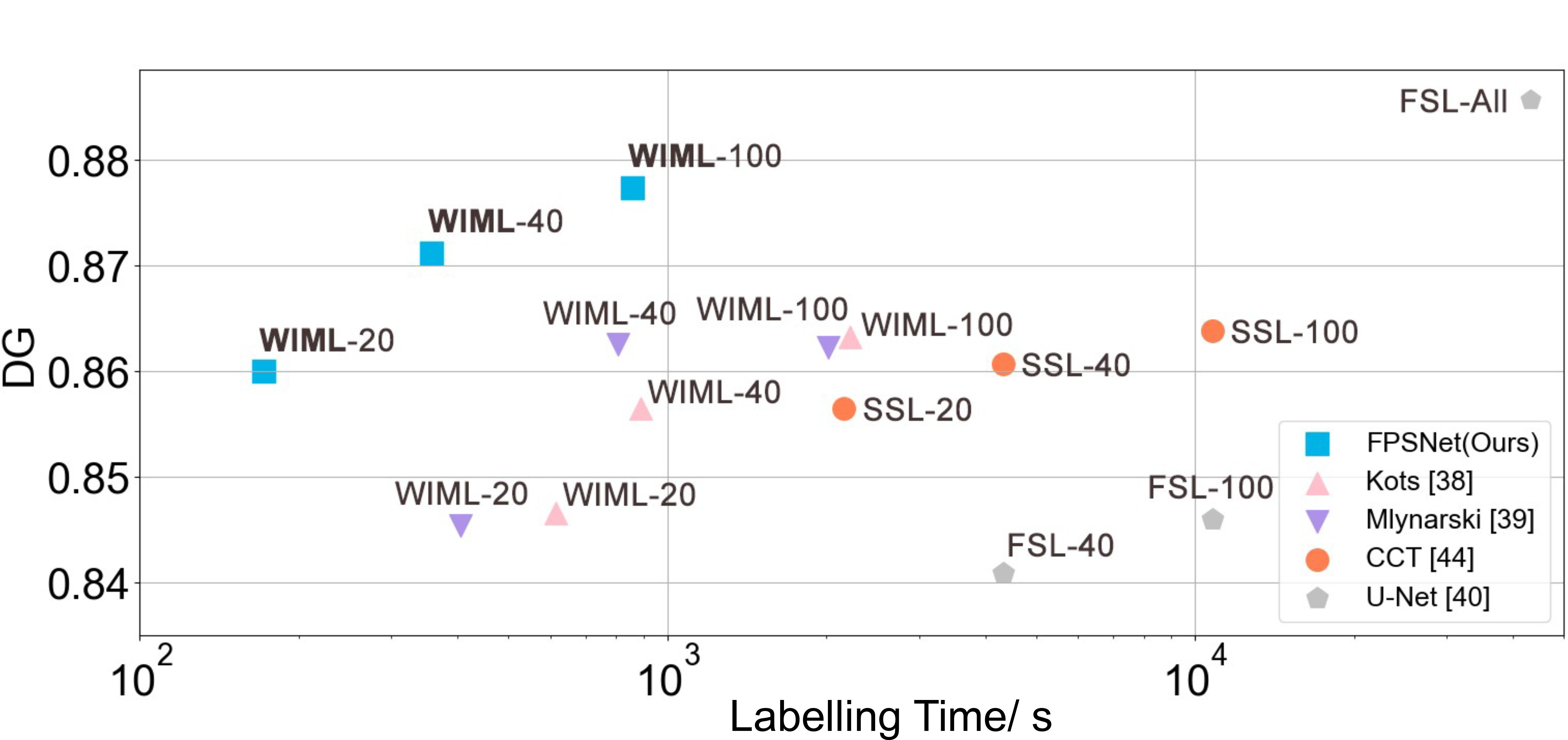

Common medical image segmentation tasks require large training datasets with pixel-level annotations, which are expensive and time-consuming to prepare. In this paper, we address the following two issues in a unified framework: 1) how to use weak labels to reduce the annotation time of strong labels; 2) how to use weak labels and very few strong labels to reach the desired segmentation accuracy. To this end, we propose a novel Weakly-Interactive-Mixed Learning (WIML) framework with a multi-task Full-Parameter-Sharing Network (FPSNet), which alleviates the annotation burden for medical images and obtains the desired segmentation results with only weak labels. Experiments demonstrate that our method outperforms several state-of-the-art segmentation methods with minimal annotation effort. This work has the potential to free clinicians from the exhausting labor of annotation and can be applied to different types of medical images for further clinical use.

The following dependencies are needed:

- PyTorch version >= 1.0.1
- Common packages: NumPy, SimpleITK, OpenCV, PyQt5, SciPy, skimage...
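The dependency list above can be checked before launching anything. The following is a minimal sketch, not part of the repository: the helper name `find_missing` is hypothetical, and the mapping uses the usual import names for each package (for example `cv2` for OpenCV and `skimage` for scikit-image). It only reports whether each package can be found and does not verify the PyTorch version.

```python
import importlib.util

# Display name -> import name for the packages listed above.
# This mapping is an assumption for illustration; it is not shipped with the repository.
REQUIRED_MODULES = {
    "PyTorch": "torch",
    "NumPy": "numpy",
    "SimpleITK": "SimpleITK",
    "OpenCV": "cv2",
    "PyQt5": "PyQt5",
    "SciPy": "scipy",
    "scikit-image": "skimage",
}


def find_missing(modules=REQUIRED_MODULES):
    """Return the display names of packages whose import name cannot be found."""
    missing = []
    for display_name, import_name in modules.items():
        # find_spec returns None when a top-level module is not installed.
        if importlib.util.find_spec(import_name) is None:
            missing.append(display_name)
    return missing


if __name__ == "__main__":
    missing = find_missing()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All listed packages were found.")
```

Running the script prints either the missing packages or a confirmation that all of them were found.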

cd WIML/WIA

python main.py

We have prepared some images in the "data_source" folder. First, click the "Load Image" button to load an image for annotation. Second, click the "Segment" button to run the initial segmentation. Two colored contours will appear on the image: the green one is the initial prediction, and the blue one is the ground truth of the image, shown for comparison. Third, click on the image to refine the initial mask into the desired strong label: left-click on under-segmented regions (foreground) and right-click on over-segmented regions (background). Finally, click the "Save Segmentation" button. The CAM performance, initial mask, strong label, and interaction record will be saved in the "result" folder.
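The click convention described above (left button for foreground, right button for background) can be illustrated with a small sketch. This is not the tool's actual code: the `Click` class and `split_clicks` function are hypothetical names, and the sketch only shows how recorded clicks might be separated into foreground and background seed points.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[int, int]


@dataclass(frozen=True)
class Click:
    """One user click in image coordinates (hypothetical structure)."""
    x: int
    y: int
    button: str  # "left" marks foreground, "right" marks background


def split_clicks(clicks: List[Click]) -> Tuple[List[Point], List[Point]]:
    """Separate clicks into foreground and background seed points."""
    foreground: List[Point] = []
    background: List[Point] = []
    for click in clicks:
        if click.button == "left":
            # Left click: region was under-segmented, so it belongs to the foreground.
            foreground.append((click.x, click.y))
        elif click.button == "right":
            # Right click: region was over-segmented, so it belongs to the background.
            background.append((click.x, click.y))
        else:
            raise ValueError(f"Unknown mouse button: {click.button!r}")
    return foreground, background


clicks = [Click(120, 84, "left"), Click(40, 200, "right"), Click(130, 90, "left")]
fg, bg = split_clicks(clicks)
print("foreground seeds:", fg)
print("background seeds:", bg)
```

How the seed points are then used to refine the mask is up to the tool itself and is not covered by this sketch.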

Note that the pretrained model was trained with the weakly-supervised subnetwork of the FPSNet on liver CT data with image tags. It can be downloaded from [google drive] and should be placed at WIA/weakly_supervised_model.pth.

cd WIML/MSL/code

python train_MSL_FPSNet.py

cd WIML/WSL/code

python test_MSL_FPSNet.py

We have prepared some images in the "test_data" folder for testing.

Note that the pretrained model was trained with the FPSNet using only five strong labels generated by the WIA method. It can be downloaded from [google drive] and should be placed at MSL/code/saved/WIML_FPSNet_five_strong_labels.pth.
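Since both pretrained models must be downloaded and placed by hand, a quick check of their locations can save a failed run. The sketch below is an illustration only: the function name `missing_weights` is hypothetical, and it assumes that both paths mentioned in this README are relative to the top-level `WIML` directory.

```python
from pathlib import Path

# Paths taken from this README; treating them as relative to the
# repository root is an assumption made for this sketch.
EXPECTED_WEIGHTS = [
    "WIA/weakly_supervised_model.pth",
    "MSL/code/saved/WIML_FPSNet_five_strong_labels.pth",
]


def missing_weights(repo_root):
    """Return the expected weight files that are not present under repo_root."""
    root = Path(repo_root)
    return [rel for rel in EXPECTED_WEIGHTS if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_weights("WIML")
    if missing:
        for rel in missing:
            print("Missing pretrained model:", rel)
    else:
        print("Both pretrained models are in place.")
```

Run it from the directory that contains `WIML`, or pass a different root to `missing_weights`.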

This project is intended for academic research only.