PyTorch implementation of emotion generation with a VAE, using EmoV-DB.

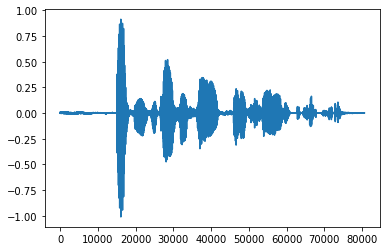

- The idea behind this project is to build a machine learning model that can generate additional samples of voiced emotions.

- With the pre-trained model, you can use the latent vector of your voice both for classification and for generating a new sample that sounds similar to your voice, by means of the reparametrization trick.
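The reparametrization trick mentioned above can be sketched as follows. This is a minimal illustration, not the code from this repository: the function name `reparameterize`, the 128-dimensional latent size, and the example values are assumptions, and it presumes the encoder outputs a mean and a log-variance per latent dimension.

```python
import torch


def reparameterize(mu: torch.Tensor, log_var: torch.Tensor) -> torch.Tensor:
    """Draw z ~ N(mu, sigma^2) while keeping gradients w.r.t. mu and log_var."""
    std = torch.exp(0.5 * log_var)  # sigma = exp(log(sigma^2) / 2)
    eps = torch.randn_like(std)     # noise sampled from N(0, I)
    return mu + eps * std


# Hypothetical encoder output for a single utterance (latent size is assumed).
torch.manual_seed(0)
mu = torch.zeros(1, 128)
log_var = torch.full((1, 128), -2.0)

# Two draws from the same posterior give two nearby but distinct latent vectors.
z_a = reparameterize(mu, log_var)
z_b = reparameterize(mu, log_var)
```

Decoding `z_a` and `z_b` would yield two variations of the same input, which is how a new sample similar to the original voice can be obtained; `mu` itself can serve as the feature vector for classification.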

Dataset: EmoV-DB

- Download EmoV-DB

- Run

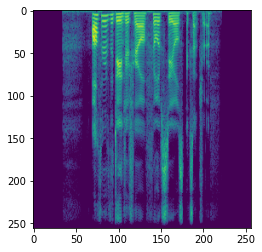

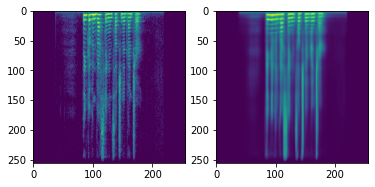

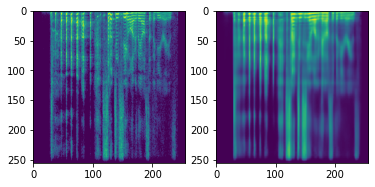

`python 'emodb_preprocess.py' --data_dir './data/audio/' --frame_size 256 --hop_length 313 --duration 5`

This splits the data into 80% train and 20% test. The maximum audio length is 5 seconds. A spectrogram directory should be created.
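For readers who want to reproduce an 80/20 split on their own file lists, here is a minimal sketch using only the standard library. It is not taken from `emodb_preprocess.py`; the helper name `split_train_test`, the fixed seed, and the example file names are hypothetical.

```python
import random


def split_train_test(paths, train_fraction=0.8, seed=0):
    """Shuffle file paths deterministically and split them into train and test lists."""
    shuffled = sorted(paths)               # sort first so the result does not depend on input order
    random.Random(seed).shuffle(shuffled)  # seeded shuffle for reproducibility
    n_train = round(len(shuffled) * train_fraction)
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical file names, used only for illustration.
files = [f"clip_{i:03d}.wav" for i in range(10)]
train_files, test_files = split_train_test(files)
```

Fixing the seed keeps the test set stable across runs, so results from different training runs remain comparable.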

- Run

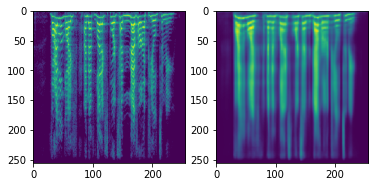

`python model_training.py`

This saves a model checkpoint after each epoch.

- To get better reconstruction results:

  - Use more data, e.g., augmentations or another dataset.

  - Experiment with `reconstruction_term_weight`.
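To make the role of `reconstruction_term_weight` concrete, the sketch below shows one common way such a weight enters a VAE loss: it scales the reconstruction error relative to the KL term. This is an assumption about the loss, not the repository's actual implementation; the function `vae_loss`, the use of mean squared error, and the tensor shapes are illustrative.

```python
import torch
import torch.nn.functional as F


def vae_loss(x, x_hat, mu, log_var, reconstruction_term_weight):
    """Weighted reconstruction error plus KL divergence to a standard normal prior."""
    recon = F.mse_loss(x_hat, x, reduction="mean")
    # Closed-form KL(N(mu, sigma^2) || N(0, I)), summed over latent dims, averaged over the batch.
    kl = -0.5 * torch.mean(torch.sum(1 + log_var - mu.pow(2) - log_var.exp(), dim=1))
    return reconstruction_term_weight * recon + kl, recon, kl


# Hypothetical batch of two 256x256 spectrograms and a 128-dimensional latent space.
x = torch.zeros(2, 1, 256, 256)
x_hat = x + 0.1                 # a deliberately imperfect reconstruction
mu = torch.zeros(2, 128)
log_var = torch.zeros(2, 128)   # posterior equals the prior, so the KL term is zero

loss_low, recon, kl = vae_loss(x, x_hat, mu, log_var, reconstruction_term_weight=1.0)
loss_high, _, _ = vae_loss(x, x_hat, mu, log_var, reconstruction_term_weight=1000.0)
```

Under this formulation, a larger weight pushes the model toward sharper reconstructions at the cost of a less regular latent space, while a smaller weight does the opposite.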

- Run

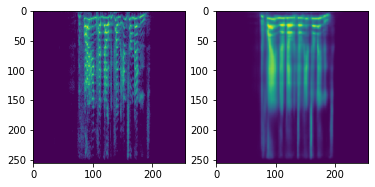

`python generator.py`

This script takes spectrograms from SPECTROGRAM_PATH and saves the generated audio signals in SAVE_DIR_GENERATED.

256x256 spectrogram model: place it under 'saved_models_256'.