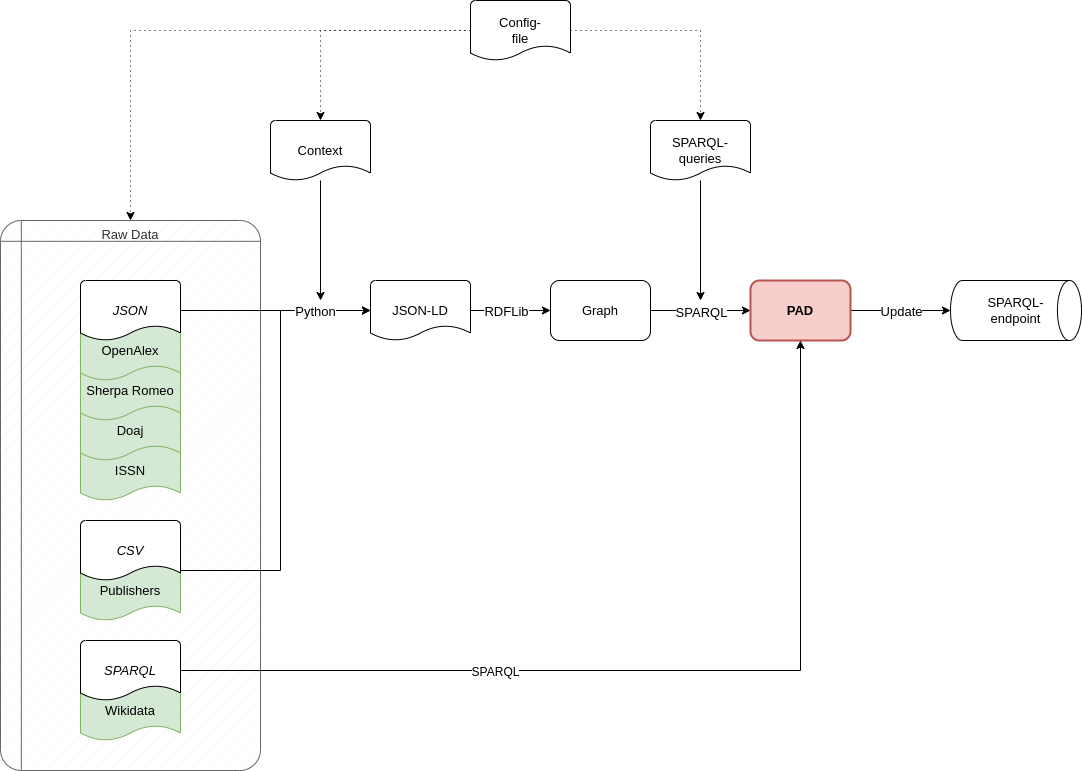

This repository contains the source code developed in the Journal Observatory project for collecting, converting, unifying, and aggregating information about scholarly communication platforms from various data sources using the Scholarly Communication Platform Framework format.

To run any Python script directly, add the path to the src/ folder to the PYTHONPATH environment variable so that the sub-modules can be found.

The application can be configured by providing the config/job.conf file. Documentation of this file can be found in config/template.conf.

The bulk module (src/bulk/) contains all the necessary code to download the (latest version of the) data for various sources of journal information. Currently, the following data sources are supported:

Also provided is a set of Excel files (data/publisher_peer_review/xlsx), obtained directly from collaborating publishers, containing information about the peer-review policies of their journals in accordance with the STM peer-review terminology.

Additionally, there is support to load ISSN-L information from ISSN, which links ISSN identifiers to ISSN-L identifiers.

For each data source, the data can be downloaded by setting the appropriate configuration settings in config/job.conf and running python bulk/bulk_{source}.py. This will download the data to the folder specified in the data_path configuration option of the source.

The store module converts platform data from various sources into separate PADs. Currently, the following data sources are supported:

- OpenAlex (JSON, from bulk module result)

- DOAJ (JSON, from bulk module result)

- Sherpa Romeo (JSON, from bulk module result)

- Publisher Peer Review (JSON, from bulk module result)

- ISSN-L (CSV, from bulk module result)

- Wikidata (SPARQL, directly from endpoint)

Translation between JSON data and PADs is relatively easy to extend. The functions in json_convert.py provide a generic way to add a context to a JSON document, and then converting the resulting document by providing a SPARQL query which inserts the appropriate graphs.
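A minimal sketch of the first half of that procedure, attaching a context to a plain JSON record. The helper name `add_context`, the sample record, and the `https://example.org/` base are illustrative assumptions; this is not the actual API of json_convert.py.

```python
import json


def add_context(record: dict, context: dict) -> dict:
    """Return a copy of `record` with a JSON-LD @context attached (hypothetical helper)."""
    return {"@context": context, **record}


# Illustrative record and context; the identifiers are placeholders.
record = {"id": "journal-1", "name": "Example Journal"}
context = {
    "@base": "https://example.org/",
    "id": "@id",
    "name": "https://schema.org/name",
}

document = add_context(record, context)
print(json.dumps(document, indent=2))
```

The resulting document can then be parsed as JSON-LD and passed to a SPARQL query that builds the PAD graphs.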

CSV data is currently handled by converting the data into JSON using a custom script, and then following the same procedure as outlined above.
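The CSV step could look roughly like the sketch below, which uses only the standard library. The column names `issn` and `issn_l` and the sample rows are assumptions for illustration; the real ISSN-L file may use different headers and delimiters, and the project's own script may work differently.

```python
import csv
import io
import json


def csv_to_records(csv_text: str) -> list:
    """Parse CSV text into a list of dicts, one per row, keyed by column header."""
    return list(csv.DictReader(io.StringIO(csv_text)))


# Hypothetical sample data with assumed column names.
sample = "issn,issn_l\n1234-5678,1234-5678\n8765-4321,1234-5678\n"

records = csv_to_records(sample)
print(json.dumps(records, indent=2))
```

Each resulting record is an ordinary JSON object, so it can be given a context and converted in the same way as the other JSON sources.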

Data sources which provide a SPARQL endpoint only need a list of platform identifiers and a SPARQL query to convert the data.

PADs are stored directly in a compatible triplestore via a SPARQL endpoint.
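As a rough illustration of what storing data via a SPARQL endpoint involves, the sketch below builds (but does not send) a SPARQL 1.1 Update request using only the standard library. The endpoint URL, graph IRI, and triple are placeholders, and the helper `build_insert_request` is hypothetical; the store module itself may use a different mechanism.

```python
import urllib.request


def build_insert_request(endpoint: str, graph_iri: str, triples: str) -> urllib.request.Request:
    """Build a SPARQL 1.1 Update request that inserts triples into a named graph."""
    update = f"INSERT DATA {{ GRAPH <{graph_iri}> {{ {triples} }} }}"
    return urllib.request.Request(
        endpoint,
        data=update.encode("utf-8"),
        headers={"Content-Type": "application/sparql-update"},
        method="POST",
    )


# Placeholder endpoint, graph and triple (assumptions, not project settings).
request = build_insert_request(
    "http://localhost:3030/pads/update",
    "https://example.org/pad/1/assertion",
    '<https://example.org/journal/1> <https://schema.org/name> "Example Journal" .',
)
print(request.get_method(), request.full_url)
# Sending it would be: urllib.request.urlopen(request)
```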

A very basic example of unification of PADs can be found in the store.job_unify module. This module clusters PADs on any matching object of dcterms:identifier for a scpo:Platform. The PADs in a cluster are collected, their assertions are unified, and the source PADs are linked via the pad:hasSourceAssertion property. The resulting PADs are stored in a compatible triplestore via a SPARQL endpoint. This triplestore is the basis for the Journal Observatory prototype.
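The clustering idea can be sketched in plain Python with a small union-find structure. The PAD names and identifiers below are made up, and whether store.job_unify merges clusters transitively in this way is an assumption.

```python
from collections import defaultdict


def cluster_by_identifier(pads: dict) -> list:
    """Group PAD ids that share at least one identifier, directly or transitively."""
    parent = {pad: pad for pad in pads}

    def find(pad):
        # Follow parent links to the cluster representative, compressing the path.
        while parent[pad] != pad:
            parent[pad] = parent[parent[pad]]
            pad = parent[pad]
        return pad

    first_seen = {}  # identifier -> first PAD that carried it
    for pad, identifiers in pads.items():
        for identifier in identifiers:
            if identifier in first_seen:
                parent[find(pad)] = find(first_seen[identifier])
            else:
                first_seen[identifier] = pad

    clusters = defaultdict(set)
    for pad in pads:
        clusters[find(pad)].add(pad)
    return sorted(sorted(cluster) for cluster in clusters.values())


# Hypothetical PADs with the identifiers found on their scpo:Platform.
pads = {
    "pad:openalex-1": {"issn:1234-5678", "openalex:S1"},
    "pad:doaj-1": {"issn:1234-5678", "issn:8765-4321"},
    "pad:romeo-1": {"issn:8765-4321"},
    "pad:doaj-2": {"issn:1111-2222"},
}
print(cluster_by_identifier(pads))
```

Here the first three PADs end up in one cluster because they are chained together by shared ISSNs, while the last one stays on its own.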

To transform JSON into RDF, generally the only thing that is needed is to add a context. In JSON-LD, this context is just syntactic sugar: it provides short names for identifiers. We can use it to transform JSON into JSON-LD by defining the JSON keys as shortcuts for proper identifiers.

There are some issues with this approach. For one, it can be hard to find identifiers for some keys, because the original designers did not need to think about this. Keys like “name” can be simple enough (for instance: https://schema.org/name), but for publisher_policy.permitted_oa.embargo it can be difficult to find an ontology that already describes this key. It would be most efficient if data providers themselves described the keys in their JSON data (and provided identifiers). Another solution is to provide an ad-hoc dummy identifier by prefixing the key with the website of the data provider: publisher_policy.permitted_oa.embargo then becomes https://v2.sherpa.ac.uk/id/publisher_policy_permitted_oa_embargo or romeo:publisher_policy_permitted_oa_embargo. This can be done by constructing the @context by hand, or by providing the @vocab JSON-LD keyword.
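When constructing such identifiers by hand becomes tedious, they can be derived from the key paths. The sketch below is one possible way to do that; the helper `adhoc_identifiers` is hypothetical, the record is a trimmed illustration that only borrows the key names mentioned above, and lists of objects are not handled.

```python
def adhoc_identifiers(document: dict, namespace: str, path: tuple = ()) -> dict:
    """Map every dotted key path in a nested JSON object to a namespace-prefixed identifier."""
    mapping = {}
    for key, value in document.items():
        key_path = path + (key,)
        mapping[".".join(key_path)] = namespace + "_".join(key_path)
        if isinstance(value, dict):
            mapping.update(adhoc_identifiers(value, namespace, key_path))
    return mapping


# Illustrative record; the values are made up.
record = {"publisher_policy": {"permitted_oa": {"embargo": "12 months"}}}

identifiers = adhoc_identifiers(record, "https://v2.sherpa.ac.uk/id/")
for key_path, identifier in identifiers.items():
    print(key_path, "->", identifier)
```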

Adding the @vocab keyword can have unintended side effects, such as key collisions, so it is not recommended. On the other hand, failing to define a key without providing the @vocab keyword leads to the omission of that key when converting the JSON-LD to RDF.
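Because undefined keys disappear silently, it can help to check a document against its context before converting it. The following sketch does this for a flat context only; the helper `undefined_keys` is hypothetical, and it deliberately ignores scoped contexts and keys written as compact or absolute IRIs.

```python
def undefined_keys(document: dict, context: dict) -> set:
    """Return keys used in the document that the (flat) context does not define."""
    if "@vocab" in context:
        return set()  # @vocab gives every otherwise undefined key an identifier
    missing = set()

    def walk(node):
        if isinstance(node, dict):
            for key, value in node.items():
                if not key.startswith("@") and key not in context:
                    missing.add(key)
                walk(value)
        elif isinstance(node, list):
            for item in node:
                walk(item)

    walk(document)
    return missing


context = {"id": "@id", "name": "https://schema.org/name"}
document = {"id": "journal-1", "name": "Example Journal", "nest": {"key1": "value1"}}
print(sorted(undefined_keys(document, context)))  # ['key1', 'nest']
```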

One of the main uses of JSON is defining nested data. RDF does support nesting, but because it is built on the idea of triples, nesting can be unintuitive. In RDF, nested data structures need an intermediate node.

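The JSON-LD examples below can be converted to Turtle with the following helper script (json-ld-to-turtle), where record holds the JSON-LD document as a string:

    import json

    from pyld import jsonld
    from rdflib import Graph

    # record is the JSON-LD document as a string
    record = json.loads(record)
    record = jsonld.compact(record, record["@context"])
    # rdflib parses serialized JSON-LD, so dump the compacted document back to a string
    g = Graph().parse(data=json.dumps(record), format="json-ld")
    print(g.serialize(format="turtle").strip())

See the following example:

approach 1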

    {
      "@context": {
        "ex": "https://example.org/",
        "@vocab": "https://example.org/",
        "@base": "https://example.org/",
        "id": "@id"
      },
      "id": "example",
      "nest": {
        "key1": "value1",
        "key2": "value2"
      }
    }

->

    @prefix ex: <https://example.org/> .

    ex:example ex:nest [ ex:key1 "value1" ;
                ex:key2 "value2" ] .

In theory, we do not need the “nest” key from the example. It has no actual value, so the “key1” and “key2” properties could be properties of ex:example as well:

approach 2

    {
      "@context": {
        "ex": "https://example.org/",
        "@base": "https://example.org/",
        "nest": "@nest",
        "key1": "ex:nest_key1",
        "key2": "ex:nest_key2"
      },
      "@graph": {
        "@id": "example",
        "nest": {
          "key1": "value1",
          "key2": "value2"
        }
      }
    }

->

    @prefix ex: <https://example.org/> .

    ex:example ex:nest_key1 "value1" ;
        ex:nest_key2 "value2" .

However, JSON allows the same key name to be used unambiguously in different nested structures, and flattening those structures in this way can lead to ambiguity in RDF:

approach 3

    {
      "@context": {
        "ex": "https://example.org/",
        "@base": "https://example.org/",
        "nest1": "@nest",
        "nest2": "@nest",
        "key": "ex:key"
      },
      "@graph": {
        "@id": "example",
        "nest1": {
          "key": "value1"
        },
        "nest2": {
          "key": "value2"
        }
      }
    }

->

    @prefix ex: <https://example.org/> .

    ex:example ex:key "value1",
            "value2" .

The “key” property of “nest1” and the “key” property of “nest2” might have different meanings in the JSON structure, but this distinction is lost in the conversion to RDF. A better way to deal with this is to use ‘scoped contexts’ to mirror the nested structure of the JSON:

approach 4

    {
      "@context": {
        "ex": "https://example.org/",
        "@base": "https://example.org/",
        "nest1": {
          "@id": "ex:nest1",
          "@context": {
            "key": "ex:nest1_key"
          }
        },
        "nest2": {
          "@id": "ex:nest2",
          "@context": {
            "key": "ex:nest2_key"
          }
        }
      },
      "@graph": {
        "@id": "example",
        "nest1": {
          "key": "value1"
        },
        "nest2": {
          "key": "value2"
        }
      }
    }

->

    @prefix ex: <https://example.org/> .

    ex:example ex:nest1 [ ex:nest1_key "value1" ] ;
        ex:nest2 [ ex:nest2_key "value2" ] .

Note that we cannot use the @nest keyword to get rid of the blank nodes that are introduced this way as the scoped context of @nest objects is ignored during conversion, meaning the “key” properties are not included in the resulting RDF graph.

To minimize the use of blank nodes, as they can complicate the data structure, it is recommended to use approach 2 or approach 3 when this does not lead to ambiguity, and to use approach 4 otherwise.
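For larger documents, writing the scoped contexts of approach 4 by hand can be laborious. As a sketch under stated assumptions, they could be generated from the nesting of the JSON itself; the helper `scoped_context` and its naming scheme (joining the key path with underscores) are illustrative, not part of this repository.

```python
import json


def scoped_context(node: dict, namespace: str, path: tuple = ()) -> dict:
    """Build context term definitions that mirror the nesting of `node` (approach 4 style)."""
    terms = {}
    for key, value in node.items():
        if key.startswith("@"):
            continue  # leave JSON-LD keywords such as @id alone
        identifier = namespace + "_".join(path + (key,))
        if isinstance(value, dict):
            # Nested object: the term carries its own scoped context.
            terms[key] = {
                "@id": identifier,
                "@context": scoped_context(value, namespace, path + (key,)),
            }
        else:
            terms[key] = identifier
    return terms


body = {"@id": "example", "nest1": {"key": "value1"}, "nest2": {"key": "value2"}}
context = {"ex": "https://example.org/", "@base": "https://example.org/"}
context.update(scoped_context(body, "ex:"))
print(json.dumps({"@context": context, "@graph": body}, indent=2))
```

For this input the generated context matches the hand-written one in approach 4.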

Use the VALUES keyword to match variables to new types.

In this case we translate schema:eissn to scpo:hasEISSN and schema:pissn to scpo:hasPISSN.

    construct {
      ?journal ?hasissn ?issn .
    }
    where {
      ?journal ?issntype ?issn .
      values (?issntype ?hasissn) {
        (schema:eissn scpo:hasEISSN)
        (schema:pissn scpo:hasPISSN)
      }
    }

Use the OPTIONAL, COALESCE and FILTER keywords in tandem to define an order of preference for specific terms.

In this case, we prefer the e-ISSN of a journal over the p-ISSN. We use the OPTIONAL keyword to make sure that records are not duplicated when both e-ISSN and p-ISSN exist (they will both be matched to the same record). We use the COALESCE keyword to obtain the first defined term in order of preference. Even though both ISSN types are optional, we do want to match on at least one of them; for this we use the FILTER keyword.

    construct {
      ?journal scpo:hasISSN ?issn .
    }
    where {
      optional { ?journal schema:pissn ?pissn } .
      optional { ?journal schema:eissn ?eissn } .
      bind(coalesce(?eissn, ?pissn) as ?issn)
      ?journal ?issntype ?issn .
      filter (?issntype in (schema:eissn, schema:pissn))
    }

It is advisable to split SPARQL queries that construct a PAD into separate queries for the different parts of the assertion. Not only does this simplify each query and improve readability, it also ensures that there are no empty assertions and minimizes the “explosive growth of BNodes”.

GraphDB is an enterprise-grade semantic graph database.

Pros:

- Easy setup

- Extensive modern web-interface

- REST API

- Extensive documentation

Cons:

- Free tier is limited

- Mostly proprietary software

Apache Jena is a set of tools to work with semantic data. Fuseki is the packaged tool to serve a SPARQL endpoint. Jena has its own database-backend, called TDB.

Pros:

- Free and Open Source

- Active development

- Extensive Documentation

- Web-interface

- Flexible Tooling

Cons:

- Almost no configuration via web-interface

- Cumbersome setup

- No first-class integration with RDFLib (parsing a graph with SPARQLStore backend is very slow)

- Bulk import can be difficult

Blazegraph is a performant SPARQL store. It has been acquired by Amazon.

Pros:

- Free and Open Source

- Performant

- Fairly easy setup

Cons:

- Very little development

- Little documentation

- No first-class integration with RDFLib

Virtuoso is a graph database that offers SPARQL and SQL endpoints.

Pros:

- Open Source

- Flexible, not constrained to SPARQL

Cons:

- Not free

- Difficult setup

- No first-class integration with RDFLib

Neo4j is a popular graph database. n10s is an extension that adds semantic technologies to the Neo4j database.

Pros:

- Open Source

- Flexible, not constrained to SPARQL

- Popular, active development

- Extensive documentation

- First class integration with RDFLib

Cons:

- No real support for SPARQL

- n10s is not core functionality