Hiring research interns for neural architecture search projects: houwen.peng@microsoft.com

This is a collection of our AutoML-NAS work.

iRPE (NEW): Rethinking and Improving Relative Position Encoding for Vision Transformer

AutoFormer (NEW): AutoFormer: Searching Transformers for Visual Recognition

Cream (

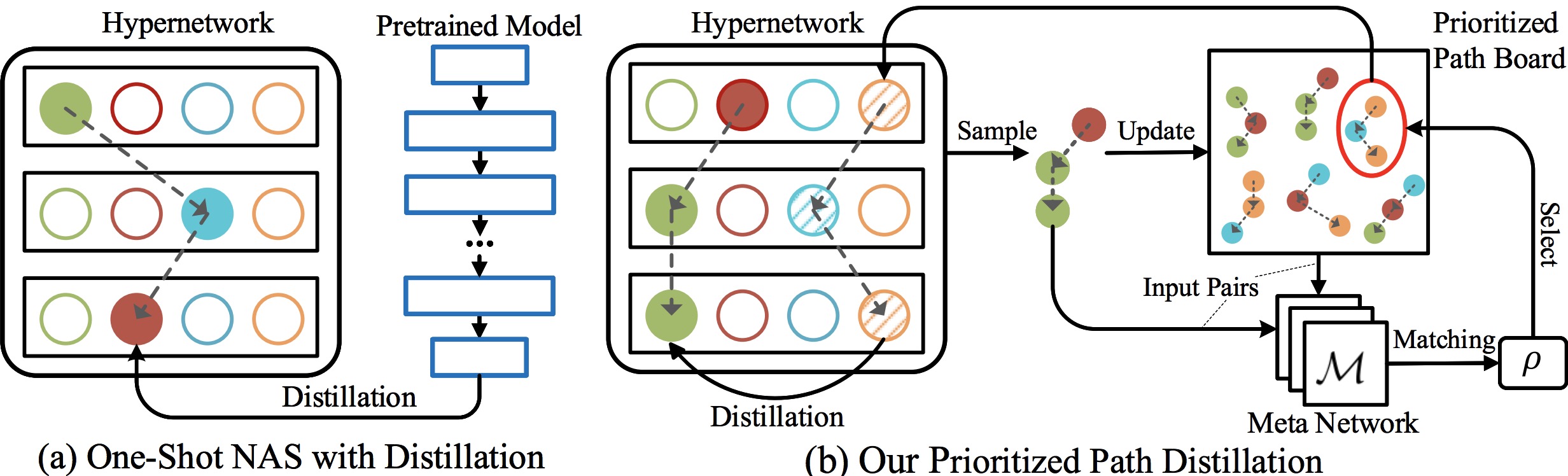

@NeurIPS'20): Cream of the Crop: Distilling Prioritized Paths For One-Shot Neural Architecture Search

- 💥 July, 2021: iRPE is now released [pdf].

- 💥 July, 2021: iRPE was accepted by ICCV'21.

- 💥 July, 2021: AutoFormer was accepted by ICCV'21.

- 💥 July, 2021: AutoFormer is now available on arXiv.

- 💥 Oct, 2020: Code for Cream is now released.

- 💥 Oct, 2020: Cream was accepted by NeurIPS'20.

Image RPE (iRPE for short) methods are new relative position encoding methods dedicated to 2D images. They model directional relative distances as well as the interactions between queries and relative position embeddings in the self-attention mechanism. The proposed iRPE methods are simple and lightweight, and can be easily plugged into transformer blocks. Experiments demonstrate that, solely due to the proposed encoding methods, DeiT and DETR obtain stable improvements of up to 1.5% (top-1 Acc) and 1.3% (mAP) over their original versions on ImageNet and COCO, respectively, without tuning any extra hyperparameters such as learning rate and weight decay. Our ablation and analysis also yield interesting findings, some of which run counter to previous understanding.
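The two ingredients named above (directional 2D relative distances, and a query-dependent relative position term) can be made concrete with a minimal NumPy sketch of single-head self-attention with a contextual relative position encoding. It is a simplification, not the released iRPE code: offsets are clipped to `[-max_dist, max_dist]` instead of going through iRPE's piecewise index function, every `(dy, dx)` pair gets its own bucket so opposite directions stay distinct, and the names `relative_bucket_ids`, `attention_with_contextual_rpe` and `max_dist` are hypothetical.

```python
import numpy as np


def relative_bucket_ids(grid: int, max_dist: int) -> np.ndarray:
    """Map every (query patch, key patch) pair on a grid x grid image to a bucket id.

    Directional: offsets (dy, dx) and (-dy, -dx) land in different buckets.
    Simplification (hypothetical): each offset is clipped to [-max_dist, max_dist].
    """
    ys, xs = np.meshgrid(np.arange(grid), np.arange(grid), indexing="ij")
    coords = np.stack([ys.ravel(), xs.ravel()], axis=1)   # (N, 2) patch coordinates
    rel = coords[:, None, :] - coords[None, :, :]          # (N, N, 2) relative offsets
    rel = np.clip(rel, -max_dist, max_dist) + max_dist     # shift into [0, 2 * max_dist]
    side = 2 * max_dist + 1
    return rel[..., 0] * side + rel[..., 1]                # (N, N) ids in [0, side ** 2)


def attention_with_contextual_rpe(q, k, v, rpe_table, bucket_ids):
    """Single-head self-attention with a query-dependent relative position term."""
    d = q.shape[-1]
    content = q @ k.T                                      # (N, N) query-key scores
    r = rpe_table[bucket_ids]                              # (N, N, d) embedding per pair
    position = np.einsum("id,ijd->ij", q, r)               # q_i . r_{b(i, j)}
    logits = (content + position) / np.sqrt(d)
    logits -= logits.max(axis=-1, keepdims=True)           # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=-1, keepdims=True)         # row-wise softmax
    return weights @ v


rng = np.random.default_rng(0)
grid, dim, max_dist = 4, 8, 2
n = grid * grid
ids = relative_bucket_ids(grid, max_dist)
table = rng.normal(size=((2 * max_dist + 1) ** 2, dim))   # learnable in a real model
q, k, v = (rng.normal(size=(n, dim)) for _ in range(3))
out = attention_with_contextual_rpe(q, k, v, table, ids)
print(out.shape)  # (16, 8)
```

Because the position term is a dot product with the query rather than a fixed scalar bias, the same relative offset can contribute differently depending on the content of the query patch.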

Coming soon!!!

AutoFormer is a new one-shot architecture search framework dedicated to vision transformer search. It entangles the weights of different vision transformer blocks in the same layer during supernet training. Benefiting from this strategy, the trained supernet allows thousands of subnets to be very well trained. Specifically, the performance of these subnets with weights inherited from the supernet is comparable to that of the same subnets retrained from scratch.
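Weight entanglement can be illustrated with a minimal NumPy sketch, assuming a searchable transformer MLP block whose embedding dimension and hidden width vary: every candidate width reads the leading rows and columns of one shared weight matrix, so a smaller block is a slice of a larger one, and training any subnet updates weights that the others reuse. The classes `EntangledLinear` and `EntangledMLP` are hypothetical, ReLU stands in for GELU, and attention heads and depth are left out; this is not the AutoFormer implementation.

```python
import numpy as np


class EntangledLinear:
    """Supernet linear layer: all candidate widths share one weight matrix.

    A candidate with (in_dim, out_dim) uses the top-left slice of the
    largest matrix, so smaller choices are literally part of larger ones.
    """

    def __init__(self, max_in: int, max_out: int, rng: np.random.Generator):
        self.weight = rng.normal(scale=0.02, size=(max_out, max_in))
        self.bias = np.zeros(max_out)

    def forward(self, x: np.ndarray, out_dim: int) -> np.ndarray:
        in_dim = x.shape[-1]
        w = self.weight[:out_dim, :in_dim]  # basic slicing returns a view, not a copy
        return x @ w.T + self.bias[:out_dim]


class EntangledMLP:
    """Transformer MLP block with a searchable hidden width (MLP ratio)."""

    def __init__(self, max_embed: int, max_hidden: int, rng: np.random.Generator):
        self.fc1 = EntangledLinear(max_embed, max_hidden, rng)
        self.fc2 = EntangledLinear(max_hidden, max_embed, rng)

    def forward(self, x: np.ndarray, hidden: int) -> np.ndarray:
        h = np.maximum(self.fc1.forward(x, hidden), 0.0)  # ReLU as a stand-in for GELU
        return self.fc2.forward(h, x.shape[-1])           # project back to the input width


rng = np.random.default_rng(0)
mlp = EntangledMLP(max_embed=64, max_hidden=256, rng=rng)

# Three subnets sampled from the same supernet layer: (embedding dim, hidden dim).
for embed, hidden in [(48, 192), (64, 256), (32, 96)]:
    tokens = rng.normal(size=(10, embed))  # 10 tokens
    print(embed, hidden, mlp.forward(tokens, hidden).shape)
```

Since each slice is a view of the same array, the layer stores only as many parameters as its largest candidate, no matter how many subnets are sampled during supernet training.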

[Paper] [Models-Google Drive] [Models-Baidu Disk (password: wqw6)] [Slides] [BibTeX]

In this work, we present a simple yet effective architecture distillation method. The central idea is that subnetworks can learn collaboratively and teach each other throughout the training process, aiming to boost the convergence of individual models. We introduce the concept of prioritized paths, which refer to the architecture candidates exhibiting superior performance during training. Distilling knowledge from the prioritized paths boosts the training of subnetworks. Since the prioritized paths change on the fly depending on their performance and complexity, the final paths obtained are the cream of the crop.
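A rough sketch of the prioritized-path bookkeeping, assuming each sampled path is scored (for example by accuracy on a held-out mini-batch) and that a fixed FLOPs budget applies. The board keeps the `capacity` best paths; a new path replaces the weakest entry only if it fits the budget and scores higher. As a simplification the teacher is simply the top-scoring path, whereas the paper describes a learned meta network that picks a matching prioritized path for each student, and a standard soft-label KL term stands in for the distillation loss. The class and function names, scores and FLOPs values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Path:
    ops: tuple[int, ...]  # one candidate-operation index per searchable layer
    score: float          # e.g. accuracy on a held-out mini-batch
    flops: float          # complexity of the candidate, e.g. in MFLOPs


class PrioritizedPathBoard:
    """Keeps the `capacity` best-scoring paths that fit a FLOPs budget."""

    def __init__(self, capacity: int, max_flops: float):
        self.capacity = capacity
        self.max_flops = max_flops
        self.paths: list[Path] = []

    def update(self, path: Path) -> bool:
        """Try to insert a freshly evaluated path; return True if it was kept."""
        if path.flops > self.max_flops:
            return False  # violates the complexity budget
        if any(p.ops == path.ops for p in self.paths):
            return False  # already on the board
        if len(self.paths) < self.capacity:
            self.paths.append(path)
        else:
            worst = min(range(len(self.paths)), key=lambda i: self.paths[i].score)
            if path.score <= self.paths[worst].score:
                return False
            self.paths[worst] = path  # replace the weakest entry
        self.paths.sort(key=lambda p: p.score, reverse=True)
        return True

    def teacher(self) -> Path:
        # Simplification: always distill from the top-scoring prioritized path.
        return self.paths[0]


def distillation_loss(student_logits, teacher_logits, temperature=1.0):
    """KL(teacher || student) between temperature-softened class distributions."""
    def softmax(z):
        m = max(z)
        e = [math.exp((v - m) / temperature) for v in z]
        s = sum(e)
        return [x / s for x in e]

    p, q = softmax(teacher_logits), softmax(student_logits)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


board = PrioritizedPathBoard(capacity=2, max_flops=300.0)
candidates = [((0, 1, 2), 0.61, 280.0), ((1, 1, 0), 0.58, 250.0),
              ((2, 2, 2), 0.70, 350.0), ((0, 2, 1), 0.66, 290.0)]
for ops, score, flops in candidates:
    board.update(Path(ops, score, flops))
print([p.ops for p in board.paths])  # [(0, 2, 1), (0, 1, 2)]
```

In this toy run the highest-scoring candidate `(2, 2, 2)` never enters the board because it exceeds the FLOPs budget, which is one way complexity can shape which paths end up acting as teachers.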

```bibtex
@article{chen2021autoformer,
  title={AutoFormer: Searching Transformers for Visual Recognition},
  author={Chen, Minghao and Peng, Houwen and Fu, Jianlong and Ling, Haibin},
  journal={arXiv preprint arXiv:2107.00651},
  year={2021}
}

@article{Cream,
  title={Cream of the Crop: Distilling Prioritized Paths For One-Shot Neural Architecture Search},
  author={Peng, Houwen and Du, Hao and Yu, Hongyuan and Li, Qi and Liao, Jing and Fu, Jianlong},
  journal={Advances in Neural Information Processing Systems},
  volume={33},
  year={2020}
}
```

Licensed under the MIT License.