Accepted by International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI 2024)

Chenxin Li1* Hengyu Liu1* Yifan Liu1* Brandon Y. Feng2 Wuyang Li1 Xinyu Liu1 Zhen Chen3 Jing Shao4 Yixuan Yuan1✉

1CUHK 2MIT CSAIL 3CAS CAIR 4Shanghai AI Lab

* Equal Contributions. ✉ Corresponding Author.

- A high-fidelity medical video generation framework, tested on endoscopy scenes, laying the groundwork for further advancements in the field.

- The first public benchmark for endoscopy video generation, featuring a comprehensive collection of clinical videos and adapting existing general-purpose generative video models for this purpose.

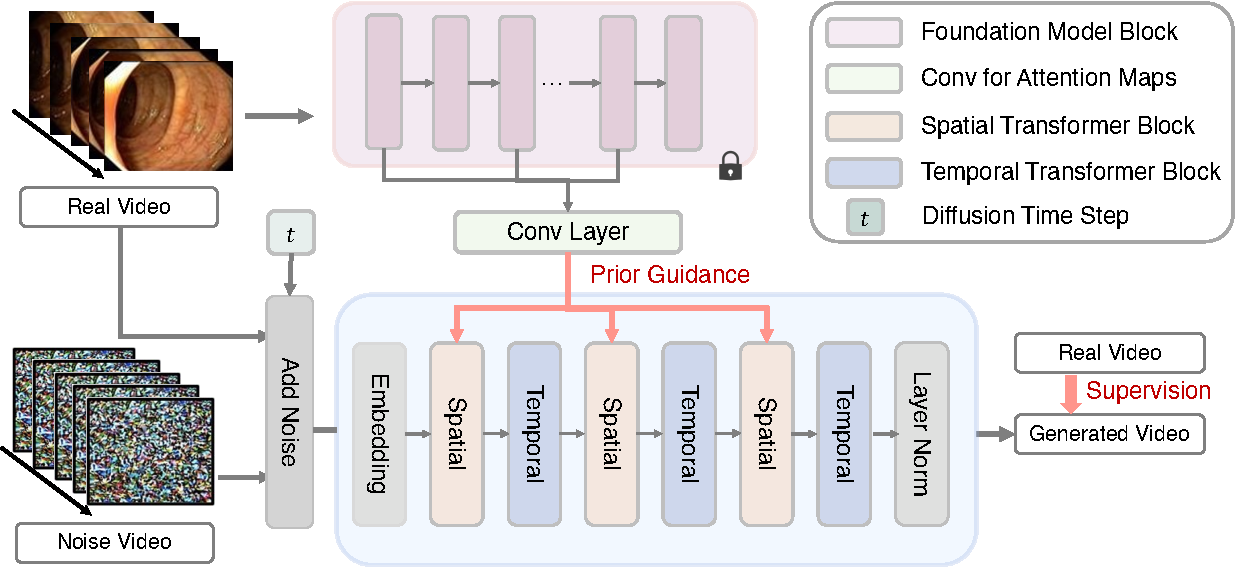

- A novel technique to infuse generative models with features distilled from a 2D visual foundation model, ensuring consistency and quality across different scales.

- Versatility demonstrated through successful applications in video-based disease diagnosis and 3D surgical scene reconstruction, highlighting its potential for downstream medical tasks.

git clone https://github.com/XGGNet/Endora.git

cd Endora

conda create -n Endora python=3.10

conda activate Endora

pip install torch==2.1.2 torchvision==0.16.2 torchaudio==2.1.2 --index-url https://download.pytorch.org/whl/cu118

pip install -r requirements.txt

Tips A: We tested the framework with PyTorch 2.1.2 built against CUDA 11.8. Other versions should also work, but this is not guaranteed.

Tips B: A GPU with 24GB (or more) of memory is recommended for video sampling with Endora, and 48GB (or more) for Endora training.
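The tips above can be turned into a quick environment check. The sketch below is not part of the repository: the helper names `classify_gpu` and `report` are made up for illustration, and the thresholds are simply the numbers quoted in the tips. It compares memory in decimal GB because a nominal 24GB card typically reports slightly less than 24 GiB.

```python
"""Illustrative environment check; helper names are hypothetical, not from the Endora repo."""
import importlib.util

TESTED_TORCH = "2.1.2"  # version quoted in Tips A
TESTED_CUDA = "11.8"    # CUDA build quoted in Tips A
SAMPLING_GB = 24        # recommendation quoted in Tips B
TRAINING_GB = 48        # recommendation quoted in Tips B


def classify_gpu(total_bytes: int) -> str:
    """Map a GPU's total memory (in bytes) to the workloads it is recommended for."""
    size_gb = total_bytes / 1e9  # decimal GB, so nominal "24GB" cards pass the check
    if size_gb >= TRAINING_GB:
        return "training+sampling"
    if size_gb >= SAMPLING_GB:
        return "sampling"
    return "below-recommendation"


def report() -> list[str]:
    """Collect human-readable findings about the local PyTorch/CUDA setup."""
    if importlib.util.find_spec("torch") is None:
        return ["torch is not installed"]
    import torch  # imported lazily so the helpers above work without it

    lines = [
        f"torch {torch.__version__} (tested: {TESTED_TORCH})",
        f"CUDA build {torch.version.cuda} (tested: {TESTED_CUDA})",
    ]
    if not torch.cuda.is_available():
        lines.append("no CUDA device visible")
        return lines
    for index in range(torch.cuda.device_count()):
        props = torch.cuda.get_device_properties(index)
        lines.append(
            f"GPU {index}: {props.name}, {props.total_memory / 1e9:.1f} GB "
            f"-> {classify_gpu(props.total_memory)}"
        )
    return lines


if __name__ == "__main__":
    print("\n".join(report()))
```

A version mismatch is only reported, not treated as an error, since other versions are expected to work as well.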

Colonoscopic: The dataset provided by paper can be found here. You can directly use the processed video data by Endo-FM without further data processing.

Kvasir-Capsule: The dataset provided by paper can be found here. You can directly use the processed video data by Endo-FM without further data processing.

CholecTriplet: The dataset provided by paper can be found here. You can directly use the processed video data by Endo-FM without further data processing.

First, run process_data.py and process_list.py to split the videos into frames and to generate the corresponding list files.

CUDA_VISIBLE_DEVICES=gpu_id python process_data.py -s /path/to/datasets -t /path/to/save/video/frames

CUDA_VISIBLE_DEVICES=gpu_id python process_list.py -f /path/to/video/frames -t /path/to/save/text

The resulting file structure is as follows.

    ├── data
    │   ├── CholecT45
    │   │   ├── 00001.mp4
    │   │   ├── ...
    │   ├── Colonoscopic
    │   │   ├── 00001.mp4
    │   │   ├── ...
    │   ├── Kvasir-Capsule
    │   │   ├── 00001.mp4
    │   │   ├── ...
    │   ├── CholecT45_frames
    │   │   ├── train_128_list.txt
    │   │   ├── 00001
    │   │   │   ├── 00000.jpg
    │   │   │   ├── ...
    │   │   ├── ...
    │   ├── Colonoscopic_frames
    │   │   ├── train_128_list.txt
    │   │   ├── 00001
    │   │   │   ├── 00000.jpg
    │   │   │   ├── ...
    │   │   ├── ...
    │   ├── Kvasir-Capsule_frames
    │   │   ├── train_128_list.txt
    │   │   ├── 00001
    │   │   │   ├── 00000.jpg
    │   │   │   ├── ...
    │   │   ├── ...
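Before training, it can help to confirm that the processed data matches this layout. The following is a minimal sketch, not a script shipped with the repository: `check_layout` is a hypothetical helper, and it assumes only what the tree shows (videos as `.mp4`, one frame folder per video containing `.jpg` files, and a `train_128_list.txt` in each `*_frames` folder). It does not inspect the contents of the list file.

```python
from pathlib import Path

# Folder and file names taken from the tree above.
DATASETS = ("CholecT45", "Colonoscopic", "Kvasir-Capsule")
LIST_NAME = "train_128_list.txt"


def check_layout(data_root: Path) -> list[str]:
    """Return a list of layout problems under data_root; an empty list means it matches."""
    problems = []
    for name in DATASETS:
        video_dir = data_root / name
        frames_dir = data_root / f"{name}_frames"

        # Raw videos: <data_root>/<dataset>/*.mp4
        if not video_dir.is_dir() or not any(video_dir.glob("*.mp4")):
            problems.append(f"{video_dir}: no .mp4 videos found")

        # Extracted frames: <data_root>/<dataset>_frames/<video id>/*.jpg
        if not frames_dir.is_dir():
            problems.append(f"{frames_dir}: frame folder missing")
            continue
        if not (frames_dir / LIST_NAME).is_file():
            problems.append(f"{frames_dir / LIST_NAME}: list file missing")
        clip_dirs = sorted(p for p in frames_dir.iterdir() if p.is_dir())
        if not clip_dirs:
            problems.append(f"{frames_dir}: no per-video frame folders found")
        for clip in clip_dirs:
            if not any(clip.glob("*.jpg")):
                problems.append(f"{clip}: no .jpg frames found")
    return problems
```

Calling `check_layout(Path("data"))` from the repository root and printing the returned messages gives a quick list of anything that still needs to be processed.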

You can sample endoscopy videos directly from a model checkpoint. Here is a quick example using our pre-trained models:

- Download the pre-trained weights from here and put them in the paths defined in the configs.

- Run sample.py via the following scripts, which you can edit to customize arguments such as the number of sampling steps.

Simple Sample to generate a video

bash sample/col.sh

bash sample/kva.sh

bash sample/cho.sh

DDP sample

bash sample/col_ddp.sh

bash sample/kva_ddp.sh

bash sample/cho_ddp.sh

The weights of the pre-trained DINO can be found here; our implementation uses ViT-B/8 when training Endora. The path to the saved weights needs to be edited in ./configs

Train Endora with the resolution of 128x128 with N GPUs on the Colonoscopic dataset

torchrun --nnodes=1 --nproc_per_node=N train.py \
  --config ./configs/col/col_train.yaml \
  --port PORT \
  --mode type_cnn \
  --prr_weight 0.5 \
  --pretrained_weights /path/to/pretrained/DINO

Train Endora with the scripts in ./train_scripts

bash train_scripts/col/train_col.sh

bash train_scripts/kva/train_kva.sh

bash train_scripts/cho/train_cho.sh

We first split the generated videos into frames and then use the code from StyleGAN to evaluate the model in terms of FVD, FID and IS.
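For reference, these three metrics have standard definitions that are independent of this repository; the symbols below are introduced here for illustration only. FID and FVD are both Fréchet distances between Gaussians fitted to features of real (r) and generated (g) data, conventionally computed on per-frame Inception features for FID and on per-clip I3D features for FVD. IS is computed from the label distribution p(y|x) that a pre-trained classifier predicts for generated samples.

```latex
\begin{align}
d_{\mathrm{F}}^{2} &= \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left(\Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2}\right) \\
\mathrm{IS} &= \exp\!\left(\mathbb{E}_{x \sim p_g}\,
  D_{\mathrm{KL}}\!\left(p(y \mid x)\,\Vert\,p(y)\right)\right)
\end{align}
```

Here μ and Σ are the mean and covariance of the extracted features. Lower is better for FVD and FID, and higher is better for IS.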

Test with process_data.py and code in stylegan-v

CUDA_VISIBLE_DEVICES=gpu_id python process_data.py -s /path/to/generated/video -t /path/to/video/frames

cd /path/to/stylegan-v

CUDA_VISIBLE_DEVICES=gpu_id python ./src/scripts/calc_metrics_for_dataset.py \
  --fake_data_path /path/to/video/frames \
  --real_data_path /path/to/dataset/frames

Test with script test.sh

bash test.sh

We provide the training and testing scripts of the compared methods for endoscopy video generation (as shown in Table 1. Quantitative Comparison in the paper).

Please enter Other-Methods/ for more details. We will keep cleaning up the code.

The pre-trained weights for all the comparison methods are available here.

Here is an overview of performance and checkpoints on the Colonoscopic dataset.

| Method | FVD↓ | FID↓ | IS↑ | Checkpoints |
|---|---|---|---|---|
| StyleGAN-V | 2110.7 | 226.14 | 2.12 | Link |
| LVDM | 1036.7 | 96.85 | 1.93 | Link |
| MoStGAN-V | 468.5 | 53.17 | 3.37 | Link |
| Endora (Ours) | 460.7 | 13.41 | 3.90 | Link |
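To read the table at a glance, the short sketch below picks the best method per metric and computes Endora's margin over the strongest other method. The scores are copied from the table; the helper names `best_method` and `relative_gain` are illustrative and not part of the repository.

```python
# Scores copied from the table above, as (FVD, FID, IS).
RESULTS = {
    "StyleGAN-V": (2110.7, 226.14, 2.12),
    "LVDM": (1036.7, 96.85, 1.93),
    "MoStGAN-V": (468.5, 53.17, 3.37),
    "Endora (Ours)": (460.7, 13.41, 3.90),
}
# Metric name -> (column index, True if higher is better).
METRICS = {"FVD": (0, False), "FID": (1, False), "IS": (2, True)}


def best_method(metric: str) -> str:
    """Name of the method with the best score for the given metric."""
    col, higher_is_better = METRICS[metric]
    pick = max if higher_is_better else min
    return pick(RESULTS, key=lambda name: RESULTS[name][col])


def relative_gain(metric: str, method: str = "Endora (Ours)") -> float:
    """Improvement of `method` over the best other method, as a fraction of that method's score."""
    col, higher_is_better = METRICS[metric]
    ours = RESULTS[method][col]
    others = [scores[col] for name, scores in RESULTS.items() if name != method]
    rival = max(others) if higher_is_better else min(others)
    diff = ours - rival if higher_is_better else rival - ours
    return diff / rival


if __name__ == "__main__":
    for metric in METRICS:
        print(f"{metric}: best = {best_method(metric)}, "
              f"gain over next best = {relative_gain(metric):.1%}")
```

On these numbers the margin over MoStGAN-V is largest for FID (roughly 75% lower) and small for FVD (under 2%).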

We also provide training scripts for the other variants of Endora (as shown in Table 3. Ablation Studies in the paper). Training and sampling scripts are in train_scripts/ablation and sample/ablation respectively.

bash train_scripts/ablation/train_col_ablation{i}.sh  # e.g., i=1 to run the 1st-row ablation experiments.

bash sample/ablation/col_ddp_ablation{i}.sh  # e.g., i=1 to run the 1st-row ablation experiments.

| Modified Diffusion | Spatiotemporal Encoding | Prior Guidance | FVD↓ | FID↓ | IS↑ | Checkpoints |
|---|---|---|---|---|---|---|
| ❌ | ❌ | ❌ | 611.9 | 22.44 | 3.61 | Link |
| ✅ | ❌ | ❌ | 593.7 | 17.75 | 3.65 | Link |
| ✅ | ✅ | ❌ | 493.5 | 13.88 | 3.89 | Link |
| ✅ | ✅ | ✅ | 460.7 | 13.41 | 3.90 | Link |

We provide the steps for reproducing the results of extending Endora to downstream applications (as shown in Section 3.3 in the paper).

Please follow the steps:

- Enter the path "Downstream-Semi/"

- Download PolypDiag dataset provided by paper from here. You can directly use the processed video data by Endo-FM without further data processing.

- Run the script `bash semi_baseline.sh` to obtain the supervised-only lower bound of semi-supervised disease diagnosis.

- Sample the endoscopy videos on Colonoscopic and CholecTriplet as augmented data. We also provide the sampled videos here for direct usage.

- Run the script `bash semi_gen.sh` for semi-supervised disease diagnosis using the augmented unlabeled data.

| Method | Colonoscopic | CholecTriplet |
|---|---|---|
| Supervised-only | 74.5 | 74.5 |
| LVDM | 76.2 | 78.0 |
| Endora (Ours) | 87.0 | 82.0 |

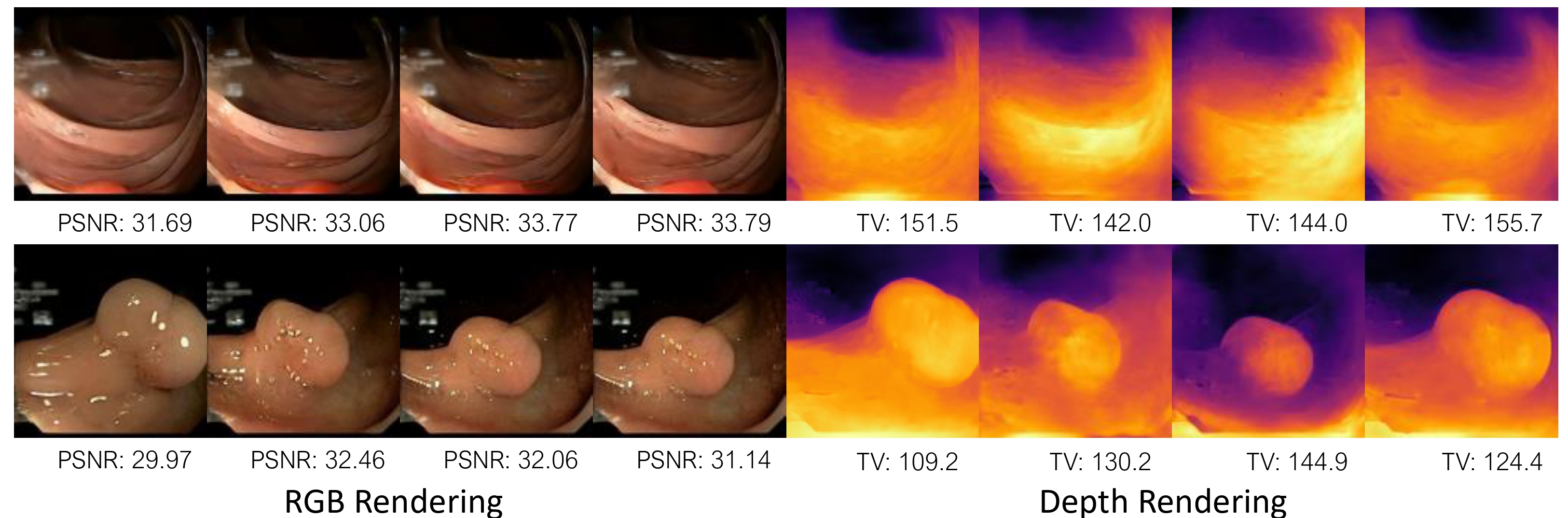

Please follow the steps:

- Run COLMAP on the generated videos as the point initialization.

- Use EndoGaussian to train a 3D Gaussian Splatting representation.

Videos of Rendered RGB & Rendered Depth

- Release code for Endora

- Clean up the code for Endora

- Upload the ckpt for compared methods.

- Clean up the codes for training compared methods.

Greatly appreciate the tremendous effort for the following projects!

If you find this work helpful for your project, please consider citing the following paper:

@article{li2024endora,
  author = {Chenxin Li and Hengyu Liu and Yifan Liu and Brandon Y. Feng and Wuyang Li and Xinyu Liu and Zhen Chen and Jing Shao and Yixuan Yuan},
  title = {Endora: Video Generation Models as Endoscopy Simulators},
  journal = {arXiv preprint arXiv:2403.11050},
  year = {2024}
}