Official PyTorch implementation of the paper:

ArtFlow: Unbiased Image Style Transfer via Reversible Neural Flows

Jie An*, Siyu Huang*, Yibing Song, Dejing Dou, Wei Liu and Jiebo Luo

CVPR 2021

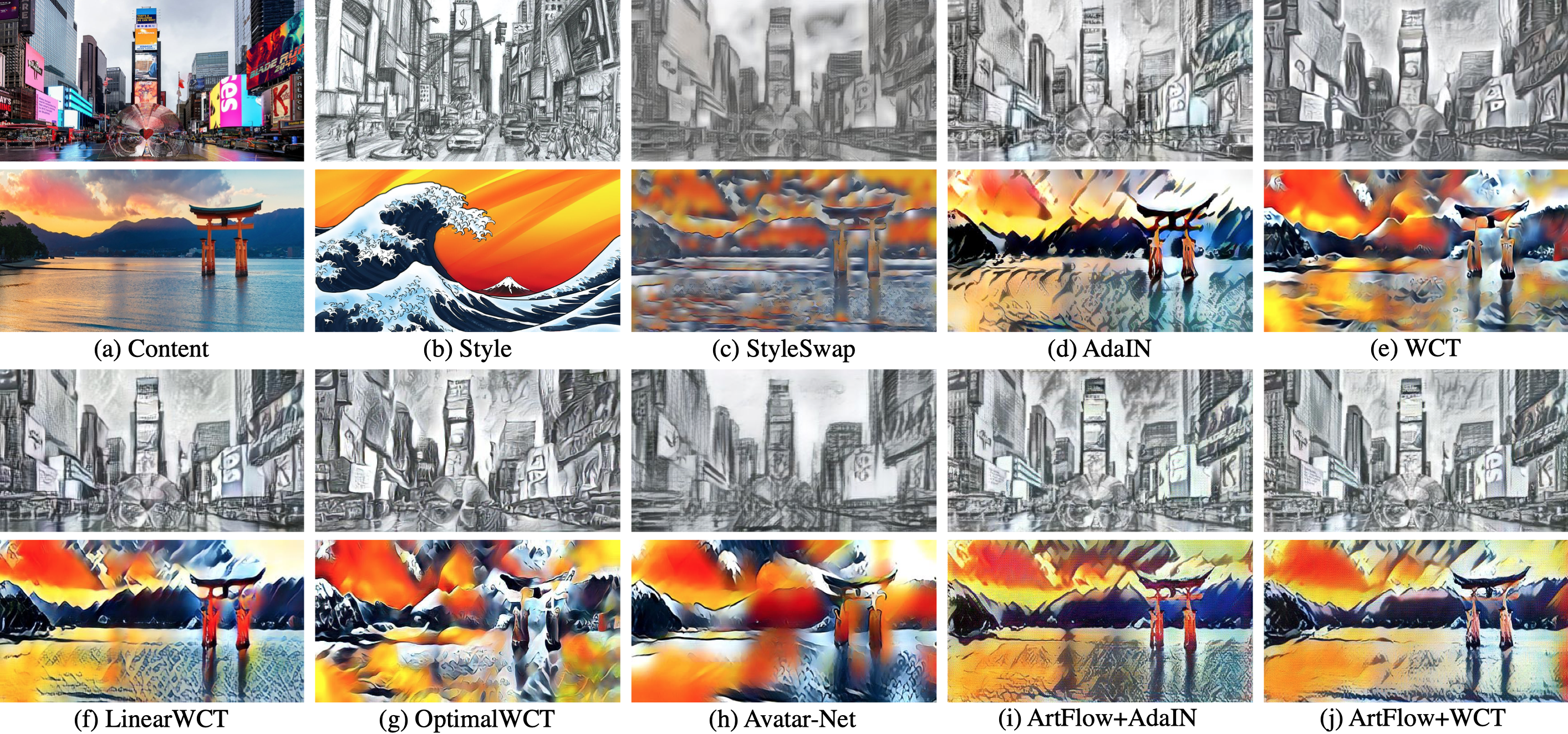

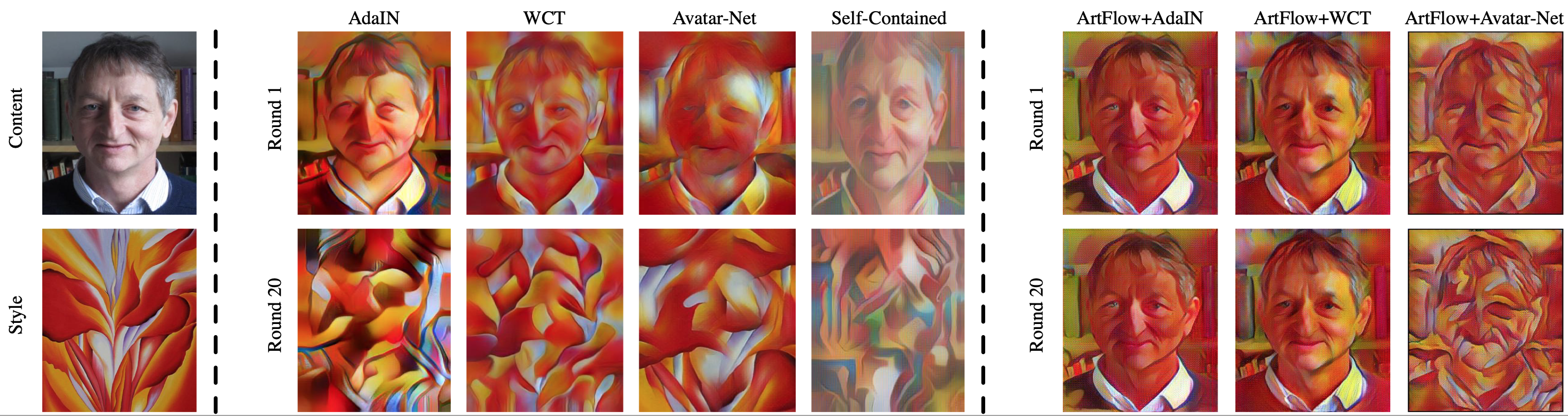

ArtFlow is a universal style transfer method that consists of reversible neural flows and an unbiased feature transfer module. Instead of the usual encoder-transfer-decoder pipeline, ArtFlow adopts a projection-transfer-reversion scheme. This avoids the content leak issue of existing style transfer methods and consequently keeps the result unbiased when style transfer is performed continuously.
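To illustrate why a reversible flow can be reverted without losing content, here is a minimal sketch of an additive coupling layer in plain Python. It is a toy and not the ArtFlow network: the names project, revert and shift_fn are hypothetical, shift_fn stands in for a learned sub-network, and the input is assumed to have an even number of values.

```python
# Toy additive coupling layer. NOT the ArtFlow model; shift_fn is a
# hypothetical stand-in for a learned sub-network.

def shift_fn(values):
    """Arbitrary function of the untouched half; it never has to be inverted."""
    return [3.0 * v + 1.0 for v in values]


def project(x):
    """Forward pass: keep the first half, shift the second half."""
    half = len(x) // 2  # assumes an even number of values
    x1, x2 = x[:half], x[half:]
    y2 = [b + s for b, s in zip(x2, shift_fn(x1))]
    return x1 + y2


def revert(y):
    """Inverse pass: recompute the same shift from the kept half and subtract it."""
    half = len(y) // 2
    y1, y2 = y[:half], y[half:]
    x2 = [b - s for b, s in zip(y2, shift_fn(y1))]
    return y1 + x2


pixels = [1.0, 2.0, 3.0, 4.0]
features = project(pixels)   # projection
restored = revert(features)  # reversion recovers the input
```

Because the shift is recomputed from the half that was left untouched, the inverse needs no approximation, which is the property a separately trained decoder does not guarantee.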

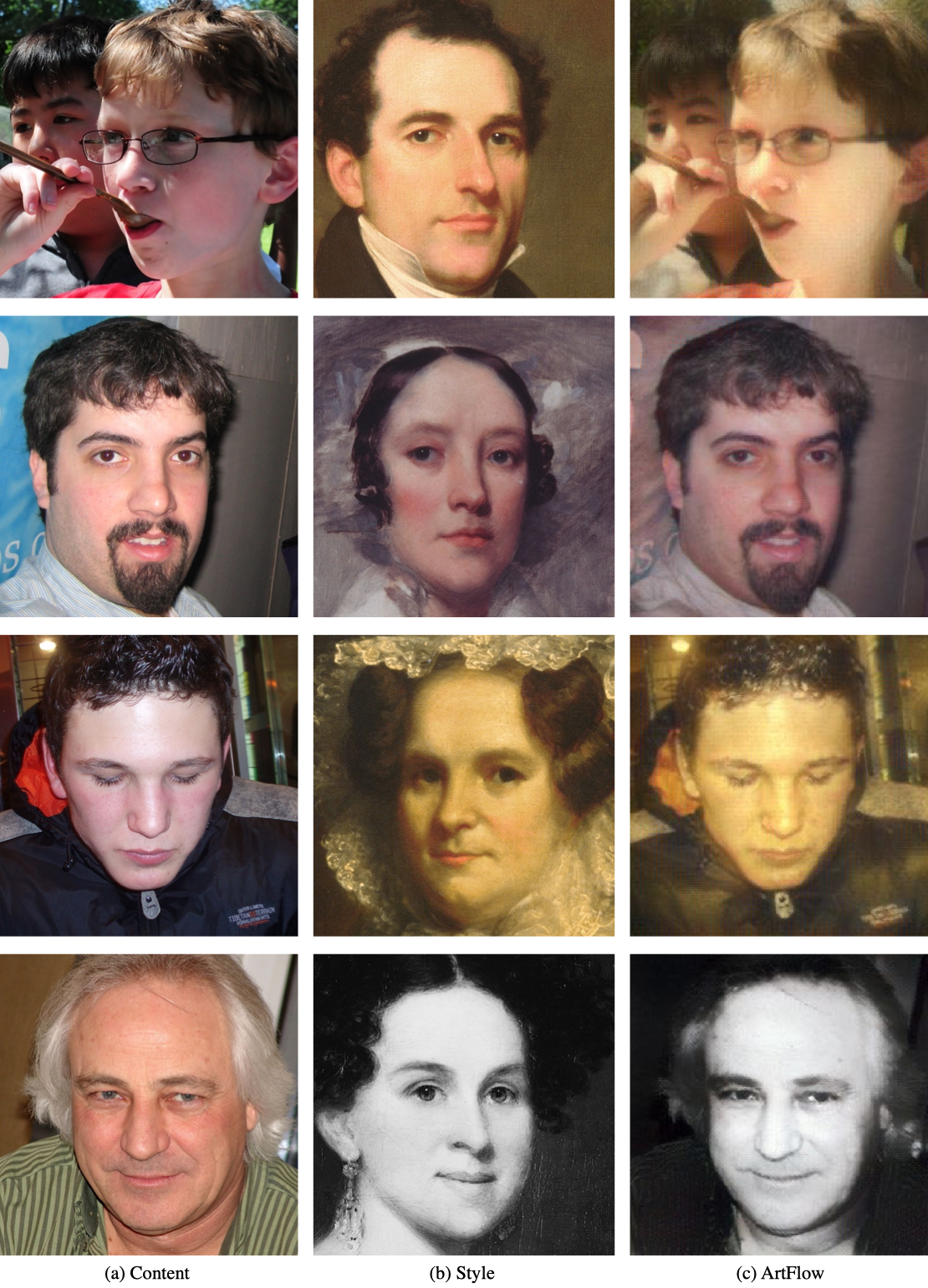

We also train a model with the FFHQ dataset as the content and Metfaces as the style to convert a portrait photo into an artwork.

When style transfer is performed continuously with an existing style transfer algorithm, the produced result gradually loses the details of the content image. This is the content leak issue, and ArtFlow is designed to avoid it.

- Python=3.6

- PyTorch=1.8.1

- CUDA=10.2

- cuDNN=7.6

- Scipy=1.5.2

Optionally, if you are a conda user, you can execute the following command in the directory of this repository to create a new environment with all dependencies installed.

conda env create -f environment.yaml

To perform style transfer with the pre-trained models, download them from Google Drive and put the downloaded experiments directory under the root of this repository. Then run the following command from the root of the repository.

The command with the default settings is:

CUDA_VISIBLE_DEVICES=0 python3 -u test.py --content_dir data/content --style_dir data/style --size 256 --n_flow 8 --n_block 2 --operator adain --decoder experiments/ArtFlow-AdaIN/glow.pth --output output_ArtFlow-AdaIN

- content_dir: path for the content images. Default is data/content.

- style_dir: path for the style images. Default is data/style.

- size: image size for style transfer. Default is 256.

- n_flow: number of flow modules per block in the backbone network. Default is 8.

- n_block: number of blocks in the backbone network. Default is 2.

- operator: style transfer module. Options: [adain, wct, decorator].

- decoder: path for the pre-trained model. If you set --operator wct, load the pre-trained model with --decoder experiments/ArtFlow-WCT/glow.pth. If you use AdaIN, set --decoder experiments/ArtFlow-AdaIN/glow.pth. For portrait style transfer, set --operator adain and --decoder experiments/ArtFlow-AdaIN-Portrait/glow.pth.

- output: path of the output directory.

This code will produce a style transferred image for every content-style combination in your designated directories.
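The pairing between operator and checkpoint described above, and the "every content-style combination" behaviour, can be sketched as follows. The helper names decoder_for and content_style_pairs, the DECODERS dictionary and the file names are hypothetical and only for illustration; test.py itself takes --operator and --decoder directly.

```python
from itertools import product

# Checkpoint paths as listed in the options above. The dictionary and its
# "portrait" key are illustrative and not part of the repository.
DECODERS = {
    "adain": "experiments/ArtFlow-AdaIN/glow.pth",
    "wct": "experiments/ArtFlow-WCT/glow.pth",
    "portrait": "experiments/ArtFlow-AdaIN-Portrait/glow.pth",
}


def decoder_for(operator, portrait=False):
    """Return the checkpoint path that matches an operator (hypothetical helper)."""
    if portrait:
        if operator != "adain":
            raise ValueError("portrait transfer is documented with --operator adain only")
        return DECODERS["portrait"]
    if operator not in ("adain", "wct"):
        raise ValueError(f"no checkpoint path is documented for operator {operator!r}")
    return DECODERS[operator]


def content_style_pairs(content_names, style_names):
    """Pair every content image with every style image."""
    return list(product(content_names, style_names))


# Two content images and three style images give six outputs.
pairs = content_style_pairs(["a.jpg", "b.jpg"], ["s1.jpg", "s2.jpg", "s3.jpg"])
```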

We provide a script that performs style transfer on a content image with a series of style images to demonstrate that our code can avoid the content leak issue. The command with the default settings is:

CUDA_VISIBLE_DEVICES=0 python3 continuous_transfer.py --content_dir data/content --style_dir data/style --size 256 --n_flow 8 --n_block 2 --operator adain --decoder experiments/ArtFlow-AdaIN/glow.pth --output output_ArtFlow-AdaIN

All parameters are the same as the style transfer part above.
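As a rough picture of what continuous transfer means, the sketch below feeds each stylized output back in as the next content. This is an assumption about the general procedure, not a copy of continuous_transfer.py; the function continuous_transfer and the toy_transfer stand-in are hypothetical, and the stand-in replaces the real model so the example runs anywhere.

```python
def continuous_transfer(content, styles, transfer):
    """Apply transfer once per style, feeding each output back in as the next content."""
    results = []
    current = content
    for style in styles:
        current = transfer(current, style)
        results.append(current)
    return results


def toy_transfer(content, style):
    """Toy stand-in for a model: records the chain of applied styles as a string."""
    return f"{content}>{style}"


outputs = continuous_transfer("photo", ["s1", "s2", "s3"], toy_transfer)
```

With a real model in place of toy_transfer, comparing the last output against the original content image is one way to see whether details have leaked away.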

To test the style transfer performance of the pre-trained models with the given content and style images under the data directory, run the following commands:

bash test_adain.sh

The style transfer results will be saved in output_ArtFlow-AdaIN.

bash test_wct.sh

The style transfer results will be saved in output_ArtFlow-WCT.

To train ArtFlow yourself, first download the ImageNet pre-trained VGG19 model from Google Drive and put the downloaded models directory under the root of the repository. Then run the following command.

CUDA_VISIBLE_DEVICES=0,1 python3 -u train.py --content_dir $training_content_dir --style_dir $training_style_dir --n_flow 8 --n_block 2 --operator adain --save_dir $param_save_dir --batch_size 4

- content_dir: path for the training content images.

- style_dir: path for the training style images.

- n_flow: number of flow modules per block in the backbone network. Default is 8.

- n_block: number of blocks in the backbone network. Default is 2.

- operator: style transfer module. Options: [adain, wct, decorator].

- save_dir: path for saving the trained model.
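For readers unfamiliar with the adain option, the sketch below shows adaptive instance normalization on a single feature channel given as a flat list. It is a simplified illustration and not the repository's implementation: it uses the population standard deviation, a hypothetical eps default, and plain Python instead of PyTorch tensors.

```python
from statistics import fmean, pstdev


def adain(content_feat, style_feat, eps=1e-5):
    """Re-scale one content channel so its mean and spread match the style channel."""
    c_mean = fmean(content_feat)
    c_std = pstdev(content_feat) + eps  # eps guards against division by zero
    s_mean = fmean(style_feat)
    s_std = pstdev(style_feat)
    return [s_std * (v - c_mean) / c_std + s_mean for v in content_feat]


# Toy feature values, chosen only for illustration.
out = adain([0.0, 2.0, 4.0], [10.0, 10.0, 13.0])
```

The output keeps the relative ordering of the content values while taking on the style channel's mean and, up to eps, its standard deviation.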

The datasets we used for training in our experiments are as follows:

| Model | Content | Style |
|---|---|---|
| General | MS_COCO | WikiArt |
| Portrait | FFHQ | Metfaces |

To reproduce the models in our experiments, use the following two bash scripts, which contain our settings:

bash train_adain.sh

bash train_wct.sh

Please note that you may need to change the paths of the training content and style datasets in the above two bash scripts.

@inproceedings{artflow2021,
  title={ArtFlow: Unbiased image style transfer via reversible neural flows},
  author={An, Jie and Huang, Siyu and Song, Yibing and Dou, Dejing and Liu, Wei and Luo, Jiebo},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  year={2021}
}

We thank the authors of glow-pytorch, AdaIN and WCT; we benefited a lot from their code and papers.

If you have any questions, please do not hesitate to contact jan6@cs.rochester.edu and huangsiyutc@gmail.com.