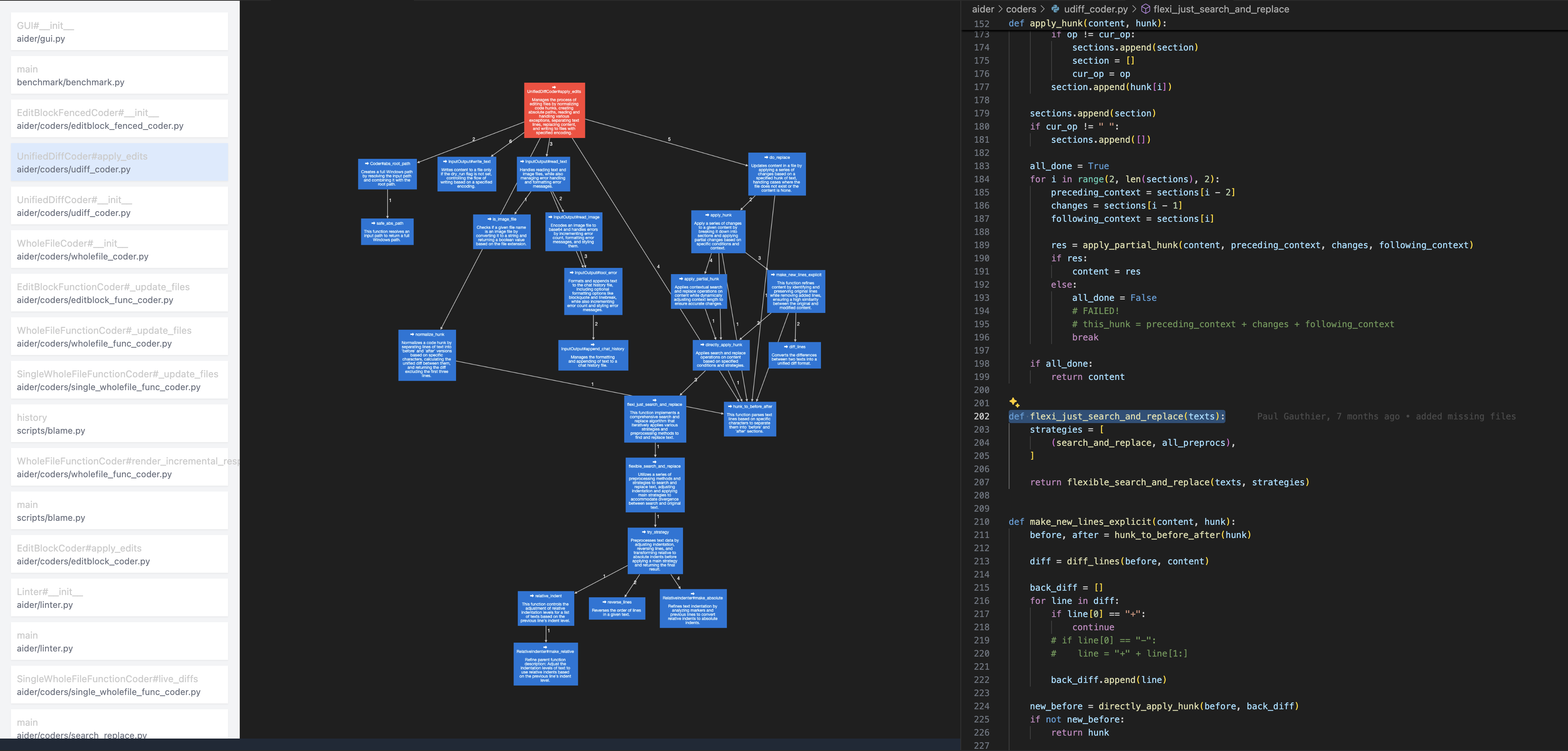

Code Charter is a tool designed to enhance the developer experience by transforming complex code into diagrams that distill key user-centric patterns. This tool addresses the need for clearer code understanding and more efficient development processes, enabling developers to think at a higher level and quickly grasp essential patterns in both their own and others' codebases. By visualising code structures, Code Charter aims to accelerate the learning curve and improve code quality.

Code Charter is currently in development and will be released soon as a VS Code extension. Its roadmap includes integrations with coding agent libraries. These integrations will facilitate greater collaboration by improving the observability and interactivity of AI-powered assistants.

To use Code Charter, ensure you have the following installed and running:

- Docker: Docker is required to manage the containerised environment for Code Charter. You can download and install Docker from Docker's official website.

- Ollama: Ollama is necessary for specific functionalities within Code Charter. Make sure Ollama is installed and running on your system. You can find more information and download links on Ollama's website.

- Download the Code Charter Extension: The extension can be downloaded from the Visual Studio Code marketplace.

Once installed and configured, you can start using Code Charter to visualise and understand your codebase more effectively. Detailed usage instructions and examples will be available in the official documentation.
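As a quick sanity check before installing the extension, the sketch below looks for the two prerequisite command-line tools on your `PATH`. It is not part of Code Charter. The helper name `missing_tools` is hypothetical, and the check only confirms that the `docker` and `ollama` executables are installed, not that their services are running.

```python
import shutil


def missing_tools(tools=("docker", "ollama"), which=shutil.which):
    """Return the required command-line tools that are not found on PATH.

    `which` is injectable so the check can be exercised without the tools
    being installed; by default it is the standard library's shutil.which.
    """
    return [tool for tool in tools if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing prerequisites: " + ", ".join(missing))
    else:
        print("Docker and Ollama executables found on PATH.")
```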

- Detect call graphs in a codebase.

- Rank these call graphs by node count, on the assumption that call graphs with more nodes are more important.

- Create summaries of functions and render them in a diagram.

- Click on a diagram node to navigate to the function in the codebase, so developers can move quickly between high and low levels of abstraction.

- Regenerate summaries as the codebase changes.

- Enable the user to edit the summaries.

- Enable the user to delete, merge or add nodes.

- Enable the user to edit the summarisation prompt to refine it for specific use cases.

- Add other summary targets (other than distilling business logic).
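The first two items in the list above (detecting call graphs and ranking them by node count) can be illustrated with a minimal sketch. This is not Code Charter's implementation. The input shape (a list of `(caller, callee)` pairs) and the function names `build_call_graphs` and `rank_by_node_count` are assumptions made for illustration.

```python
from collections import defaultdict


def build_call_graphs(calls):
    """Group (caller, callee) pairs into call graphs keyed by entry point.

    An entry point is a function that calls others but is never called
    itself. Groups of functions that only call each other in a cycle have
    no entry point and are not reported by this simplified sketch.
    """
    children = defaultdict(set)
    callees = set()
    for caller, callee in calls:
        children[caller].add(callee)
        callees.add(callee)

    graphs = {}
    for root in (name for name in children if name not in callees):
        seen, stack = set(), [root]
        while stack:
            node = stack.pop()
            if node in seen:
                continue  # already visited; also guards against cycles
            seen.add(node)
            stack.extend(children.get(node, ()))
        graphs[root] = seen
    return graphs


def rank_by_node_count(graphs):
    """Order call graphs from most to fewest nodes, ties broken by name."""
    return sorted(graphs.items(), key=lambda item: (-len(item[1]), item[0]))


calls = [
    ("main", "load"),
    ("main", "process"),
    ("process", "validate"),
    ("cli", "load"),
]
ranked = rank_by_node_count(build_call_graphs(calls))
for root, nodes in ranked:
    print(root, len(nodes))
```

Here the graph rooted at `main` has four nodes and the one rooted at `cli` has two, so `main` is listed first.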

Language support depends on the availability of SCIP indexers for each language.

| Language | Supported |
|---|---|
| Java, Scala, Kotlin | |
| TypeScript, JavaScript | |
| Rust | |
| C++, C | |
| Ruby | |
| Python | ✔ |
| C#, Visual Basic | |
| Dart | |
| PHP | |

- Index the codebase using the SCIP protocol.

- Parse the SCIP index, detect call graphs, and output a JSON file containing them.

- Summarise the individual functions in the call graphs via an LLM, focusing on business logic.

- Re-summarise functions alongside their child-node functions to reduce repetition and improve the narrative flow of the diagram.
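The two summarisation passes described above can be sketched as follows. This is a minimal illustration, not the project's code. The call graph shape (a dict mapping each function to its callees), the `summarise` and `refine` callables, and the choice to feed first-pass child summaries into the second pass are all assumptions. The stand-in functions below replace the real LLM calls so the example runs without a model.

```python
def reachable(graph, root):
    """Return the functions reachable from root, in depth-first order."""
    seen, order, stack = set(), [], [root]
    while stack:
        node = stack.pop()
        if node in seen:
            continue  # skip repeats, including cycles
        seen.add(node)
        order.append(node)
        stack.extend(reversed(graph.get(node, [])))
    return order


def summarise_call_graph(graph, root, summarise, refine):
    """Run both passes over one call graph and return name -> summary."""
    names = reachable(graph, root)
    # Pass 1: summarise each function on its own.
    first_pass = {name: summarise(name) for name in names}
    # Pass 2: re-summarise each function alongside its direct children.
    return {
        name: refine(
            name,
            first_pass[name],
            {child: first_pass[child] for child in graph.get(name, [])},
        )
        for name in names
    }


# Hypothetical stand-ins for the LLM calls.
def fake_summarise(name):
    return f"{name} does its own work"


def fake_refine(name, own_summary, child_summaries):
    if not child_summaries:
        return own_summary
    return f"{own_summary}, delegating to {', '.join(child_summaries)}"


graph = {"main": ["load", "process"], "process": ["validate"]}
result = summarise_call_graph(graph, "main", fake_summarise, fake_refine)
print(result["main"])
```

Passing the summarisers in as callables keeps the traversal independent of any particular model or prompt, which also makes it straightforward to swap in a different summary target later.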

MIT License. See LICENSE for more information.