Another TensorFlow implementation of *Learning from Simulated and Unsupervised Images through Adversarial Training* (SimGAN).

Thanks to TaeHoon Kim's implementation, I was able to run a SimGAN that generates a refined synthetic eye dataset.

This is another version of his code, adapted to generate a refined NYU hand dataset.

The structures of the refiner and discriminator networks were changed to follow the ones described in the Apple paper.

The only file added in this version is `./data/hand_data.py`; the rest of the code runs the same way as in the original version.

To set up the environment (or to run on the UnityEyes dataset), please follow the instructions in this link.

### Notes

- The NYU hand dataset is preprocessed (e.g., background removed).
- The image size is set to 128x128.
- The buffer and batch sizes were reduced due to memory limits.
- The sizes of the refiner and discriminator networks were changed.
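In the SimGAN paper, discriminator training is stabilized with a history of refined images: half of each discriminator mini-batch comes from the current refiner and half from a buffer of previously refined images, and half of each new batch is written back into the buffer. Assuming the buffer mentioned above plays this role, the following is a minimal NumPy sketch of the idea. `ImageHistoryBuffer` and `discriminator_batch` are hypothetical names, not code from this repository or `hand_data.py`.

```python
import numpy as np


class ImageHistoryBuffer:
    """Hypothetical fixed-size history of previously refined images."""

    def __init__(self, max_size, image_shape, seed=0):
        self.max_size = max_size
        self.images = np.zeros((max_size,) + tuple(image_shape), dtype=np.float32)
        self.count = 0  # number of valid images currently stored
        self.rng = np.random.default_rng(seed)

    def add(self, batch):
        """Fill empty slots first, then overwrite random stored images."""
        for image in batch:
            if self.count < self.max_size:
                self.images[self.count] = image
                self.count += 1
            else:
                self.images[self.rng.integers(self.max_size)] = image

    def sample(self, n):
        """Return n stored images drawn at random (with replacement)."""
        if self.count == 0:
            raise ValueError("buffer is empty")
        idx = self.rng.integers(self.count, size=n)
        return self.images[idx]  # fancy indexing returns a copy


def discriminator_batch(buffer, refined):
    """Mix the current refiner output with history: half new, half old."""
    half = len(refined) // 2
    if buffer.count == 0:
        mixed = refined  # nothing stored yet, use the fresh batch only
    else:
        mixed = np.concatenate([refined[:half], buffer.sample(len(refined) - half)])
    buffer.add(refined[:half])  # write half of the new batch into the history
    return mixed


if __name__ == "__main__":
    buf = ImageHistoryBuffer(max_size=64, image_shape=(128, 128, 1))
    refined = np.random.rand(16, 128, 128, 1).astype(np.float32)
    print(discriminator_batch(buf, refined).shape)  # (16, 128, 128, 1)
```

A smaller `max_size` lowers memory use at the cost of a shorter history, which is the trade-off a reduced buffer size makes.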

## Results

Given these synthetic images:

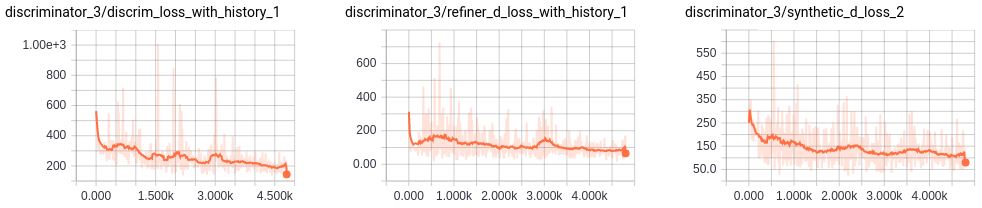

### Test 1

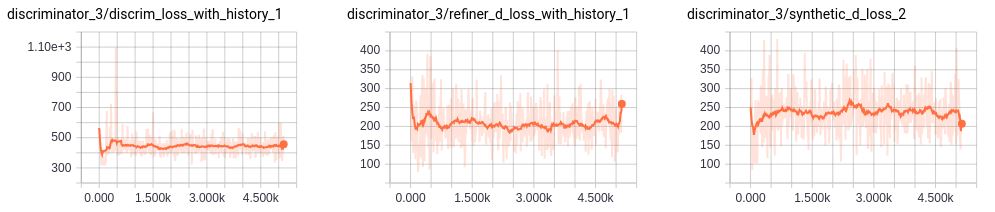

`lambda=0.1` with `optimizer=sgd` after ~10k steps.

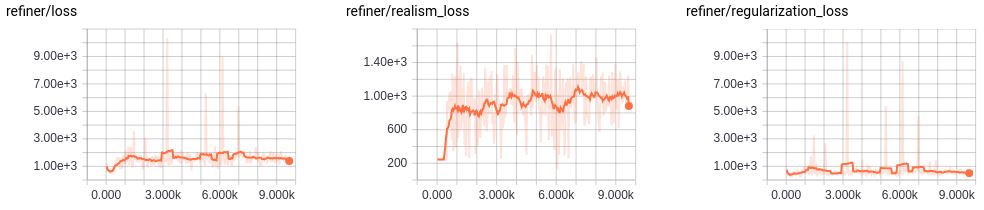

### Test 2

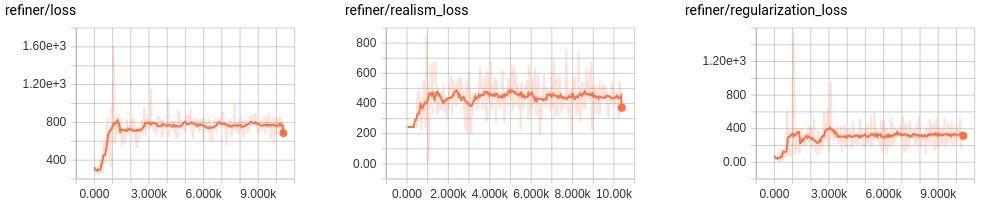

`lambda=0.5` with `optimizer=sgd` after ~10k steps.

### Test 3

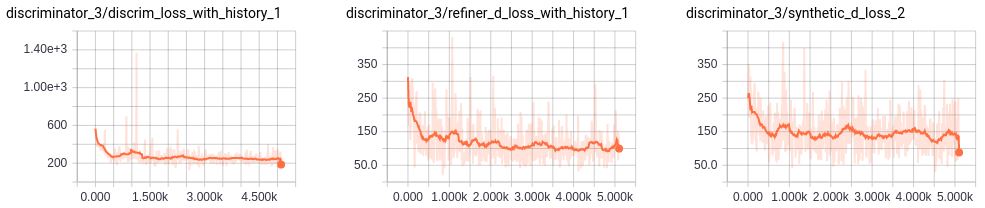

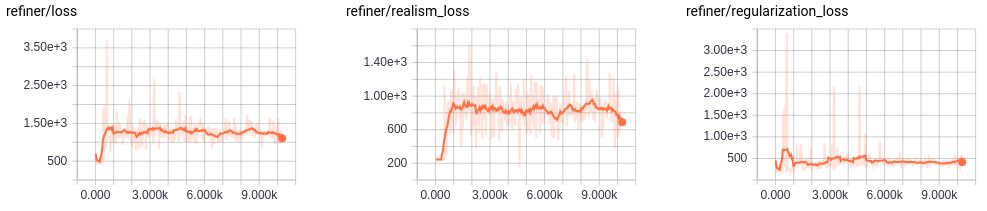

`lambda=1.0` with `optimizer=sgd` after ~10k steps.

## Summary

- In these tests, the refined images look more like the ones in the real dataset as the value of lambda gets smaller.

- The backgrounds of the refined images are darker. This is because some of the real image backgrounds were not properly removed when the hand and arm segments were extracted. When the refiner makes the synthetic images look realistic, it also changes the background colour to match the real dataset.
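In the SimGAN paper, the refiner loss is a local adversarial term plus a self-regularization term (an L1 distance between the refined and synthetic images) weighted by lambda. Assuming the `lambda` in the test captions is this weight, a larger value penalizes changes to the synthetic input more strongly, which is consistent with the first observation above. The sketch below is a minimal NumPy version under two assumptions: the discriminator returns one logit per local patch, where `sigmoid(logit)` is the probability that the patch is refined, and both terms are averaged rather than summed (which changes the effective scale of lambda). `refiner_loss` is a hypothetical name, not this repository's code.

```python
import numpy as np


def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return np.logaddexp(0.0, z)


def refiner_loss(d_logits_refined, refined, synthetic, lam):
    """Hypothetical SimGAN-style refiner loss.

    d_logits_refined: discriminator logits per local patch; sigmoid(logit)
        is the probability that the patch comes from a refined image.
    refined, synthetic: image batches with identical shapes.
    lam: weight of the self-regularization term (lambda).
    """
    # Adversarial term: -log(1 - sigmoid(z)) == softplus(z), averaged over
    # all patches; it is small when patches are judged "real".
    adversarial = np.mean(softplus(d_logits_refined))
    # Self-regularization: mean L1 distance between refined and synthetic pixels.
    self_reg = np.mean(np.abs(refined - synthetic))
    return adversarial + lam * self_reg


if __name__ == "__main__":
    logits = np.zeros((2, 4, 4))           # discriminator undecided on every patch
    synthetic = np.zeros((2, 128, 128, 1))
    refined = synthetic + 0.2              # refiner shifted every pixel by 0.2
    for lam in (0.1, 0.5, 1.0):
        print(lam, refiner_loss(logits, refined, synthetic, lam))
```

With the same discriminator output, raising `lam` only increases the cost of moving pixels away from the synthetic input, so the refiner is pushed to keep its output closer to the original rendering.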

Seung Shin / @shinseung428