PyTorch implementation of "Beyond Self-attention: External Attention using Two Linear Layers for Visual Tasks"

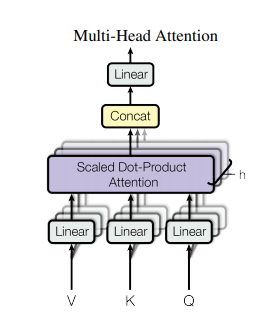
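External attention replaces the query-key dot product of self-attention with two linear layers, M_k and M_v, that act as small learnable memories shared across the whole dataset. The following is a minimal sketch that assumes the paper's double-normalization step: a softmax over the token axis, then L1 normalization over the S memory units. `ExternalAttentionSketch` is a hypothetical name and is not the repository's `ExternalAttention` class. The bias-free layers are also an assumption.

```python
import torch
import torch.nn as nn


class ExternalAttentionSketch(nn.Module):
    """Hypothetical external attention: two bias-free linear layers serve as
    the key memory M_k and the value memory M_v."""

    def __init__(self, d_model: int, S: int = 64):
        super().__init__()
        self.mk = nn.Linear(d_model, S, bias=False)  # M_k: d_model -> S
        self.mv = nn.Linear(S, d_model, bias=False)  # M_v: S -> d_model

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, n_tokens, d_model)
        attn = self.mk(x)                  # (batch, n_tokens, S)
        attn = attn.softmax(dim=1)         # first normalization: over tokens
        # second normalization: L1 over memory units (eps guards against division by zero)
        attn = attn / (attn.sum(dim=2, keepdim=True) + 1e-9)
        return self.mv(attn)               # (batch, n_tokens, d_model)


x = torch.randn(2, 49, 512)
out = ExternalAttentionSketch(d_model=512, S=8)(x)
print(out.shape)  # torch.Size([2, 49, 512])
```

Because M_k and M_v are ordinary parameters rather than functions of the input, the attention map has shape (n_tokens, S) instead of (n_tokens, n_tokens). The cost therefore grows linearly with the number of tokens.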

PyTorch implementation of "Attention Is All You Need" (NIPS 2017)

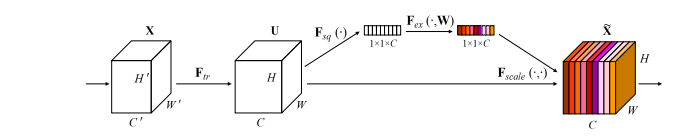
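Scaled dot-product attention computes softmax(QK^T / sqrt(d_k)) V. Multi-head attention runs h of these in parallel on separate learned projections, then concatenates the results and projects them back to d_model. The sketch below mirrors the constructor arguments used in the `ScaledDotProductAttention` usage later in this README (d_model, d_k, d_v, h). It is a hypothetical `MultiHeadAttentionSketch`, not the repository's class, and it omits masking and dropout.

```python
import math

import torch
import torch.nn as nn


class MultiHeadAttentionSketch(nn.Module):
    """Hypothetical multi-head scaled dot-product attention (no mask, no dropout)."""

    def __init__(self, d_model: int, d_k: int, d_v: int, h: int):
        super().__init__()
        self.d_k, self.d_v, self.h = d_k, d_v, h
        self.fc_q = nn.Linear(d_model, h * d_k)
        self.fc_k = nn.Linear(d_model, h * d_k)
        self.fc_v = nn.Linear(d_model, h * d_v)
        self.fc_o = nn.Linear(h * d_v, d_model)  # project concatenated heads back

    def forward(self, queries, keys, values):
        b, nq, _ = queries.shape
        nk = keys.shape[1]
        # Split each projection into heads: (b, h, n, d)
        q = self.fc_q(queries).view(b, nq, self.h, self.d_k).transpose(1, 2)
        k = self.fc_k(keys).view(b, nk, self.h, self.d_k).transpose(1, 2)
        v = self.fc_v(values).view(b, nk, self.h, self.d_v).transpose(1, 2)
        # softmax(Q K^T / sqrt(d_k)) over the key axis: (b, h, nq, nk)
        att = (q @ k.transpose(-2, -1) / math.sqrt(self.d_k)).softmax(dim=-1)
        # Weighted sum of values, then merge heads: (b, nq, h * d_v)
        out = (att @ v).transpose(1, 2).reshape(b, nq, self.h * self.d_v)
        return self.fc_o(out)


x = torch.randn(2, 49, 64)
mha = MultiHeadAttentionSketch(d_model=64, d_k=16, d_v=16, h=4)
print(mha(x, x, x).shape)  # torch.Size([2, 49, 64])
```

Dividing by sqrt(d_k) keeps the dot products from growing with the head dimension. Without this scaling, the softmax would saturate.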

PyTorch implementation of "Squeeze-and-Excitation Networks" (CVPR 2018)

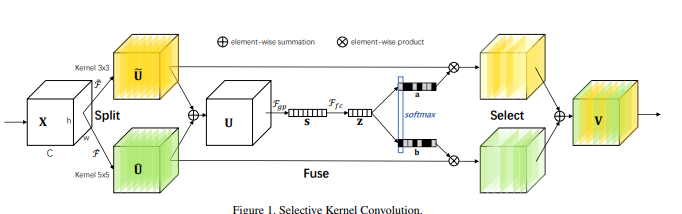
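An SE block works in three steps. It squeezes each channel to a single value with global average pooling. It then passes the result through a bottleneck MLP with reduction ratio r that ends in a sigmoid. Finally, it rescales the input channels by the resulting weights. Below is a minimal sketch under those assumptions. `SEBlockSketch` is hypothetical, and dropping the MLP biases is a choice made here, not a claim about the repository's `SEAttention`.

```python
import torch
import torch.nn as nn


class SEBlockSketch(nn.Module):
    """Hypothetical squeeze-and-excitation block for (b, c, h, w) inputs."""

    def __init__(self, channel: int, reduction: int = 16):
        super().__init__()
        self.pool = nn.AdaptiveAvgPool2d(1)  # squeeze: (b, c, h, w) -> (b, c, 1, 1)
        self.fc = nn.Sequential(             # excitation: bottleneck of width c // r
            nn.Linear(channel, channel // reduction, bias=False),
            nn.ReLU(inplace=True),
            nn.Linear(channel // reduction, channel, bias=False),
            nn.Sigmoid(),                    # per-channel weights between 0 and 1
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        b, c, _, _ = x.shape
        w = self.fc(self.pool(x).view(b, c)).view(b, c, 1, 1)
        return x * w                         # rescale each channel of the input


x = torch.randn(2, 64, 7, 7)
out = SEBlockSketch(channel=64, reduction=8)(x)
print(out.shape)  # torch.Size([2, 64, 7, 7])
```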

PyTorch implementation of "Selective Kernel Networks" (CVPR 2019)

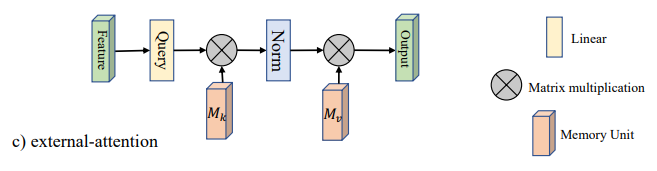
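A Selective Kernel unit proceeds in four steps:

1. Split the input into branches that use different kernel sizes.
2. Fuse the branches by element-wise sum.
3. Build a compact descriptor z of width d = max(C/r, L) from global pooling.
4. Weight the branch outputs with a softmax across branches and sum them.

The sketch below is a simplification. `SKSketch` is hypothetical. It uses plain k x k convolutions instead of the paper's grouped and dilated ones, and it omits the batch normalization on z.

```python
import torch
import torch.nn as nn


class SKSketch(nn.Module):
    """Hypothetical selective-kernel unit: split, fuse, select."""

    def __init__(self, channel: int, kernels=(3, 5), reduction: int = 16, L: int = 32):
        super().__init__()
        d = max(channel // reduction, L)  # width of the compact descriptor z
        # Split: one conv branch per kernel size; padding k // 2 keeps H and W (odd k)
        self.convs = nn.ModuleList([
            nn.Sequential(
                nn.Conv2d(channel, channel, k, padding=k // 2, bias=False),
                nn.BatchNorm2d(channel),
                nn.ReLU(inplace=True),
            )
            for k in kernels
        ])
        self.fc = nn.Sequential(nn.Linear(channel, d), nn.ReLU(inplace=True))
        self.fcs = nn.ModuleList([nn.Linear(d, channel) for _ in kernels])

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        b, c, _, _ = x.shape
        feats = torch.stack([conv(x) for conv in self.convs], dim=0)  # (n_branches, b, c, h, w)
        u = feats.sum(dim=0)                                      # fuse: element-wise sum
        z = self.fc(u.mean(dim=(2, 3)))                           # global average pool, then FC: (b, d)
        logits = torch.stack([fc(z) for fc in self.fcs], dim=0)   # (n_branches, b, c)
        weights = logits.softmax(dim=0).view(len(self.fcs), b, c, 1, 1)  # softmax across branches
        return (weights * feats).sum(dim=0)                       # select: (b, c, h, w)


x = torch.randn(2, 64, 7, 7)
out = SKSketch(channel=64, reduction=8)(x)
print(out.shape)  # torch.Size([2, 64, 7, 7])
```

For each channel, the softmax weights across branches sum to 1. The output is therefore a per-channel convex combination of the branch features, which lets the unit adapt its effective receptive field to the input.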

"Beyond Self-attention: External Attention using Two Linear Layers for Visual Tasks"

from ExternalAttention import ExternalAttention

import torch

input=torch.randn(50,49,512)  # (batch_size, num_tokens, d_model)

ea = ExternalAttention(d_model=512,S=8)  # S: number of external memory units

output=ea(input)

print(output.shape)

"Attention Is All You Need"

from SelfAttention import ScaledDotProductAttention

import torch

input=torch.randn(50,49,512)  # (batch_size, num_tokens, d_model)

sa = ScaledDotProductAttention(d_model=512, d_k=512, d_v=512, h=8)  # h: number of attention heads

output=sa(input,input,input)

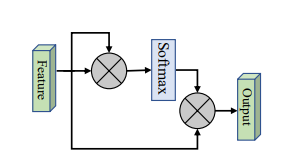

print(output.shape)from SimplifiedSelfAttention import SimplifiedScaledDotProductAttention

import torch

input=torch.randn(50,49,512)  # (batch_size, num_tokens, d_model)

ssa = SimplifiedScaledDotProductAttention(d_model=512, h=8)  # h: number of attention heads

output=ssa(input,input,input)

print(output.shape)

"Squeeze-and-Excitation Networks"

from SEAttention import SEAttention

import torch

input=torch.randn(50,512,7,7)  # (batch_size, channels, height, width)

se = SEAttention(channel=512,reduction=8)  # reduction: channel reduction ratio of the excitation bottleneck

output=se(input)

print(output.shape)

"Selective Kernel Networks"

from SKAttention import SKAttention

import torch

input=torch.randn(50,512,7,7)  # (batch_size, channels, height, width)

sk = SKAttention(channel=512,reduction=8)

output=sk(input)

print(output.shape)