This repo provides code for the course project #7 of Numerical Analysis, THU-CST, 2023 Spring.

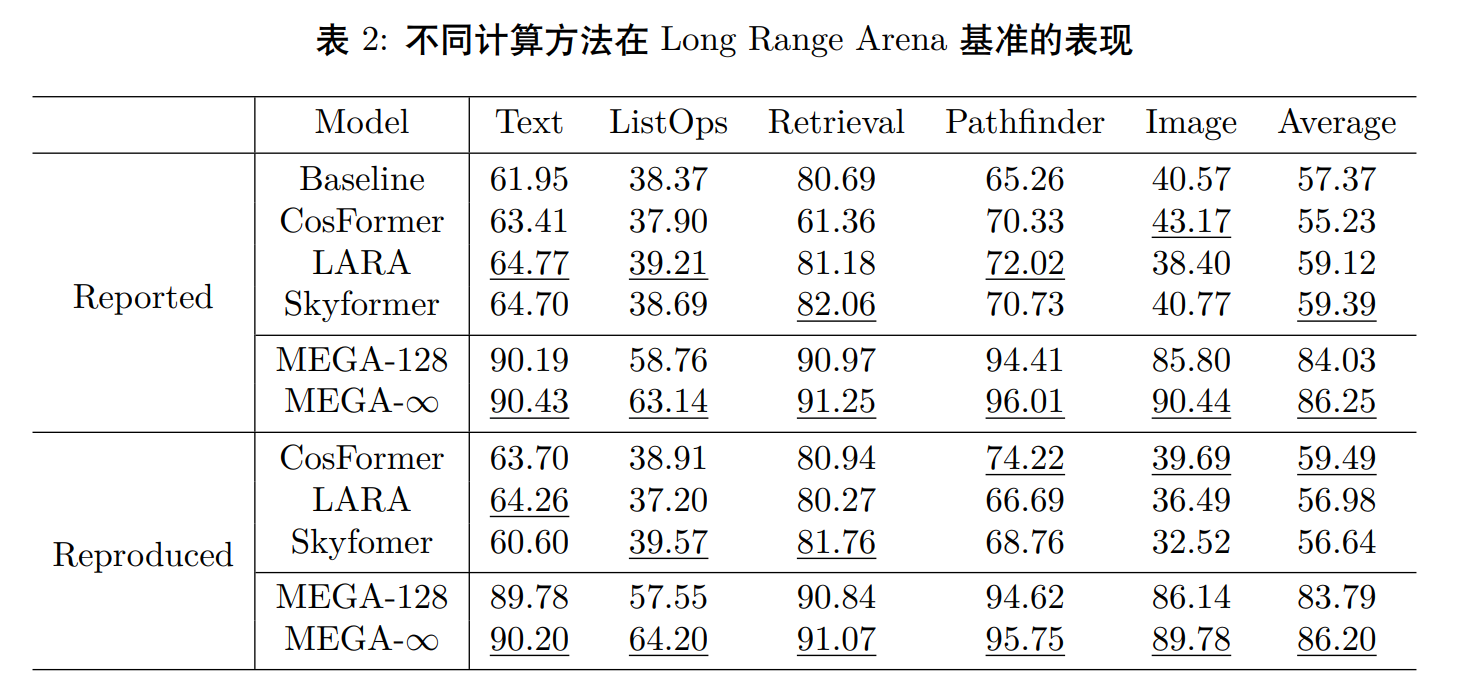

On Long Range Arena (LRA), we tried to reproduce the results reported for Skyformer, CosFormer, LARA and MEGA. We also measured the training and inference speed of these models.

- Download the preprocessed data from TsinghuaCloud.
- Unzip `lra_data_mega.zip` and `lra_data_skyformer.zip`, and arrange the directories as follows:

```
data/skyformer
├── lra-image.dev.pickle
├── lra-image.test.pickle
├── lra-image.train.pickle
├── ...
├── lra-text.dev.pickle
├── lra-text.test.pickle
└── lra-text.train.pickle

data/mega
├── aan
│   ├── dict-bin
│   ├── label-bin
│   ├── src-bin
│   └── src1-bin
├── cifar10
│   ├── input
│   └── label
├── imdb-4000
│   ├── label-bin
│   └── src-bin
├── listops
│   ├── label-bin
│   └── src-bin
├── path-x
│   ├── input
│   └── label
└── pathfinder
    ├── input
    └── label
```
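Before training, it can help to confirm that the unzipped data matches the layout above. The following is a minimal sketch, not a script shipped with this repo: the helper `missing_lra_paths` and its `root` argument are hypothetical, and it checks only the entries spelled out in the tree, skipping the elided `...` files under `data/skyformer`.

```python
from pathlib import Path

# Entries taken from the directory tree above; the elided "..." files
# under data/skyformer are intentionally not listed.
EXPECTED = [
    "skyformer/lra-image.dev.pickle",
    "skyformer/lra-image.test.pickle",
    "skyformer/lra-image.train.pickle",
    "skyformer/lra-text.dev.pickle",
    "skyformer/lra-text.test.pickle",
    "skyformer/lra-text.train.pickle",
    "mega/aan/dict-bin",
    "mega/aan/label-bin",
    "mega/aan/src-bin",
    "mega/aan/src1-bin",
    "mega/cifar10/input",
    "mega/cifar10/label",
    "mega/imdb-4000/label-bin",
    "mega/imdb-4000/src-bin",
    "mega/listops/label-bin",
    "mega/listops/src-bin",
    "mega/path-x/input",
    "mega/path-x/label",
    "mega/pathfinder/input",
    "mega/pathfinder/label",
]


def missing_lra_paths(root="data"):
    """Hypothetical helper: return the expected entries that do not exist under root."""
    base = Path(root)
    return [rel for rel in EXPECTED if not (base / rel).exists()]


if __name__ == "__main__":
    missing = missing_lra_paths()
    if missing:
        print("Missing entries:")
        for rel in missing:
            print("  " + rel)
    else:
        print("Data layout looks complete.")
```

Run it from the repository root, where `data/` is assumed to live; any path it prints still needs to be unzipped or moved into place.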

Prepare the environment:

```bash
conda create -n acce python=3.8
conda activate acce

# install torch==1.8.0 matching your CUDA version, e.g.
conda install pytorch==1.8.0 torchvision==0.9.0 torchaudio==0.8.0 cudatoolkit=11.1 -c pytorch -c conda-forge

# install Skyformer dependencies
pip install -r skyformer/requirements.txt

# install MEGA and its dependencies
pip install -e mega
```
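To double-check that the versions pinned in the `conda install` line actually ended up in the `acce` environment, a small sketch like the following can be run inside it. It only reads installed package metadata through the standard library's `importlib.metadata` (available from Python 3.8); the helper name `check_pins` is hypothetical.

```python
from importlib import metadata

# Versions pinned by the conda command above.
PINNED = {"torch": "1.8.0", "torchvision": "0.9.0", "torchaudio": "0.8.0"}


def check_pins(pinned=PINNED):
    """Hypothetical helper: map each package to (installed version or None, matches pin)."""
    report = {}
    for pkg, want in pinned.items():
        try:
            have = metadata.version(pkg)
        except metadata.PackageNotFoundError:
            have = None
        # Ignore a local build suffix such as "+cu111" when comparing.
        ok = have is not None and have.split("+")[0] == want
        report[pkg] = (have, ok)
    return report


if __name__ == "__main__":
    for pkg, (have, ok) in check_pins().items():
        status = "ok" if ok else "MISMATCH"
        print(f"{pkg}: installed={have} pinned={PINNED[pkg]} [{status}]")
```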

To train and evaluate the models:

CosFormer, LARA and Skyformer

```bash
cd skyformer
python main.py --mode train --attn <attention-name> --task <task-name>
```

- `<attention-name>`: `softmax` (baseline attention), `skyformer`, `cosformer`, `lara`
- `<task-name>`: `lra-listops`, `lra-pathfinder`, `lra-retrieval`, `lra-text`, `lra-image`
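To run every attention/task combination listed above in one go, the commands can be generated programmatically. This is a minimal sketch, not a script shipped with the repo: it reuses only the `main.py` flags shown above, runs the jobs one after another inside `skyformer/` (assuming it is launched from the repository root), and the helper names `build_commands` and `run_all` are hypothetical. It prints the commands by default; pass `dry_run=False` to actually launch them.

```python
import itertools
import subprocess

ATTENTIONS = ["softmax", "skyformer", "cosformer", "lara"]
TASKS = ["lra-listops", "lra-pathfinder", "lra-retrieval", "lra-text", "lra-image"]


def build_commands(attentions=ATTENTIONS, tasks=TASKS):
    """Return one `python main.py --mode train ...` argument list per (attention, task) pair."""
    return [
        ["python", "main.py", "--mode", "train", "--attn", attn, "--task", task]
        for attn, task in itertools.product(attentions, tasks)
    ]


def run_all(dry_run=True, cwd="skyformer"):
    """Hypothetical driver: print each command, and run it unless dry_run is set."""
    for cmd in build_commands():
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops the sweep at the first failing run.
            subprocess.run(cmd, cwd=cwd, check=True)


if __name__ == "__main__":
    run_all(dry_run=True)
```

Running the jobs sequentially keeps one job per GPU at a time, which also avoids contention if the same machine is later used for speed measurements.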

MEGA

```bash
cd mega
bash training_scripts/run_<task-name>.sh
```

- `<task-name>`: `listops`, `pathfinder`, `retrieval`, `text`, `image`

The scripts select the best checkpoint on the validation set and evaluate it on the test set at the end of training.
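The selection rule described above (pick the checkpoint that scores best on the validation set, then report only that checkpoint's test score) can be summarised as follows. This is an illustrative sketch assuming each checkpoint's validation accuracy is available as a plain record; it is not the code the training scripts use, and `Checkpoint`, `select_best` and `final_test_score` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Checkpoint:
    """Hypothetical record: one saved checkpoint and its validation accuracy."""
    step: int
    val_acc: float


def select_best(checkpoints: List[Checkpoint]) -> Checkpoint:
    """Return the checkpoint with the highest validation accuracy.

    max() returns the first maximal element, so on a tie the checkpoint
    that appears earlier in the list is kept.
    """
    if not checkpoints:
        raise ValueError("no checkpoints to choose from")
    return max(checkpoints, key=lambda c: c.val_acc)


def final_test_score(checkpoints: List[Checkpoint],
                     evaluate_test: Callable[[Checkpoint], float]) -> Tuple[Checkpoint, float]:
    """Select on validation only, then evaluate that single checkpoint on the test set."""
    best = select_best(checkpoints)
    return best, evaluate_test(best)
```

Keeping the test set out of the selection step is what makes the reported test score an unbiased estimate for the chosen checkpoint.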

To measure training and inference speed:

CosFormer, LARA and Skyformer

```bash
cd skyformer
bash speed_tests.sh
```

This runs speed tests for `softmax`, `skyformer`, `cosformer` and `lara` on all 5 tasks.

MEGA

```bash
cd mega
bash timing_scripts/speed_tests.sh
```

This runs speed tests for MEGA-∞ and MEGA-128 on all 5 tasks.
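For reference, speed measurements of this kind commonly run a few untimed warm-up iterations and then average wall-clock time over repeated calls. The sketch below shows that general pattern with `time.perf_counter`; it is an assumption about typical methodology, not a description of what `speed_tests.sh` does internally, and `measure_throughput` is a hypothetical name. For GPU models, the timed callable would also need to call `torch.cuda.synchronize()` before returning so that asynchronous CUDA kernels are included in the measurement.

```python
import statistics
import time
from typing import Callable, Dict


def measure_throughput(step: Callable[[], object], batch_size: int,
                       warmup: int = 3, repeats: int = 10) -> Dict[str, float]:
    """Time repeated calls to `step`, e.g. one forward (inference) or
    forward+backward (training) pass on a single batch.

    Warm-up calls are not timed, so one-off costs such as memory
    allocation or caching do not distort the average.
    """
    if repeats < 1:
        raise ValueError("repeats must be >= 1")
    for _ in range(warmup):
        step()
    times = []
    for _ in range(repeats):
        start = time.perf_counter()
        step()
        times.append(time.perf_counter() - start)
    mean_s = statistics.mean(times)
    return {
        "mean_s": mean_s,
        "stdev_s": statistics.stdev(times) if repeats > 1 else 0.0,
        # Guard against a zero reading from a coarse timer on trivial workloads.
        "samples_per_s": batch_size / mean_s if mean_s > 0 else float("inf"),
    }


if __name__ == "__main__":
    # Toy CPU workload standing in for a model step.
    print(measure_throughput(lambda: sum(i * i for i in range(100_000)), batch_size=32))
```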

This repo is derived from Skyformer and MEGA, with implementation references from CosFormer and LARA. We thank the authors for their great work.