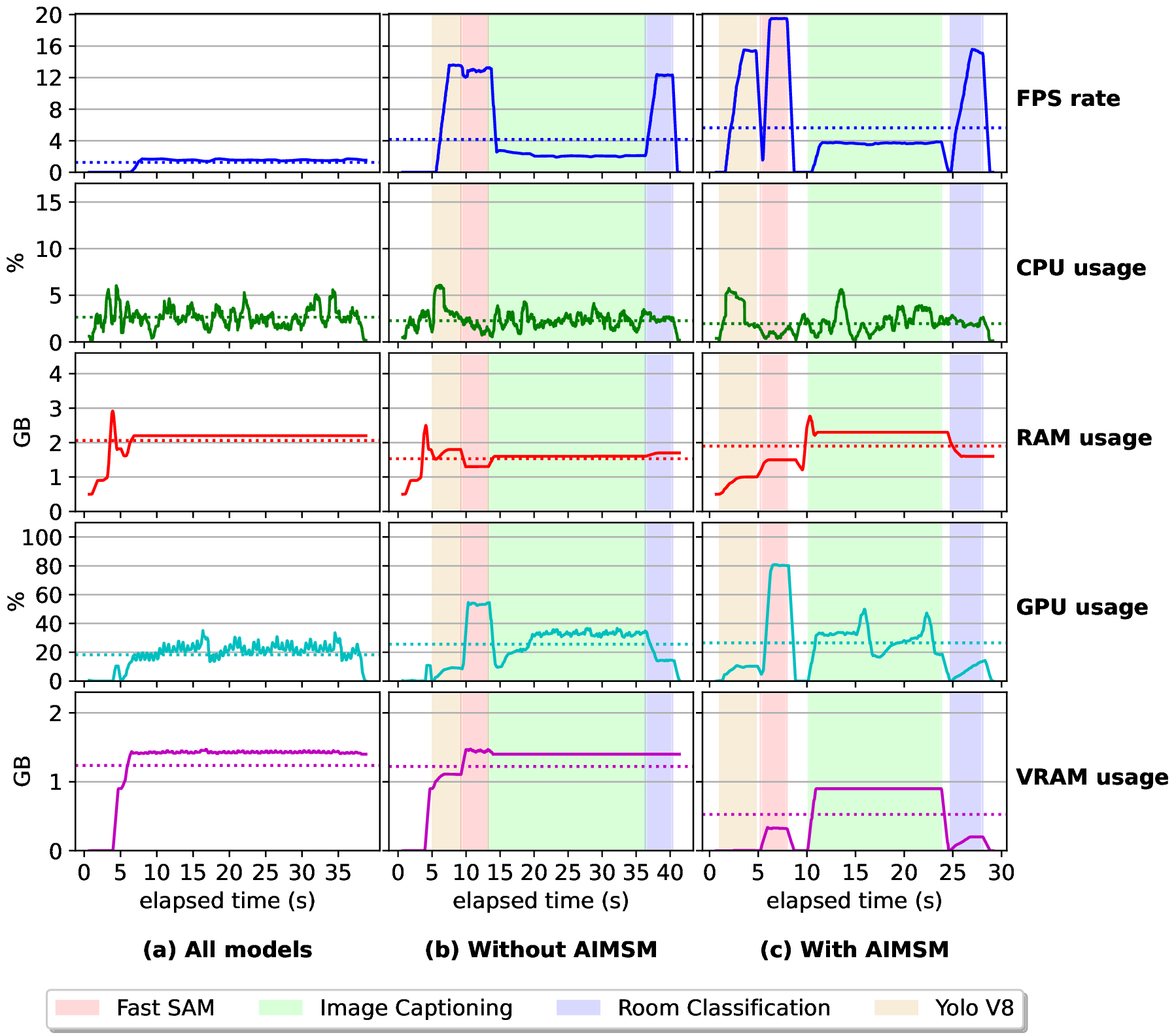

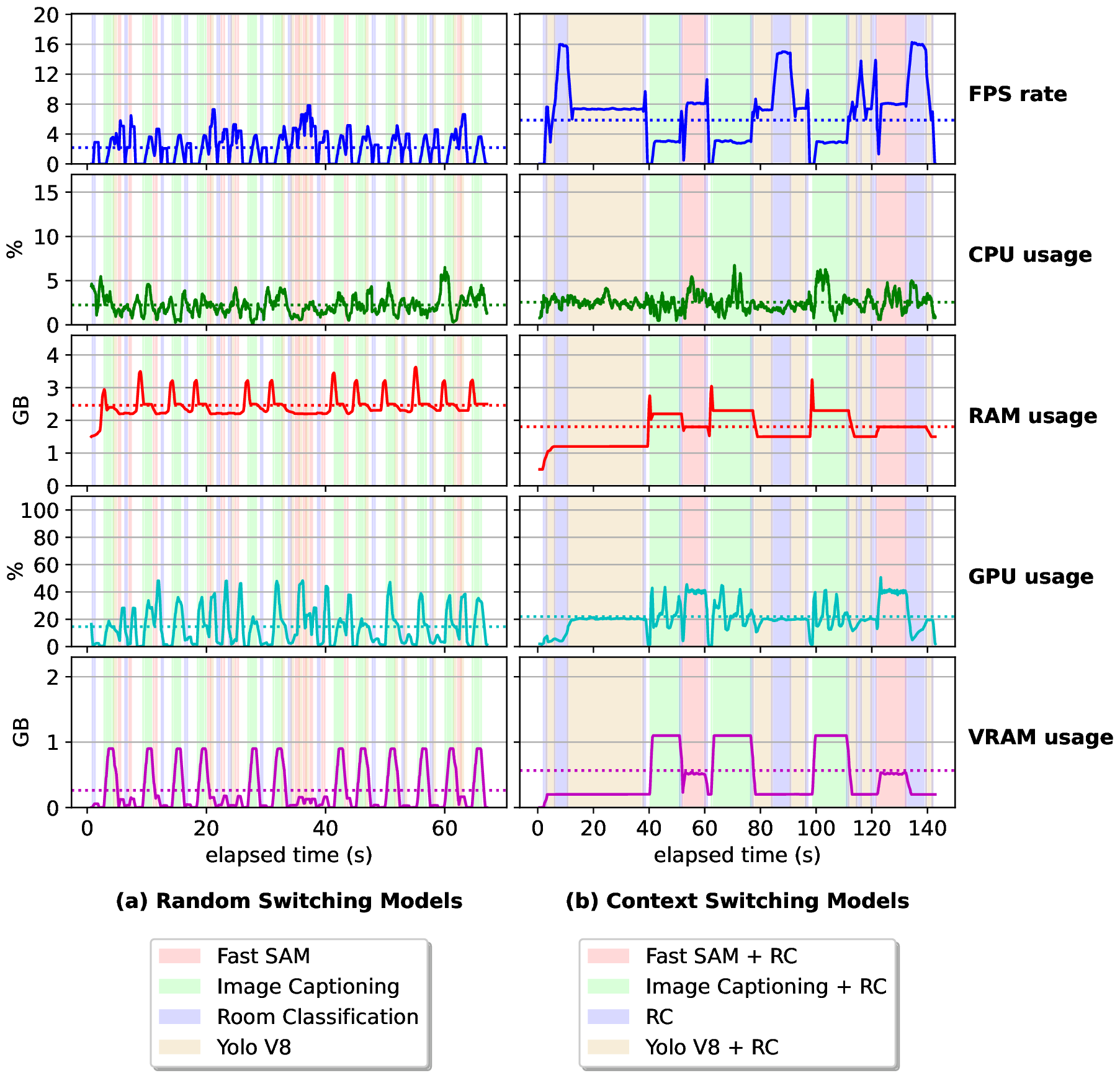

This work introduces the Artificial Intelligence Models Switching Mechanism (AIMSM), which enhances adaptability in preexisting AI systems by activating and deactivating AI models during runtime. AIMSM optimizes resource consumption by allocating only the necessary models for each situation, making it especially useful in dynamic environments with multiple AI models.
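To make the idea of runtime switching concrete, here is a minimal sketch of a registry that keeps only the currently needed models loaded. It is an illustration, not the AIMSM API: the `ModelSwitcher` class, its `switch_to` method, and the model names are hypothetical, and the loaders are stand-ins for whatever actually allocates a model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set


@dataclass
class ModelSwitcher:
    """Hypothetical registry that keeps only the requested models active."""

    # Maps a model name to a zero-argument function that loads it.
    loaders: Dict[str, Callable[[], object]]
    # Models that are currently loaded, keyed by name.
    active: Dict[str, object] = field(default_factory=dict)

    def switch_to(self, needed: Set[str]) -> None:
        """Deactivate models that are not needed and activate the missing ones."""
        unknown = set(needed).difference(self.loaders)
        if unknown:
            raise KeyError(f"No loader registered for: {sorted(unknown)}")

        # Release every active model that the new situation does not require.
        for name in list(self.active):
            if name not in needed:
                del self.active[name]

        # Load only the models that are required and not already active.
        for name in needed:
            if name not in self.active:
                self.active[name] = self.loaders[name]()


if __name__ == "__main__":
    # Placeholder loaders; a real loader would allocate a model in memory.
    switcher = ModelSwitcher(
        loaders={
            "detector": lambda: "detector-instance",
            "segmenter": lambda: "segmenter-instance",
        }
    )
    switcher.switch_to({"detector"})
    print(sorted(switcher.active))
    switcher.switch_to({"segmenter"})
    print(sorted(switcher.active))
```

Only the models named in the latest `switch_to` call remain in `active`, which is the property that lets resource use track the current situation.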

# CREATE CONDA ENVIRONMENT

conda create -n aimsm python=3.10

# ROS INSTALLATION

sudo sh -c 'echo "deb http://packages.ros.org/ros/ubuntu $(lsb_release -sc) main" > /etc/apt/sources.list.d/ros-latest.list'

sudo apt install curl # if you haven't already installed curl

curl -s https://raw.githubusercontent.com/ros/rosdistro/master/ros.asc | sudo apt-key add -

sudo apt update

sudo apt install ros-melodic-desktop-full

echo "source /opt/ros/melodic/setup.bash" >> ~/.bashrc

source ~/.bashrc

# ROS DEPENDENCIES

sudo apt-get install ros-melodic-rosbridge-suite

sudo apt-get install ros-melodic-slam-gmapping

# ROS PYTHON PACKAGES

pip install --upgrade pip

pip install rospkg pyyaml

# AIMSM DEPENDENCIES

git submodule update --recursive --remote --init

pip install -r requirements.txt

pip install -r fastsam/requirements.txt

- Download the FastSAM model and place it in the weights folder.
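A quick way to confirm that the download landed in the right place is to list the checkpoint files in the weights folder. The sketch below assumes the folder is named `weights` relative to the current directory and that the checkpoint uses a `.pt` extension; both are assumptions, and `find_weight_files` is a hypothetical helper, not part of the project.

```python
from pathlib import Path
from typing import List, Sequence


def find_weight_files(folder: str = "weights",
                      suffixes: Sequence[str] = (".pt",)) -> List[Path]:
    """Return the files in `folder` whose extension is in `suffixes`.

    The folder name and the extension are assumptions; adjust them to
    match the file you actually downloaded.
    """
    root = Path(folder)
    if not root.is_dir():
        return []
    return sorted(
        path for path in root.iterdir()
        if path.is_file() and path.suffix in suffixes
    )


if __name__ == "__main__":
    found = find_weight_files()
    if found:
        for path in found:
            print(f"Found weights file: {path}")
    else:
        print("No weights file found in ./weights")
```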

To correctly run and configure the Unity simulation, please follow the instructions provided at: https://github.com/DavidFernandezChaves/RobotAtVirtualHome

This will enable communication between the ROS environment and the PySide2 app.

source /opt/ros/melodic/setup.bash

conda activate melodic

roslaunch rosbridge_server rosbridge_websocket.launch

This will enable you to visualize the robot's movements and sensors in the simulation using the RViz tool.

Note: Run the following commands from the root of the project:

source /opt/ros/melodic/setup.bash

rviz -d rviz/robot_at_virtualhome.rviz

This will collect sensor data from the robot and generate a map of the environment.

source /opt/ros/melodic/setup.bash

rosrun gmapping slam_gmapping scan:=/RobotAtVirtualHome/scan

This will start the PySide2 app and connect to the ROSBridge server.
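Because the app expects the ROSBridge server to be up, a short pre-flight check can be run first. The sketch below only tests whether a TCP connection can be opened; it assumes the server runs on `localhost` and on port 9090, the default of `rosbridge_websocket.launch`. `rosbridge_reachable` is a hypothetical helper and does not perform a WebSocket handshake.

```python
import socket


def rosbridge_reachable(host: str = "localhost",
                        port: int = 9090,
                        timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    This only shows that something is listening on the port; it does not
    verify that the listener is actually a rosbridge WebSocket server.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    if rosbridge_reachable():
        print("Port 9090 is accepting connections.")
    else:
        print("Nothing is listening on port 9090; start rosbridge first.")
```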

conda activate aimsm

./run_linux.sh

The following script will execute the "All Models" experiment and save the results in labs/all_models_experiment.csv.

cd experiments

python all_models_experiment.py

The following script will execute the "Single Model - without AIMSM" experiment and save the results in labs/single_model_without_AIMSM.csv.

cd experiments

python single_model_without_AIMSM.py

The following script will execute the "Single Model - with AIMSM" experiment and save the results in labs/single_model_with_AIMSM.csv.

cd experiments

python single_model_with_AIMSM.py

The following script will execute the "Random Switching Models" experiment and save the results in labs/random_switch_models_experiment.csv.

cd experiments

python random_switch_models_experiment.py

The following script will execute the "Context Switching Models" experiment and save the results in labs/context_switching_experiment.csv.

Note: This experiment requires the Unity simulation to be running.

cd experiments

python context_switching_experiment.py

The following script will execute the visualization of the experiments "All Models" and "Single Model" (with and without AIMSM).

cd labs

python plot_data_tripled.py

The following script will execute the visualization of the experiments "Random Switching Models" and "Context Switching Models".

cd labs

python plot_data_doubled.py
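The CSV files that the experiments write to the labs folder can also be inspected without the plotting scripts. The sketch below is a generic reader that assumes each file starts with a header row; the column names are not documented here, so the code only reports whatever it finds. `load_results` and `describe` are hypothetical helpers.

```python
import csv
from typing import Dict, List


def load_results(csv_path: str) -> List[Dict[str, str]]:
    """Read a results CSV into a list of rows keyed by the header names.

    Assumes the first line of the file is a header row.
    """
    with open(csv_path, newline="") as handle:
        return list(csv.DictReader(handle))


def describe(rows: List[Dict[str, str]]) -> Dict[str, object]:
    """Summarize how many rows were read and which columns they contain."""
    columns = list(rows[0].keys()) if rows else []
    return {"rows": len(rows), "columns": columns}


if __name__ == "__main__":
    # Path taken from the "All Models" experiment; run from the project root.
    try:
        print(describe(load_results("labs/all_models_experiment.csv")))
    except FileNotFoundError:
        print("Results file not found; run the experiment first.")
```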