A very much Work In Progress renderer that wraps the D3D12 API.

The primary goal is to provide example implementations of various computer graphics techniques such as clustered shading, PBR shading, etc. and the secondary goal is to do it efficiently using a simple, thin abstraction around D3D12.

It's an extension of my other repo, BAE, but here I'm implementing the rendering abstraction myself rather than using BGFX (which is great, just wanted to learn more about building lower level abstractions).

The project uses premake to generate project files, but I've only tested support for creating a Visual Studio 2019 solution. To create the solution, open up PowerShell and run:

$ make generate

This will create the solutions in the .build folder.

Annoyingly, the way that premake adds the nuget package for the WindowsPixRuntime is a bit broken, so you'll likely get errors telling you that the package is missing. It uses build\native\WinPixEvent instead of the actual path, which is build\WinPixEvent. If you're using MSYS or another terminal that supports sed, then you can just run the following after the generation step:

$ make fix

Alternatively, just run make to both generate and fix at once (if you can use sed).

For now, I'm including all the non-nuget dependencies inside external, and their licenses are included in each folder of external/include. Here's a complete list:

- A single header from boost_context which FibreTaskingLib needs

- Catch2

- D3D12MemAlloc

- d3dx12

- DirectX Shader Compiler

- DirectXTex texture processing library

- FibreTaskingLib

- gainput

- Dear Imgui

- imgui-filebrowser

- MurmurHash3

- Pix runtime headers

- Optick

- tinygltf

Not all of these are being fully utilized -- for instance, FTL was used for some experiments but most of the code is not multi-threaded (yet).

Currently, the following implementations have been created, in part as a way of dogfooding my rendering abstraction:

- Image based lighting with multiple scattering

- ISPC accelerated view frustum culling, as documented in another blog post

- Clustered Forward Shading using compute shaders

- More, coming soon

The image based lighting implementation is largely a port of the work I previously documented in a blog post that used BGFX. It is based off Karis 2013 and uses compute shaders to produce the pre-filtered irradiance and radiance cube maps documented in that paper.

Additionally, it features corrections for multiple scattering based on Fdez-Agüera 2019 that improve conservation of energy for metals at higher roughness values.

Most of the details are in the two blog posts (part 1 and part 2), but to summarize: the view culling transforms model-space AABBs to view space and performs a check using the separating axis theorem against the view frustum to determine whether the object is in view or not. ISPC is used to vectorize the system and test 8 AABBs at once.
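To give a rough idea of the shape of the test, here is a scalar, single-box sketch. It is not the code from this repo: all of the names (`ViewSpaceBox`, `make_frustum`, `is_visible`) are made up for illustration, and it only checks the six frustum face normals as candidate separating axes. A full separating axis test would also check the box axes and the edge cross products, so this simplified version is conservative and can report some boxes near frustum corners as visible when they are not. It assumes a right-handed view space with the camera looking down -Z.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

inline float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// A model-space AABB becomes an oriented box once transformed to view space.
// (Hypothetical layout, chosen for this example only.)
struct ViewSpaceBox {
    Vec3 center;
    std::array<Vec3, 3> axes;  // unit-length box axes expressed in view space
    Vec3 half_extents;         // half sizes along each of those axes
};

// Plane with an inward-facing unit normal: inside points satisfy dot(n, p) + d >= 0.
struct Plane { Vec3 n; float d; };

// Six planes of a symmetric perspective frustum, camera looking down -Z.
inline std::array<Plane, 6> make_frustum(float fov_y_radians, float aspect, float z_near, float z_far)
{
    assert(z_near > 0.0f && z_far > z_near);
    const float ty = std::tan(0.5f * fov_y_radians);
    const float tx = ty * aspect;
    const float inv_lx = 1.0f / std::sqrt(1.0f + tx * tx);
    const float inv_ly = 1.0f / std::sqrt(1.0f + ty * ty);
    return {{
        {{  inv_lx, 0.0f, -tx * inv_lx }, 0.0f },  // left
        {{ -inv_lx, 0.0f, -tx * inv_lx }, 0.0f },  // right
        {{ 0.0f,  inv_ly, -ty * inv_ly }, 0.0f },  // bottom
        {{ 0.0f, -inv_ly, -ty * inv_ly }, 0.0f },  // top
        {{ 0.0f, 0.0f, -1.0f }, -z_near },         // near
        {{ 0.0f, 0.0f,  1.0f },  z_far },          // far
    }};
}

inline bool is_visible(const ViewSpaceBox& box, const std::array<Plane, 6>& frustum)
{
    for (const Plane& p : frustum) {
        // Half-width of the box's projection onto the plane normal.
        const float r = std::fabs(dot(p.n, box.axes[0])) * box.half_extents.x
                      + std::fabs(dot(p.n, box.axes[1])) * box.half_extents.y
                      + std::fabs(dot(p.n, box.axes[2])) * box.half_extents.z;
        // Signed distance from the box center to the plane.
        const float s = dot(p.n, box.center) + p.d;
        if (s < -r) {
            return false;  // the whole box lies outside this plane
        }
    }
    return true;  // no face normal separates the box from the frustum
}
```

A vectorized version would run the same arithmetic on several boxes per call, with the box data laid out so that each lane reads its own box.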

For 10,000 AABBs, the system can produce a visibility list in about 0.3ms using a single core on my i5 6600k.

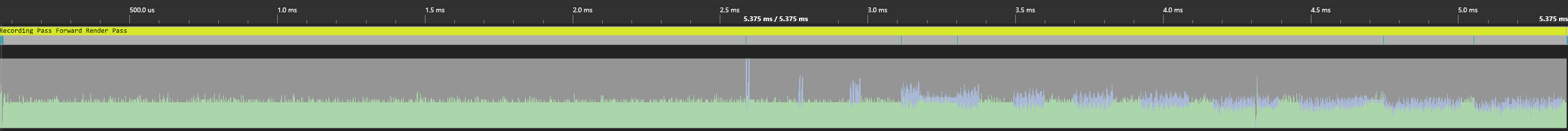

Culling also reduces wasted vertex work on the GPU. Here's a graphic of what happens without it, where the GPU ends up spending time executing vertex shader work without any corresponding fragment/pixel shader output:

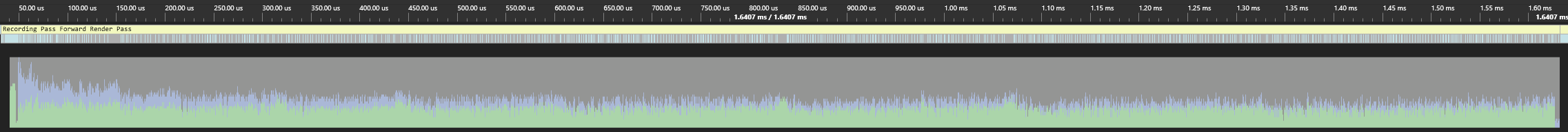

With culling, the wasted work is reduced greatly:

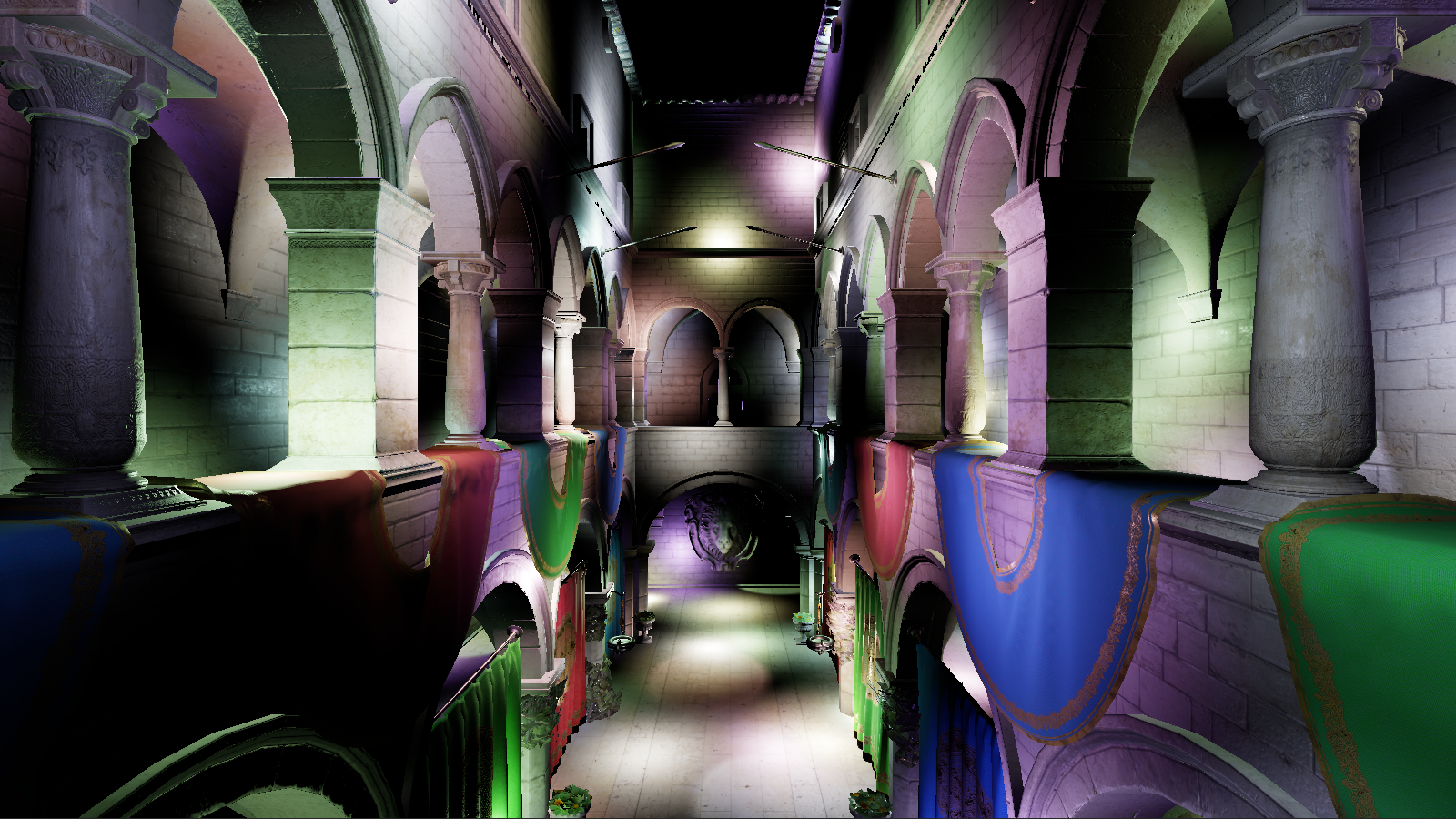

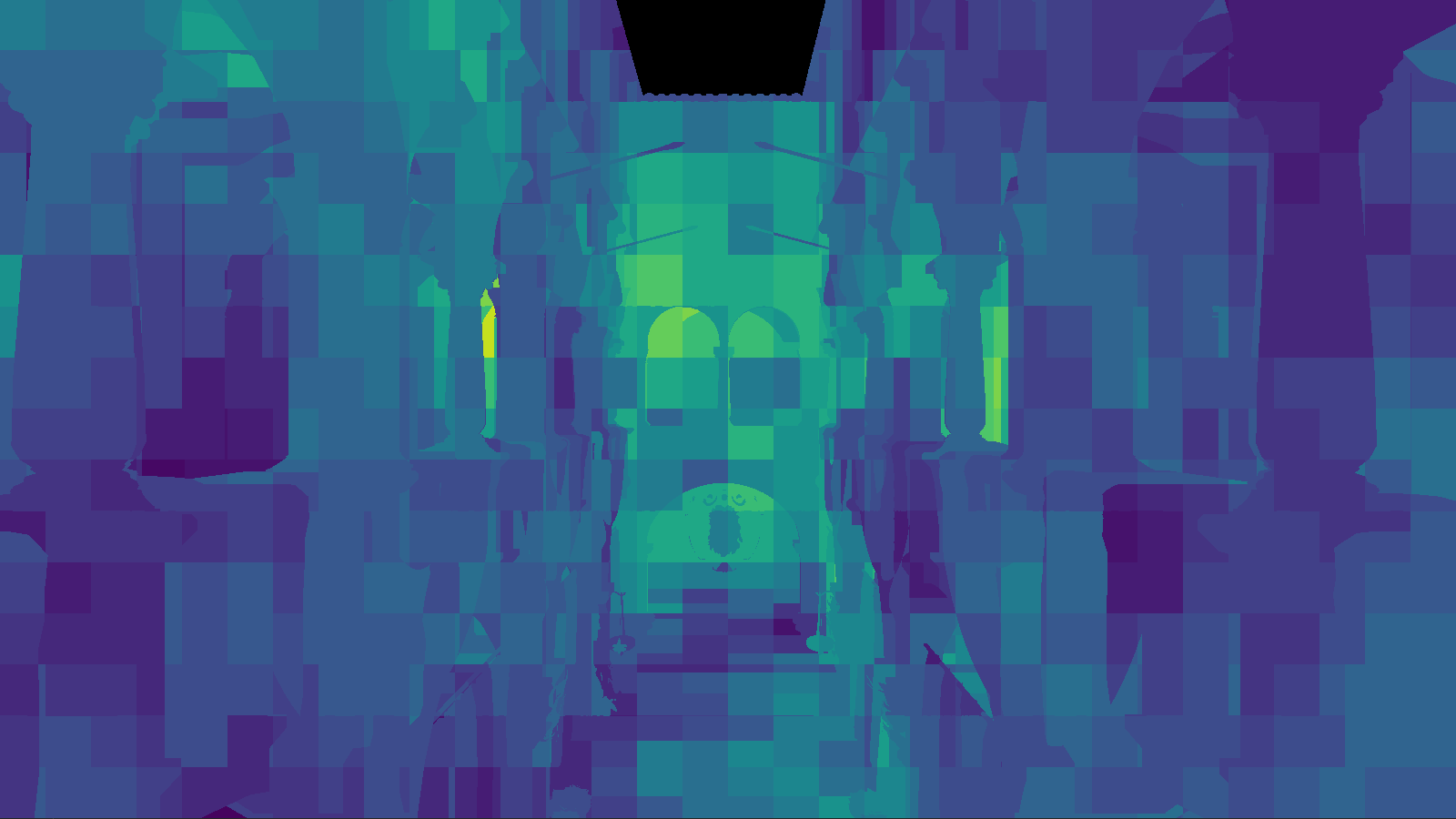

Largely based off Olsson et al, 2012, the clustered forward shading implementation uses compute shaders to populate a buffer containing per-cluster index lists, which are then used to index into the global light lists.
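As a CPU-side illustration of that indirection (the real work happens in compute shaders, and this is not the repo's buffer layout), the sketch below assumes each cluster stores an offset and a count into one shared index list. `ClusterRange`, `ClusterLightLists`, `build_lists` and `accumulate_intensity` are hypothetical names, and `Light` is reduced to a single float so the example stays small.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

struct Light { float intensity; };  // stand-in for a real light record

// Where a cluster's indices live inside the shared index list.
struct ClusterRange { uint32_t offset; uint32_t count; };

struct ClusterLightLists {
    std::vector<ClusterRange> ranges;     // one entry per cluster
    std::vector<uint32_t> light_indices;  // all per-cluster lists, back to back
};

// Flattens per-cluster lists of light indices into the two arrays above.
inline ClusterLightLists build_lists(const std::vector<std::vector<uint32_t>>& per_cluster)
{
    ClusterLightLists lists;
    lists.ranges.reserve(per_cluster.size());
    for (const std::vector<uint32_t>& indices : per_cluster) {
        const uint32_t offset = static_cast<uint32_t>(lists.light_indices.size());
        lists.ranges.push_back({ offset, static_cast<uint32_t>(indices.size()) });
        lists.light_indices.insert(lists.light_indices.end(), indices.begin(), indices.end());
    }
    return lists;
}

// What a shader would do per pixel: find the cluster, walk its index list,
// and use each index to fetch from the global light list.
inline float accumulate_intensity(const ClusterLightLists& lists,
                                  const std::vector<Light>& global_lights,
                                  uint32_t cluster)
{
    assert(cluster < lists.ranges.size());
    const ClusterRange range = lists.ranges[cluster];
    float total = 0.0f;
    for (uint32_t i = 0; i < range.count; ++i) {
        const uint32_t light_index = lists.light_indices[range.offset + i];
        assert(light_index < global_lights.size());
        total += global_lights[light_index].intensity;
    }
    return total;
}
```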

The current implementation supports binning of both point lights and spot lights using clusters defined as AABBs in view-space. The intersection test used for the spot lights is based on Bart Wronski's excellent blog post, which works great with Olsson's view-space z-partitioning scheme, which attempts to maintain clusters that are as cubical as possible.

One small change is that I actually implemented a piecewise partitioning scheme such that the clusters between the near plane and some user-defined "mid-plane" use a linear partitioning scheme, while those beyond the mid-plane use the Olsson partitioning scheme. This was done because the Olsson scheme produces very small clusters close to the camera, and using a linear scheme (while providing a worse fit for the bounding sphere tests) provides about a 30% memory reduction.
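A minimal sketch of what such a piecewise mapping from view-space depth to slice index could look like is below. The names (`DepthPartition`, `depth_slice`) and the slice counts are assumptions for the example. For the far region it uses the common logarithmic form spread between the mid-plane and the far plane, which is a simplification: Olsson's scheme derives the growth ratio from the tile size and field of view rather than from a fixed far plane.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical description of the depth split; depths are positive distances.
struct DepthPartition {
    float z_near;
    float z_mid;
    float z_far;
    uint32_t linear_slices;  // slices between z_near and z_mid
    uint32_t log_slices;     // slices between z_mid and z_far
};

inline uint32_t depth_slice(const DepthPartition& p, float view_depth)
{
    assert(p.z_near > 0.0f && p.z_mid > p.z_near && p.z_far > p.z_mid);
    assert(p.linear_slices > 0 && p.log_slices > 0);

    // Clamp so depths outside the range land in the first or last slice.
    const float z = std::min(std::max(view_depth, p.z_near), p.z_far);

    if (z < p.z_mid) {
        // Linear region: equal-thickness slices.
        const float t = (z - p.z_near) / (p.z_mid - p.z_near);
        const uint32_t k = static_cast<uint32_t>(t * static_cast<float>(p.linear_slices));
        return std::min(k, p.linear_slices - 1);
    }

    // Logarithmic region: each slice is thicker than the previous by a fixed ratio.
    const float t = std::log(z / p.z_mid) / std::log(p.z_far / p.z_mid);
    const uint32_t k = static_cast<uint32_t>(t * static_cast<float>(p.log_slices));
    return p.linear_slices + std::min(k, p.log_slices - 1);
}
```

With `z_near = 1`, `z_mid = 5`, `z_far = 80` and four slices in each region, the linear slices are each 1 unit thick, while the logarithmic slices end at depths 10, 20, 40 and 80.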

One thing to note is that I used 32-bit uints to store light indices, but this is total overkill for the number of lights I reasonably expect to support. I could easily get away with 16 bits for the index list entries, BUT my laptop's 960m lacks the feature support for 16-bit arithmetic 😢

The D3D12 abstraction, as mentioned, is pretty thin and is still WIP. It's also a bit opinionated in that I haven't made any effort to support traditional resource binding, opting instead to use the "bindless" model. This makes things much simpler but, for instance, the lib doesn't allow you to create non-shader visible CBV_SRV_UAV heaps (since there's simply no need, we just bind the entire heap).

While it currently only supports D3D12, care has been taken such that the D3D API objects do not "leak" and instead the API hands out opaque handles to clients which can then be used to record commands.
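To illustrate the opaque handle idea in general terms, here is a small sketch. It is not the zec::gfx API: `Handle`, `BufferPool` and `NativeBuffer` are invented for the example, and `NativeBuffer` stands in for whatever API object (for example a D3D12 resource pointer) the library would keep private.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

constexpr uint32_t k_invalid_index = UINT32_MAX;

// A typed index. The Tag parameter only exists so that a buffer handle
// cannot be passed where a texture handle is expected.
template <typename Tag>
struct Handle {
    uint32_t idx = k_invalid_index;
    bool is_valid() const { return idx != k_invalid_index; }
};

struct BufferTag {};
struct TextureTag {};
using BufferHandle = Handle<BufferTag>;
using TextureHandle = Handle<TextureTag>;

// Stand-in for the native API object that never leaves the library.
struct NativeBuffer { uint64_t size_in_bytes; };

class BufferPool {
public:
    // Clients get back an index, never a pointer to the native object.
    BufferHandle create(uint64_t size_in_bytes)
    {
        buffers_.push_back(NativeBuffer{ size_in_bytes });
        BufferHandle handle;
        handle.idx = static_cast<uint32_t>(buffers_.size() - 1);
        return handle;
    }

    // Library-side lookup; the handle is validated before use.
    uint64_t size_of(BufferHandle handle) const
    {
        assert(handle.is_valid() && handle.idx < buffers_.size());
        return buffers_[handle.idx].size_in_bytes;
    }

private:
    std::vector<NativeBuffer> buffers_;
};
```

Because clients only ever hold an index, the backing storage can change (or a different graphics API could sit behind it) without touching client code.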

Future plans include:

- Individual resource deletion! Right now resources are just deleted in bulk on shutdown.

- Asynchronous asset loading. Right now it's possible to use the COPY queue, but the actual CPU code that opens and reads files is all single threaded. It'd be nice to use something like Promises for this.

- Custom asset format. Right now I'm using a slightly modified GLTF with the textures replaced with DDS versions that include mip maps. GLTF includes a lot of stuff I don't need at all and it'd be nice to be able to just dump the entire vertex data onto the GPU and use offsets instead of Vertex Buffer Views.

It also supports using a "Render Pass" abstraction (but it's not necessary) that will manage pass resource creation and transitions between passes. For instance, we might have a depth pass that "outputs" a depth buffer, which other passes mark as an "input" that will be sampled from pixel or fragment shaders -- the render pass system "automatically" inserts a resource transition at the beginning of the first pass that consumes the input.
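The bookkeeping behind that kind of automatic transition can be sketched roughly as follows. This is an illustration under assumptions, not the zec::render_pass_system API: `Usage`, `PassDesc`, `Transition` and `plan_transitions` are hypothetical, resources are identified by name, and the sketch only plans which transitions are needed instead of recording real barriers.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>
#include <vector>

// Simplified stand-ins for resource states.
enum class Usage { RenderTarget, DepthWrite, ShaderResource };

struct PassResource { std::string name; Usage usage; };

struct PassDesc {
    std::string name;
    std::vector<PassResource> inputs;
    std::vector<PassResource> outputs;
};

// A transition to record at the beginning of `pass`.
struct Transition { std::string pass; std::string resource; Usage before; Usage after; };

inline std::vector<Transition> plan_transitions(const std::vector<PassDesc>& passes)
{
    std::unordered_map<std::string, Usage> current;  // last known usage per resource
    std::vector<Transition> transitions;

    for (const PassDesc& pass : passes) {
        for (const PassResource& in : pass.inputs) {
            auto it = current.find(in.name);
            assert(it != current.end() && "input must be an output of an earlier pass");
            if (it->second != in.usage) {
                transitions.push_back({ pass.name, in.name, it->second, in.usage });
                it->second = in.usage;
            }
        }
        for (const PassResource& out : pass.outputs) {
            auto it = current.find(out.name);
            if (it == current.end()) {
                // First writer: assume the resource is created in this state.
                current.emplace(out.name, out.usage);
            } else if (it->second != out.usage) {
                transitions.push_back({ pass.name, out.name, it->second, out.usage });
                it->second = out.usage;
            }
        }
    }
    return transitions;
}
```

With a depth pass that outputs a depth buffer and two later passes that both sample it, only the first consumer gets a transition, since the resource is already in the right state by the time the second one runs.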

Additionally, passes can submit work on the asynchronous compute queue and the abstraction will submit that work separately and even insert cross queue barriers when subsequent passes use the outputs. However, I haven't robustly tested this part of the system yet and I know that it currently breaks when the resources are transitioned using a command list created from the compute queue.

Future plans include:

- Better support for async compute

- Multi-threaded command list recording. Each pass already gets its own command list, but I haven't yet introduced any threading into the library or application.

- Better support for multiple sets of passes. Right now each list of passes is its own heavyweight structure, making it annoying to support switching between them during runtime. It'd be nicer to have a pool of passes and then lightweight lists that describe recording of commands.

The projects in this folder are currently broken, as I've changed the zec::gfx and zec::render_pass_system APIs since writing them. I'm not sure whether I'll bother fixing them since they were mostly written as proofs of concept and meant to be throw away code.