This project aims to detect early indicators of depression by analyzing data from a range of social media platforms, including images and texts.

Data collection

Data were collected from Pexels, Unsplash, and Twitter.

Pexels and Unsplash are two platforms that offer freely usable images.

The tweets used are publicly available.

Visual Data:

The overall process of scraping images from Unsplash and Pexels is as follows:

Images were crawled from Pexels using Selenium and from Unsplash using the Unsplash API.

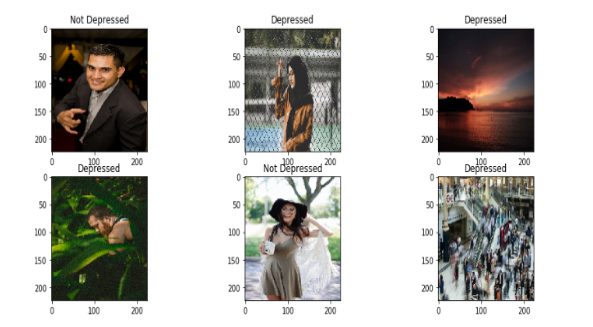
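As a rough illustration of the Unsplash side of this step, the sketch below builds paginated requests for the Unsplash photo search endpoint (`/search/photos`). The search term, the number of pages, and the `YOUR_ACCESS_KEY` placeholder are assumptions for illustration only; the project's actual crawler may be organized differently.

```python
from urllib.parse import urlencode

API_ROOT = "https://api.unsplash.com/search/photos"


def build_search_url(query, page=1, per_page=30):
    """Return the URL for one page of Unsplash photo search results."""
    params = {"query": query, "page": page, "per_page": per_page}
    return f"{API_ROOT}?{urlencode(params)}"


def build_headers(access_key):
    """Build the Authorization header carrying the Unsplash access key."""
    return {"Authorization": f"Client-ID {access_key}"}


# Hypothetical search term; the keywords actually used are not listed here.
urls = [build_search_url("sadness", page=p) for p in range(1, 4)]
headers = build_headers("YOUR_ACCESS_KEY")
```

Each URL can then be fetched with any HTTP client, passing `headers` along with the request.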

- 6250 images labeled as "Depressed"

- 5234 images labeled as "Not Depressed"
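The two classes are slightly imbalanced (roughly 54% versus 46%). The sketch below computes the class shares and the common "balanced" class-weight heuristic from the counts above. Whether the project applied class weights during training is not stated, so treat this as an optional illustration.

```python
# Image counts per label, taken from the list above.
counts = {"Depressed": 6250, "Not Depressed": 5234}
total = sum(counts.values())

# Share of each class in the full image set.
shares = {label: n / total for label, n in counts.items()}

# "Balanced" heuristic: total / (number of classes * class count).
class_weights = {
    label: total / (len(counts) * n) for label, n in counts.items()
}

for label in counts:
    print(f"{label}: share={shares[label]:.3f}, weight={class_weights[label]:.3f}")
```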

This is a sample of the dataset:

Images can be loaded as shown in the Project Cheat Sheet, and the code is available here.

Textual Data:

The hashtags used were trending hashtags selected with keywords inspired by the DSM-5 (Diagnostic and Statistical Manual of Mental Disorders).

Textual data were collected with twint from Twitter users who share their posts publicly.

Overall, 5460 tweets were collected.
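A minimal sketch of how such a collection run could look with twint is shown below. The keyword list, the language filter, the tweet limit, and the output file name are all hypothetical; the project's real hashtag list and settings are not given here. twint is imported inside the function so that the query helper can be used without it installed.

```python
def build_hashtag_query(keywords):
    """Join keywords into a Twitter search string such as '#a OR #b'."""
    return " OR ".join(f"#{k.strip().lstrip('#')}" for k in keywords)


def collect_tweets(keywords, output_csv, limit=1000):
    """Run a twint search for the given hashtags and store results as CSV."""
    import twint  # lazy import: only needed when actually scraping

    config = twint.Config()
    config.Search = build_hashtag_query(keywords)
    config.Lang = "en"          # assumption: English tweets only
    config.Limit = limit        # assumption: illustrative cap per run
    config.Store_csv = True
    config.Output = output_csv
    config.Hide_output = True
    twint.run.Search(config)


# Hypothetical keywords for illustration.
query = build_hashtag_query(["depression", "#hopeless", " insomnia "])
print(query)
```

A run would then be started with something like `collect_tweets(["depression"], "tweets.csv")`.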

The process was:

You can check the result of the text loader in the Project Cheat Sheet, and the code is available here. This is a sample of the dataset:

Models

Models for Images:

Five types of models were trained:

- Deep CNN

- ResNet50

- BiT-L(ResNet50x1)

- BiT-L(ResNet50x3)

- BiT-L(ResNet101x1): This was the best model in terms of accuracy (0.82), precision, recall, and F1-score. Hyperparameters: SGD (stochastic gradient descent) as the optimizer, 50 epochs, a variable learning rate, and an image size of 128×128 pixels.
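The exact form of the variable learning rate is not given here. One common choice for SGD fine-tuning is a piecewise-constant (step) decay, sketched below over 50 epochs. The base rate, the epoch boundaries, and the decay factor are assumptions chosen purely for illustration, not the values used in this project.

```python
def piecewise_lr(epoch, base_lr=0.003, boundaries=(15, 30, 45), factor=0.1):
    """Return the learning rate for a 0-based epoch index.

    The rate is multiplied by `factor` once for every boundary
    that the epoch has reached or passed.
    """
    drops = sum(1 for b in boundaries if epoch >= b)
    return base_lr * (factor ** drops)


# Learning rate for each of the 50 training epochs.
schedule = [piecewise_lr(epoch) for epoch in range(50)]
```

With Keras, a function like this could be wrapped for the `LearningRateScheduler` callback, for example as `lambda epoch, lr: piecewise_lr(epoch)`.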

Models for Texts:

Two types of models were trained:

- LSTM

- GloVe+BiLSTM: This was the best model in terms of accuracy (0.7), precision, recall, and F1-score.
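For reference, the four metrics used to compare the models can be computed from the counts of a binary confusion matrix as sketched below. The counts in the example are made up for illustration and are not results from this project.

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and F1 from confusion counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Made-up counts, with "Depressed" treated as the positive class.
metrics = binary_metrics(tp=45, fp=5, fn=15, tn=35)
```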

For the best models I chose, you can find three notebooks:

- For images: this notebook tests the BiT-L(ResNet101x1) model, the best model for classifying images.

- For texts: this notebook tests the GloVe+BiLSTM model, the best model for classifying texts.

- For integrating models: this notebook tests the integration of BiT-L(ResNet101x1) and GloVe+BiLSTM into a multimodal model.
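How the two models are combined is documented in the integration notebook, not here. As one possible approach, the sketch below shows a simple late fusion that averages the "Depressed" probabilities produced by an image model and a text model. The function name, the equal weighting, and the 0.5 threshold are all assumptions for illustration.

```python
def late_fusion(p_image, p_text, image_weight=0.5, threshold=0.5):
    """Combine two 'Depressed' probabilities with a weighted average.

    Returns the fused probability and the resulting label.
    """
    if not 0.0 <= image_weight <= 1.0:
        raise ValueError("image_weight must be between 0 and 1")
    fused = image_weight * p_image + (1.0 - image_weight) * p_text
    label = "Depressed" if fused >= threshold else "Not Depressed"
    return fused, label


# Hypothetical model outputs for one user's image and text.
score, label = late_fusion(p_image=0.8, p_text=0.4)
```

Shifting `image_weight` toward 1.0 would let the image model dominate, which may be reasonable when one modality is markedly more accurate than the other.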

You can find the saved weights for the best image model and the best text model here.

Software and technologies

- Python (version 3.8.3)

- Anaconda (Distribution 2020.02)

- TensorFlow

- Keras

- Jupyter Notebook

- PyCharm (Community Edition)

Hardware

I used two main machines to implement this solution: a local machine for refactoring code, testing models, and research, and a virtual machine (VM) on Google Cloud Platform (GCP) for running models and code that are heavy in computation and time. Their specifications are as follows:

- Local Machine: Lenovo E330

  - Operating System: Kali Linux 2020.2

  - CPU: Intel Core i5-3230M 2.6 GHz

  - RAM: 8 GB DDR3

  - Disk: 320 GB HDD

- Virtual Machine on GCP: mastermind

  I used two configurations:

  - For tasks that are not heavy in computation or time:

    - Operating System: Ubuntu 19.10

    - Machine Type: n1-highmem-8

    - CPU: 8 vCPUs

    - RAM: 16 GB

    - Disk: 100 GB SSD

  - For training models:

    - Operating System: Ubuntu 19.10

    - Machine Type: n1-highmem-8

    - CPU: 8 vCPUs

    - RAM: 52 GB

    - Disk: 100 GB SSD

Finally, I hope you enjoyed my work and got inspired to help people get noticed 🧐. If you want more details, you can find my report here.

Please contact me for more details; I would be really happy to share more information 😋.