- Overview

- Song Dataset

- Log Dataset

- Schema

- Project Files

- ETL Pipeline

- Environment

- How to run

- Reference

In this project, we apply data modeling on Amazon Redshift (a Postgres-compatible data warehouse) and build an ETL pipeline using Python. A startup wants to analyze the data it has been collecting on songs and user activity in its new music streaming app. The data is currently collected in JSON format, and the analytics team is particularly interested in understanding which songs users are listening to.

The song dataset is a subset of the Million Song Dataset.

Sample Record :

{"num_songs": 1, "artist_id": "ARJIE2Y1187B994AB7", "artist_latitude": null, "artist_longitude": null, "artist_location": "", "artist_name": "Line Renaud", "song_id": "SOUPIRU12A6D4FA1E1", "title": "Der Kleine Dompfaff", "duration": 152.92036, "year": 0}

The log dataset is generated by an event simulator.

Sample Record :

{"artist": null, "auth": "Logged In", "firstName": "Walter", "gender": "M", "itemInSession": 0, "lastName": "Frye", "length": null, "level": "free", "location": "San Francisco-Oakland-Hayward, CA", "method": "GET","page": "Home", "registration": 1540919166796.0, "sessionId": 38, "song": null, "status": 200, "ts": 1541105830796, "userAgent": "\"Mozilla\/5.0 (Macintosh; Intel Mac OS X 10_9_4) AppleWebKit\/537.36 (KHTML, like Gecko) Chrome\/36.0.1985.143 Safari\/537.36\"", "userId": "39"}

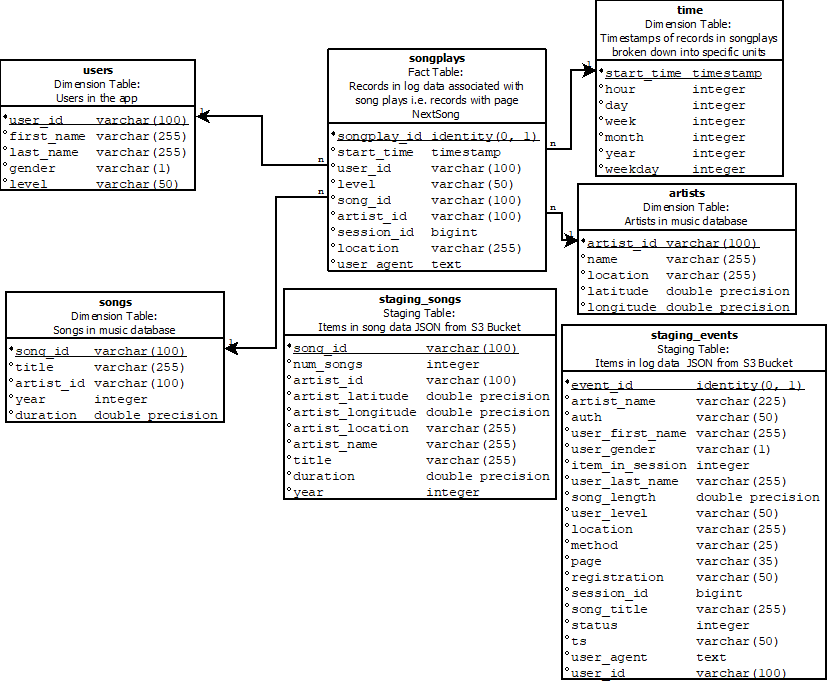

A star schema is used to optimize queries on song plays.

songplays is the fact table and contains foreign keys to the four dimension tables:

- start_time
- user_id
- song_id
- artist_id

There are two staging tables:

- a staging table for the event (log) dataset
- a staging table for the song dataset

songplays - records in the log data associated with song plays, i.e. records where page is NextSong

songplay_id, start_time, user_id, level, song_id, artist_id, session_id, location, user_agent

users - users in the app

user_id, first_name, last_name, gender, level

songs - songs in the music database

song_id, title, artist_id, year, duration

artists - artists in the music database

artist_id, name, location, latitude, longitude

time - timestamps of records in songplays broken down into specific units

start_time, hour, day, week, month, year, weekday
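As a rough illustration of how the time columns relate to the log data, the sketch below keeps only NextSong records and breaks the millisecond `ts` field down into the units listed above. It is not taken from etl.py, which does this work in SQL on Redshift; the helper names `is_song_play` and `expand_timestamp` are hypothetical, the second log line is made up for the example, and treating `ts` as UTC is an assumption.

```python
import json
from datetime import datetime, timezone


def is_song_play(record: dict) -> bool:
    """Return True when a log record represents a song play."""
    return record.get("page") == "NextSong"


def expand_timestamp(ts_ms: int) -> dict:
    """Break a millisecond epoch timestamp into the columns of the time table."""
    start_time = datetime.fromtimestamp(ts_ms / 1000, tz=timezone.utc)
    return {
        "start_time": start_time,
        "hour": start_time.hour,
        "day": start_time.day,
        "week": start_time.isocalendar()[1],  # ISO week number
        "month": start_time.month,
        "year": start_time.year,
        "weekday": start_time.weekday(),  # Python convention: Monday is 0
    }


# Abbreviated log lines. The first reuses the ts of the sample record;
# the second is a made-up NextSong event for illustration only.
raw_lines = [
    '{"page": "Home", "song": null, "userId": "39", "ts": 1541105830796}',
    '{"page": "NextSong", "song": "Example Song", "userId": "39", "ts": 1541106106796}',
]

records = [json.loads(line) for line in raw_lines]
time_rows = [expand_timestamp(r["ts"]) for r in records if is_song_play(r)]

for row in time_rows:
    print(row)
```

Weekday numbering differs between tools, so a SQL implementation may produce a different value for the same day than Python's `weekday()`.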

images\ -> contains images for README.md

sql_queries.py -> contains the SQL queries for dropping, creating and loading the fact and dimension tables, grouped into DROP, CREATE, COPY and INSERT statements

create_tables.py -> contains code for setting up the database. Running this file connects to Redshift, drops the old tables (if they exist) and creates the new fact and dimension tables.

etl.py -> contains code for executing the queries that load JSON data from the S3 bucket into the staging tables on Redshift and then insert the data into the analytics tables on Redshift

dwh.cfg -> configuration file that contains information about Redshift, IAM and S3. File format for dwh.cfg :

[AWS]

KEY=<Your Access key ID>

SECRET=<Your Secret access key>

[DWH]

DWH_CLUSTER_TYPE=multi-node

DWH_NUM_NODES=4

DWH_NODE_TYPE=dc2.large

DWH_IAM_ROLE_NAME=dwhRole

DWH_CLUSTER_IDENTIFIER=dwhCluster

DWH_DB=dwh

DWH_DB_USER=dwhuser

DWH_DB_PASSWORD=Passw0rd

DWH_PORT=5439

[CLUSTER]

HOST=<REDSHIFT ENDPOINT>

DB_NAME=dwh

DB_USER=dwhuser

DB_PASSWORD=Passw0rd

DB_PORT=5439

[IAM_ROLE]

ARN=<IAM Role arn>

[S3]

LOG_DATA='s3://udacity-dend/log_data'

LOG_JSONPATH='s3://udacity-dend/log_json_path.json'

SONG_DATA='s3://udacity-dend/song_data'
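A minimal sketch of how a file in this format could be read with Python's standard `configparser` module and turned into a connection string for psycopg2. The helper name `build_dsn` and the host value in the sample text are placeholders chosen for this example, not values from the project; in practice the parser would read `dwh.cfg` from disk with `config.read("dwh.cfg")`.

```python
import configparser

# Inline stand-in for dwh.cfg so the example runs on its own.
# The HOST value is a placeholder, not a real endpoint.
SAMPLE_CFG = """
[CLUSTER]
HOST=example-endpoint.redshift.amazonaws.com
DB_NAME=dwh
DB_USER=dwhuser
DB_PASSWORD=Passw0rd
DB_PORT=5439

[S3]
LOG_DATA='s3://udacity-dend/log_data'
"""


def build_dsn(config: configparser.ConfigParser) -> str:
    """Build a libpq-style connection string from the [CLUSTER] section."""
    c = config["CLUSTER"]
    return "host={} dbname={} user={} password={} port={}".format(
        c["HOST"], c["DB_NAME"], c["DB_USER"], c["DB_PASSWORD"], c["DB_PORT"]
    )


# interpolation=None keeps a literal '%' in a password from being
# treated as configparser interpolation syntax.
config = configparser.ConfigParser(interpolation=None)
config.read_string(SAMPLE_CFG)

dsn = build_dsn(config)
port = config.getint("CLUSTER", "DB_PORT")

# configparser does not strip quotes, so the [S3] values keep them.
log_data_quoted = config.get("S3", "LOG_DATA")
log_data_plain = log_data_quoted.strip("'")

print(dsn)
print(log_data_quoted, log_data_plain)

# With psycopg2 installed and a running cluster, the connection would be:
# conn = psycopg2.connect(dsn)
```

Because the quotes around the `[S3]` values survive parsing, they can be dropped straight into a SQL string literal, while the unquoted `ARN` value needs quoting by the caller.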

- Supply the configuration for the AWS cluster

- Write configuration for boto3 (AWS SDK for Python)

- Launch Redshift cluster and create an IAM role that has read access to S3.

- Add Redshift database and IAM role info to `dwh.cfg`.

- Launch database connectivity configuration

- Run create_tables.py and check the table schemas in your Redshift database

- Delete the cluster, roles and assigned permissions

Python 3.6 or above

psycopg2 - PostgreSQL database adapter for Python

The data sources are provided in an S3 bucket, so you only need to run the project against an AWS Redshift cluster.

python create_tables.py

python etl.py
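When etl.py runs, the staging load is a Redshift COPY from S3. The sketch below shows one plausible shape for such a statement, built from the values in dwh.cfg. The function name `build_copy_statement`, the table names `staging_events` and `staging_songs`, and the account number in the role ARN are assumptions for illustration; the actual statements live in sql_queries.py and may differ.

```python
def build_copy_statement(table: str, s3_path: str, iam_role_arn: str,
                         json_option: str = "auto") -> str:
    """Return a Redshift COPY statement that loads JSON files from S3.

    json_option is either 'auto' or the S3 path of a JSONPaths file.
    All path arguments are expected without surrounding quotes.
    """
    return (
        f"COPY {table}\n"
        f"FROM '{s3_path}'\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        f"FORMAT AS JSON '{json_option}';"
    )


# Placeholder ARN; the real value comes from the [IAM_ROLE] section of dwh.cfg.
role_arn = "arn:aws:iam::123456789012:role/dwhRole"

# Log data uses the JSONPaths file; song data relies on automatic field mapping.
copy_events = build_copy_statement(
    "staging_events",
    "s3://udacity-dend/log_data",
    role_arn,
    json_option="s3://udacity-dend/log_json_path.json",
)
copy_songs = build_copy_statement(
    "staging_songs", "s3://udacity-dend/song_data", role_arn
)

print(copy_events)
print(copy_songs)
```

If the cluster and the bucket are in different AWS regions, COPY also needs a `REGION` clause, which this sketch leaves out.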