AniPortrait: Audio-Driven Synthesis of Photorealistic Portrait Animations

Huawei Wei, Zejun Yang, Zhisheng Wang

Tencent Games Zhiji, Tencent

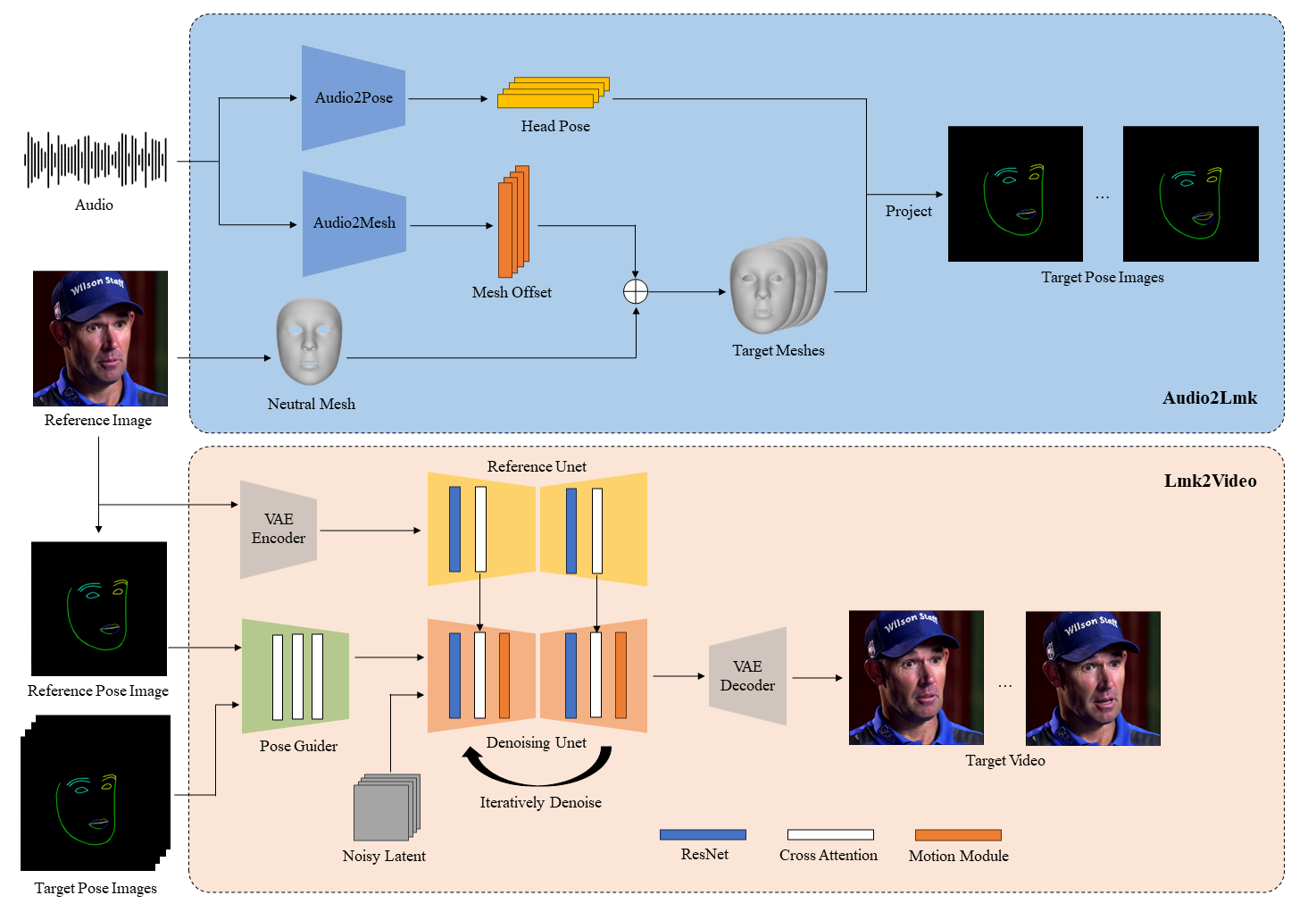

Here we propose AniPortrait, a novel framework for generating high-quality animation driven by audio and a reference portrait image. You can also provide a video to achieve face reenactment.

This is a fork of the original project, located at https://github.com/Zejun-Yang/AniPortrait. I've added a Gradio UI for the Vid2Vid pipeline.

The changes & additions to the original project are as follows:

- Updated requirements to include Gradio install

- The Gradio UI allows for reference image upload, reference video upload, frame counting with automatic length field populating, and customizations for height, width, length, cfg, seed, and steps.

- Added app.py in the root dir, which is what you will launch for the Gradio UI

- Added vid2vid_gr.py, a modified version of vid2vid.py, in the scripts dir. The vid2vid pipeline needed a slight modification, but I didn't want to break the existing script for users who want the command-line inference options. The primary change is how generated videos are saved. Previously, each result was saved in a unique directory under a unique file name based on date, time, size, seed, etc., which made it difficult for Gradio to display the result. vid2vid_gr.py instead saves the result to the same path every time, so each run overwrites the previous one; download the result if you want to keep it.
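The difference between the two saving strategies can be sketched as follows. This is an illustration only: the function names, the `./output` directory, and the file-name pattern are assumptions made for the example, not the code in vid2vid.py or vid2vid_gr.py.

```python
from datetime import datetime
from pathlib import Path


def unique_output_path(base_dir, width, height, seed, now=None):
    """Timestamped path in the spirit of the command-line script (pattern is assumed)."""
    now = now or datetime.now()
    run_dir = Path(base_dir) / now.strftime("%Y%m%d") / now.strftime("%H%M")
    return run_dir / f"result_{width}x{height}_seed{seed}.mp4"


def fixed_output_path(base_dir):
    """Constant path that a UI can always display; each run overwrites the last."""
    return Path(base_dir) / "result.mp4"


if __name__ == "__main__":
    # The first path changes from run to run, the second never does.
    print(unique_output_path("./output", 512, 512, 42))
    print(fixed_output_path("./output"))
```

A fixed path keeps the UI simple because the video component only ever needs to point at one file, at the cost of losing earlier results.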

We recommend Python >=3.10 and CUDA 11.7. Then, build the environment as follows:

Create a conda environment:

conda create -n AniPortrait python=3.10

conda activate AniPortrait

pip install -r requirements.txt

All the weights should be placed under the ./pretrained_model directory. You can download weights manually as follows:

- Download our trained models, which include five parts: denoising_unet.pth, reference_unet.pth, pose_guider.pth, motion_module.pth and audio2mesh.pt.

- Download the pretrained weights of the base models and other components: stable-diffusion-v1-5, sd-vae-ft-mse, image_encoder and wav2vec2-base-960h.

Finally, these weights should be organized as follows:

./pretrained_model/

|-- image_encoder

| |-- config.json

| `-- pytorch_model.bin

|-- sd-vae-ft-mse

| |-- config.json

| |-- diffusion_pytorch_model.bin

| `-- diffusion_pytorch_model.safetensors

|-- stable-diffusion-v1-5

| |-- feature_extractor

| | `-- preprocessor_config.json

| |-- model_index.json

| |-- unet

| | |-- config.json

| | `-- diffusion_pytorch_model.bin

| `-- v1-inference.yaml

|-- wav2vec2-base-960h

| |-- config.json

| |-- feature_extractor_config.json

| |-- preprocessor_config.json

| |-- pytorch_model.bin

| |-- README.md

| |-- special_tokens_map.json

| |-- tokenizer_config.json

| `-- vocab.json

|-- audio2mesh.pt

|-- denoising_unet.pth

|-- motion_module.pth

|-- pose_guider.pth

`-- reference_unet.pth

Note: If you have already downloaded some of the pretrained models, such as StableDiffusion V1.5, you can specify their paths in the config file (e.g. ./configs/prompts/animation.yaml).
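Before launching anything, it can help to confirm that the weights directory matches the layout above. The following is a minimal sketch: the file list is copied from the directory tree, while the `missing_weights` helper and its default root are names chosen for this example, not part of the project.

```python
from pathlib import Path

# Relative paths copied from the directory tree above.
EXPECTED_FILES = [
    "image_encoder/config.json",
    "image_encoder/pytorch_model.bin",
    "sd-vae-ft-mse/config.json",
    "sd-vae-ft-mse/diffusion_pytorch_model.bin",
    "sd-vae-ft-mse/diffusion_pytorch_model.safetensors",
    "stable-diffusion-v1-5/feature_extractor/preprocessor_config.json",
    "stable-diffusion-v1-5/model_index.json",
    "stable-diffusion-v1-5/unet/config.json",
    "stable-diffusion-v1-5/unet/diffusion_pytorch_model.bin",
    "stable-diffusion-v1-5/v1-inference.yaml",
    "wav2vec2-base-960h/config.json",
    "wav2vec2-base-960h/feature_extractor_config.json",
    "wav2vec2-base-960h/preprocessor_config.json",
    "wav2vec2-base-960h/pytorch_model.bin",
    "wav2vec2-base-960h/README.md",
    "wav2vec2-base-960h/special_tokens_map.json",
    "wav2vec2-base-960h/tokenizer_config.json",
    "wav2vec2-base-960h/vocab.json",
    "audio2mesh.pt",
    "denoising_unet.pth",
    "motion_module.pth",
    "pose_guider.pth",
    "reference_unet.pth",
]


def missing_weights(root="./pretrained_model"):
    """Return the expected files that are not present under root."""
    root = Path(root)
    return [rel for rel in EXPECTED_FILES if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_weights()
    if missing:
        print("Missing files:")
        for rel in missing:
            print(f"  {rel}")
    else:
        print("All expected weight files are present.")
```

If some models live elsewhere and are referenced through the config file instead, the corresponding entries will be reported as missing here even though inference may still work.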

From the AniPortrait root directory, activate the conda environment if it is not already active:

conda activate AniPortrait

Start the Gradio UI app:

python app.py

Demo videos: cxk.mp4, solo.mp4, Aragaki.mp4, num18.mp4, jijin.mp4, kara.mp4, lyl.mp4, zl.mp4

Here are the CLI commands for running the inference scripts:

python -m scripts.pose2vid --config ./configs/prompts/animation.yaml -W 512 -H 512 -L 64

You can refer to the format of animation.yaml to add your own reference images or pose videos. To convert a raw video into a pose video (keypoint sequence), run the following command:

python -m scripts.vid2pose --video_path pose_video_path.mp4

For face reenactment, add source face videos and reference images in animation_facereenac.yaml, then run:

python -m scripts.vid2vid --config ./configs/prompts/animation_facereenac.yaml -W 512 -H 512 -L 64

For audio-driven generation, add audio files and reference images in animation_audio.yaml, then run:

python -m scripts.audio2vid --config ./configs/prompts/animation_audio.yaml -W 512 -H 512 -L 64
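The three inference commands share the same flags, so they can be driven from one small wrapper. This is a sketch under assumptions: the script modules, config paths, and the -W/-H/-L flags come from the commands in this README, while `build_command` and `run_inference` are hypothetical helpers written for this example.

```python
import subprocess
import sys

# Script module -> config file, both taken from the commands in this README.
INFERENCE_SCRIPTS = {
    "pose2vid": "./configs/prompts/animation.yaml",
    "vid2vid": "./configs/prompts/animation_facereenac.yaml",
    "audio2vid": "./configs/prompts/animation_audio.yaml",
}


def build_command(mode, width=512, height=512, length=64):
    """Return the argv list for one inference script."""
    if mode not in INFERENCE_SCRIPTS:
        raise ValueError(f"unknown mode: {mode!r}")
    return [
        sys.executable, "-m", f"scripts.{mode}",
        "--config", INFERENCE_SCRIPTS[mode],
        "-W", str(width), "-H", str(height), "-L", str(length),
    ]


def run_inference(mode, **kwargs):
    """Run from the repository root; raises CalledProcessError on failure."""
    subprocess.run(build_command(mode, **kwargs), check=True)


if __name__ == "__main__":
    # Print the commands instead of running them, so this is safe to try anywhere.
    for mode in INFERENCE_SCRIPTS:
        print(" ".join(build_command(mode)))
```

Passing the arguments as a list rather than a single shell string avoids quoting problems if a config path ever contains spaces.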

You can use this command to generate a pose_temp.npy for head pose control:

python -m scripts.generate_ref_pose --ref_video ./configs/inference/head_pose_temp/pose_ref_video.mp4 --save_path ./configs/inference/head_pose_temp/pose.npy

Extract keypoints from raw videos and write the training JSON file (here is an example of processing VFHQ):

python -m scripts.preprocess_dataset --input_dir VFHQ_PATH --output_dir SAVE_PATH --training_json JSON_PATH

Update lines in the training config file:

data:
  json_path: JSON_PATH

Run command:

accelerate launch train_stage_1.py --config ./configs/train/stage1.yaml

Put the pretrained motion module weights mm_sd_v15_v2.ckpt (download link) under ./pretrained_weights.

Specify the stage1 training weights in the config file stage2.yaml, for example:

stage1_ckpt_dir: './exp_output/stage1'

stage1_ckpt_step: 30000

Run command:

accelerate launch train_stage_2.py --config ./configs/train/stage2.yaml

We first thank the authors of EMO. Additionally, we would like to thank the contributors to the Moore-AnimateAnyone, magic-animate, animatediff and Open-AnimateAnyone repositories for their open research and exploration.

@misc{wei2024aniportrait,

title={AniPortrait: Audio-Driven Synthesis of Photorealistic Portrait Animations},

author={Huawei Wei and Zejun Yang and Zhisheng Wang},

year={2024},

eprint={2403.17694},

archivePrefix={arXiv},

primaryClass={cs.CV}

}