RSE: Relational Sentence Embedding for Flexible Semantic Matching

This repository contains the code for our paper: Relational Sentence Embedding for Flexible Semantic Matching.

- Feb. 21, 2023: We released the training demo for all tasks.

- Feb. 20, 2023: We released the complete checkpoints and their evaluation scripts on a wide range of tasks.

- Feb. 13, 2023: We released our first checkpoint and inference demo. Check it out.

- Dec. 17, 2022: Our paper is available online: RSE Paper.

Outline

- RSE: Relational Sentence Embedding for Flexible Semantic Matching

- Overview

- Getting Started

- Model List

- Evaluation-Only

- Training, Inference, and Evaluation

- Citation

Overview

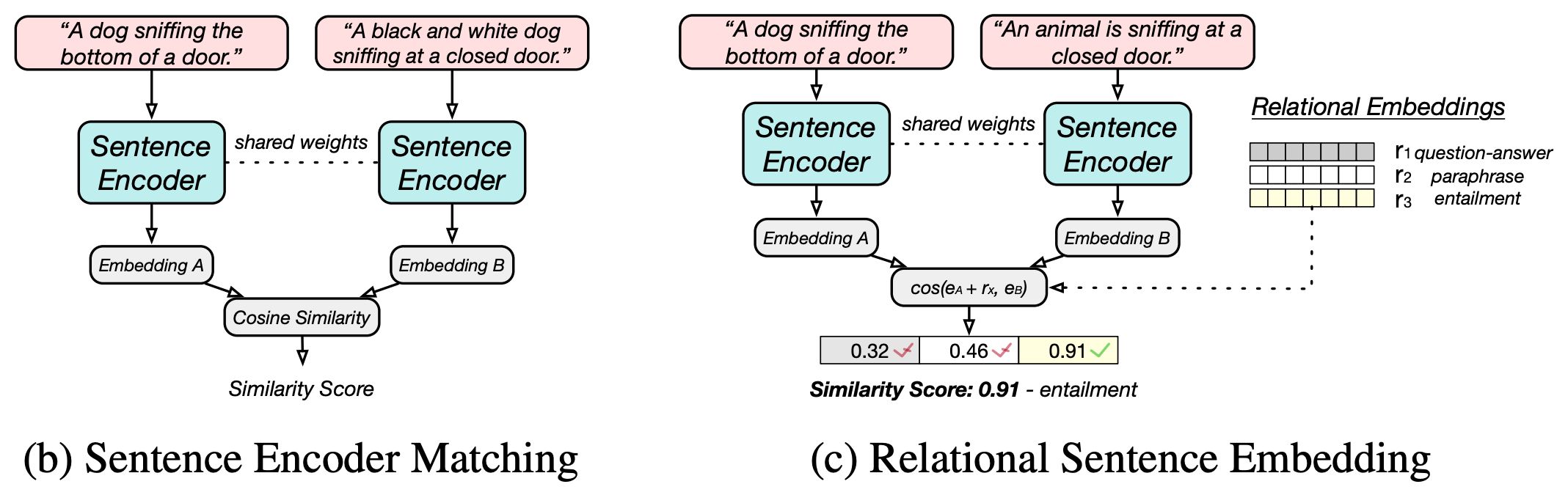

We propose a new sentence embedding paradigm to further explore the potential of sentence embeddings. Previous sentence embedding methods learn one vector representation for each sentence and incorporate no relation information. In RSE, the relations between sentences are explicitly modelled. During inference, we can obtain a sentence similarity score for each relation type. These relational similarity scores can be used flexibly in sentence embedding applications such as clustering, ranking, similarity modeling, and retrieval.

We believe the Relational Sentence Embedding has great potential in developing new models as well as applications.

Getting Started

Step 1: Environment Setup

Step-by-step Environment Setup: We provide a step-by-step guide for setting up the environment.

Step 2: Inference Demo

After the environment is set up, we can proceed with the inference demo. The trained model will be downloaded automatically from Huggingface.

bash scripts/demo_inference_local.sh


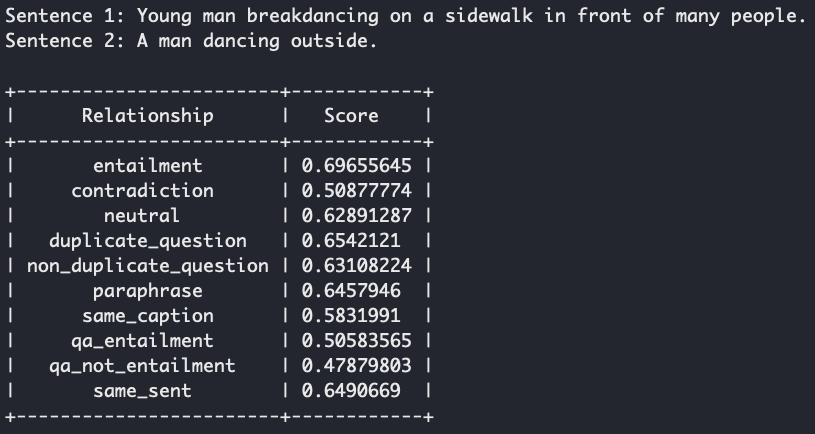

Analysis: The highest relational similarity score between the two sentences above is for entailment. You also get a score for every other relation, which can be used flexibly for various tasks.


To choose other models:

--model_name_or_path binwang/RSE-BERT-base-10-relations
--model_name_or_path binwang/RSE-BERT-large-10-relations
--model_name_or_path binwang/RSE-RoBERTa-base-10-relations
--model_name_or_path binwang/RSE-RoBERTa-large-10-relations
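The analysis of the inference demo (picking the relation with the highest similarity score) can be sketched in a few lines of Python. This is only an illustration: the score values and the helper names `top_relation` and `rank_relations` are hypothetical and are not part of the RSE code base or the demo output.

```python
# Hypothetical relational similarity scores for one sentence pair.
# These numbers are made up for illustration; they are NOT demo output.
relation_scores = {
    "entailment": 0.82,
    "duplicate_question": 0.41,
    "paraphrase": 0.57,
}


def top_relation(scores):
    """Return the (relation, score) pair with the highest similarity."""
    return max(scores.items(), key=lambda item: item[1])


def rank_relations(scores):
    """Return all (relation, score) pairs, most similar first."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


best_name, best_score = top_relation(relation_scores)
print(f"best relation: {best_name} ({best_score:.2f})")
for name, score in rank_relations(relation_scores):
    print(f"{name:>20s}  {score:.2f}")
```

Keeping the full ranking, rather than only the top relation, is what allows the same scores to be reused for different downstream tasks.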

Model List

Here are our provided model checkpoints, all available on Huggingface.

Evaluation-Only

We include the evaluation with (1) STS tasks, (2) Transfer tasks, (3) EvalRank, and (4) USEB tasks.

STS Tasks

- Download STS datasets first

cd rse_src/SentEval/data/downstream/

bash download_RSE_SentEval_data.sh

- To reproduce the evaluation on STS (using RSE-BERT-base-STS as an example; run from the main folder):

bash scripts/demo_inference_STS.sh

This is equivalent to:

accelerate launch --config_file accelerate_config.yaml --num_cpu_threads_per_process 10 \
    rse_src/inference_eval.py \
    --model_name_or_path binwang/RSE-BERT-base-STS \
    --mode RSE \
    --rel_types entailment duplicate_question \
    --sim_func 1.0 0.5 \
    --cache_dir scripts/model_cache/cache \
    --pooler_type cls \
    --max_seq_length 32 \
    --metric_for_eval STS

The expected results:

+-------+-------+-------+-------+-------+--------------+-----------------+-------+
| STS12 | STS13 | STS14 | STS15 | STS16 | STSBenchmark | SICKRelatedness |  Avg. |
+-------+-------+-------+-------+-------+--------------+-----------------+-------+
| 76.27 | 84.43 | 80.60 | 86.03 | 81.86 |    84.34     |      81.73      | 82.18 |
+-------+-------+-------+-------+-------+--------------+-----------------+-------+
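As a quick sanity check when reproducing these numbers, the Avg. column can be recomputed as the unweighted mean of the seven task scores. The snippet below is a small sketch that uses the values from the table above; the helper name `average` is our own and is not taken from the evaluation code.

```python
# Per-task STS scores copied from the expected-results table above.
sts_scores = {
    "STS12": 76.27,
    "STS13": 84.43,
    "STS14": 80.60,
    "STS15": 86.03,
    "STS16": 81.86,
    "STSBenchmark": 84.34,
    "SICKRelatedness": 81.73,
}


def average(scores):
    """Unweighted mean over all tasks."""
    return sum(scores.values()) / len(scores)


avg = average(sts_scores)
print(f"Avg. = {avg:.2f}")  # Avg. = 82.18
```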

Explanation of the arguments of the evaluation code (in scripts/demo_inference_STS.sh):

--model_name_or_path: The model to be loaded for evaluation. We provide a series of models and their performance comparison.

--rel_types: The relations used by the current model. They should match the number and order of the relations used during training. In the above example, two relations are used in training: entailment and duplicate_question.

--sim_func: The weight of each relation when computing the final score. As there are multiple relational scores, this argument gives the weights of the weighted sum that produces the final score between two sentences. It can be flexibly adjusted for different applications.

--metric_for_eval: The evaluation task to run. The commands in this README use STS, USEB, transfer_tasks, and evalrank.
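The interaction between --rel_types and --sim_func can be illustrated with a short Python sketch of a weighted sum. This follows only the description above and is not the repository's implementation; the function name `combine_relation_scores` and the score values are hypothetical.

```python
def combine_relation_scores(rel_types, sim_func, scores):
    """Weighted sum of per-relation similarity scores.

    rel_types: relation names, in the order used during training
    sim_func:  one weight per relation, in the same order
    scores:    mapping from relation name to similarity score
    """
    if len(rel_types) != len(sim_func):
        raise ValueError("need exactly one weight per relation")
    return sum(weight * scores[rel] for rel, weight in zip(rel_types, sim_func))


# Same relations and weights as in the STS command above.
rel_types = ["entailment", "duplicate_question"]
sim_func = [1.0, 0.5]

# Hypothetical per-relation scores for one sentence pair (illustrative only).
scores = {"entailment": 0.8, "duplicate_question": 0.6}

final_score = combine_relation_scores(rel_types, sim_func, scores)
print(f"final score: {final_score:.2f}")

# A one-hot weight vector keeps a single relation and ignores the others.
only_entailment = combine_relation_scores(rel_types, [1.0, 0.0], scores)
print(f"entailment only: {only_entailment:.2f}")
```

Setting all weights but one to zero reduces the final score to a single relation's score, which is how a weight vector such as `0.0 0.0 0.0 0.0 0.0 1.0` selects only the last relation in the list.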

Performance of other models (simply change model_name_or_path argument):

+-------------------------------+----------+
| Model                         | Avg. STS |
+-------------------------------+----------+
| binwang/RSE-BERT-base-STS     |  82.18   |
+-------------------------------+----------+
| binwang/RSE-BERT-large-STS    |  83.42   |
+-------------------------------+----------+
| binwang/RSE-RoBERTa-base-STS  |  82.71   |
+-------------------------------+----------+
| binwang/RSE-RoBERTa-large-STS |  84.41   |
+-------------------------------+----------+

Performance on "STR" dataset:

+-------------------------------+----------+
| Model                         |   STR    |
+-------------------------------+----------+
| binwang/RSE-BERT-base-STS     |  80.69   |
+-------------------------------+----------+
| binwang/RSE-BERT-large-STS    |  81.98   |
+-------------------------------+----------+
| binwang/RSE-RoBERTa-base-STS  |  81.50   |
+-------------------------------+----------+
| binwang/RSE-RoBERTa-large-STS |  83.72   |
+-------------------------------+----------+

USEB Tasks

- Download USEB datasets

cd rse_src/useb/data/

bash download_USEB_data.sh

It will download and untar the datasets.

- To reproduce the evaluation on USEB (using RSE-BERT-base-USEB as an example; run from the main folder):

bash scripts/demo_inference_USEB.sh

This is equivalent to:

accelerate launch --config_file accelerate_config.yaml --num_cpu_threads_per_process 10 \
    rse_src/inference_eval.py \
    --model_name_or_path binwang/RSE-BERT-base-USEB \
    --mode RSE \
    --rel_types entailment duplicate_question paraphrase same_caption qa_entailment same_sent \
    --cache_dir scripts/model_cache/cache \
    --pooler_type cls \
    --max_seq_length 32 \
    --metric_for_eval USEB

The expected results:

+-----------+-------------+-------------+---------+-------+
| AskUbuntu | CQADupStack | TwitterPara | SciDocs |  Avg. |
+-----------+-------------+-------------+---------+-------+
|   54.8    |    13.7     |    75.2     |  71.0   | 53.7  |
+-----------+-------------+-------------+---------+-------+

--model_name_or_path: The model to be loaded for evaluation. We provide a series of models and their performance comparison.

Performance of other models (simply change model_name_or_path argument):

+--------------------------------+-----------+-------------+-------------+---------+-------+
| Model                          | AskUbuntu | CQADupStack | TwitterPara | SciDocs |  Avg. |
+--------------------------------+-----------+-------------+-------------+---------+-------+
| binwang/RSE-BERT-base-USEB     |   54.8    |    13.7     |    75.2     |  71.0   | 53.7  |
+--------------------------------+-----------+-------------+-------------+---------+-------+
| binwang/RSE-BERT-large-USEB    |   56.2    |    13.9     |    76.7     |  71.9   | 54.7  |
+--------------------------------+-----------+-------------+-------------+---------+-------+
| binwang/RSE-RoBERTa-base-USEB  |   56.2    |    13.3     |    74.8     |  69.7   | 53.5  |
+--------------------------------+-----------+-------------+-------------+---------+-------+
| binwang/RSE-RoBERTa-large-USEB |   58.0    |    15.2     |    77.5     |  72.4   | 55.8  |
+--------------------------------+-----------+-------------+-------------+---------+-------+

Transfer Tasks

- There is no need to download the data again if you already did so in the STS section.

- To reproduce the evaluation on Transfer Tasks (using RSE-BERT-base-Transfer as an example; run from the main folder):

bash scripts/demo_inference_Transfer.sh

This is equivalent to:

accelerate launch --config_file accelerate_config.yaml --num_cpu_threads_per_process 10 \
    rse_src/inference_eval.py \
    --model_name_or_path binwang/RSE-RoBERTa-large-Transfer \
    --mode RSE \
    --rel_types entailment paraphrase \
    --cache_dir scripts/model_cache/cache \
    --pooler_type cls \
    --max_seq_length 32 \
    --layer_aggregation 5 \
    --metric_for_eval transfer_tasks

The expected results:

+-------+-------+-------+-------+-------+-------+-------+-------+
|  MR   |  CR   | SUBJ  | MPQA  | SST2  | TREC  | MRPC  |  Avg. |
+-------+-------+-------+-------+-------+-------+-------+-------+
| 82.34 | 88.98 | 95.33 | 90.60 | 88.36 | 93.00 | 77.39 | 88.00 |
+-------+-------+-------+-------+-------+-------+-------+-------+

--model_name_or_path: The model to be loaded for evaluation. We provide a series of models and their performance comparison.

Performance of other models (simply change model_name_or_path argument):

+------------------------------------+-------+-------+-------+-------+-------+-------+-------+-------+
| Model                              |  MR   |  CR   | SUBJ  | MPQA  | SST2  | TREC  | MRPC  |  Avg. |
+------------------------------------+-------+-------+-------+-------+-------+-------+-------+-------+
| binwang/RSE-BERT-base-Transfer     | 82.34 | 88.98 | 95.33 | 90.60 | 88.36 | 93.00 | 77.39 | 88.00 |
+------------------------------------+-------+-------+-------+-------+-------+-------+-------+-------+
| binwang/RSE-BERT-large-Transfer    | 84.53 | 90.46 | 95.71 | 90.51 | 90.17 | 95.20 | 76.70 | 89.04 |
+------------------------------------+-------+-------+-------+-------+-------+-------+-------+-------+
| binwang/RSE-RoBERTa-base-Transfer  | 84.52 | 91.21 | 94.40 | 90.20 | 91.49 | 91.40 | 77.10 | 88.62 |
+------------------------------------+-------+-------+-------+-------+-------+-------+-------+-------+
| binwang/RSE-RoBERTa-large-Transfer | 86.28 | 91.21 | 95.18 | 91.16 | 91.43 | 95.40 | 78.20 | 89.84 |
+------------------------------------+-------+-------+-------+-------+-------+-------+-------+-------+

EvalRank Tasks

- To reproduce the evaluation on EvalRank Tasks (using RSE-BERT-base-USEB as an example; run from the main folder). We did not train a dedicated model for EvalRank, but the evaluation can still be performed. We simply use the same_sent relation as the relational score.

bash scripts/demo_inference_EvalRank.sh

This is equivalent to:

accelerate launch --config_file accelerate_config.yaml --num_cpu_threads_per_process 10 \
    rse_src/inference_eval.py \
    --model_name_or_path binwang/RSE-BERT-base-USEB \
    --mode RSE \
    --rel_types entailment duplicate_question paraphrase same_caption qa_entailment same_sent \
    --sim_func 0.0 0.0 0.0 0.0 0.0 1.0 \
    --cache_dir scripts/model_cache/cache \
    --pooler_type cls \
    --max_seq_length 32 \
    --metric_for_eval evalrank

The expected results:

+------------------------------------+-------+---------+---------+
| Model                              |  MRR  | Hits@1  | Hits@3  |
+------------------------------------+-------+---------+---------+
| binwang/RSE-BERT-base-USEB         | 68.61 |  47.90  |  87.47  |
+------------------------------------+-------+---------+---------+
| binwang/RSE-BERT-large-USEB        | 68.17 |  46.73  |  87.18  |
+------------------------------------+-------+---------+---------+
| binwang/RSE-RoBERTa-base-USEB      | 70.92 |  50.52  |  90.49  |
+------------------------------------+-------+---------+---------+
| binwang/RSE-RoBERTa-large-USEB     | 70.48 |  49.25  |  90.97  |
+------------------------------------+-------+---------+---------+
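For readers unfamiliar with the metrics in this table, the sketch below shows the standard definitions of MRR and Hits@k, computed from the 1-based rank at which the correct item is retrieved for each query. It is a generic illustration rather than the EvalRank implementation; the function names and the example ranks are hypothetical.

```python
def mean_reciprocal_rank(ranks):
    """Mean of 1/rank over all queries (ranks are 1-based)."""
    return sum(1.0 / rank for rank in ranks) / len(ranks)


def hits_at_k(ranks, k):
    """Fraction of queries whose correct item is ranked within the top k."""
    return sum(1 for rank in ranks if rank <= k) / len(ranks)


# Hypothetical ranks of the correct item for four queries (illustrative only).
ranks = [1, 2, 4, 1]

# Reported as percentages, as in the table above.
print(f"MRR    = {100 * mean_reciprocal_rank(ranks):.2f}")  # MRR    = 68.75
print(f"Hits@1 = {100 * hits_at_k(ranks, 1):.2f}")          # Hits@1 = 50.00
print(f"Hits@3 = {100 * hits_at_k(ranks, 3):.2f}")          # Hits@3 = 75.00
```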

Training, Inference, and Evaluation

Data Preparation

Please download all seven relational datasets, or only the ones you need, and place them in the './data' folder.

cd data/

bash download_relational_data.sh

Training - STS

Training the STS model with BERT-base-uncased takes around 1 hour on an RTX 3090:

# For BERT-base-uncased

bash scripts/demo_train_STS_bert_base.sh

# For BERT-large-uncased

bash scripts/demo_train_STS_bert_large.sh

# For RoBERTa-base

bash scripts/demo_train_STS_roberta_base.sh

# For RoBERTa-large

bash scripts/demo_train_STS_roberta_large.sh

This is equivalent to (for BERT-base-uncased):

accelerate launch --config_file accelerate_config.yaml --num_cpu_threads_per_process 10 \
    rse_src/main.py \
    --mode RSE \
    --add_neg 0 \
    --add_hard_neg \
    --output_dir scripts/model_cache \
    --metric_for_best_model STSBenchmark_unsup \
    --layer_aggregation 0 \
    --eval_every_k_steps 125 \
    --train_file data/mnli_full.json data/snli_full.json data/qqp_full.json data/paranmt_5m.json data/qnli_full.json data/wiki_drop.json data/flicker_full.json \
    --rel_types entailment duplicate_question \
    --rel_max_samples 330000 330000 \
    --sim_func 1.0 0.5 \
    --model_name_or_path bert-base-uncased \
    --num_train_epochs 3 \
    --per_device_train_batch_size 512 \
    --grad_cache_batch_size 256 \
    --learning_rate 5e-5 \
    --rel_lr 1e-2 \
    --cache_dir scripts/model_cache \
    --pooler_type cls \
    --temp 0.05 \
    --preprocessing_num_workers 8 \
    --max_seq_length 32 \
    --gradient_accumulation_steps 1 \
    --weight_decay 0.0 \
    --num_warmup_steps 0 \
    --seed 1234
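The command above pairs --per_device_train_batch_size 512 with --grad_cache_batch_size 256. Assuming (this README does not confirm it) that the second value is the sub-batch size of a gradient-caching scheme, in which a large batch is processed in smaller chunks to fit in GPU memory, the relationship between the two numbers can be sketched as follows. The helper `split_into_chunks` is hypothetical and is not taken from the training code.

```python
def split_into_chunks(batch, chunk_size):
    """Split a batch (any sequence) into consecutive chunks of at most chunk_size items."""
    return [batch[i:i + chunk_size] for i in range(0, len(batch), chunk_size)]


# Values taken from the training command; the interpretation is an assumption.
per_device_train_batch_size = 512
grad_cache_batch_size = 256

# Stand-in for one batch of training examples (indices only).
batch = list(range(per_device_train_batch_size))
chunks = split_into_chunks(batch, grad_cache_batch_size)

print(len(chunks), [len(chunk) for chunk in chunks])  # 2 [256, 256]
```

Under this assumption, lowering the chunk size would reduce peak memory per forward pass without changing the effective batch size of 512.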

Training - Transfer Tasks

# For BERT-base-uncased

bash scripts/demo_train_transfer_bert_base.sh

# For BERT-large-uncased

bash scripts/demo_train_transfer_bert_large.sh

# For RoBERTa-base

bash scripts/demo_train_transfer_roberta_base.sh

# For RoBERTa-large

bash scripts/demo_train_transfer_roberta_large.sh

Training - USEB Tasks

# For BERT-base-uncased

bash scripts/demo_train_USEB_bert_base.sh

# For BERT-large-uncased

bash scripts/demo_train_USEB_bert_large.sh

# For RoBERTa-base

bash scripts/demo_train_USEB_roberta_base.sh

# For RoBERTa-large

bash scripts/demo_train_USEB_roberta_large.sh

Citation

Please cite our paper if you find RSE useful in your work.

@article{wang2022rse,
  title={Relational Sentence Embedding for Flexible Semantic Matching},
  author={Wang, Bin and Li, Haizhou},
  journal={arXiv preprint arXiv:2212.08802},
  year={2022}
}

Please contact Bin Wang at bwang28c@gmail.com or raise an issue.