Objective - use various techniques to train and evaluate supervised learning models with imbalanced classes to identify the creditworthiness of credit applicants.

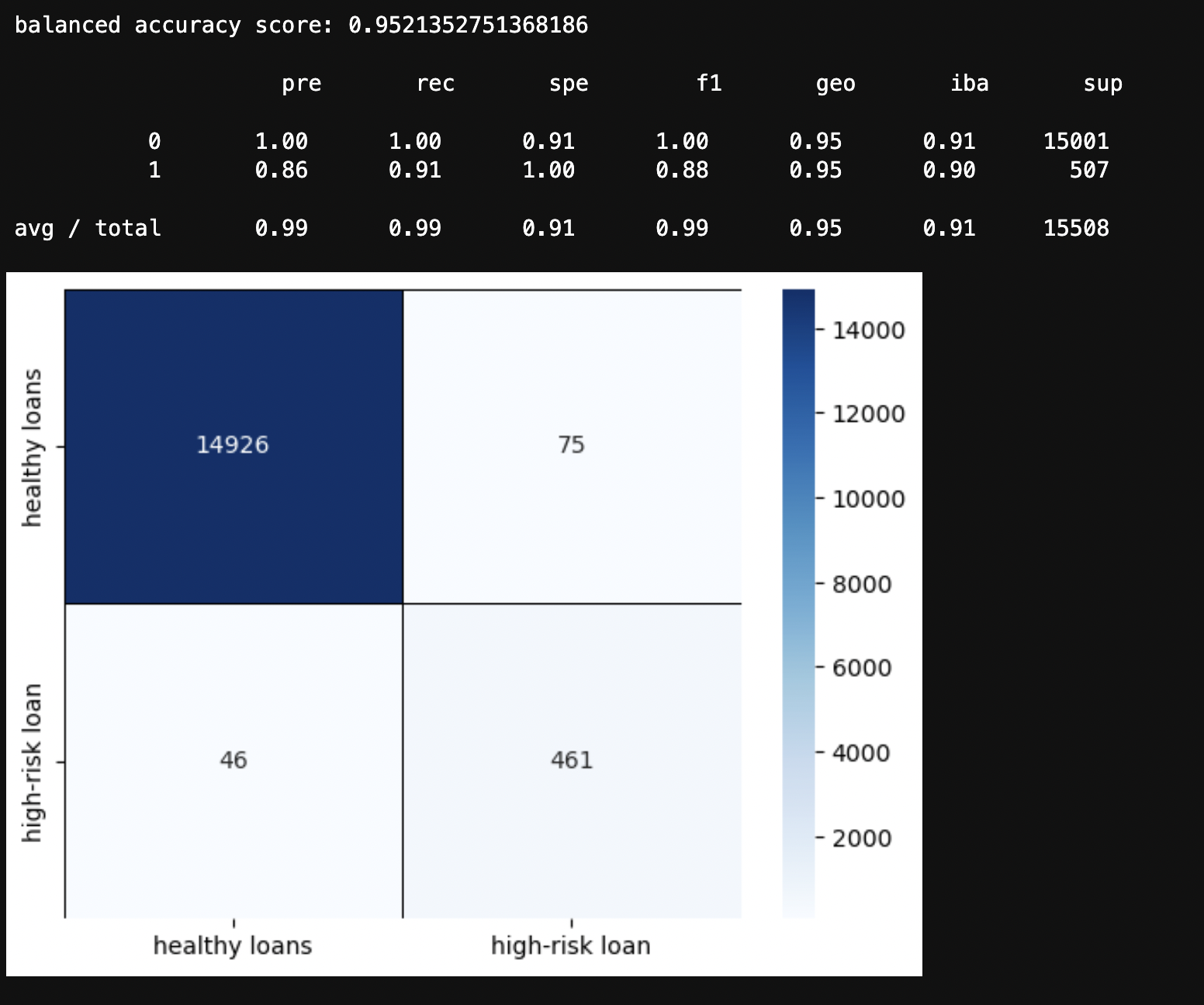

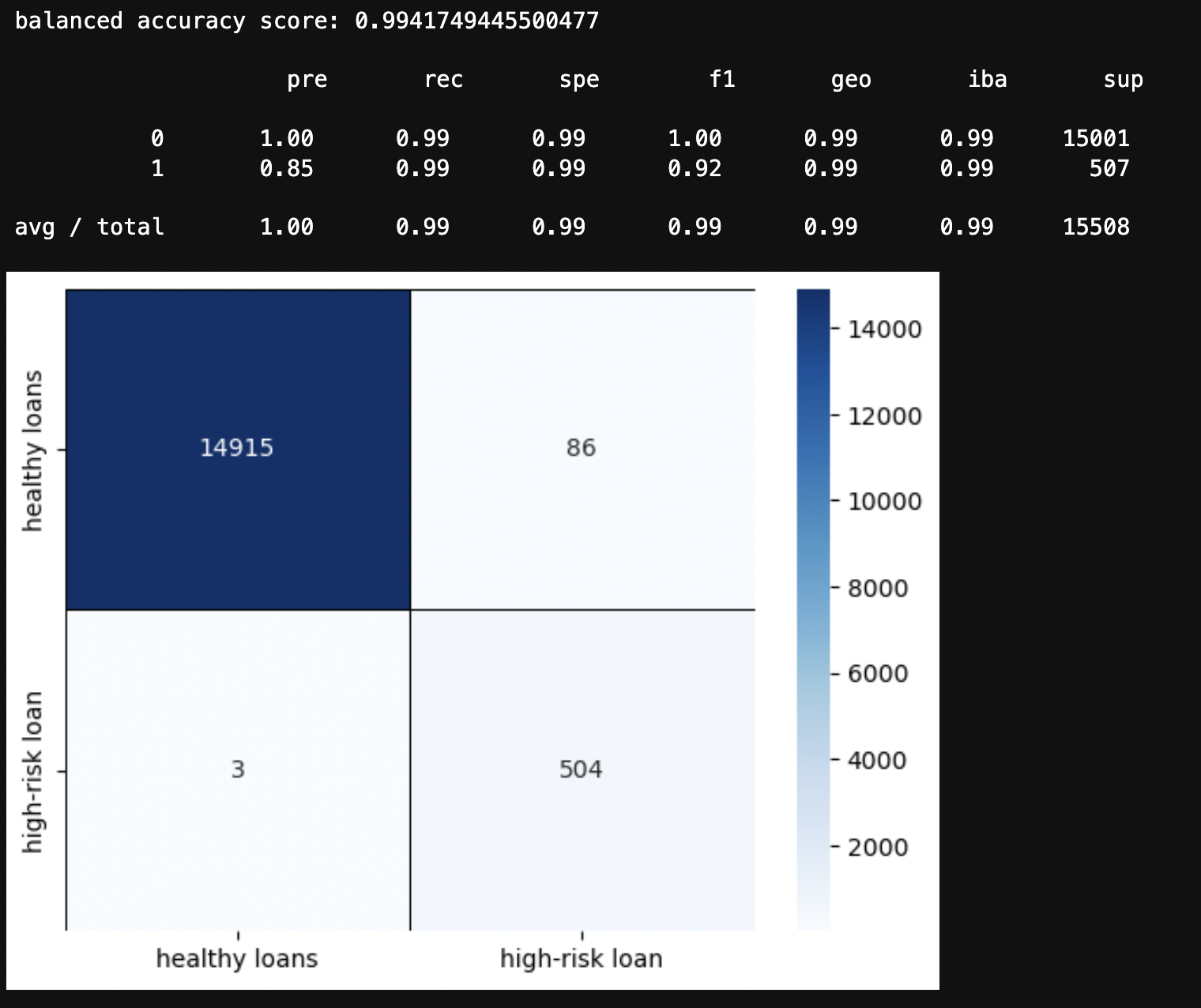

Scenario - Given a dataset of historical lending activity from a peer-to-peer lending services company, use your knowledge of the imbalanced-learn library with a logistic regression model to compare two versions (imbalanced vs resampled) of the dataset and build a model that can identify the creditworthiness of borrowers.

Product - a Jupyter notebook that, for both dataset versions, counts the 'target' classes, trains a logistic regression classifier, calculates the balanced accuracy score, generates a confusion matrix, and generates a classification report.
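The evaluation steps listed above can be sketched roughly as follows. This is a minimal illustration, not the notebook's code: it assumes synthetic data from scikit-learn's make_classification (about 97% class 0, 3% class 1) in place of the lending CSV, and it swaps in scikit-learn's classification_report for imbalanced-learn's classification_report_imbalanced so it runs with scikit-learn alone.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import balanced_accuracy_score, classification_report, confusion_matrix
from sklearn.model_selection import train_test_split

# Hypothetical stand-in for the lending data: mostly healthy loans (0), few high-risk (1).
X, y = make_classification(
    n_samples=5000, n_features=8, weights=[0.97, 0.03], random_state=1
)

# Count the 'target' classes, as the notebook does for each dataset version.
print(dict(zip(*np.unique(y, return_counts=True))))

# Stratify so the rare class appears in both splits in the same proportion.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=1
)

model = LogisticRegression(max_iter=1000, random_state=1)
model.fit(X_train, y_train)
y_pred = model.predict(X_test)

bal_acc = balanced_accuracy_score(y_test, y_pred)
cm = confusion_matrix(y_test, y_pred)
print(f"balanced accuracy: {bal_acc:.3f}")
print(cm)
print(classification_report(y_test, y_pred))
```

For the resampled version, the same fit-and-evaluate steps would be repeated on resampled training data while keeping the test split untouched.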

PCA,

Feature Scaling with StandardScaler,

train_test_split,

random undersampling,

random oversampling,

SMOTEENN resampling,

K-Means (KMeans) 2 and 3 cluster modeling,

k-nearest neighbors regression (KNeighborsRegressor) modeling,

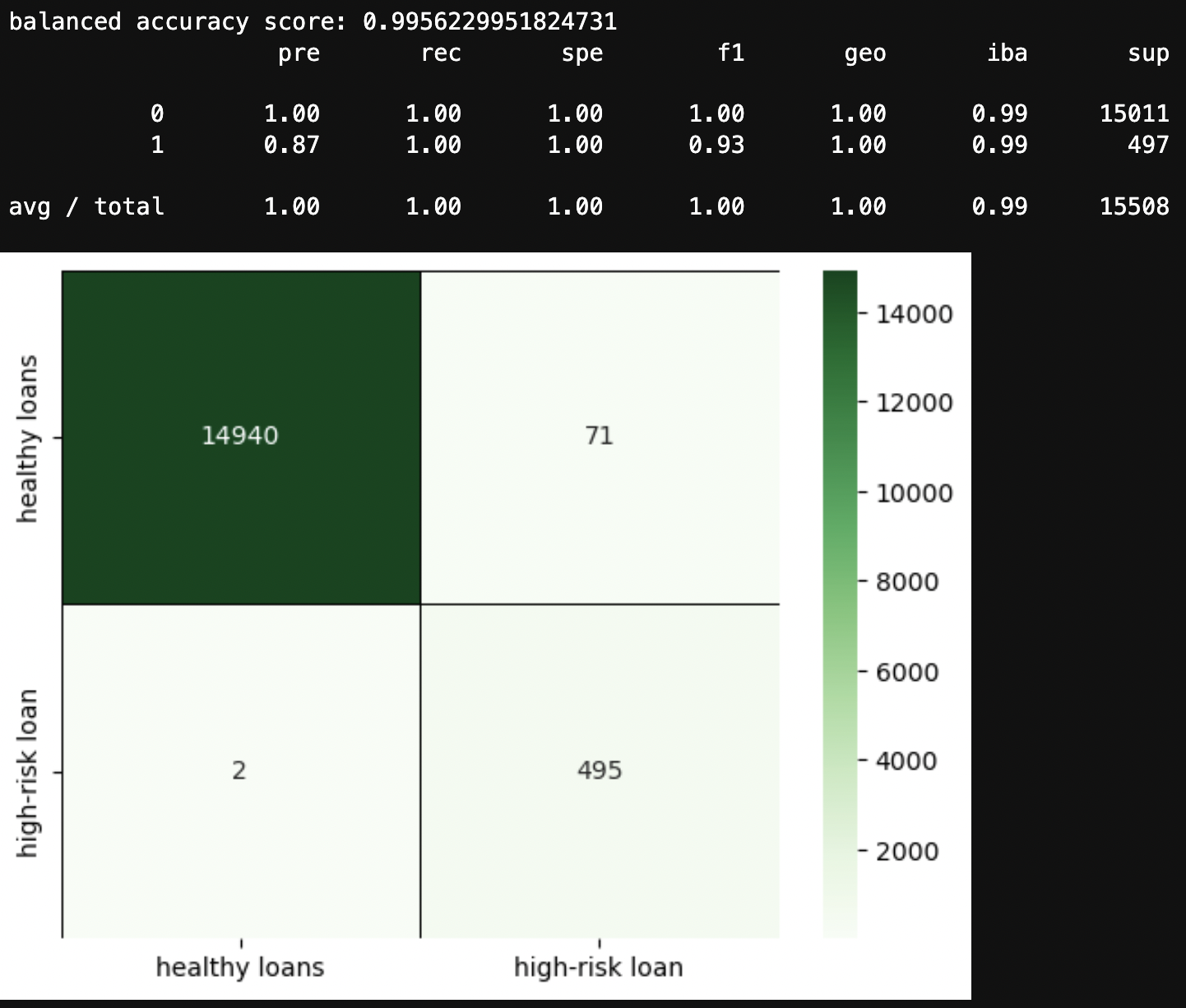

k-nearest neighbors classification (KNeighborsClassifier) modeling,

random forest (RandomForestClassifier) modeling,

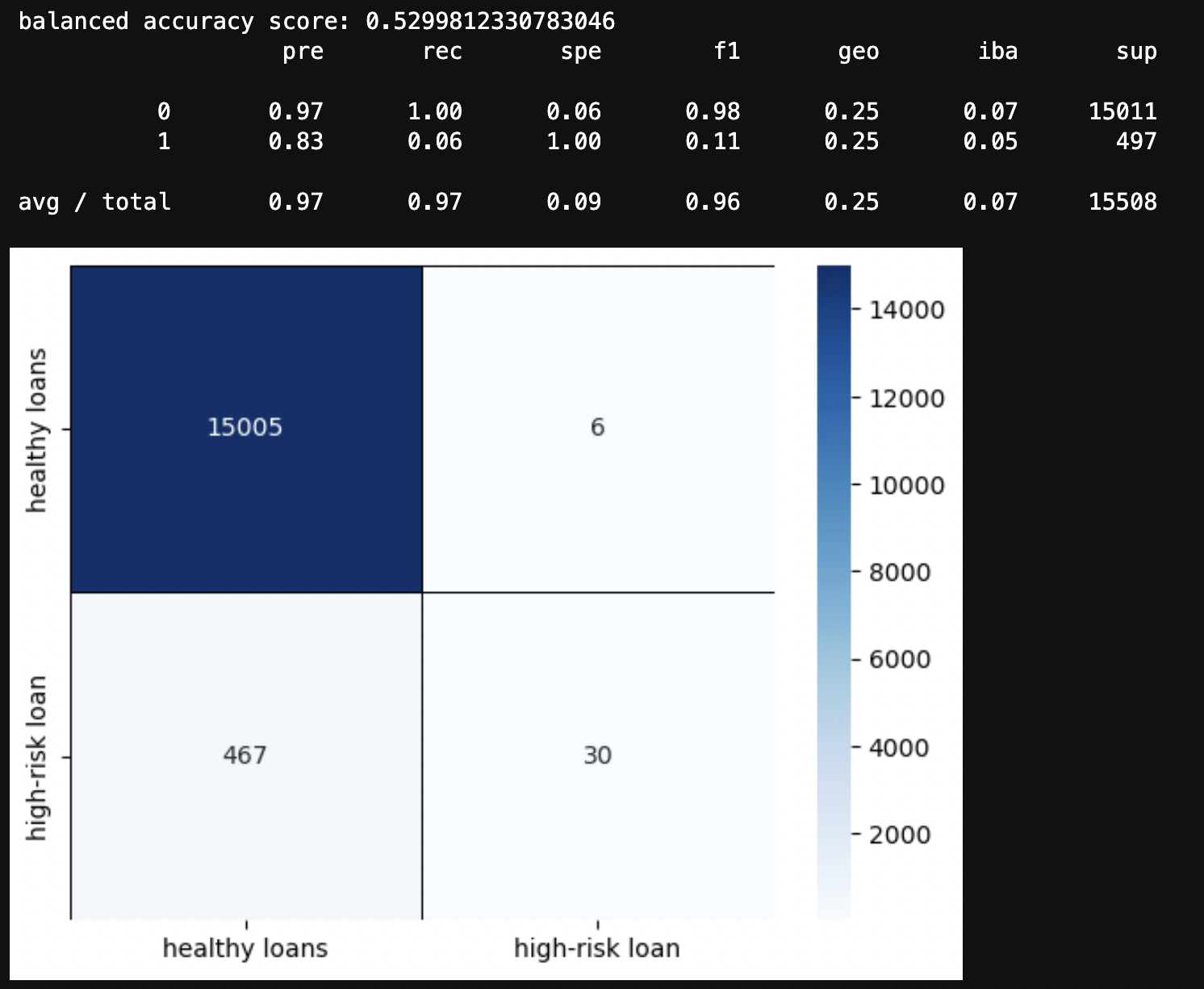

logistic regression (LogisticRegression) modeling,

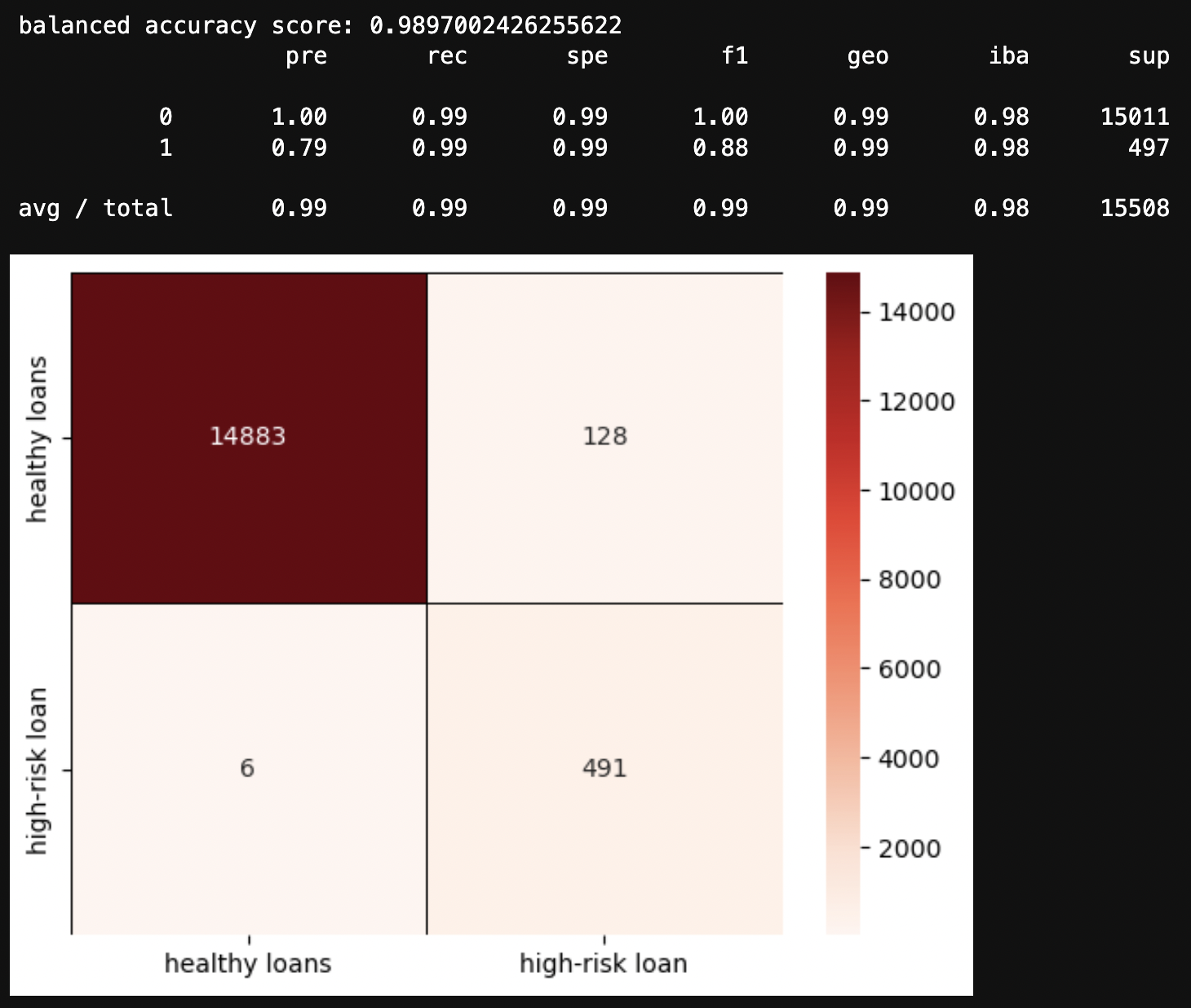

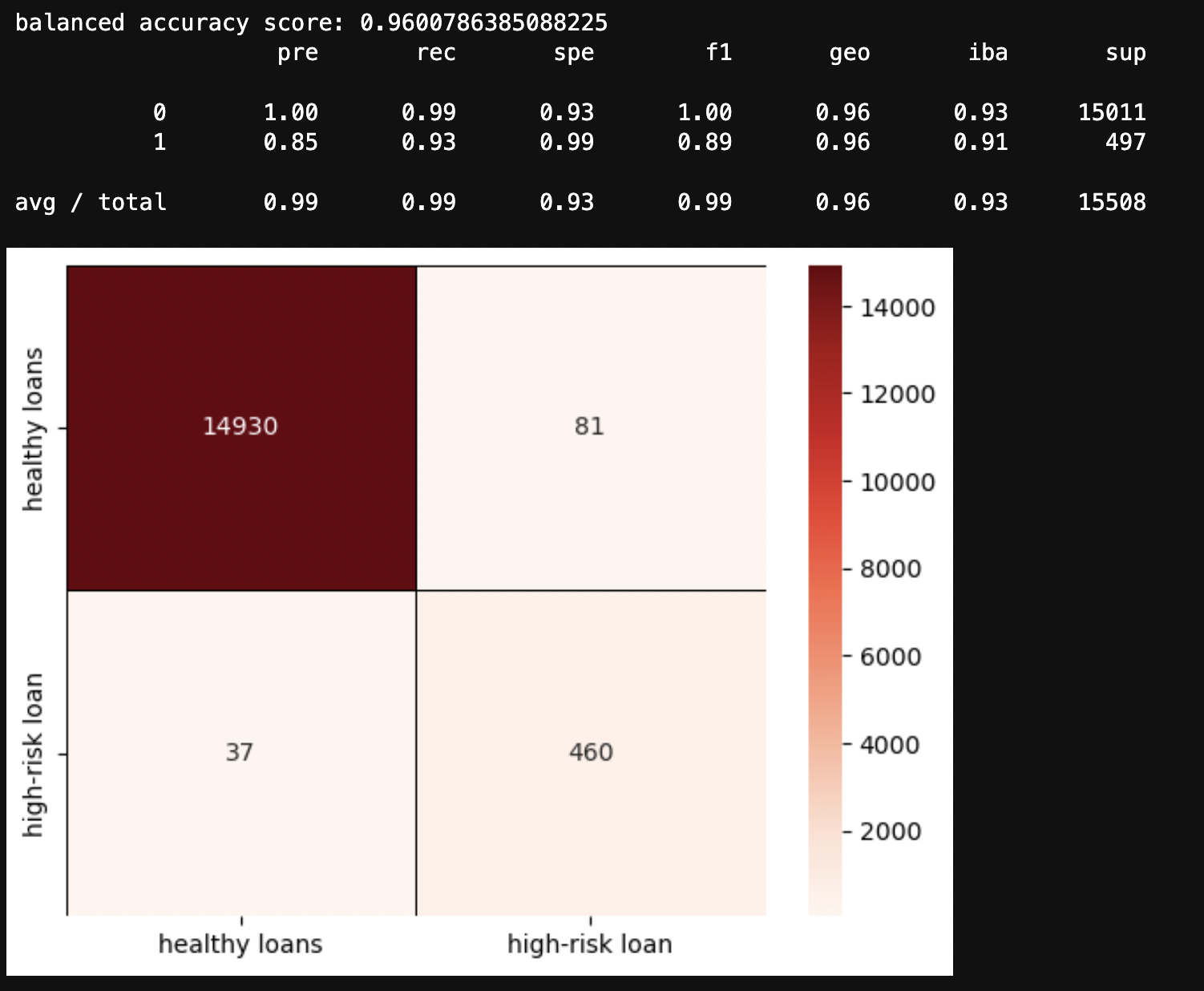

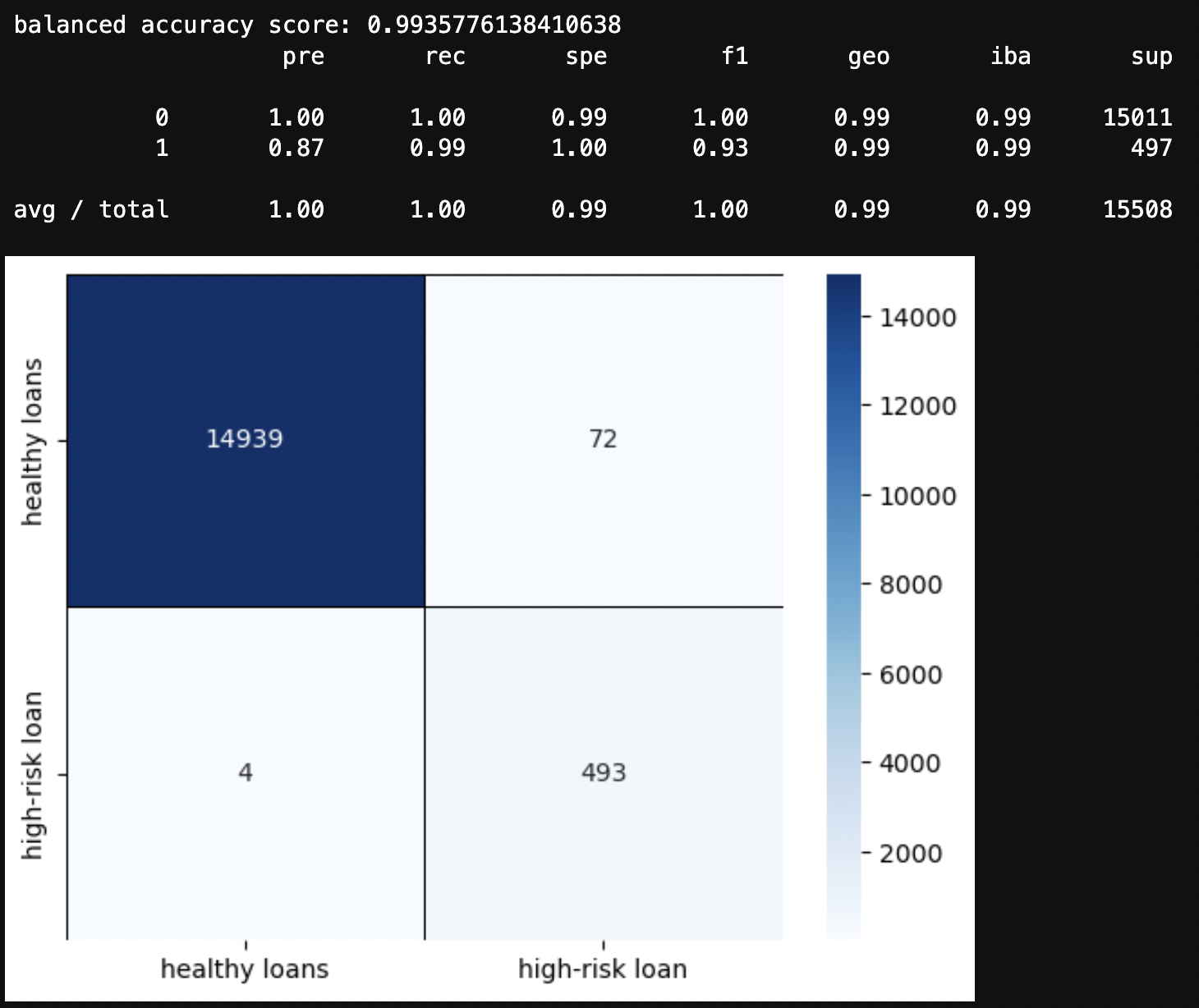

confusion matrices,

balanced accuracy scores,

imbalanced classification reports,

hvPlot scatter plots,

seaborn heatmaps,

pickle,

joblib
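Several of the techniques above (StandardScaler, PCA, and K-Means with 2 and 3 clusters) chain together naturally. The sketch below shows one plausible ordering under stated assumptions: a hypothetical feature matrix generated with make_blobs, two principal components, and clustering on the PCA projection. It is not taken from the notebook.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

# Hypothetical numeric feature matrix standing in for the loan features.
X, _ = make_blobs(n_samples=300, n_features=7, centers=3, random_state=0)

# Scale first: PCA and k-means are both sensitive to feature magnitude.
X_scaled = StandardScaler().fit_transform(X)

# Project onto two principal components for plotting and clustering.
pca = PCA(n_components=2, random_state=0)
X_pca = pca.fit_transform(X_scaled)
print("explained variance ratio:", pca.explained_variance_ratio_)

# Fit K-Means with 2 and with 3 clusters and keep each labeling.
labels = {}
for k in (2, 3):
    km = KMeans(n_clusters=k, n_init=10, random_state=0)
    labels[k] = km.fit_predict(X_pca)
    print(k, "clusters, inertia:", round(km.inertia_, 2))
```

Setting n_init explicitly keeps results stable across scikit-learn versions whose default for that parameter differs.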

Supplemental processing and analysis:

Beyond the scope of the assignment, the author conducted additional analysis of the data; the supplemental script precedes the primary assignment in the notebook. The top-performing trained models are also saved with pickle and joblib to the 'Resources_models' directory.
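Saving a trained model with both pickle and joblib might look like the minimal sketch below. The 'Resources_models' directory comes from this README; the file names, the synthetic training data, and the choice of a RandomForestClassifier as the "top" model are hypothetical.

```python
import pickle
from pathlib import Path

import joblib
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Hypothetical model: a small forest trained on synthetic data.
X, y = make_classification(n_samples=200, n_features=5, random_state=0)
model = RandomForestClassifier(n_estimators=20, random_state=0).fit(X, y)

# Directory named in the README; create it if it does not exist yet.
out_dir = Path("Resources_models")
out_dir.mkdir(exist_ok=True)

# pickle: standard-library serialization of the fitted estimator.
pickle_path = out_dir / "random_forest.pkl"  # hypothetical file name
with open(pickle_path, "wb") as f:
    pickle.dump(model, f)

# joblib: commonly used for estimators that hold large NumPy arrays.
joblib_path = out_dir / "random_forest.joblib"  # hypothetical file name
joblib.dump(model, joblib_path)

# Reload both copies so they can be compared with the original.
with open(pickle_path, "rb") as f:
    from_pickle = pickle.load(f)
from_joblib = joblib.load(joblib_path)
```

Both formats execute code on load, so only load model files from sources you trust, and reload them with the same scikit-learn version used to save them.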

Feature Scaling with StandardScaler,

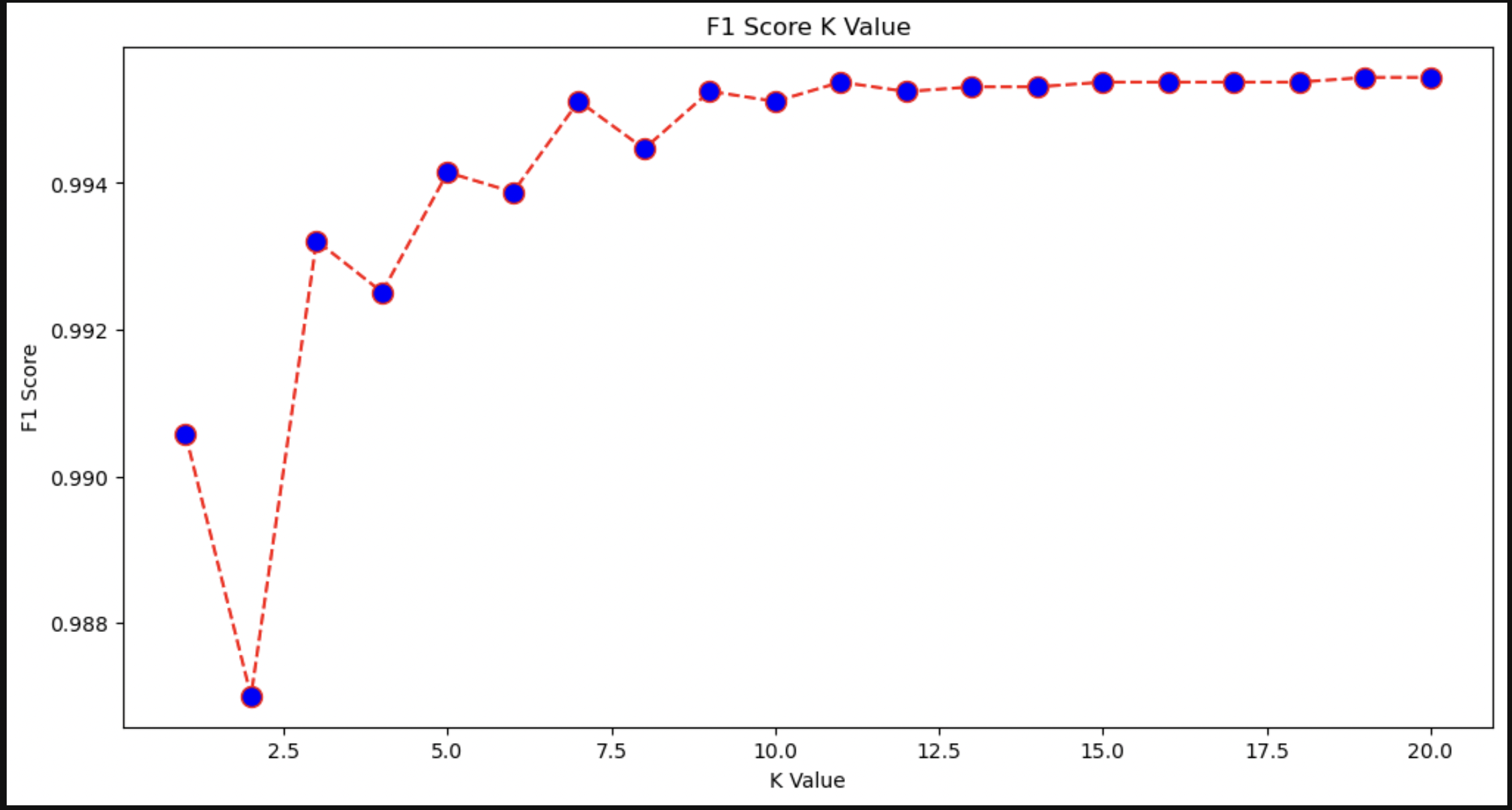

Calculated the best k for KNeighborsClassifier by maximizing the F1 score,

KNN for Classification.
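Choosing k by maximum F1 can be sketched as a simple sweep. This is an illustration under assumptions, not the notebook's code: the data are synthetic, the k range (odd values 1 to 29) is arbitrary, and the scaler is fit on the training split only.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import StandardScaler

# Hypothetical imbalanced data (about 90% class 0, 10% class 1).
X, y = make_classification(n_samples=1000, n_features=6, weights=[0.9, 0.1], random_state=2)
X_train, X_test, y_train, y_test = train_test_split(X, y, stratify=y, random_state=2)

# Fit the scaler on the training split only, then apply it to both splits.
scaler = StandardScaler().fit(X_train)
X_train_s, X_test_s = scaler.transform(X_train), scaler.transform(X_test)

scores = {}
for k in range(1, 30, 2):  # odd k avoids tied votes in binary classification
    knn = KNeighborsClassifier(n_neighbors=k).fit(X_train_s, y_train)
    scores[k] = f1_score(y_test, knn.predict(X_test_s))

# Keep the k with the highest F1 on the minority (positive) class.
best_k = max(scores, key=scores.get)
print(f"best k = {best_k}, F1 = {scores[best_k]:.3f}")
```

Picking k on the test split leaks information into model selection; scoring each k with cross_val_score on the training data avoids that.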

Feature Scaling with StandardScaler,

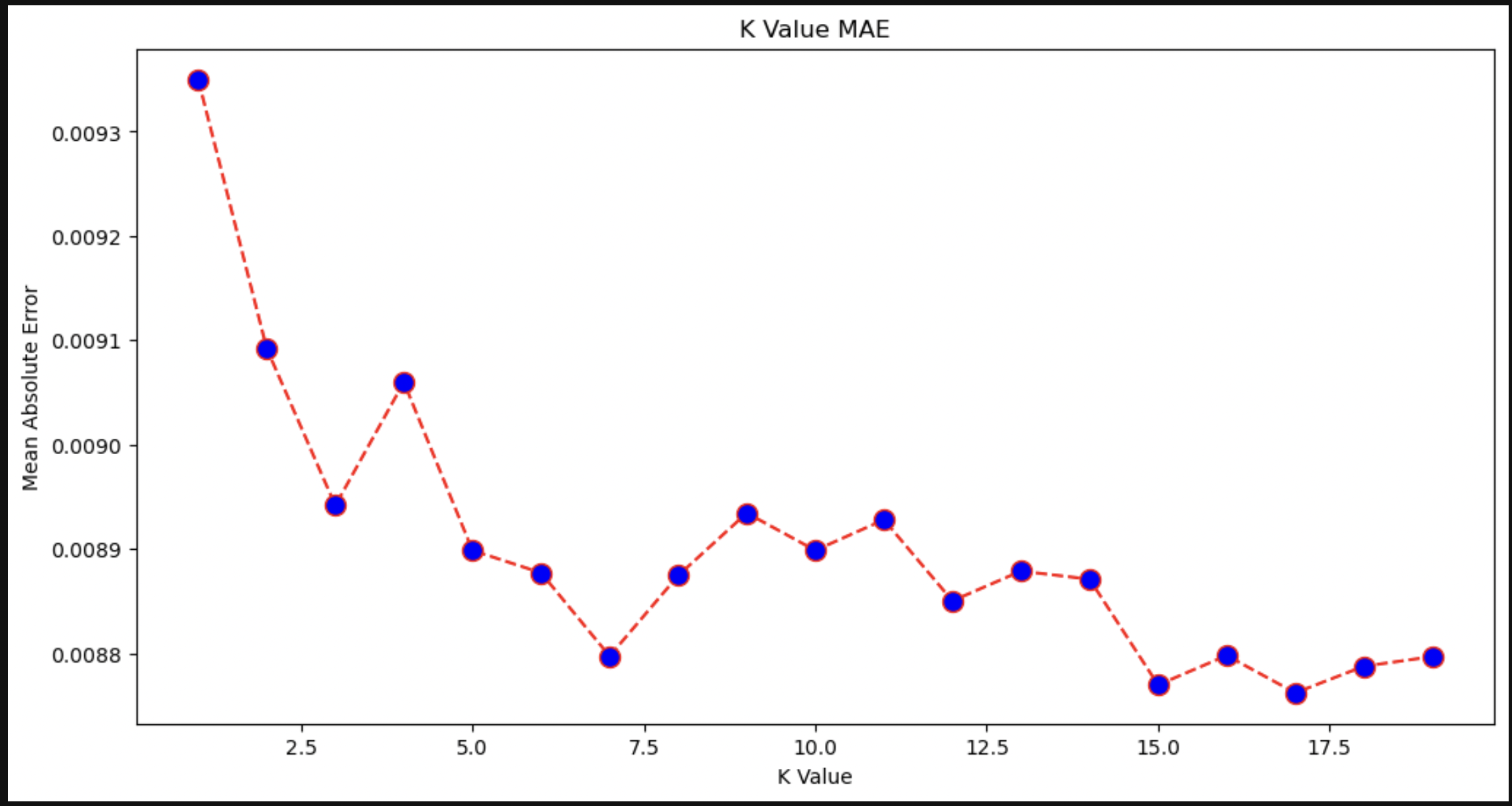

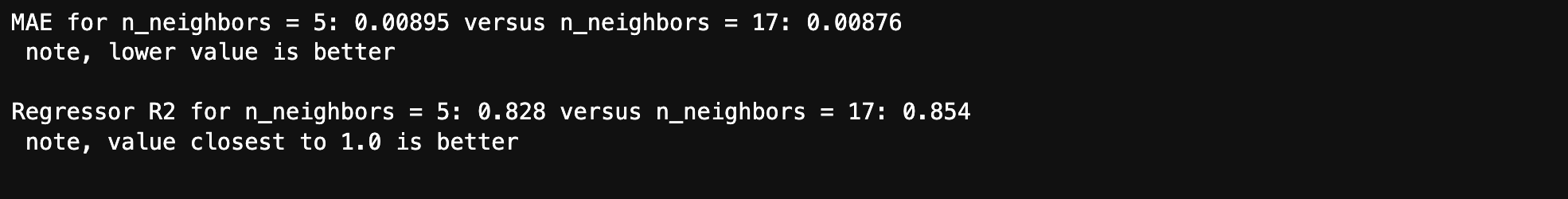

Calculated the best k for KNN regression (KNeighborsRegressor) by minimizing the mean absolute error (MAE),

KNN Regression.
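The regression counterpart swaps F1 for mean absolute error and keeps the k with the lowest value. A minimal sketch, assuming synthetic data from make_regression and an arbitrary k range of 1 to 30:

```python
from sklearn.datasets import make_regression
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor
from sklearn.preprocessing import StandardScaler

# Hypothetical continuous target with some noise.
X, y = make_regression(n_samples=500, n_features=4, noise=10.0, random_state=3)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=3)

# Distance-based models need features on a common scale.
scaler = StandardScaler().fit(X_train)
X_train_s, X_test_s = scaler.transform(X_train), scaler.transform(X_test)

maes = {}
for k in range(1, 31):
    reg = KNeighborsRegressor(n_neighbors=k).fit(X_train_s, y_train)
    maes[k] = mean_absolute_error(y_test, reg.predict(X_test_s))

# Lower MAE is better, so take the minimum.
best_k = min(maes, key=maes.get)
print(f"best k = {best_k}, MAE = {maes[best_k]:.2f}")
```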

Random undersampling (RandomUnderSampler),

RandomForestClassifier

Random oversampling (RandomOverSampler),

RandomForestClassifier

Feature Scaling with StandardScaler,

SMOTEENN resampling,

RandomForestClassifier
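To show what random oversampling and undersampling do to class counts before a RandomForestClassifier is fit, the sketch below hand-rolls both with NumPy. It is an illustration of the idea, not imbalanced-learn's implementation; in the notebook the equivalent step would use RandomOverSampler or RandomUnderSampler and their fit_resample(X, y) method. The helper names random_oversample and random_undersample are hypothetical.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)

# Toy imbalanced data: 95 majority (0) vs 5 minority (1) samples.
X = rng.normal(size=(100, 3))
y = np.array([0] * 95 + [1] * 5)


def random_oversample(X, y, rng):
    """Hypothetical helper: duplicate rows (with replacement) until classes are equal."""
    classes, counts = np.unique(y, return_counts=True)
    target = counts.max()
    idx = []
    for c, n in zip(classes, counts):
        members = np.flatnonzero(y == c)
        extra = rng.choice(members, size=target - n, replace=True)
        idx.append(np.concatenate([members, extra]))
    idx = np.concatenate(idx)
    return X[idx], y[idx]


def random_undersample(X, y, rng):
    """Hypothetical helper: keep a random subset (without replacement) of each class."""
    classes, counts = np.unique(y, return_counts=True)
    target = counts.min()
    idx = np.concatenate(
        [rng.choice(np.flatnonzero(y == c), size=target, replace=False) for c in classes]
    )
    return X[idx], y[idx]


X_over, y_over = random_oversample(X, y, rng)
X_under, y_under = random_undersample(X, y, rng)
print(np.bincount(y_over))   # [95 95]
print(np.bincount(y_under))  # [5 5]

# Fit a random forest on the balanced data (in practice, resample the training split only).
clf = RandomForestClassifier(n_estimators=50, random_state=0).fit(X_over, y_over)
```

SMOTEENN differs from both: SMOTE creates synthetic minority samples by interpolating between neighbors, and Edited Nearest Neighbours then removes samples that disagree with their neighbors, so the resulting class counts are typically not exactly equal.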

This project leverages JupyterLab v3.4.4 and Python version 3.9.13 (main, Oct 13 2022, 16:12:30) with the following packages:

- sys - module that provides access to some variables used or maintained by the interpreter and to functions that interact strongly with the interpreter.

- NumPy - an open-source Python library for working with arrays; provides multidimensional array and matrix data structures with functions for linear algebra, Fourier transforms, and matrices.

- pandas - a software library written for the Python programming language for data manipulation and analysis.

- Path - from pathlib, object-oriented filesystem paths; Path instantiates a concrete path for the platform the code is running on.

- train_test_split - from sklearn.model_selection, a quick utility that wraps input validation, next(ShuffleSplit().split(X, y)), and application to input data into a single call for splitting (and optionally subsampling) data in a one-liner.

- cross_val_score - from sklearn.model_selection, evaluates a score by cross-validation.

- LogisticRegression - from sklearn.linear_model, a Logistic Regression (aka logit, MaxEnt) classifier; implements regularized logistic regression using the ‘liblinear’ library and the ‘newton-cg’, ‘sag’, ‘saga’, and ‘lbfgs’ solvers. Regularization is applied by default.

- RandomForestClassifier - from sklearn.ensemble, a random forest classifier; a meta estimator that fits a number of decision tree classifiers on various sub-samples of the dataset and uses averaging to improve the predictive accuracy and control over-fitting.

- confusion_matrix - from sklearn.metrics, computes a confusion matrix to evaluate the accuracy of a classification; the confusion matrix C is such that C[i, j] equals the number of observations known to be in group i and predicted to be in group j.

- balanced_accuracy_score - from sklearn.metrics, computes the balanced accuracy in binary and multiclass classification problems to deal with imbalanced datasets; defined as the average of the recall obtained on each class.

- f1_score - from sklearn.metrics, computes the F1 score, also known as balanced F-score or F-measure; it can be interpreted as the harmonic mean of precision and recall, reaching its best value at 1 and its worst at 0.

- mean_absolute_error - from sklearn.metrics, mean absolute error regression loss.

- RandomOverSampler - from imblearn.over_sampling, a class to perform random over-sampling; over-samples the minority class(es) by picking samples at random with replacement.

- RandomUnderSampler - from imblearn.under_sampling, a class to perform random under-sampling; under-samples the majority class(es) by randomly picking samples with or without replacement.

- SMOTEENN - from imblearn.combine, over-sampling using SMOTE and cleaning using ENN; combines over- and under-sampling using SMOTE and Edited Nearest Neighbours.

- classification_report_imbalanced - from imblearn.metrics, compiles the metrics precision/recall/specificity, geometric mean, and index balanced accuracy of the geometric mean.

- KMeans - from sklearn.cluster, K-Means clustering.

- PCA - from sklearn.decomposition, principal component analysis (PCA); linear dimensionality reduction using Singular Value Decomposition of the data to project it to a lower-dimensional space.

- StandardScaler - from sklearn.preprocessing, standardizes features by removing the mean and scaling to unit variance.

- KNeighborsRegressor - from sklearn.neighbors, regression based on k-nearest neighbors; the target is predicted by local interpolation of the targets associated with the nearest neighbors in the training set.

- KNeighborsClassifier - from sklearn.neighbors, a classifier implementing the k-nearest neighbors vote.

- NearestNeighbors - from sklearn.neighbors, an unsupervised learner for implementing neighbor searches.

- kneighbors_graph - from sklearn.neighbors, computes the (weighted) graph of k-Neighbors for points in X.

- hvplot - a high-level plotting API built on HoloViews that offers a general and consistent interface for plotting data into the numerous formats listed in the linked documentation.

- matplotlib.pyplot - a state-based interface to matplotlib. It provides an implicit, MATLAB-like way of plotting. It also opens figures on your screen and acts as the figure GUI manager.

- Seaborn - a library for making statistical graphics in Python. It builds on top of matplotlib and integrates closely with pandas data structures.
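The confusion_matrix and balanced_accuracy_score definitions above connect directly: balanced accuracy is the mean of each row's diagonal entry divided by the row total (per-class recall). The worked example below uses hypothetical labels (90 healthy loans, 10 high-risk) to show why it differs from plain accuracy on imbalanced data.

```python
from sklearn.metrics import balanced_accuracy_score, confusion_matrix

# Hypothetical labels: 90 healthy loans (0) and 10 high-risk loans (1).
y_true = [0] * 90 + [1] * 10
# Predictions: 85 of the 0s correct, 5 flagged as 1; 4 of the 1s missed, 6 caught.
y_pred = [0] * 85 + [1] * 5 + [0] * 4 + [1] * 6

cm = confusion_matrix(y_true, y_pred)
# cm[i, j] counts samples whose true class is i and predicted class is j.
print(cm.tolist())  # [[85, 5], [4, 6]]

# Balanced accuracy = mean per-class recall = mean of diagonal / row sums.
recalls = [cm[i, i] / cm[i].sum() for i in range(len(cm))]
manual = sum(recalls) / len(recalls)

accuracy = (cm[0, 0] + cm[1, 1]) / cm.sum()
print(f"accuracy          {accuracy:.3f}")  # 0.910
print(f"balanced accuracy {manual:.3f}")  # 0.772
print(f"sklearn           {balanced_accuracy_score(y_true, y_pred):.3f}")  # 0.772
```

Plain accuracy (0.91) is dominated by the majority class, while balanced accuracy averages the 0.944 recall on healthy loans with the 0.6 recall on high-risk loans.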

MacBook Pro (16-inch, 2021)

Chip Apple M1 Max

macOS Monterey version 12.6

Homebrew 3.6.11

Homebrew/homebrew-core (git revision 01c7234a8be; last commit 2022-11-15)

Homebrew/homebrew-cask (git revision b177dd4992; last commit 2022-11-15)

anaconda Command line client 1.10.0

conda 22.9.0

Python 3.9.13

pandas 1.5.1

pip 22.3 from /opt/anaconda3/lib/python3.9/site-packages/pip (python 3.9)

git version 2.37.2

In the terminal, navigate to the directory where you want to install this application from the repository and enter the following command:

git clone git@github.com:Billie-LS/give_me_cred.git

From the terminal, the installed application is run through the JupyterLab web-based interactive development environment (IDE) by typing at the prompt:

> jupyter lab

The file you will run is:

credit_risk_resampling.ipynb

Version control can be reviewed at:

https://github.com/Billie-LS/give_me_cred

Loki 'billie' Skylizard LinkedIn @GitHub

Vinicio De Sola LinkedIn @GitHub

Santiago Pedemonte LinkedIn @GitHub

Stratis Gavnoudias LinkedIn @GitHub

None

For accuracy hyperparameter analysis - GitHub

MIT License

Copyright (c) [2022] [Loki 'billie' Skylizard]

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.