ApiQ is a framework for quantizing and finetuning an LLM in low-bit format. It can:

- act as a post-training quantization framework, achieving superior performance at various bit levels

- finetune the quantized model, saving GPU memory while obtaining superior finetuning results

- ApiQ-bw for quantizing the following LLMs in 4, 3 and 2 bits:
  - Llama-2
  - Mistral-7B-v0.1

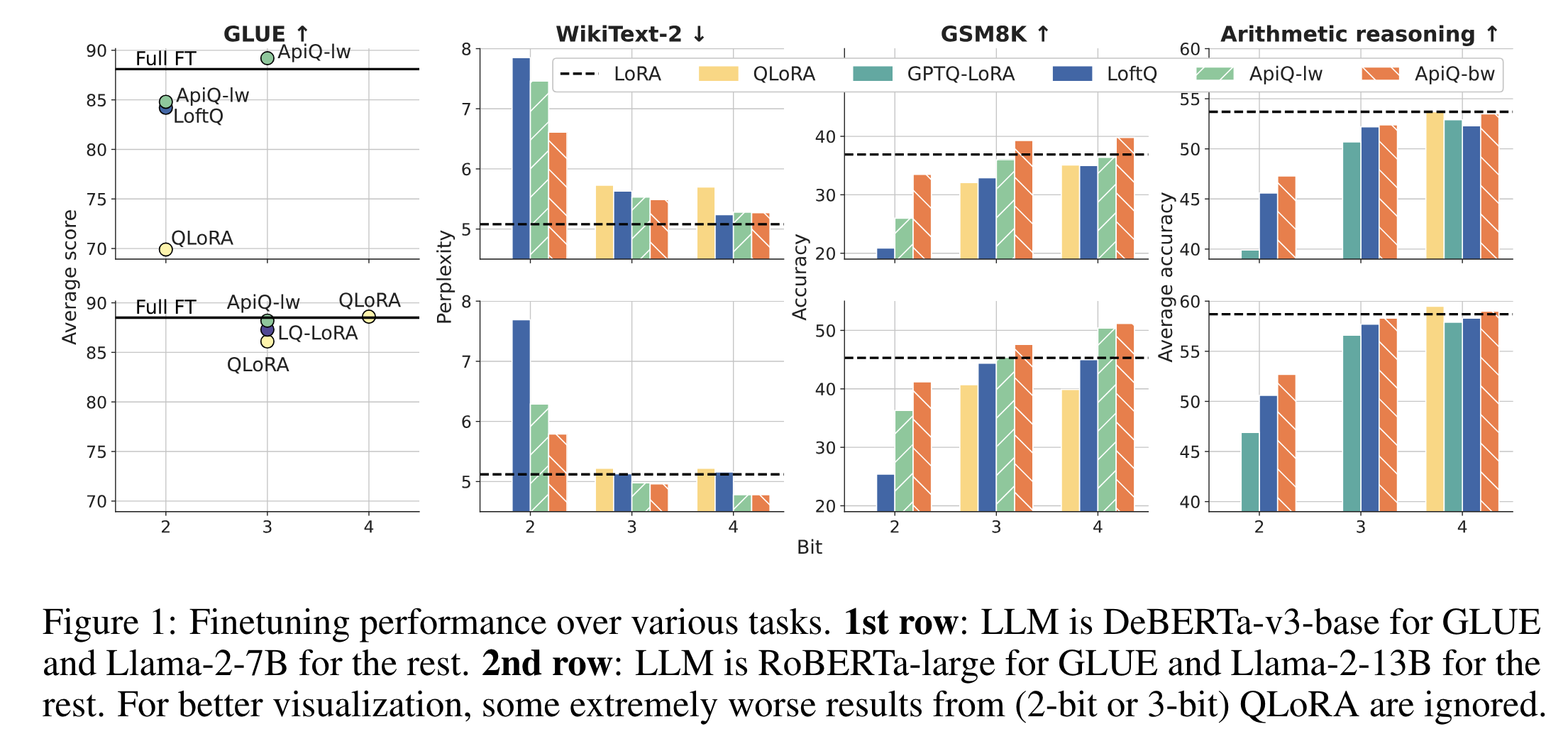

- Finetuning of real/fake quantized LLMs on:
  - WikiText-2
  - GSM8K
  - four arithmetic reasoning tasks (GSM8K, SVAMP, MAWPS, AQuA)
  - eight commonsense reasoning tasks (BoolQ, PIQA, SIQA, HellaSwag, WinoGrande, ARC-easy, ARC-challenge, OBQA)

- [2024.06.19] Release of code

conda create -n apiq python=3.10 -y

conda activate apiq

git clone https://github.com/BaohaoLiao/ApiQ.git

cd ApiQ

pip install --upgrade pip

pip install -e .

Finetuning a real quantized LLM relies on the kernel from AutoGPTQ. You can install AutoGPTQ and optimum as follows:

git clone https://github.com/PanQiWei/AutoGPTQ.git && cd AutoGPTQ

pip install gekko

pip install -vvv --no-build-isolation -e .

pip install "optimum>=0.20.0"

We provide fake/real and symmetrically/asymmetrically quantized models on Hugging Face:

- fake: The LLM's weights are still in FP16

- real: The LLM's weights are in GPTQ format

- symmetric: The quantization is symmetric, friendly to vLLM

- asymmetric: The quantization is asymmetric

Note:

- To finetune a real quantized LLM, you need to use the real and symmetric version, because AutoGPTQ has a bug in asymmetric quantization (see discussion).

- Fortunately, the difference between symmetric and asymmetric quantization is very small. All results in the paper are from asymmetric quantization.
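To make the four labels above concrete, here is a minimal sketch of fake quantization for a single group of weights: the weights are mapped to integer codes and immediately mapped back to floats, so the result is still stored in floating point. It uses a common uniform min/max scheme, which is an assumption for illustration; `fake_quantize` is a hypothetical helper, not part of ApiQ, and ApiQ's own quantizer may differ.

```python
def fake_quantize(weights, bits, symmetric):
    """Quantize one group of weights to `bits` bits and dequantize back to floats.

    Illustrative min/max scheme (an assumption, not ApiQ's actual quantizer).
    """
    max_q = 2 ** bits - 1  # largest integer code, e.g. 3 for 2 bits
    lo, hi = min(min(weights), 0.0), max(max(weights), 0.0)
    if symmetric:
        # Symmetric: the range is mirrored around zero and the zero-point is fixed.
        hi = max(abs(lo), abs(hi))
        lo = -hi
    scale = (hi - lo) / max_q or 1.0  # fall back to 1.0 for an all-zero group
    zero_point = (max_q + 1) // 2 if symmetric else round(-lo / scale)
    out = []
    for w in weights:
        q = min(max(round(w / scale) + zero_point, 0), max_q)  # integer code
        out.append((q - zero_point) * scale)  # back to float: "fake" quantization
    return out


group = [-0.25, -0.125, 0.0, 0.25, 0.5]
asym = fake_quantize(group, bits=2, symmetric=False)
sym = fake_quantize(group, bits=2, symmetric=True)
```

In both cases the output contains at most 2**bits distinct values. A real quantized model would store the integer codes `q` together with `scale` and `zero_point` instead of the dequantized floats.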

- Quantize an LLM on a GPU with ./scripts/quantize.sh:

SIZE=7

BIT=2

GS=64

SAVE_DIR=./model_zoos/Llama-2-${SIZE}b-hf-w${BIT}g${GS}-fake-sym

mkdir -p $SAVE_DIR

python ./apiq/main.py \

--model_name_or_path meta-llama/Llama-2-${SIZE}b-hf \

--lwc --wbits ${BIT} --group_size ${GS} \

--epochs 20 --seqlen 2048 --nsamples 128 \

--peft_lr 0.0005 --peft_wd 0.1 --lwc_lr 0.005 --lwc_wd 0.1 \

--symmetric \

--eval_ppl \

--aug_loss \

--save_dir $SAVE_DIR

It will output the following files in --save_dir:

- peft.pth: PEFT parameters

- lwc.pth: quantization parameters

- folder apiq_init: contains the necessary files for finetuning a PEFT model

- Other: the quantized version of the LLM in FP16 format, tokenizer files, etc.
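As a rough guide to what the BIT and GS settings in the script imply for storage once a model is converted to a real quantized format, the sketch below estimates the average number of bits per weight. It assumes one FP16 scale and one BIT-wide zero-point per group, and uses 7e9 as a round parameter count for a 7B model; both are assumptions for illustration, and the function names are hypothetical. The fake quantized output of this step is still stored in FP16.

```python
def effective_bits_per_weight(wbits, group_size, scale_bits=16):
    """Average storage per weight, assuming one scale and one zero-point per group."""
    per_group_overhead = scale_bits + wbits  # FP16 scale + wbits-wide zero-point (assumed)
    return wbits + per_group_overhead / group_size


def weight_gigabytes(num_params, wbits, group_size):
    """Approximate weight storage in GB (10**9 bytes) under the same assumption."""
    return num_params * effective_bits_per_weight(wbits, group_size) / 8 / 1e9


bits = effective_bits_per_weight(wbits=2, group_size=64)
size_gb = weight_gigabytes(7e9, wbits=2, group_size=64)
fp16_gb = 7e9 * 16 / 8 / 1e9  # the same weights kept in FP16
```

Under these assumptions, a smaller group size gives finer-grained quantization parameters at the cost of more per-group overhead.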

- Evaluate a quantized LLM with peft.pth and lwc.pth. After quantization, you can evaluate the model again by passing --resume and setting --epochs to 0:

SIZE=7

BIT=2

GS=64

SAVE_DIR=./model_zoos/Llama-2-${SIZE}b-hf-w${BIT}g${GS}-fake-sym

python ./apiq/main.py \

--model_name_or_path meta-llama/Llama-2-${SIZE}b-hf \

--lwc --wbits ${BIT} --group_size ${GS} \

--epochs 0 --seqlen 2048 --nsamples 128 \

--symmetric \

--eval_ppl \

--save_dir $SAVE_DIR \

--resume $SAVE_DIR
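For readers unfamiliar with the metric behind the --eval_ppl flag, the sketch below shows the standard definition of perplexity: the exponential of the mean negative log-likelihood per token. It is a generic illustration; `perplexity` is a hypothetical helper, and how ApiQ batches the evaluation data is not shown here.

```python
import math


def perplexity(token_log_probs):
    """Perplexity = exp of the mean negative log-likelihood per token."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# A model that assigns probability 1/4 to every token has perplexity 4.
uniform = [math.log(0.25)] * 8
ppl = perplexity(uniform)
```

Lower perplexity means the quantized model assigns higher probability to the evaluation text.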

- Convert the fake quantized LLM to a real quantized LLM in GPTQ format (works only for symmetric quantization):

SIZE=7

BIT=2

GS=64

RESUME_DIR=./model_zoos/Llama-2-${SIZE}b-hf-w${BIT}g${GS}-fake-sym

SAVE_DIR=./model_zoos/Llama-2-${SIZE}b-hf-w${BIT}g${GS}-real-sym

mkdir -p $SAVE_DIR

python ./apiq/main.py \

--model_name_or_path meta-llama/Llama-2-${SIZE}b-hf \

--lwc --wbits ${BIT} --group_size ${GS} \

--epochs 0 --seqlen 2048 --nsamples 128 \

--symmetric \

--eval_ppl \

--save_dir $SAVE_DIR \

--resume $RESUME_DIR \

--convert_to_gptq --real_quant
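The practical difference between a fake and a real quantized model is that the real one stores packed integer codes instead of FP16 values. The sketch below illustrates the idea by packing low-bit codes into 32-bit words and unpacking them again. It is a simplified illustration for bit widths that divide 32 (such as 2 and 4), not the actual memory layout used by AutoGPTQ; `pack` and `unpack` are hypothetical helpers.

```python
def pack(codes, bits):
    """Pack small integer codes into 32-bit words, lowest bits first."""
    if 32 % bits:
        raise ValueError("this sketch only handles bit widths that divide 32")
    per_word = 32 // bits
    words = []
    for start in range(0, len(codes), per_word):
        word = 0
        for i, code in enumerate(codes[start:start + per_word]):
            if not 0 <= code < 2 ** bits:
                raise ValueError(f"code {code} does not fit in {bits} bits")
            word |= code << (i * bits)
        words.append(word)
    return words


def unpack(words, bits, count):
    """Inverse of pack: recover the first `count` codes."""
    per_word = 32 // bits
    mask = 2 ** bits - 1
    codes = [(w >> (i * bits)) & mask for w in words for i in range(per_word)]
    return codes[:count]


codes = [3, 0, 1, 2] * 8          # 32 two-bit codes
words = pack(codes, bits=2)       # 64 bits in total, so two 32-bit words
restored = unpack(words, bits=2, count=len(codes))
```

The round trip is lossless, which is why the conversion step needs no further training.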

- WikiText-2

bash ./scripts/train_clm.sh

- GSM8K

bash ./scripts/train_test_gsm8k.sh

- Arithmetic / commonsense reasoning

# Download the training and test sets

bash ./scripts/download_datasets.sh

# Finetune

bash ./scripts/train_multitask.sh

- Our quantization code is based on OmniQuant

- Our finetuning code is based on LoftQ, pyreft and LLM-Adapters

If you find ApiQ or our code useful, please cite our paper:

@misc{ApiQ,

title={ApiQ: Finetuning of 2-Bit Quantized Large Language Model},

author={Baohao Liao and Christian Herold and Shahram Khadivi and Christof Monz},

year={2024},

eprint={2402.05147},

archivePrefix={arXiv}

}