This is a showcase of a small collection of neural networks implemented in Caffe, in order to evaluate their speed and accuracy on videos and images. A webcam interface is also available for live demos.

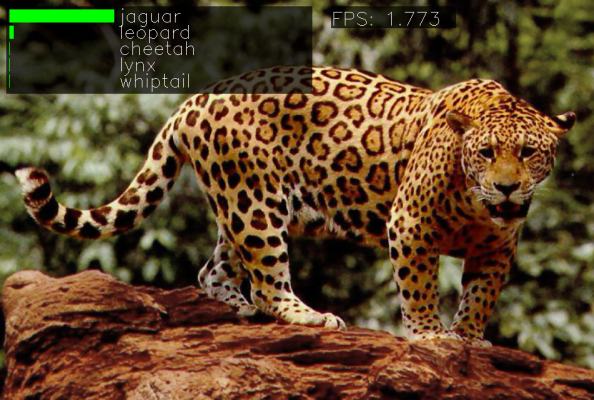

Demo interface. Image classification with SqueezeNet. Jaguar image by CaptainGiraffehands on Imgur.

Download all the models:

cd models && ./download_models.sh all

cd ..Try the classification interface with SqueezeNet [1]:

python deep_classification.py images/jaguar.jpg squeezenetTry classification on webcam with GoogleNet:

python deep_classification.py webcam googlenetTry the detection interface (CoCo classes) with the Yolo-tiny model:

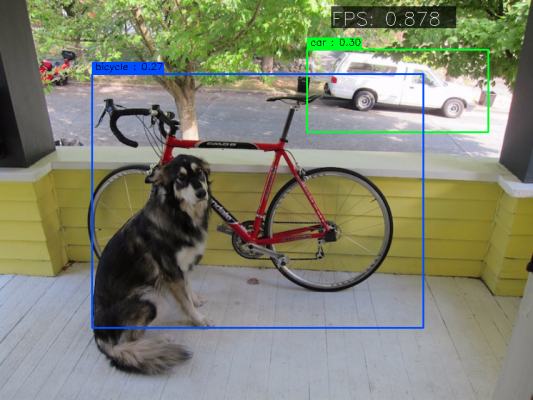

python deep_classification.py images/giraffe.jpg tiny_yoloAnd the detection interface with Pascal VOC classes:

python deep_classification.py images/dog.jpg tiny_yolo_vocDetection interface. Object detection with YOLO-tiny. Image from Darknet by Joseph Chet Redmon.

To run the demos, you need to compile the Caffe toolbox with Python support; follow the instructions on the BVLC website. Both Python 2.7 and Python 3.5 distributions are supported.

You also need to install the Python interface for OpenCV; on Ubuntu:

sudo apt-get install python-opencv

The script looks up the location of the Caffe toolbox in the environment variable CAFFE_ROOT. Set it to your Caffe installation, e.g.:

export CAFFE_ROOT=${HOME}/caffe

You can automatically download all the networks supported by the demo by running the following script (beware: it will download around 500 MB):

cd models && ./download_models.sh all

You can also download only the models you plan to use by passing them as parameters, e.g.:

./download_models.sh caffenet squeezenet

YOLO models cannot be downloaded automatically from Google Drive, so you have to download them manually into the models/yolo directory. The links to the network weights in Caffe format are here:

- tiny_yolo (CoCo classes)

- tiny_yolo_voc (Pascal VOC classes)

- darknet (ImageNet 1k)

- tiny (ImageNet 1k)

You can run the demos in Windows by installing one of the Caffe for Windows pre-built binaries.

To install the script dependencies, such as OpenCV, Anaconda is suggested. If you use Python 3, Caffe currently supports only version 3.5, so you may have to create a virtual environment and activate it. If you are using Python 3, type the following in the command prompt or in the Anaconda console (not PowerShell, as it has issues with Anaconda environments):

conda create -n caffe python=3.5

activate caffe

conda install scikit-image

conda install -c conda-forge py-opencv protobuf

An Anaconda environment file is available in caffe-env.yml. Create the environment with:

conda env create -n caffe -f caffe-env.yml

Set CAFFE_ROOT to point to the directory where you unpacked the Caffe distribution.
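As a rough illustration of how a script can use CAFFE_ROOT, the sketch below reads the variable and puts the Caffe Python bindings on the import path. It assumes the bindings live in the `python` subdirectory of the Caffe tree; the function names are hypothetical and this is not necessarily how the demo script does it.

```python
import os
import sys


def caffe_python_dir(environ=None):
    """Return the assumed location of the pycaffe package under CAFFE_ROOT."""
    environ = os.environ if environ is None else environ
    root = environ.get("CAFFE_ROOT")
    if not root:
        raise RuntimeError("CAFFE_ROOT is not set; point it to your Caffe installation")
    # Assumption: the Python bindings are in the "python" subdirectory,
    # as in a standard Caffe source tree.
    return os.path.join(os.path.expanduser(root), "python")


def add_caffe_to_path(environ=None):
    """Put the pycaffe directory first on sys.path so that `import caffe` can find it."""
    path = caffe_python_dir(environ)
    if path not in sys.path:
        sys.path.insert(0, path)
    return path
```

Calling `add_caffe_to_path()` before `import caffe` fails early with a readable message when the variable is missing, instead of with a bare `ImportError`.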

To download the models, use the Git bash shell:

cd models && sh download_models.sh all

The YOLO models will need to be downloaded manually.

The supported networks are specified in a network.ini configuration file in the same directory as the script. Each section of the configuration file specifies a supported network, and you can add new sections or modify the existing ones to support your Caffe networks.

Each section has the form:

[network_name]

type = class

model = path_to_caffe_prototxt

weights = path_to_caffemodel

labels = path_to_list_of_dataset_labels

mean = mean_pixel

anchors = list of floats

The parameter type specifies the kind of network to load; for now, the supported types are:

- class: classification network with an n-way softmax at the last layer, named prob

- class_yolo: classification network from Darknet, with different pixel scaling and center crop

- yolo_detect: YOLO detection network [2] where the last layer specifies at once the detected classes, regressed bounding boxes and box confidence
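For the class type, the output of the prob layer is a vector with one probability per class, so reading a prediction amounts to picking the largest entries. The sketch below shows that step in plain Python; the `top_k` helper, the labels, and the probabilities are made up for illustration and stand in for the real network output.

```python
def top_k(prob, labels, k=5):
    """Return the k most probable (label, probability) pairs, best first."""
    prob = [float(p) for p in prob]
    k = min(k, len(prob))
    # Indices of the classes, sorted by descending probability.
    order = sorted(range(len(prob)), key=lambda i: prob[i], reverse=True)[:k]
    return [(labels[i], prob[i]) for i in order]


# Hypothetical 4-class softmax output, standing in for the "prob" layer.
labels = ["cat", "dog", "jaguar", "giraffe"]
prob = [0.05, 0.10, 0.80, 0.05]
best = top_k(prob, labels, k=2)
print(best)
```

The same function works on a one-dimensional NumPy array, since it only iterates over the values.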

The parameters model and weights point to the Caffe files required to load the network structure (.prototxt) and weights (.caffemodel). All the paths are relative to the configuration file.
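Because the paths are relative to the configuration file rather than to the current working directory, they have to be joined with the directory of that file before use. A minimal sketch of that resolution, with a hypothetical helper name:

```python
import os


def resolve(config_path, value):
    """Resolve a path read from the configuration relative to the file that contains it."""
    base = os.path.dirname(os.path.abspath(config_path))
    # os.path.join leaves `value` untouched if it is already absolute.
    return os.path.normpath(os.path.join(base, value))
```

With this, the demo can be started from any directory and still find the model files listed in the configuration.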

The labels parameter points to a file with the names of the recognized classes, in the order expected by the model. Classes are currently available for the ImageNet, Places250, PascalVOC and MSCoCo datasets.

The optional mean parameter specifies the mean pixel value for the dataset as a triple of byte values in BGR format. If the mean is not available, the mean of the input image is used instead.
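The fallback logic for the mean can be sketched as follows. Two things are assumptions here: that the triple is written as comma- or space-separated numbers, and that "the mean of the input image" is computed per channel. The helper names are hypothetical, and the pixel list is a pure-Python stand-in for an image array.

```python
def parse_mean(value):
    """Parse a mean pixel such as "104,117,123" into a (B, G, R) tuple.

    Returns None when the value is missing or empty, which tells the caller
    to fall back to the mean of the input image.
    """
    if value is None or not value.strip():
        return None
    parts = [float(p) for p in value.replace(",", " ").split()]
    if len(parts) != 3 or not all(0 <= p <= 255 for p in parts):
        raise ValueError("mean must be three byte values in BGR order: {!r}".format(value))
    return tuple(parts)


def image_mean(pixels):
    """Per-channel mean of an iterable of (B, G, R) pixels.

    With a NumPy image of shape (H, W, 3), img.reshape(-1, 3).mean(axis=0)
    computes the same quantity.
    """
    pixels = list(pixels)
    n = float(len(pixels))
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def mean_pixel(value, pixels):
    """Use the configured mean if present, otherwise the mean of the image."""
    configured = parse_mean(value)
    return configured if configured is not None else image_mean(pixels)
```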

The optional anchors parameter specifies the bounding box biases in Darknet v2 detection networks; it has to be copied manually from the corresponding .cfg file.
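To avoid copy errors, the anchors line can be pulled out of the .cfg text and checked programmatically. The sketch below assumes that the .cfg file contains a line of the form `anchors = ...` and that the values are consecutive width/height pairs; the helper names and the sample values are invented for illustration.

```python
def anchors_from_cfg(cfg_text):
    """Find the first `anchors = ...` line in the text of a Darknet .cfg file."""
    for line in cfg_text.splitlines():
        key, sep, val = line.partition("=")
        if sep and key.strip() == "anchors":
            return val.strip()
    return None


def parse_anchors(value):
    """Turn "w0,h0, w1,h1, ..." into a list of (width, height) pairs."""
    numbers = [float(x) for x in value.replace(",", " ").split()]
    if len(numbers) % 2 != 0:
        raise ValueError("anchors must contain an even number of values")
    return list(zip(numbers[0::2], numbers[1::2]))


# Hypothetical fragment of a .cfg file; the numbers are not from a real model.
sample_cfg = "[region]\nanchors = 1.0,2.0, 3.0,4.5\nclasses=20\n"
raw = anchors_from_cfg(sample_cfg)
pairs = parse_anchors(raw)
```

The string in `raw` is what would be pasted as the value of the anchors parameter.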

CPU mode: an additional parameter device in the DEFAULT section specifies if the CPU or the GPU (default) should be used for the model. You can override the parameter for a specific network by specifying a different device in its section.
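The DEFAULT-section behaviour described here matches Python's standard `configparser` module, where values in DEFAULT are inherited by every section unless overridden. Whether the demo script uses that module is an assumption; the sketch below simply shows the mechanism on a made-up configuration. All section contents, paths, and the `gpu`/`cpu` spellings are hypothetical.

```python
import configparser

# Hypothetical configuration; paths and device values are illustrative only.
SAMPLE = """
[DEFAULT]
device = gpu

[squeezenet]
type = class
model = models/squeezenet/deploy.prototxt
weights = models/squeezenet/squeezenet.caffemodel
labels = labels/imagenet.txt
mean = 104,117,123

[tiny_yolo]
type = yolo_detect
model = models/yolo/tiny_yolo.prototxt
weights = models/yolo/tiny_yolo.caffemodel
labels = labels/coco.txt
device = cpu
"""

config = configparser.ConfigParser()
config.read_string(SAMPLE)

# sections() lists the networks only; DEFAULT is not included.
for name in config.sections():
    section = config[name]
    # "device" falls back to DEFAULT; "mean" is optional and may be None.
    print(name, section["type"], section["device"], section.get("mean"))
```

Here squeezenet inherits the device from DEFAULT, while tiny_yolo overrides it in its own section.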

[1] Iandola, F. N., Moskewicz, M. W., Ashraf, et al. (2016). SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <1MB model size. Arxiv.

[2] Redmon, J., Divvala, S., Girshick, R., & Farhadi, A. (2015). You Only Look Once: Unified, Real-Time Object Detection. Arxiv.

The code is released under the BSD 2-clause license, except for the yolo_detector module, which is under the Darknet license (public domain).