A toolkit for neural audio effect modeling. It lets users conveniently run audio effect modeling experiments.

You can install PyNeuralFx via

$ pip install pyneuralfx

To use the framework, you can

$ git clone https://github.com/ytsrt66589/pyneuralfx.git

then

$ cd frame_work/

Due to the rising importance of audio-effect-related tasks, several easy-to-use toolkits have been developed, for example dasp-package (repo_link) for differentiable signal processing in PyTorch and grafx (ref_link) for audio effect processing graphs in PyTorch. However, there is no easy-to-use toolkit for the neural audio effect modeling task, especially for black-box methods. PyNeuralFx aims to fill this gap, helping beginners get started with neural audio effect modeling research and offering experienced researchers different perspectives.

You can find the tutorials in /tutorials.

PyNeuralFx follows the naming principle [control]-[model]. For example, if we use concat as the conditioning method and GRU as the model, then the model is called Concat-GRU. If the model is only for snapshot modeling, then the control is always snapshot.
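The naming rule can be illustrated with a small sketch. The helpers `model_name` and `split_model_name` are hypothetical and are not part of the PyNeuralFx API; lowercasing the result to match the names in the supported-model list is also an assumption.

```python
def model_name(control: str, model: str) -> str:
    """Join a conditioning method and a model into a 'control-model' name."""
    return f"{control.lower()}-{model.lower()}"


def split_model_name(name: str) -> tuple[str, str]:
    """Split at the first hyphen: the control comes first, the rest is the model."""
    control, model = name.lower().split("-", 1)
    return control, model


print(model_name("Concat", "GRU"))             # concat-gru
print(split_model_name("snapshot-tfilm-gcn"))  # ('snapshot', 'tfilm-gcn')
```

Splitting only at the first hyphen keeps multi-part model names such as tfilm-gcn or vanilla-rnn intact.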

PyNeuralFx supports:

- snapshot-tcn

- snapshot-gcn

- snapshot-vanilla-rnn

- snapshot-lstm

- snapshot-gru

- snapshot-tfilm-gcn (ref_link)

- snapshot-tfilm-tcn (ref_link)

- concat-gru

- film-gru

- statichyper-gru

- dynamichyper-gru

- concat-lstm

- film-lstm

- statichyper-lstm

- dynamichyper-lstm

- film-vanilla-rnn

- statichyper-vanilla-rnn

- concat-gcn

- film-gcn

- hyper-gcn

- concat-tcn

- film-tcn

- hyper-tcn

- film-ssm (ref_link)

In our opinion, different loss functions serve different purposes. Some measure reconstruction error (overall reconstruction), some target specific problems (improving sound details), and some leverage perceptual properties (aligning with human perception). More research is needed to explore which losses suit different audio effects.

PyNeuralFx supports:

- esr loss

- l1 loss

- l2 loss

- complex STFT loss

- multi-resolution complex STFT loss

- STFT loss

- multi-resolution STFT loss

- dc eliminating loss

- short-time energy loss (ref_link)

- adversarial loss (ref_link, ref_link)
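To make two of the time-domain losses concrete, here is a minimal framework-free sketch of the ESR loss and a DC loss. It uses the definitions commonly found in the audio effect modeling literature, not necessarily the exact PyNeuralFx implementation. The function names and the `eps` parameter are assumptions, and in practice these would operate on PyTorch tensors rather than Python lists.

```python
def esr_loss(target, pred, eps=1e-8):
    """Error-to-signal ratio: energy of the error divided by energy of the target."""
    err = sum((t - p) ** 2 for t, p in zip(target, pred))
    ref = sum(t ** 2 for t in target)
    return err / (ref + eps)


def dc_loss(target, pred, eps=1e-8):
    """DC loss: squared mean error, normalized by the mean power of the target."""
    n = len(target)
    mean_err = sum(t - p for t, p in zip(target, pred)) / n
    mean_power = sum(t ** 2 for t in target) / n
    return mean_err ** 2 / (mean_power + eps)


target = [0.5, -0.5, 0.5, -0.5]
offset = [x + 0.1 for x in target]   # prediction with a constant DC offset

print(esr_loss(target, target))      # 0.0 for a perfect prediction
print(dc_loss(target, offset))       # penalizes the constant offset
```

The ESR term captures overall reconstruction, while the DC term only reacts to a constant offset between prediction and target, which is why the two are often combined.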

Also, PyNeuralFx supports

- pre-emphasis filter (ref_link)
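As an illustration, a pre-emphasis filter is often a first-order high-pass FIR filter applied to both the target and the prediction before the loss is computed. The sketch below assumes that form; the function name and the coefficient value 0.95 are illustrative assumptions, not PyNeuralFx defaults.

```python
def pre_emphasis(signal, coeff=0.95):
    """First-order high-pass FIR: y[n] = x[n] - coeff * x[n-1], with x[-1] = 0."""
    out = []
    prev = 0.0
    for x in signal:
        out.append(x - coeff * prev)
        prev = x
    return out


constant = [1.0, 1.0, 1.0, 1.0]
filtered = pre_emphasis(constant)
print(filtered)  # after the first sample, the constant (low-frequency) part is strongly attenuated
```

Because the filter attenuates low frequencies, a loss computed on the filtered signals puts relatively more weight on high-frequency content.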

The loss functions listed above can also be used as evaluation metrics for estimating the reconstruction error. Moreover, PyNeuralFx supports other metrics for comprehensive evaluation:

- Loudness error (ref_link)

- Transient reconstruction (ref_link)

- Crest factor (ref_link)

- RMS energy (ref_link)

- Spectral centroid (ref_link)

- signal-to-aliasing noise ratio (SNRA) (ref_link)

- signal-to-harmonic noise ratio (SNRH) (ref_link)
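Two of the simpler metrics above, RMS energy and crest factor, can be sketched with their textbook definitions. This is only an illustration of what the quantities measure; the function names, the dB scaling, and the `eps` parameter are assumptions and may differ from the PyNeuralFx implementation.

```python
import math


def rms(signal):
    """Root-mean-square level of a signal."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def crest_factor_db(signal, eps=1e-12):
    """Crest factor in dB: peak magnitude divided by RMS level."""
    peak = max(abs(x) for x in signal)
    return 20.0 * math.log10((peak + eps) / (rms(signal) + eps))


# Five full periods of a sine wave, 200 samples per period.
sine = [math.sin(2.0 * math.pi * 5 * k / 1000) for k in range(1000)]
square = [1.0, -1.0, 1.0, -1.0]

print(round(crest_factor_db(sine), 2))    # about 3.01 dB for a sine wave
print(round(crest_factor_db(square), 2))  # 0.0 dB for a square wave
```

Comparing these values between the target and the model output gives an error measure; a compressor, for example, typically lowers the crest factor of its input.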

Notice: Because the original implementation of transient extraction is slow, we provide an alternative implementation. Users can experiment with both methods and compare the difference.

PyNeuralFx supports two types of visualization:

- Wave file comparison

  - time-domain wave comparison

  - spectrum difference

- Model's behavior visualization

  - harmonic response

  - distortion curve

  - sine sweep visualization (for observing the aliasing problem)

  - phase response
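As a rough illustration of what a distortion curve shows, the sketch below sweeps input amplitudes through an effect and collects (input, output) pairs that could then be plotted. This is one common way to obtain such a curve, not necessarily how PyNeuralFx computes it. The function `distortion_curve` and the `soft_clip` stand-in for a trained model are hypothetical.

```python
import math


def distortion_curve(effect, num_points=101, max_amp=1.0):
    """Return (input amplitude, output amplitude) pairs for a ramp from -max_amp to +max_amp."""
    step = 2.0 * max_amp / (num_points - 1)
    inputs = [-max_amp + i * step for i in range(num_points)]
    outputs = effect(inputs)
    return list(zip(inputs, outputs))


def soft_clip(samples, gain=5.0):
    """Stand-in for a trained model: a memoryless tanh waveshaper."""
    return [math.tanh(gain * x) for x in samples]


curve = distortion_curve(soft_clip)
print(curve[0], curve[-1])  # the output saturates near -1 and +1 at the extremes
```

For models with memory, such as recurrent networks, the output at each sample also depends on earlier samples, so a curve obtained this way is only an approximate view of the nonlinearity.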

(First run the command cd frame_work and ensure that the working directory is frame_work.)

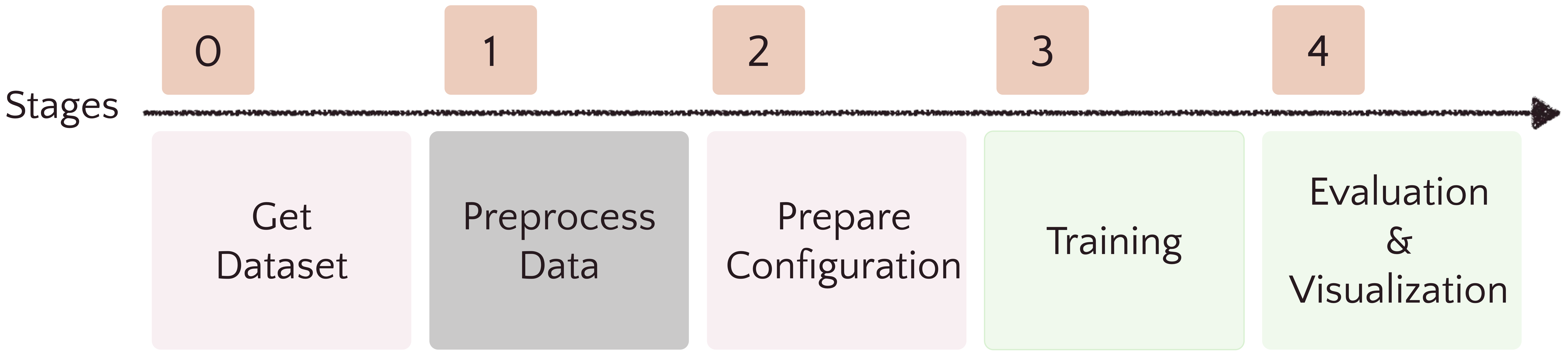

- Download dataset: Download a dataset commonly used in academic papers or prepare a dataset yourself, then put the data under the folder data. The currently supported datasets are listed in the sections below; for these datasets, we provide a preprocessing file that converts the data to the template we expect.

- Preprocess data: Write your own code, or work manually, to match the data template expected by the framework provided in pyneuralfx. Please refer to dataset.md for more details. If you use a dataset that pyneuralfx supports, the preprocessing file is already provided in preprocess/{name_of_the_dataset}.py.

- Prepare configuration: Modify the configuration files in configs/. All experiments are recorded in configuration files to ensure reproducibility. Further details of the configuration settings are given in configuration.md.

- Training: Run the code to train the model according to the configuration files. Please refer to train.md for more details.

- Evaluation & Visualization: Evaluate your results with several metrics, or visualize comparisons and important system properties. Please refer to evalvis.md for more details.

Tricks

- During training, you can use loss_analysis/compare_loss.py to check the validation loss curve. (Remember to modify the experiment root in compare_loss.py.)

- LA2A Compressor: download link, paper link

- Marshall JVM 410H (Guitar Amp): download link, paper link

- Boss OD-3 Overdrive: download link, paper link

- EGDB Guitar Amp Dataset: download link, paper link

- Compressor Datasets: download link, paper link

- EGFxSet: download link, project link, paper link

- Time-varying audio effect: download link, paper link

These datasets are collected from previous works. If you use them in your paper or project, please cite the corresponding paper.

Interested in contributing? Check out the contributing guidelines. Please note that this project is released with a Code of Conduct. By contributing to this project, you agree to abide by its terms.

pyneuralfx was created by yytung. It is licensed under the terms of the MIT license.

pyneuralfx was created with cookiecutter and the py-pkgs-cookiecutter template.

This project is highly inspired by the following repositories; thanks for the amazing work they have done. If you are interested in audio-effect-related work, please look at the following repositories or websites to gain more insights.