This is the official repository for the paper A Hierarchical Assessment of Adversarial Severity. The paper received the Best Paper Award at the ICCV 2021 workshop on Adversarial Robustness in the Real World.

First, create and activate the conda environment:

conda env create -f environment.yml

conda activate AdvSeverity

Then, install AutoAttack and tqdm by running:

pip install git+https://github.com/fra31/auto-attack

pip install tqdm

Then, prepare the dataset:

- Download the train+val sets of iNaturalist'19

- Unzip the zip file dataset_splits/splits_inat19.py

- Create the dataset by running the generate_splits.py script. Change the DATA_PATH and OUTPUT_DIR variables to fit your specifications.

- Resize all the images into the 224x224 format by using the resize_images.sh script: bash ./resize_images.sh new_splits_backup new_splits_downsampled 224x224!

- Rename data_paths.yml and edit it to reflect the paths on your system.
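As a quick sanity check after the resize step, the number of images per class should be the same in the original and downsampled folders. The sketch below is not part of the repository: the function name `count_images_per_class` is hypothetical, and it assumes each split folder holds one subfolder per class, which may not match the layout produced by generate_splits.py.

```python
from pathlib import Path


def count_images_per_class(split_dir, extensions=(".jpg", ".jpeg", ".png")):
    """Map each class subfolder of `split_dir` to its number of image files.

    Assumption: `split_dir` contains one subfolder per class.
    """
    counts = {}
    for class_dir in Path(split_dir).iterdir():
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1
                for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in extensions
            )
    return counts
```

For example, if both folders contain a `train` subfolder (an assumption), `count_images_per_class("new_splits_backup/train") == count_images_per_class("new_splits_downsampled/train")` should hold once resizing has finished.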

To use our trained models, you must download them first. If you check the models folders, the uploaded files weigh only ~4 KB each, since they are Git LFS pointers rather than the actual weights. Once the environment is installed and activated, run git lfs install --local and then git lfs pull. Finally, check the size of each file; it should be about 164 MB.
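One way to confirm that the weights were actually fetched is to look for files that are still Git LFS pointers, which are small text files whose first line begins with `version https://git-lfs.github.com/spec/v1`. The sketch below is not part of the repository; the function names are illustrative, and the folder to scan is whatever path holds the model files on your system.

```python
from pathlib import Path

# First bytes of every Git LFS pointer file, per the Git LFS pointer format.
LFS_HEADER = b"version https://git-lfs.github.com/spec/v1"


def is_lfs_pointer(path):
    """Return True if `path` still holds an LFS pointer instead of real weights."""
    with open(path, "rb") as handle:
        return handle.read(len(LFS_HEADER)) == LFS_HEADER


def find_unpulled(root):
    """Return the files under `root` that are still LFS pointers, sorted by path."""
    return sorted(
        p for p in Path(root).rglob("*") if p.is_file() and is_lfs_pointer(p)
    )
```

If `find_unpulled("PATH/TO/MODELS")` returns a non-empty list, `git lfs pull` has not yet replaced those files.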

To run the training routine, run the main.py script as follows:

python main.py \
    --arch resnet18 \
    --dropout 0.5 \
    --output PATH/TO/OUTPUT \
    --num-training-steps 200000 \
    --gpu GPU \
    --val-freq 1 \
    --attack-eps EPSILON \
    --attack-iter-training ITERATIONS \
    --attack-step STEP \
    --attack free \
    --curriculum-training

To train without the proposed curriculum, omit the --curriculum-training flag. To train the model with TRADES instead, replace --attack free with --attack trades.
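When launching several training variants, it can help to assemble the command programmatically. The following is a minimal sketch that uses only the flags listed above; the helper name `build_train_command` and its parameters are hypothetical and not part of the repository.

```python
def build_train_command(output, gpu, eps, iterations, step,
                        attack="free", curriculum=True):
    """Assemble the argv list for main.py from the flags shown above."""
    if attack not in ("free", "trades"):
        raise ValueError("attack must be 'free' or 'trades'")
    cmd = [
        "python", "main.py",
        "--arch", "resnet18",
        "--dropout", "0.5",
        "--output", str(output),
        "--num-training-steps", "200000",
        "--gpu", str(gpu),
        "--val-freq", "1",
        "--attack-eps", str(eps),
        "--attack-iter-training", str(iterations),
        "--attack-step", str(step),
        "--attack", attack,
    ]
    # The curriculum is enabled by the presence of the flag alone.
    if curriculum:
        cmd.append("--curriculum-training")
    return cmd
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from the repository root. The values for `eps`, `iterations`, and `step` are left to the caller, as in the command above.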

To resume training from a checkpoint, rerun the same command you used to start it.

To perform the evaluation with the proposed attacks, pgd or NHAA, run the main.py script as follows:

python main.py \

--arch resnet18 \

--output PATH/TO/OUTPUT \

--gpu GPU \

--val-freq 1 \

--attack-eps EPSILON \

--attack-iter-training ITERATIONS \

--attack-step 1 \

--evaluate ATTACK \

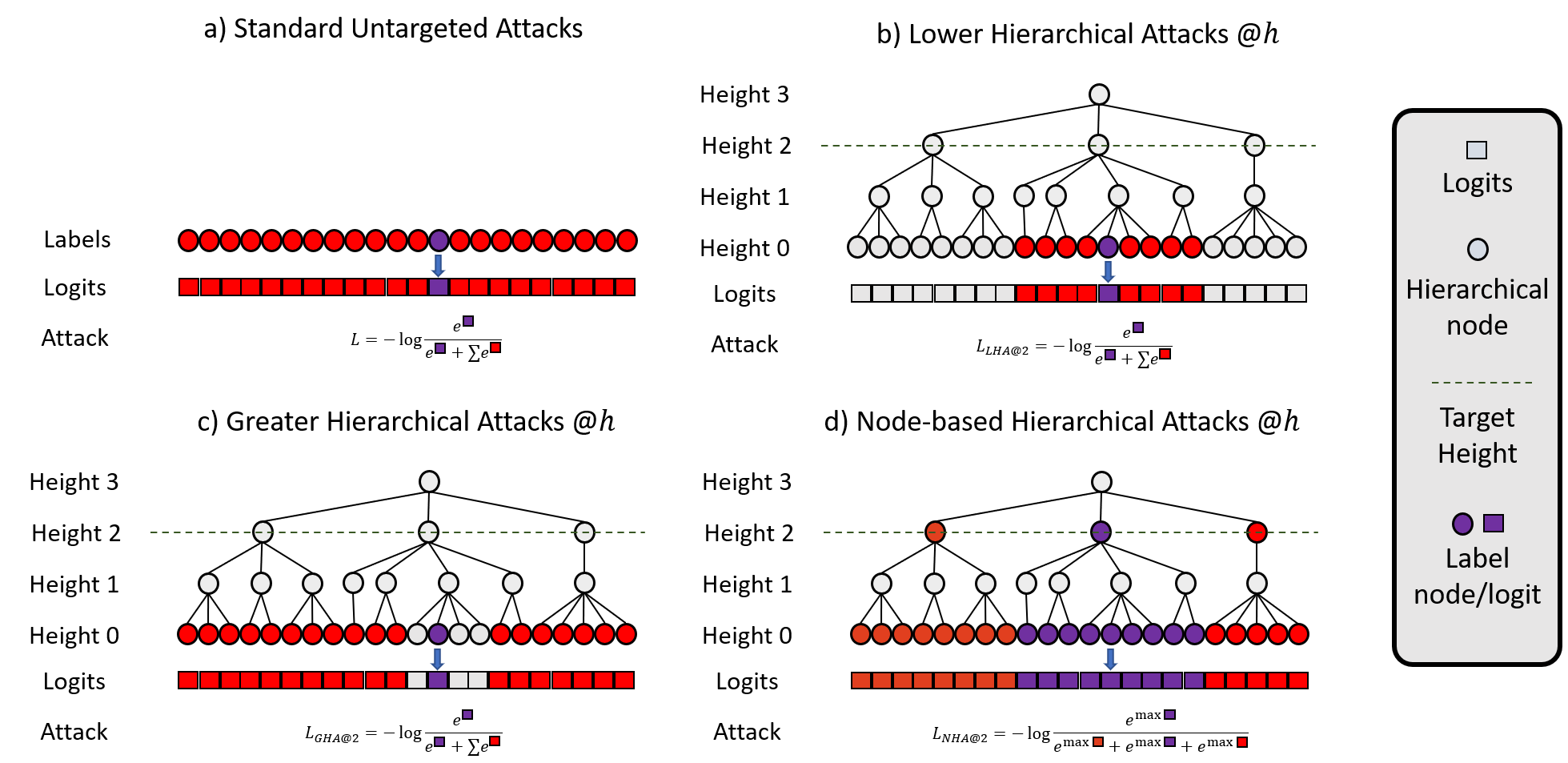

--attack-iter-evaluation ATTACKITERATIONSIf --evaluate uses as input hPGD, use the --hPGD flag to select between LHA, GHA or NHA and --hPGD-level to select the target height. To set the total number of attack iterations, use the flag --attack-iter-training.
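The hPGD options can be validated before launching an evaluation. This is a small sketch, assuming that `--hPGD` and `--hPGD-level` each take their value as the next argument; the helper name `hpgd_args` is hypothetical and not part of the repository.

```python
# Variants named in the text above.
HPGD_VARIANTS = ("LHA", "GHA", "NHA")


def hpgd_args(variant, level):
    """Return the extra main.py arguments for an hPGD evaluation.

    Assumption: each flag is followed by its value as a separate argument.
    """
    if variant not in HPGD_VARIANTS:
        raise ValueError(f"variant must be one of {HPGD_VARIANTS}")
    return ["--evaluate", "hPGD", "--hPGD", variant, "--hPGD-level", str(level)]
```

These arguments would replace the `--evaluate ATTACK` part of the evaluation command; the remaining flags stay as shown.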

If you found our paper or code useful, please cite our work:

@InProceedings{Jeanneret_2021_ICCV,
    author = {Jeanneret, Guillaume and Perez, Juan C. and Arbelaez, Pablo},
    title = {A Hierarchical Assessment of Adversarial Severity},
    booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops},
    month = {October},
    year = {2021},
    pages = {61-70}
}

This code is based on the official repository of Bertinetto's Making Better Mistakes.

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. Commercial licenses available upon request.