The Retrieval-Augmented Generation (RAG) pattern lets businesses use the reasoning capabilities of LLMs, applying their existing models to process new data and generate responses based on it. Because the data can be updated periodically without fine-tuning, RAG simplifies the integration of LLMs into businesses.

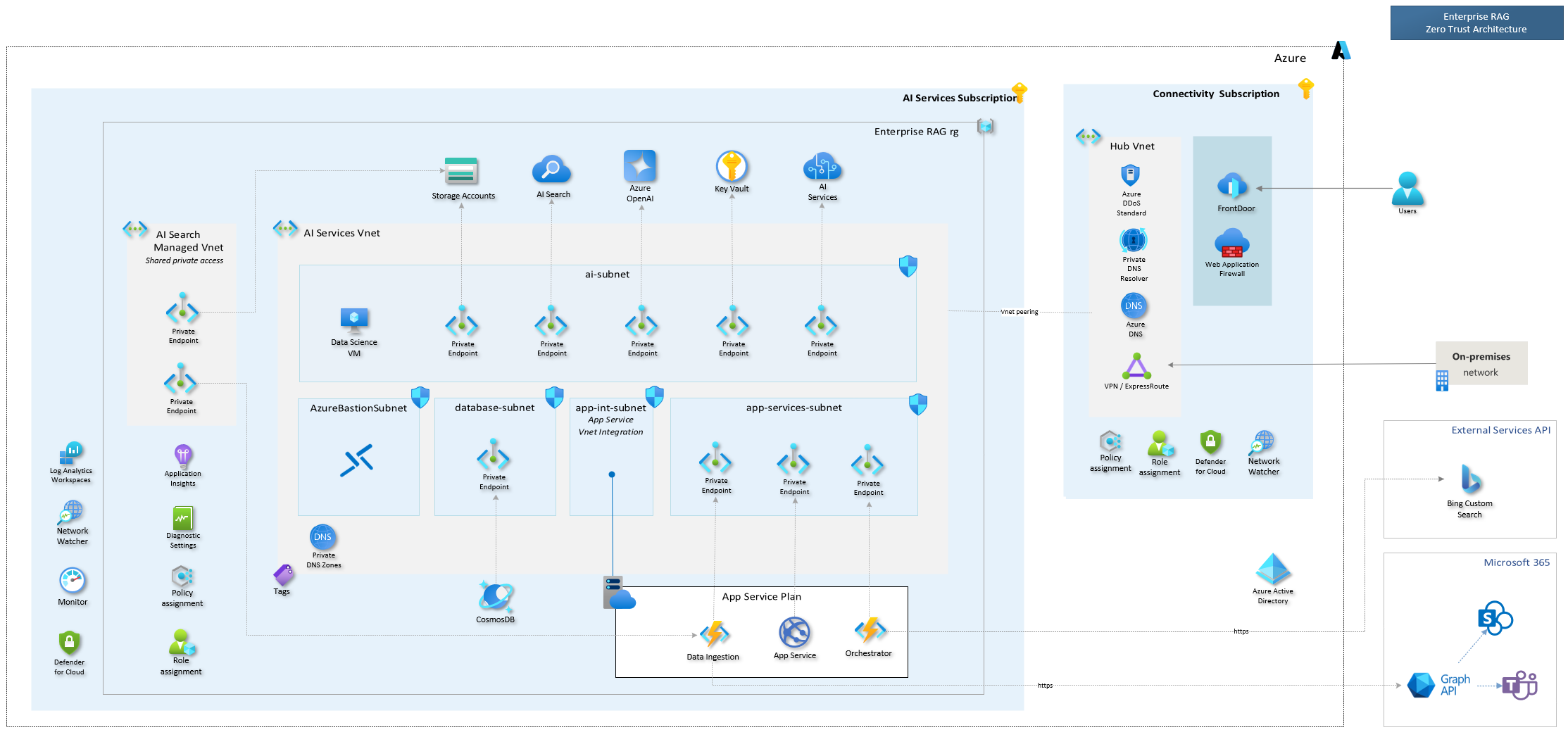

The Enterprise RAG Solution Accelerator (GPT-RAG) offers a robust architecture tailored for enterprise-grade deployment of the RAG pattern. It produces grounded responses and is built on Zero Trust security and Responsible AI principles, supporting availability, scalability, and auditability. It is ideal for organizations moving from exploration and proof-of-concept (PoC) stages to MVPs and full-scale production.

- Discord channel: Enterprise RAG - Connected Community

GPT-RAG follows a modular approach and consists of three components, each with a specific function:

- Data Ingestion - Optimizes data chunking and indexing for the RAG retrieval step.

- Orchestrator - Coordinates calls to LLMs and knowledge bases to generate optimal responses for users.

- App Front-End - Uses the Backend for Front-End pattern to provide a scalable and efficient web interface.

Learn more about the RAG pattern and the GPT-RAG architecture.

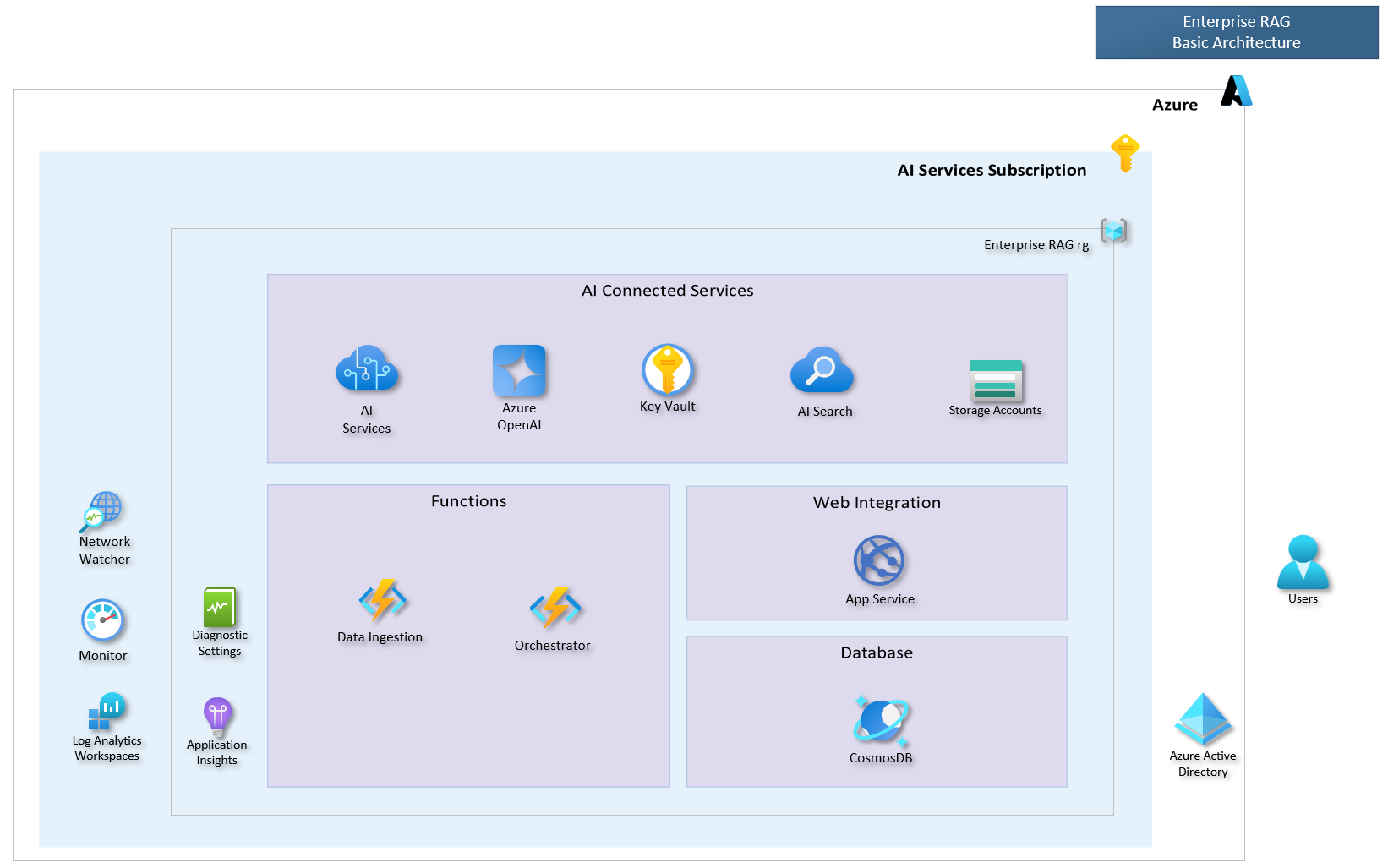

- Basic Architecture Deployment: for quick demos with no network isolation ⚙️

Learn how to quickly set up the basic architecture for scenarios without network isolation. Click the link to proceed.

- Standard Zero-Trust Architecture Deployment: fastest Zero-Trust deployment option⚡

Deploy the solution accelerator using the standard zero-trust architecture with pre-configured solution settings. No customization needed. Click the link to proceed.

- Custom Zero-Trust Architecture Setup: most used ⭐

Explore options for customizing the deployment of the solution accelerator with a zero-trust architecture, adjusting solution settings to your needs. Click the link to proceed.

- Step-by-Step Manual Setup: Zero-Trust Architecture: hands-on approach 🛠️

For those who prefer complete control, follow this detailed guide to manually set up the solution accelerator with a zero-trust architecture. Click the link to proceed.

This guide walks you through the deployment process of Enterprise RAG. Two deployment options are available: Basic Architecture and Zero Trust Architecture. Before beginning the deployment, make sure you have prepared all the necessary tools and services listed in the Pre-requisites section.

Pre-requisites

- Azure Developer CLI: Download azd for Windows or other operating systems.

- PowerShell 7+ with the Az module (Windows only): PowerShell, Az module

- Git: Download Git

- Node.js 16+: Windows/macOS, Linux/WSL

- Python 3.11: Download Python

- Create an Azure AI services resource and agree to the Responsible AI terms **

** Required only if you have not created an Azure AI services resource in this subscription before.
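Before starting, you can confirm that the command-line prerequisites are on your `PATH`. The following is a minimal bash sketch, assuming a bash-compatible shell (on Windows, for example Git Bash or WSL) and a `sort` that supports `-V`. The helper names `version_ge`, `extract_version`, and `check_tool` are hypothetical and not part of the accelerator, and the script does not check the PowerShell Az module or the Responsible AI terms.

```shell
#!/usr/bin/env bash
# Illustrative prerequisite check; not part of the GPT-RAG repository.

# version_ge A B: succeed when version A >= version B (uses `sort -V` ordering).
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n1)" = "$2" ]
}

# extract_version TEXT: print the first dotted version number found in TEXT.
extract_version() {
  printf '%s\n' "$1" | grep -oE '[0-9]+(\.[0-9]+)+' | head -n1
}

# check_tool NAME MIN_VERSION COMMAND...: run COMMAND, parse its version
# output, and report whether it meets MIN_VERSION.
check_tool() {
  local name="$1" min="$2" out ver
  shift 2
  if ! out="$("$@" 2>&1)"; then
    echo "MISSING: $name"
    return 1
  fi
  ver="$(extract_version "$out")"
  if [ -n "$ver" ] && version_ge "$ver" "$min"; then
    echo "OK: $name $ver"
  else
    echo "TOO OLD: $name ${ver:-unknown} (need >= $min)"
    return 1
  fi
}

# Minimums follow the list above; accepting Python newer than 3.11 is an
# assumption. On Windows, the Python command may be `python` instead.
check_tool "Azure Developer CLI" 0 azd version || true
check_tool "Git" 0 git --version || true
check_tool "Node.js" 16 node --version || true
check_tool "Python" 3.11 python3 --version || true
```

Each check reports `OK`, `TOO OLD`, or `MISSING` and never aborts the script, so you see the status of every tool in a single run.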

For quick demonstrations or proof-of-concept projects without network isolation requirements, you can deploy the accelerator using its basic architecture.

The deployment procedure is simple: install the prerequisites listed above, then follow these four steps using the Azure Developer CLI (azd) in a terminal:

1 Download the repository:

```
azd init -t azure/gpt-rag
```

2 Log in to Azure:

2.a Azure Developer CLI:

```
azd auth login
```

2.b Azure CLI:

```
az login
```

3 Build the infrastructure and deploy the components:

```
azd up
```

4 Add source documents to object storage:

Upload your documents to the 'documents' container in the default storage account, whose name starts with 'strag', as shown in the sample image below.
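If you prefer the command line to the portal, the upload can be scripted with the Azure CLI. The sketch below assumes you are already signed in with `az login`, that the storage account accepts public network access (as in the basic architecture), and that your identity holds a data-plane role such as Storage Blob Data Contributor, which `--auth-mode login` requires. The helper names `first_strag_account` and `upload_documents`, the folder `./my-docs`, and the resource group `rg-gptrag` are hypothetical.

```shell
#!/usr/bin/env bash
# Illustrative sketch: upload local files to the 'documents' container of the
# default GPT-RAG storage account (the account whose name starts with 'strag').

# first_strag_account: read storage account names (one per line) on stdin and
# print the first one that starts with 'strag'.
first_strag_account() {
  grep -m1 '^strag'
}

# upload_documents SOURCE_DIR [RESOURCE_GROUP]: locate the account and upload
# every file under SOURCE_DIR to the 'documents' container.
upload_documents() {
  local src="$1" rg="${2:-}" account
  local list_args=(--query "[].name" --output tsv)
  if [ -n "$rg" ]; then
    list_args+=(--resource-group "$rg")
  fi
  account="$(az storage account list "${list_args[@]}" | first_strag_account)"
  if [ -z "$account" ]; then
    echo "No storage account whose name starts with 'strag' was found." >&2
    return 1
  fi
  # --auth-mode login uses your Microsoft Entra ID sign-in instead of account keys.
  az storage blob upload-batch \
    --account-name "$account" \
    --destination documents \
    --source "$src" \
    --auth-mode login
}

# Usage (hypothetical folder and resource group names):
# upload_documents ./my-docs rg-gptrag
```

Passing a resource group narrows the account search, which helps if the subscription holds more than one GPT-RAG deployment.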

Done! Basic deployment is completed.

Recommended: Add app authentication to your web app

For more secure and isolated deployments, you can opt for the Zero Trust architecture. It is ideal for production environments that require network isolation and stringent security measures.

Before deploying the Zero Trust architecture, review the prerequisites. Note that Node.js and Python are needed only for the second part of the process, which is carried out on the VM created during the deployment of this architecture.

The deployment procedure is similar to that of the Basic Architecture, but with some additional steps. For a detailed guide on deploying this option, refer to the instructions below:

1 Download the repository:

```
azd init -t azure/gpt-rag
```

2 Enable network isolation:

```
azd env set AZURE_NETWORK_ISOLATION true
```

3 Log in to Azure:

3.a Azure Developer CLI:

```
azd auth login
```

3.b Azure CLI:

```
az login
```

4 Provision the infrastructure:

```
azd provision
```

5 Next, use the Virtual Machine with the Bastion connection (created during step 4) to continue the deployment.

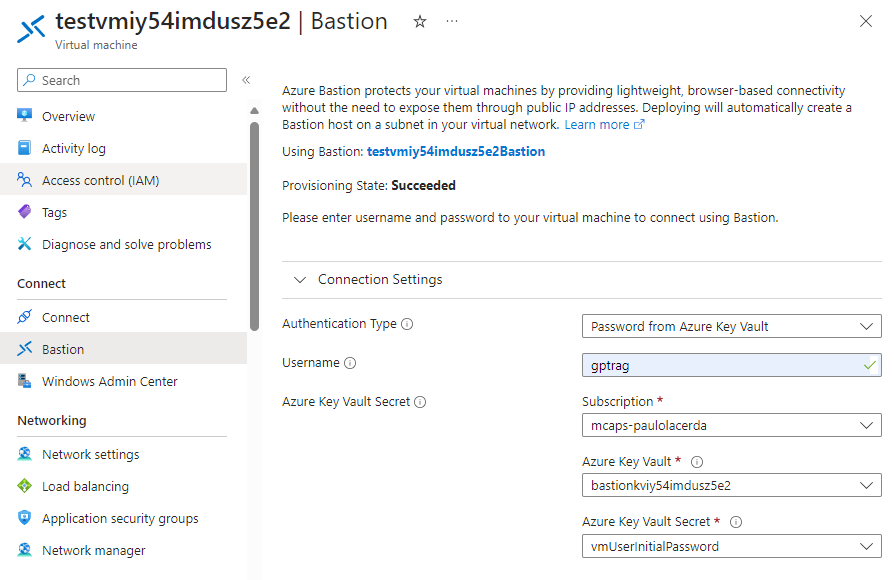

Log in to the created VM as the user `gptrag`, authenticating with the password stored in the key vault, as in the figure below:

6 After logging in to Windows, install PowerShell; the other prerequisites are already installed on the VM.

7 Open the command prompt and run the following command to update azd to the latest version:

```
choco upgrade azd
```

After updating azd, simply close and reopen the terminal.

8 Create a new directory, for example `deploy`, and change into it:

```
mkdir deploy
cd deploy
```

To complete the deployment, run the following commands in the command prompt:

```
azd init -t azure/gpt-rag
azd auth login
azd env refresh
azd package
azd deploy
```

Note: when running `azd init ...` and `azd env refresh`, use the same environment name, subscription, and region used in the initial provisioning of the infrastructure.
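One way to check the environment name, subscription, and region is to print the values `azd` stores for the current environment on both machines and compare them. This is a minimal bash sketch, assuming a bash-compatible shell on the VM (for example Git Bash, if available) and the standard `AZURE_ENV_NAME`, `AZURE_SUBSCRIPTION_ID`, and `AZURE_LOCATION` keys that `azd` writes once a subscription and location are chosen. The helper names `env_value` and `show_deployment_keys` are hypothetical; an empty value means the key is not set in that environment.

```shell
#!/usr/bin/env bash
# Illustrative sketch: print the azd environment values that should match
# between the original provisioning machine and the VM.

# env_value KEY: read `azd env get-values` output (KEY="value" lines) on stdin
# and print the value for KEY with double quotes removed.
env_value() {
  grep "^$1=" | head -n1 | cut -d= -f2- | tr -d '"'
}

# show_deployment_keys: list the keys that identify the target deployment.
show_deployment_keys() {
  local values key
  values="$(azd env get-values)" || return 1
  for key in AZURE_ENV_NAME AZURE_SUBSCRIPTION_ID AZURE_LOCATION; do
    printf '%s=%s\n' "$key" "$(printf '%s\n' "$values" | env_value "$key")"
  done
}

# Usage: run from the project directory on each machine and compare the output.
# show_deployment_keys
```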

Done! Zero trust deployment is completed.

Recommended: Add app authentication to your web app

The standard deployment process sets up Azure resources and deploys the accelerator components with a standard configuration. To tailor the deployment to your specific needs, follow the steps in the Custom Deployment section for further customization options.

Expand your data retrieval capabilities by integrating new data sources such as Bing Custom Search, SQL Server, and Teradata. For detailed instructions, refer to the AI Integration Hub page.

If you encounter any errors during the deployment process, consult the Troubleshooting page for guidance on resolving common issues.

To assess the performance of your deployment, refer to the Performance Testing guide for testing methodologies and best practices.

Learn how to query and analyze conversation data by following the steps outlined in the How to Query and Analyze Conversations document.

Understand the cost implications of your deployment by reviewing the Pricing Model for detailed pricing estimation.

Ensure proper governance of your deployment by following the guidelines provided in the Governance Model.

This project welcomes contributions and suggestions. Most contributions require you to agree to a Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

When you submit a pull request, a CLA bot will automatically determine whether you need to provide a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions provided by the bot. You will only need to do this once across all repos using our CLA.

This project has adopted the Microsoft Open Source Code of Conduct. For more information see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos are subject to those third-party's policies.