Xiaoming Li, Xinyu Hou, Chen Change Loy

We propose a

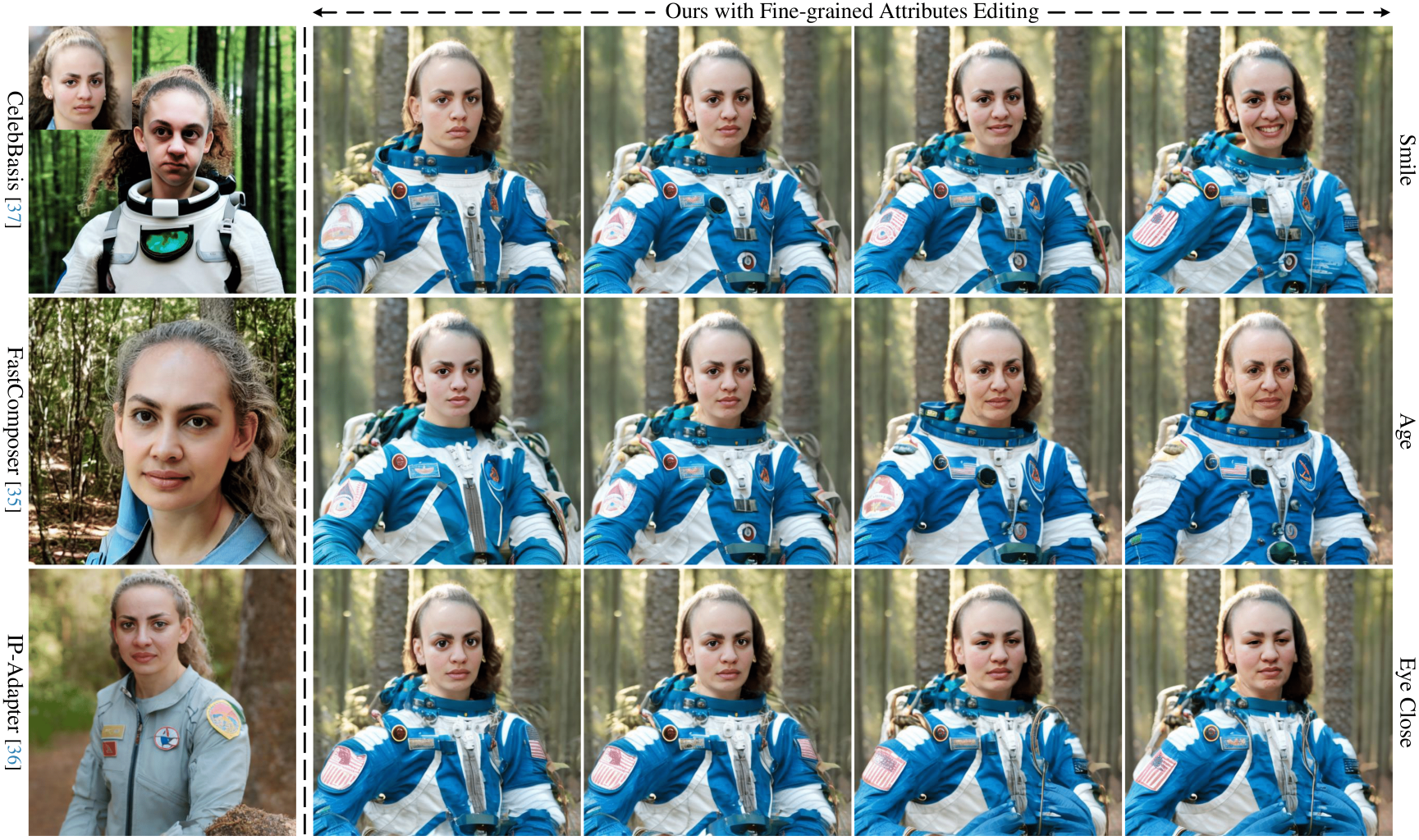

Given a single reference image (thumbnail in the top left), our

- Release the source code and model.

- Extend to more diffusion models.

conda create -n wplus python=3.8

conda activate wplus

pip install torch==2.0.1 torchvision==0.15.2 --index-url https://download.pytorch.org/whl/cu118

pip install -r requirements.txt

BASICSR_EXT=True pip install basicsr

If you encounter StyleGAN-related errors that are hard to resolve, you can create a new environment and use a lower torch version, e.g., 1.12.1+cu113. You can also refer to the installation instructions of our MARCONet.
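Before switching torch versions, it can help to confirm what is actually installed in the active environment. The following is a minimal sketch using only the standard library; the helper name `installed_versions` is hypothetical and is not part of this repository.

```python
import sys
from importlib import metadata


def installed_versions(packages):
    """Return {package name: version string, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # Print the interpreter version and the packages pinned in the steps above.
    print("python", sys.version.split()[0])
    for name, version in installed_versions(["torch", "torchvision", "basicsr"]).items():
        print(name, version or "not installed")
```

If `torch` reports a version other than the one you intended, the environment you activated is probably not the one you installed into.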

python script/download_weights.py

For in-the-wild face images:

CUDA_VISIBLE_DEVICES=0 python ./script/ProcessWildImage.py -i ./test_data/in_the_wild -o ./test_data/in_the_wild_Result -n

For aligned face images:

CUDA_VISIBLE_DEVICES=0 python ./script/ProcessWildImage.py -i ./test_data/aligned_face -o ./test_data/aligned_face_Result

# Parameters:

-i: input path

-o: save path

-n: align the face as in FFHQ. Use this for in-the-wild images.

-s: blind super-resolution using PSFRGAN. Use this for low-quality face images.
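To make the flag semantics concrete, here is a hedged sketch of a command-line parser with the same four options. It is not the code of `ProcessWildImage.py`; the `dest` names (`input_path`, `save_path`, `need_alignment`, `super_resolution`) are assumptions chosen for illustration.

```python
import argparse


def build_parser():
    """Build a parser mirroring the flags listed above (illustrative only)."""
    parser = argparse.ArgumentParser(description="Sketch of the documented flags")
    parser.add_argument("-i", dest="input_path", required=True, help="input path")
    parser.add_argument("-o", dest="save_path", required=True, help="save path")
    # Boolean switches: absent means False, present means True.
    parser.add_argument("-n", dest="need_alignment", action="store_true",
                        help="align faces as in FFHQ (for in-the-wild images)")
    parser.add_argument("-s", dest="super_resolution", action="store_true",
                        help="blind super-resolution (for low-quality faces)")
    return parser


# Parse the in-the-wild command shown earlier, passed as an explicit list.
example = build_parser().parse_args(
    ["-i", "./test_data/in_the_wild", "-o", "./test_data/in_the_wild_Result", "-n"]
)
print(example.need_alignment, example.super_resolution)
```

Because `-n` and `-s` are plain switches, the aligned-face command simply omits `-n` rather than passing a value.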

- The base model supports many pre-trained stable diffusion models, like runwayml/stable-diffusion-v1-5, dreamlike-art/dreamlike-anime-1.0, and ControlNet, without any training. See the details in test_demo.ipynb.

- You can adjust the residual_att_scale parameter to balance identity preservation and text alignment.
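One way to picture the role of `residual_att_scale` is as a weight on an identity branch that is added to the text-conditioned attention output, similar to the scaled residual used in IP-Adapter. The sketch below is a toy illustration under that assumption, using plain Python lists instead of tensors; `blend_attention` and its argument names are hypothetical and do not come from this repository.

```python
def blend_attention(text_out, identity_out, residual_att_scale):
    """Add a scaled identity branch to the text branch, element by element.

    Assumed behaviour: a scale of 0 leaves the text branch unchanged, and
    larger scales give the identity branch more influence.
    """
    if len(text_out) != len(identity_out):
        raise ValueError("both branches must have the same length")
    return [t + residual_att_scale * r for t, r in zip(text_out, identity_out)]


text_branch = [1.0, 2.0]
identity_branch = [0.5, -1.0]
for scale in (0.0, 0.5, 1.0):
    print(scale, blend_attention(text_branch, identity_branch, scale))
```

Under this reading, sweeping the scale over a few values for a fixed prompt and seed is a practical way to find the setting where the face is preserved without the prompt being ignored.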

- Prompt: 'a woman wearing a red shirt in a garden'

- Seed: 23

- e4e_path: ./test_data/e4e/1.pth

Emotion Editing

Lipstick Editing

Roundness Editing

Eye Editing using the anime model dreamlike-anime-1.0

ControlNet using control_v11p_sd15_openpose

See test_demo_stage1.ipynb

Face Image Inversion and Editing

Training Data for Stage 1:

- face image

- e4e vector

./train_face.sh

Training Data for Stage 2:

- face image

- e4e vector

- background mask

- in-the-wild image

- in-the-wild face mask

- in-the-wild caption

./train_wild.sh

For more details, please refer to ./train_face.py and ./train_wild.py.
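Since the two stages need different sets of files per sample, a quick pre-flight check can catch incomplete samples before training starts. The sketch below assumes one directory per sample and uses made-up file names; the actual data layout expected by the training scripts is not described here, so treat every name in it as a placeholder.

```python
from pathlib import Path

# Hypothetical file names: the lists above name the components,
# not how they are stored on disk.
STAGE1_FILES = ("face.png", "e4e.pth")
STAGE2_FILES = STAGE1_FILES + (
    "background_mask.png",
    "wild.png",
    "wild_face_mask.png",
    "wild_caption.txt",
)


def missing_components(sample_dir, stage):
    """Return the required files that are absent from sample_dir for a stage."""
    if stage == 1:
        required = STAGE1_FILES
    elif stage == 2:
        required = STAGE2_FILES
    else:
        raise ValueError("stage must be 1 or 2")
    sample_dir = Path(sample_dir)
    return [name for name in required if not (sample_dir / name).is_file()]
```

A sample that returns an empty list for `stage=1` but a non-empty one for `stage=2` is usable for face training only.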

You can convert the pytorch_model.bin to wplus_adapter.bin by running:

python script/transfer_pytorchmodel_to_wplus.py
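Conversion scripts of this kind in IP-Adapter-style projects usually load the training checkpoint, drop the weights that are not needed at inference time, and regroup the remaining ones by key prefix. The following sketch shows only that regrouping step on a plain dictionary. It is an assumption about what such a script does, not a copy of `transfer_pytorchmodel_to_wplus.py`, and the prefixes and group names in the example are placeholders; inspect the real checkpoint keys before relying on them.

```python
def regroup_by_prefix(state_dict, prefix_to_group):
    """Split a flat state dict into named groups, stripping the matched prefix.

    Keys that match no prefix are dropped, which is how weights that are
    not part of the adapter would be left out of the converted file.
    """
    grouped = {group: {} for group in prefix_to_group.values()}
    for key, value in state_dict.items():
        for prefix, group in prefix_to_group.items():
            if key.startswith(prefix):
                grouped[group][key[len(prefix):]] = value
                break
    return grouped


# Toy example with placeholder keys; real checkpoints hold tensors, not ints.
flat = {"unet.conv.weight": 0, "proj.linear.weight": 1, "adapter.to_k.weight": 2}
print(regroup_by_prefix(flat, {"proj.": "projection", "adapter.": "adapter"}))
```

In a real conversion, the flat dictionary would come from `torch.load("pytorch_model.bin", map_location="cpu")` and the grouped result would be written with `torch.save`.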

Since our

This project is licensed under NTU S-Lab License 1.0. Redistribution and use should follow this license.

This project is built based on the excellent IP-Adapter. We also refer to StyleRes, FreeU and PSFRGAN.

@article{li2023w-plus-adapter,

author = {Li, Xiaoming and Hou, Xinyu and Loy, Chen Change},

title = {When StyleGAN Meets Stable Diffusion: a $\mathcal{W}_+$ Adapter for Personalized Image Generation},

journal = {arXiv preprint arXiv: 2311.17461},

year = {2023}

}