This is a torch implementation of the paper A Neural Algorithm of Artistic Style by Leon A. Gatys, Alexander S. Ecker, and Matthias Bethge.

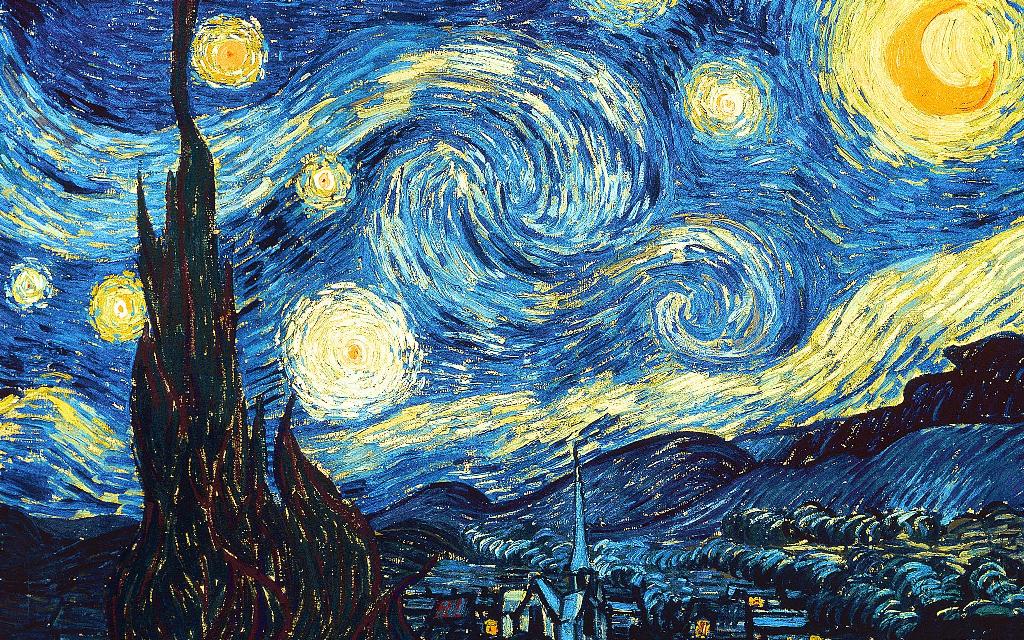

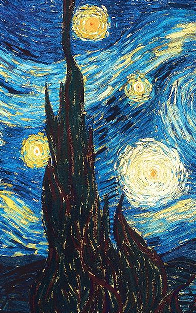

The paper presents an algorithm for combining the content of one image with the style of another image using convolutional neural networks. Here's an example that maps the artistic style of The Starry Night onto a night-time photograph of the Stanford campus:

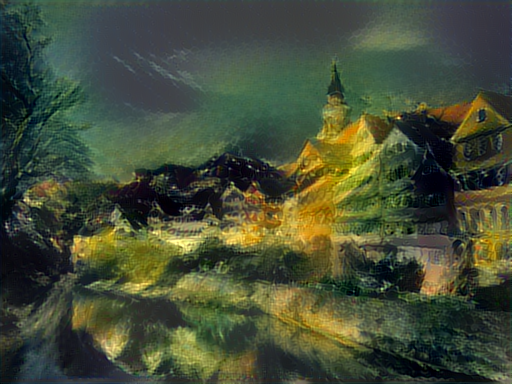

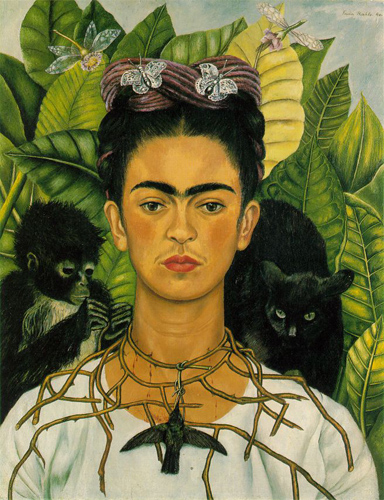

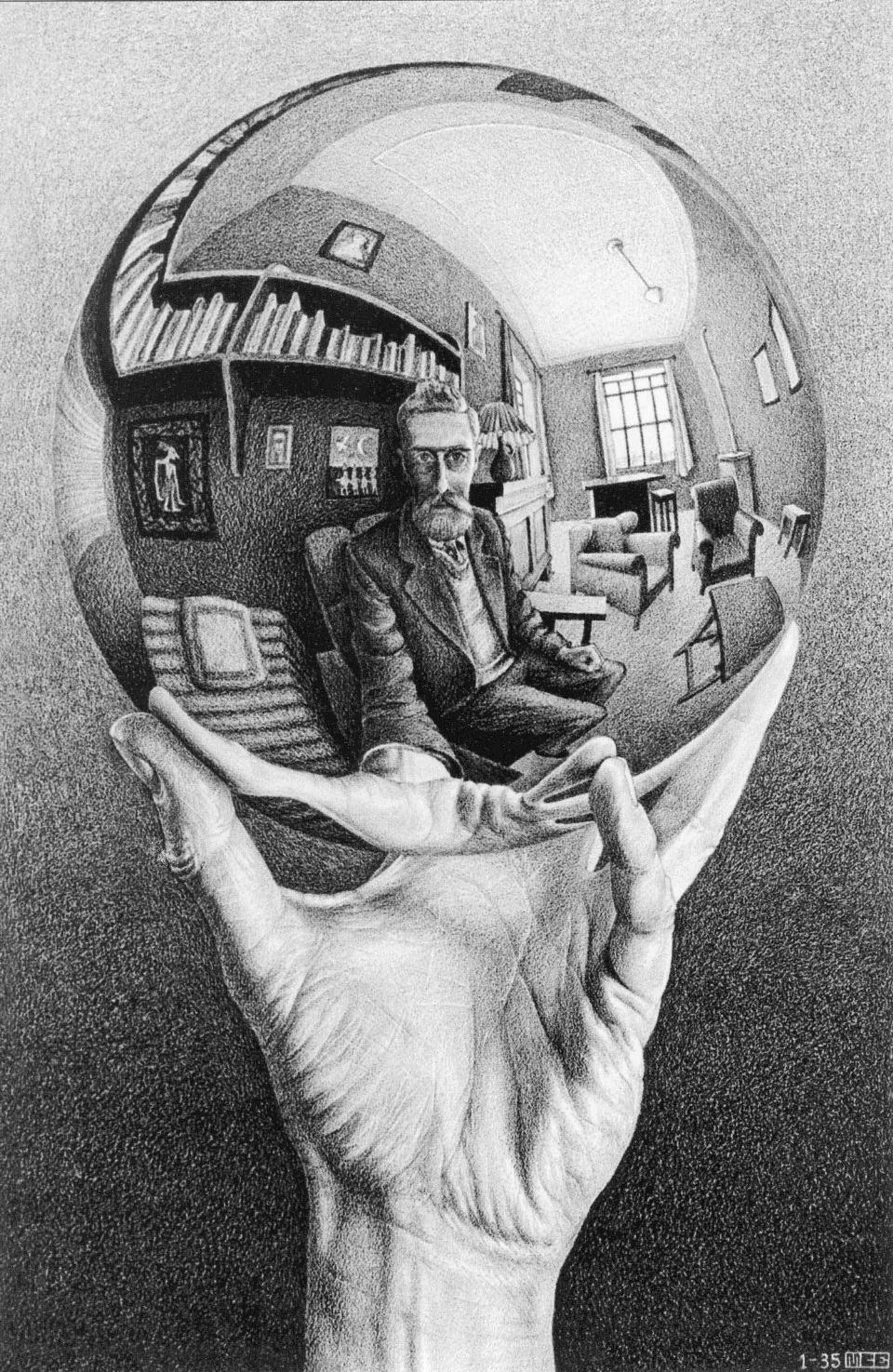

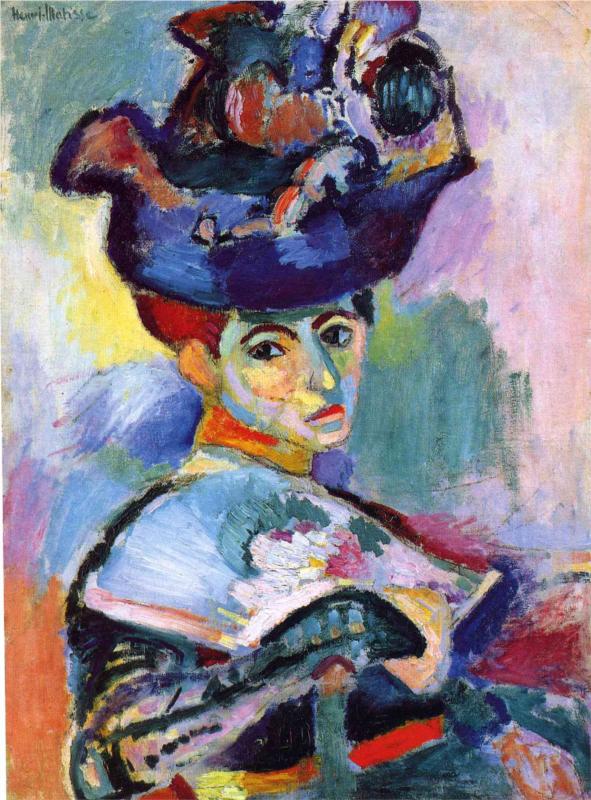

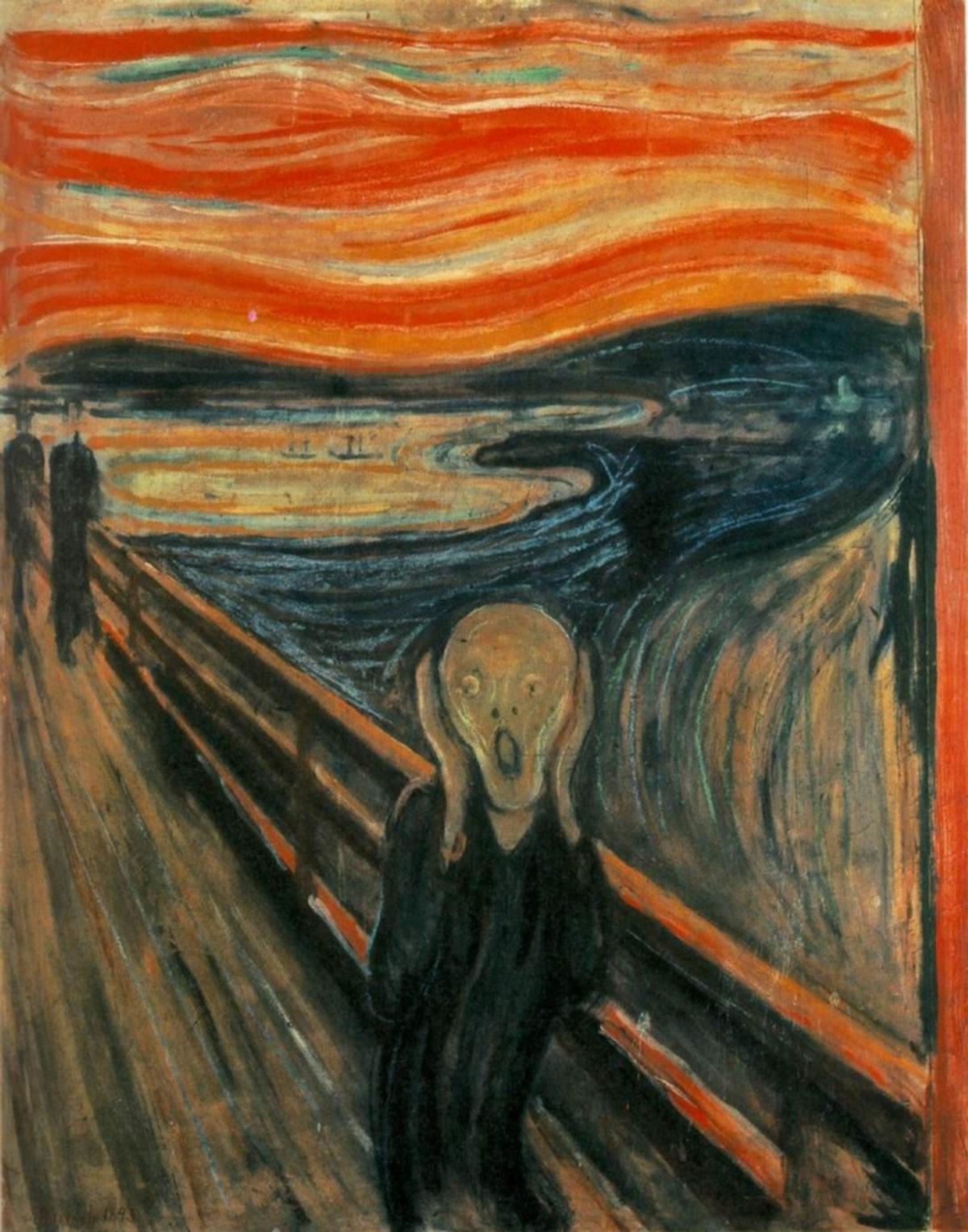

Applying the style of different images to the same content image gives interesting results. Here we reproduce Figure 2 from the paper, which renders a photograph of the Tubingen in Germany in a variety of styles:

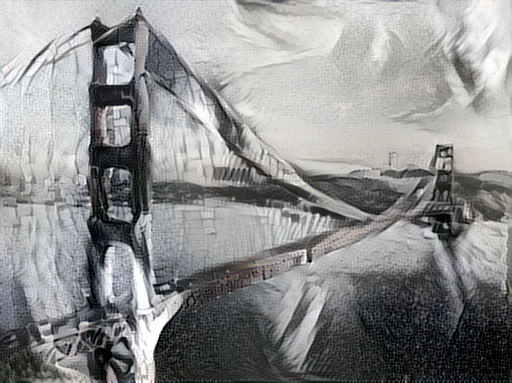

Here are the results of applying the style of various pieces of artwork to this photograph of the golden gate bridge:

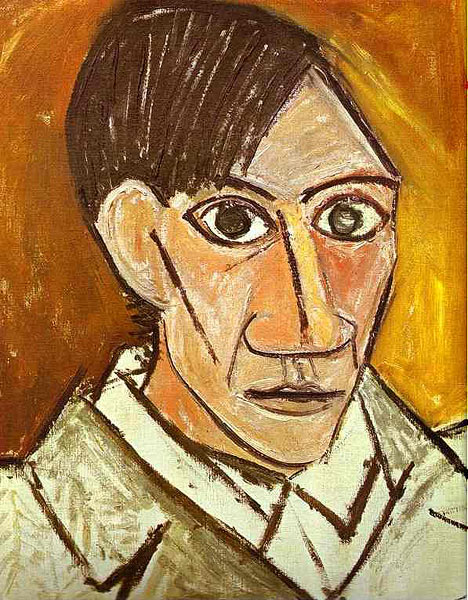

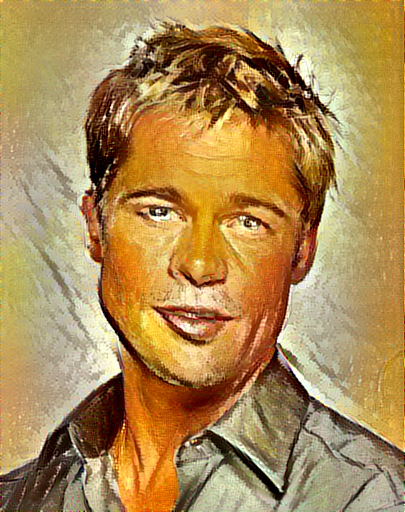

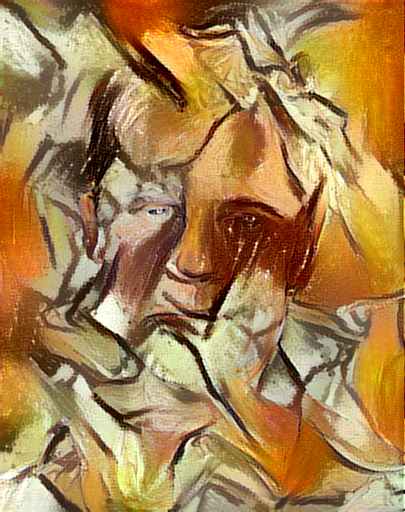

The algorithm allows the user to trade-off the relative weight of the style and content reconstruction terms, as shown in this example where we port the style of Picasso's 1907 self-portrait onto Brad Pitt:

Dependencies:

Optional dependencies:

- CUDA 6.5+

- cudnn.torch

NOTE: If your machine does not have CUDA installed, then you may need to install loadcaffe manually like this:

git clone https://github.com/szagoruyko/loadcaffe.git

# Edit the file loadcaffe/loadcaffe-1.0-0.rockspec

# Delete lines 21 and 22 that mention cunn and inn

luarocks install loadcaffe/loadcaffe-1.0-0.rockspec

After installing dependencies, you'll need to run the following script to download the VGG model:

sh models/download_models.sh

Basic usage:

th neural_style.lua -style_image <image.jpg> -content_image <image.jpg>

Options:

-image_size: Maximum side length (in pixels) of of the generated image. Default is 512.-gpu: Zero-indexed ID of the GPU to use; for CPU mode set-gputo -1.

Optimization options:

-content_weight: How much to weight the content reconstruction term. Default is 0.1-style_weight: How much to weight the style reconstruction term. Default is 1.0.-num_iterations: Default is 1000.

Output options:

-output_image: Name of the output image. Default isout.png.-print_iter: Print progress everyprint_iteriterations. Set to 0 to disable printing.-save_iter: Save the image everysave_iteriterations. Set to 0 to disable saving intermediate results.

Other options:

-proto_file: Path to thedeploy.txtfile for the VGG Caffe model.-model_file: Path to the.caffemodelfile for the VGG Caffe model.-backend:nnorcudnn. Default isnn.cudnnrequires cudnn.torch.

On a GTX Titan X, running 1000 iterations of gradient descent with -image_size=512 takes about 2 minutes.

In CPU mode on an Intel Core i7-4790k, running the same takes around 40 minutes.

Most of the examples shown here were run for 2000 iterations, but with a bit of parameter tuning most images will

give good results within 1000 iterations.

Images are initialized with white noise and optimized using L-BFGS.

We perform style reconstructions using the conv1_1, conv2_1, conv3_1, conv4_1, and conv5_1 layers

and content reconstructions using the conv4_2 layer. As in the paper, the five style reconstruction losses have

equal weights.