This markdown file only summarizes the notebook I made. It does not reflect the whole process. It only aims to answer the question "What has finally been done?", not how or why. For instance, I will not discuss GridSearch in detail here. To see the full notebook, you can refer to:

- The original notebook: Axel_THEVENOT_Python_For_Data_Analysis.ipynb

- Its HTML version: Axel_THEVENOT_Python_For_Data_Analysis.html

- Its HTML version without code inputs, which you can read as a full report of the project: Axel_THEVENOT_Python_For_Data_Analysis_report.html

On the client side, you will be asked to create a matrix of features to predict on. Each row is a list of features ordered as follows:

| Feature | Format |

|---|---|

| Date | dd/mm/yyyy |

| Hour | int |

| Temperature | °C |

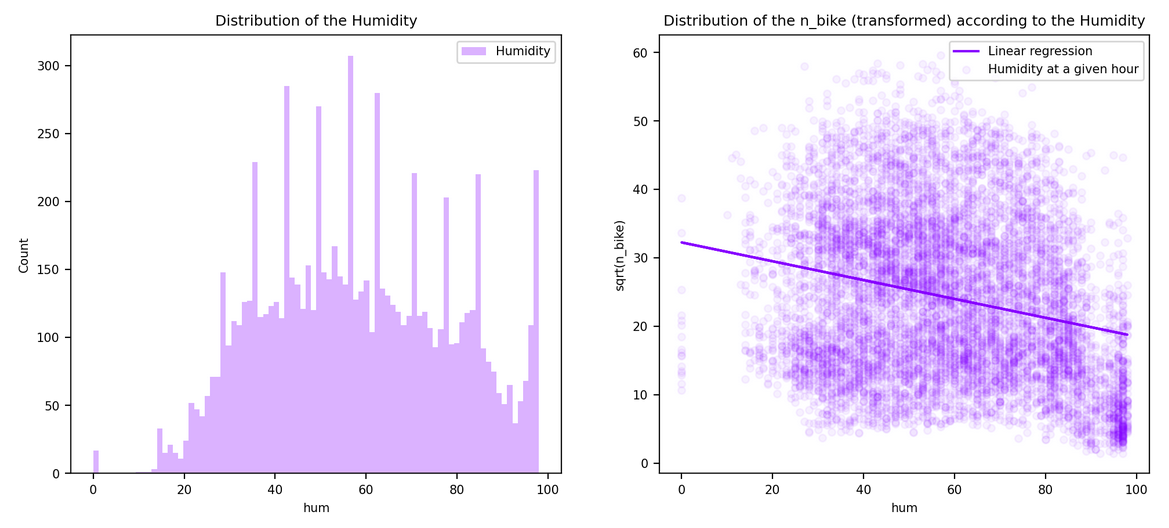

| Humidity | % |

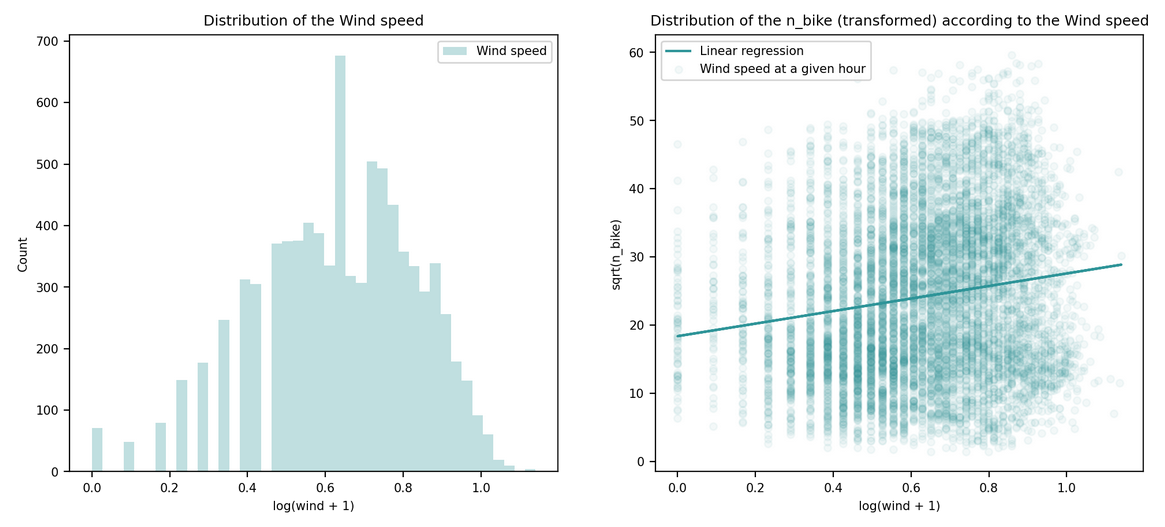

| Wind speed | m/s |

| Visibility | 10m |

| Dew point temperature | °C |

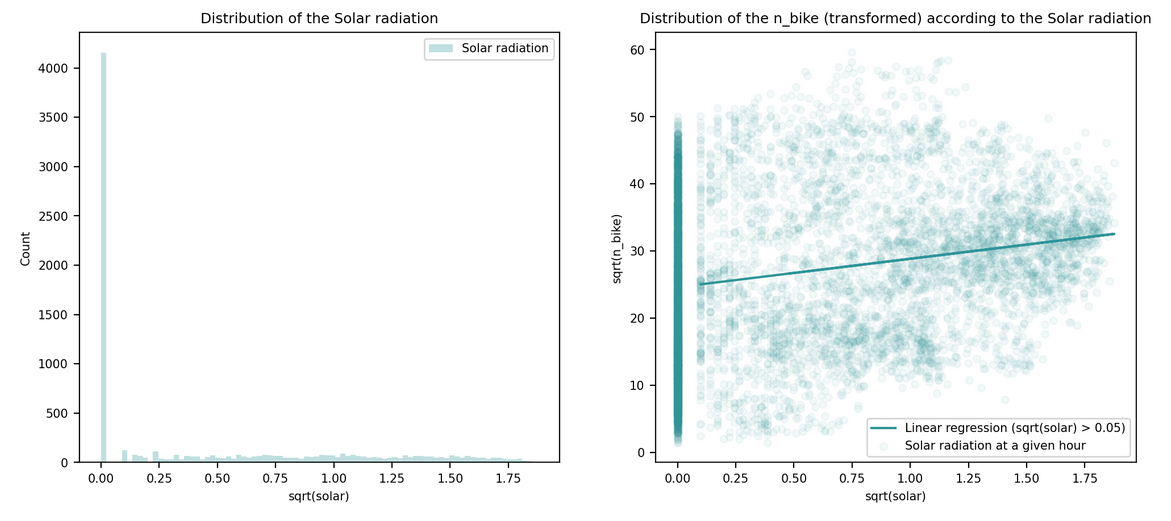

| Solar Radiation | MJ/m2 |

| Rainfal | mm |

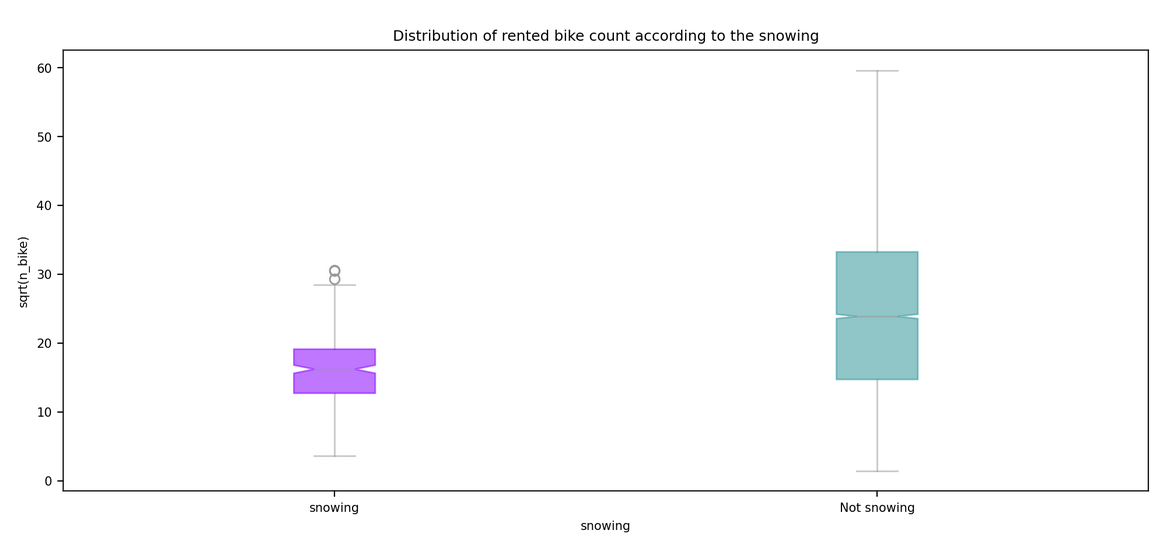

| Snowfall | mm |

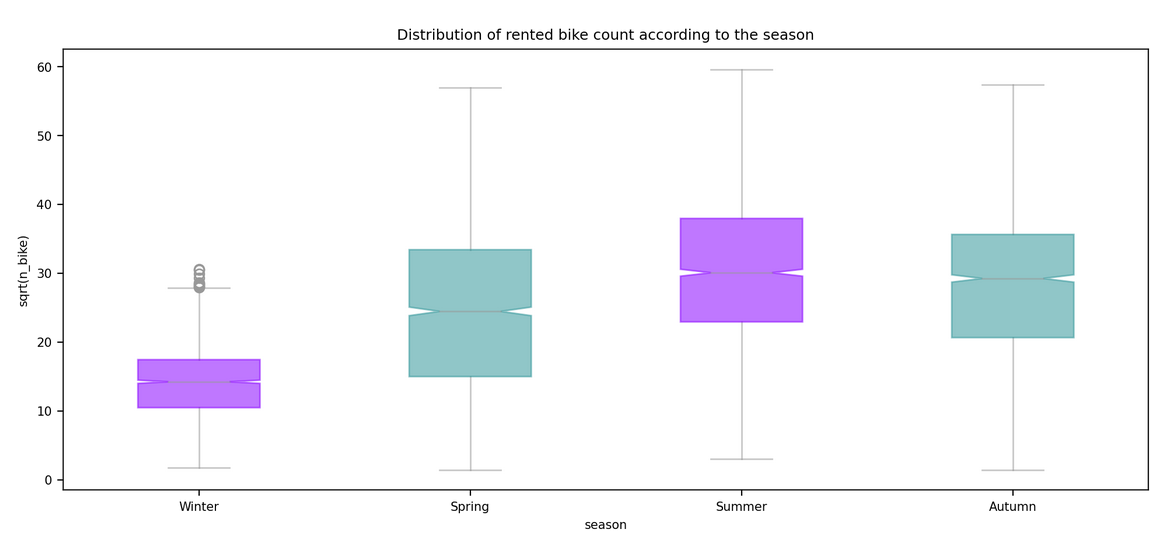

| Seasons | {"Winter", "Autumn", "Spring", "Summer"} |

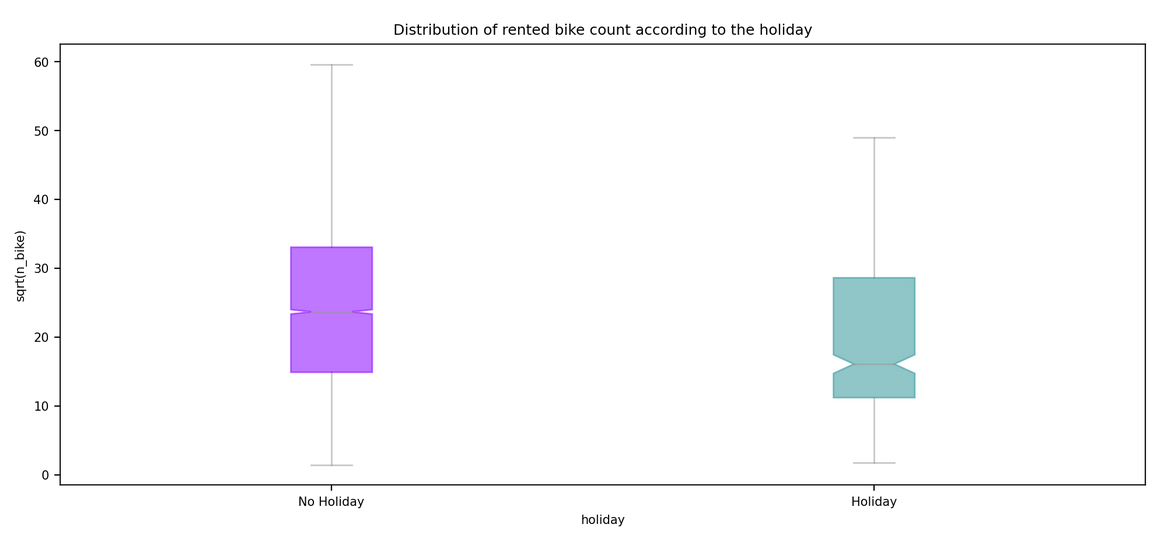

| Holiday | {"Holiday", "No Holiday"} |
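As an illustration, the raw matrix could be assembled as a pandas DataFrame whose columns follow the order of the table. The column labels and the two rows below are made up for the example; what `preprocess` actually expects is defined in preprocessing.py.

```python
import pandas as pd

# Hypothetical column labels, listed in the same order as the feature table.
RAW_COLUMNS = [
    "Date", "Hour", "Temperature", "Humidity", "Wind speed", "Visibility",
    "Dew point temperature", "Solar Radiation", "Rainfall", "Snowfall",
    "Seasons", "Holiday",
]

# Two invented rows, used only to show the expected formats.
rows = [
    ["01/12/2017", 8, -1.5, 40, 1.2, 2000, -13.0, 0.0, 0.0, 0.0, "Winter", "No Holiday"],
    ["15/07/2018", 18, 29.3, 65, 2.1, 1800, 22.0, 1.4, 0.0, 0.0, "Summer", "Holiday"],
]

# One row per sample to predict, one column per raw feature.
X_raw = pd.DataFrame(rows, columns=RAW_COLUMNS)
```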

You can run this server from a terminal with `python deployed_model.py` or `python3 deployed_model.py`, depending on your OS and Python version.

From the preprocessing.py file, I recreate the preprocessing function:

- set the holiday feature as a boolean

- extract time features

- transform meteorological features

- complete the preprocessing with one-hot encoding on categorical features and normalization/standardization on numerical ones

```python
import requests

from preprocessing import preprocess

X = None  # Matrix of raw features (replace with your own data)

url = 'http://localhost:5000/predict'  # API request URL


def serialize(df):
    """Convert a DataFrame into a JSON-serializable list of rows."""
    return df.values.tolist()


# Preprocess the feature matrix
X = preprocess(X)

# Request the API (serialize only once, right before sending)
r = requests.post(url, json={'inputs': serialize(X)})

# Get the predictions
prediction = r.json()
```

My Iranian churn dataset was missing, so I decided to choose the Seoul bike sharing demand dataset found on the UC Irvine Machine Learning Repository.

Abstract

The dataset contains the count of public bikes rented at each hour in the Seoul Bike Sharing System, with the corresponding weather data and holiday information.

Data Set Information

Currently Rental bikes are introduced in many urban cities for the enhancement of mobility comfort. It is important to make the rental bike available and accessible to the public at the right time as it lessens the waiting time. Eventually, providing the city with a stable supply of rental bikes becomes a major concern. The crucial part is the prediction of bike count required at each hour for the stable supply of rental bikes. The dataset contains weather information (Temperature, Humidity, Windspeed, Visibility, Dewpoint, Solar radiation, Snowfall, Rainfall), the number of bikes rented per hour and date information.

The objective of the notebook is to analyze the dataset I was assigned and, from there, to produce:

- A PowerPoint presentation explaining the ins and outs of the problem, my reflections on the question raised, the different variables I have created, and how the problem fits into the context of the study. In this case, I will try to predict the number of bikes rented in Seoul based on temporal and meteorological features.

- A python code :

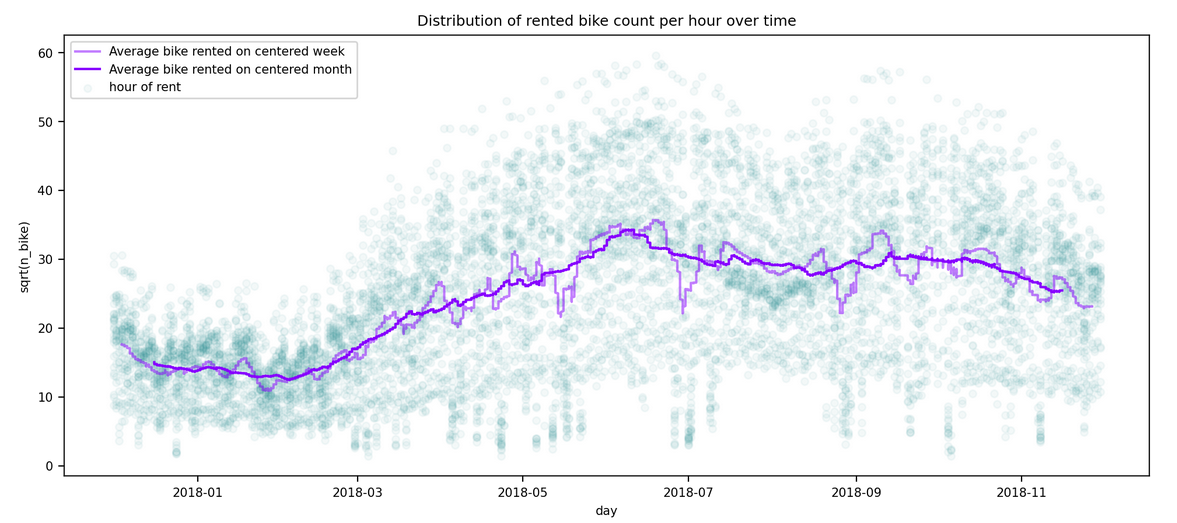

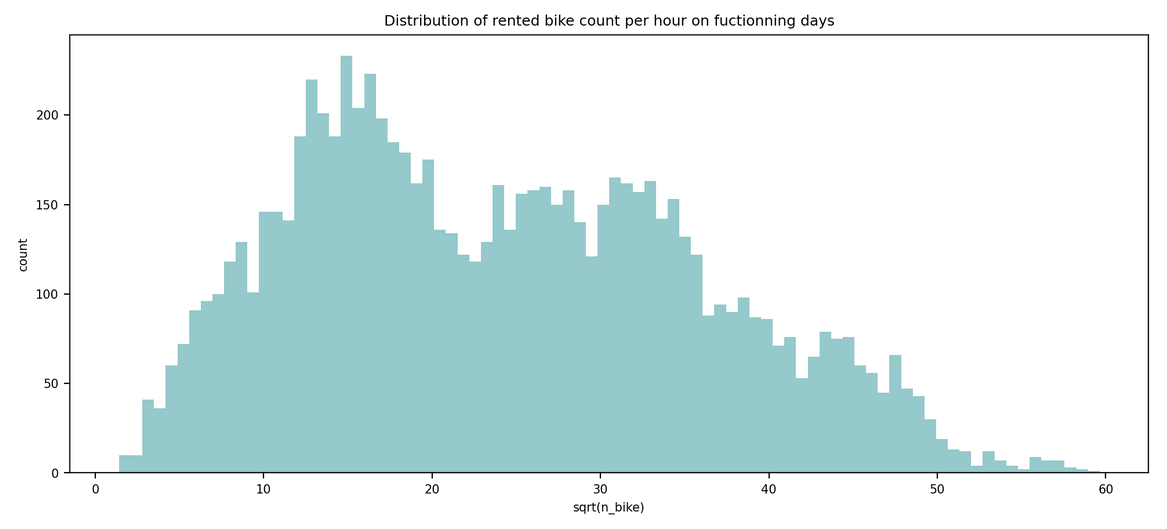

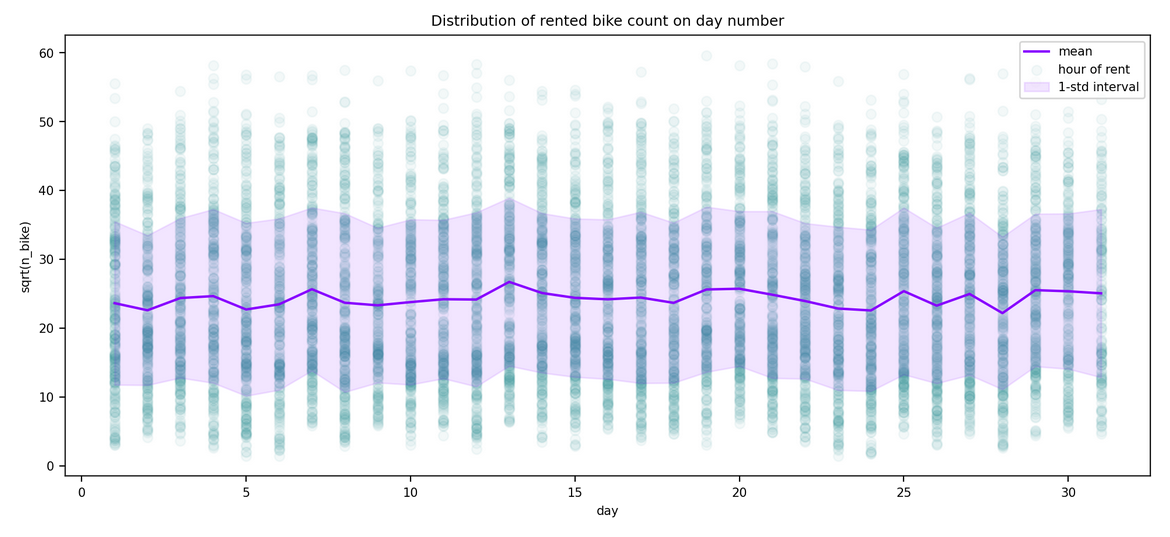

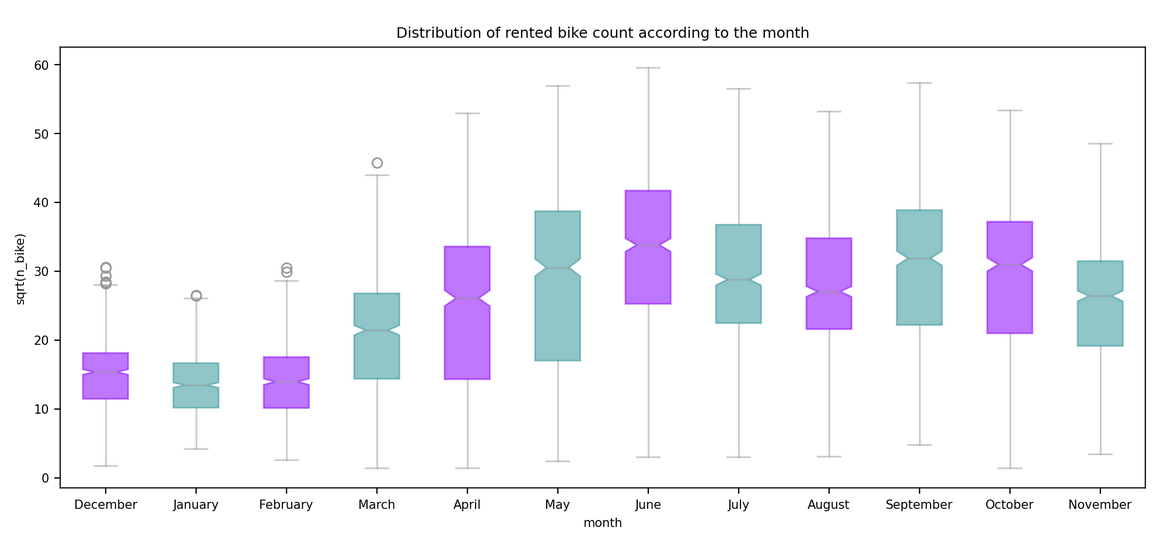

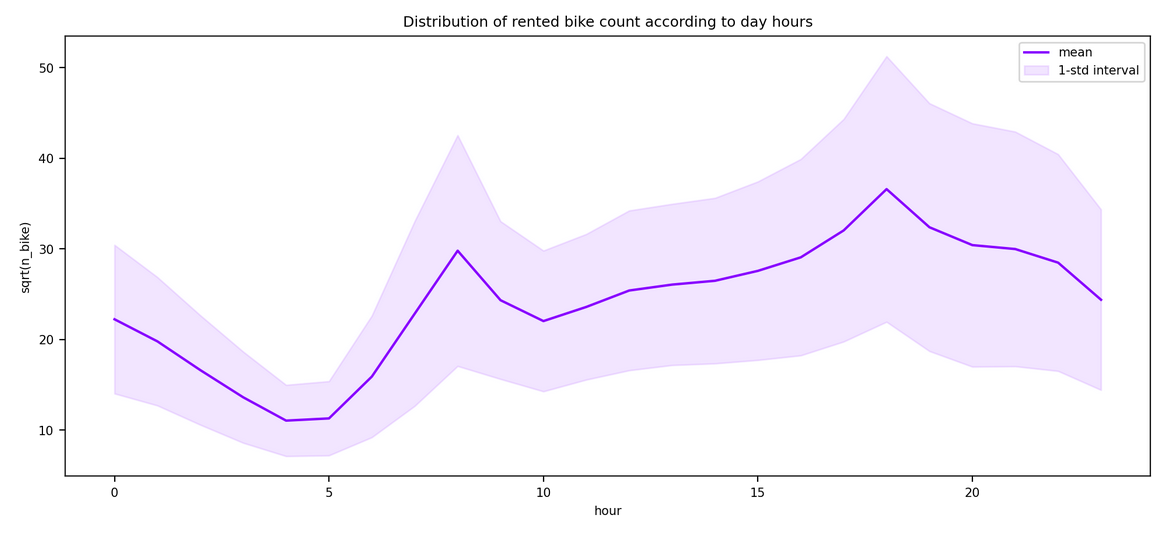

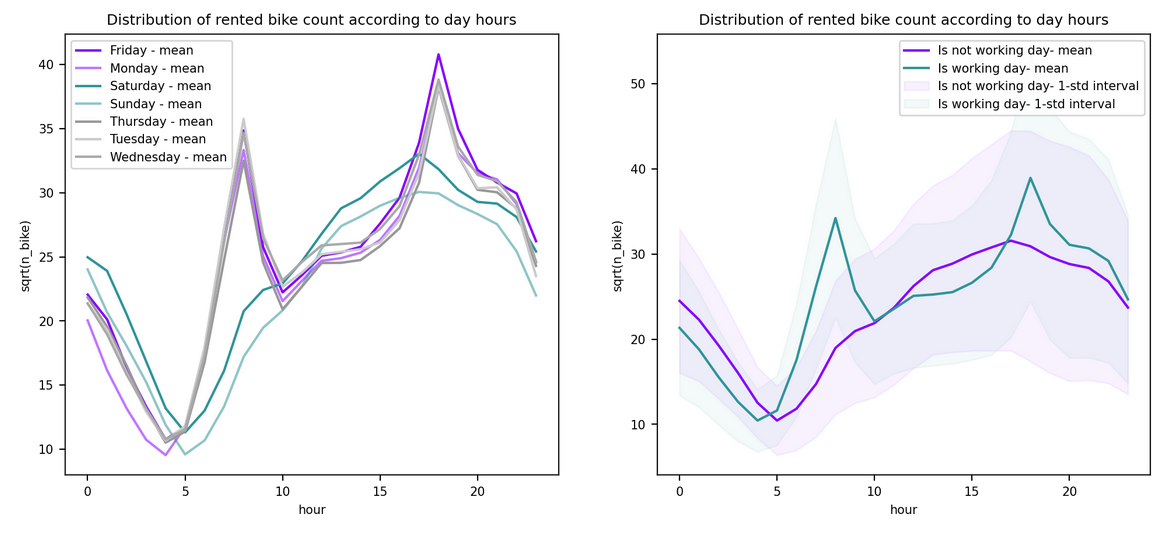

- Data-visualization - showing the link between the variables and the target

- Modeling - take scikit-learn and try several algorithms, change hyper parameters, make a search grid, compare results of my models in graphs.

- Transformation of the model into a Django of Flask API

Date: extract date information:

- year number

- month name

- day number

- week day name

- working day condition
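The date extraction listed above could look roughly like the sketch below. The function name, the `date` column name and the output column names are illustrative, and the working-day rule (Monday to Friday, ignoring holidays) is an assumption, since the exact rule is not stated here.

```python
import pandas as pd


def extract_date_features(df, date_col="date"):
    """Add year, month name, day number, week day name and a working-day flag."""
    out = df.copy()
    # Dates are given as dd/mm/yyyy in the raw feature matrix.
    dates = pd.to_datetime(out[date_col], format="%d/%m/%Y")
    out["year"] = dates.dt.year
    out["month"] = dates.dt.month_name()
    out["day"] = dates.dt.day
    out["week_day"] = dates.dt.day_name()
    # Assumption: a working day is Monday (0) to Friday (4); holidays are ignored.
    out["working_day"] = dates.dt.dayofweek < 5
    return out


example = extract_date_features(pd.DataFrame({"date": ["01/12/2017", "02/12/2017"]}))
```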

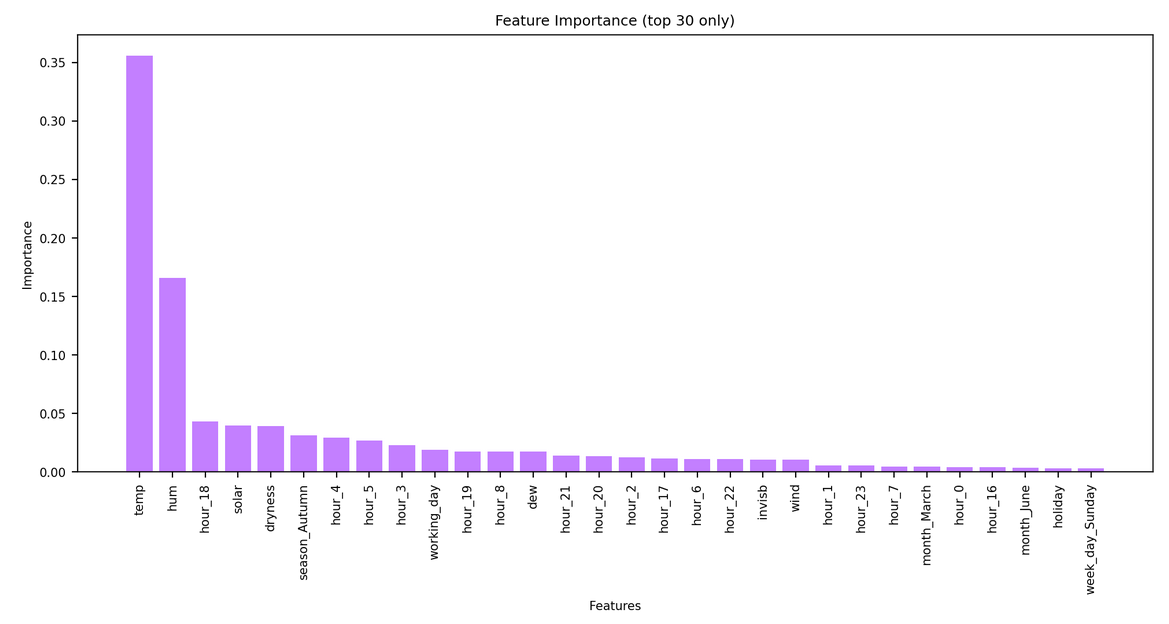

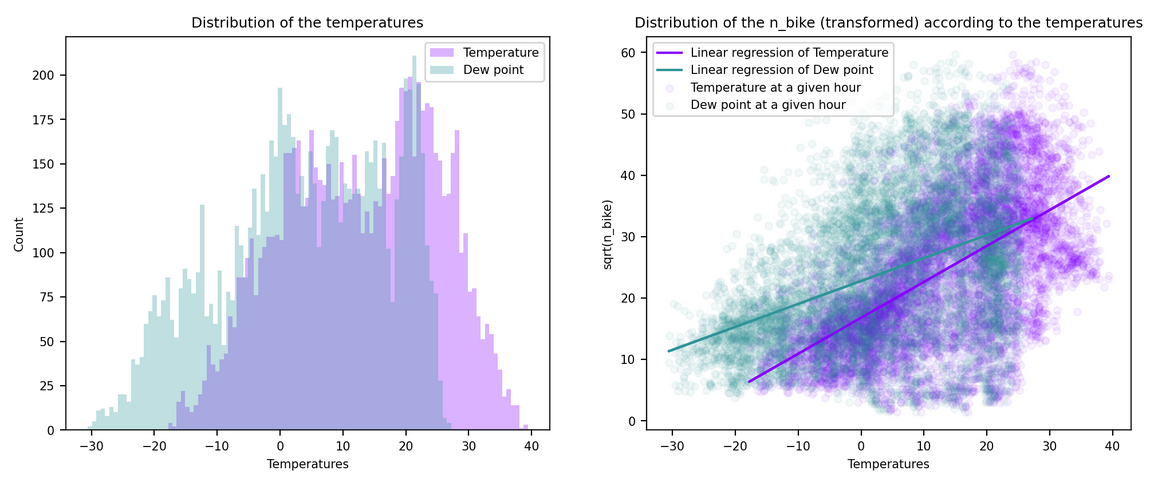

Temperature and Dew point temperature: Unchanged

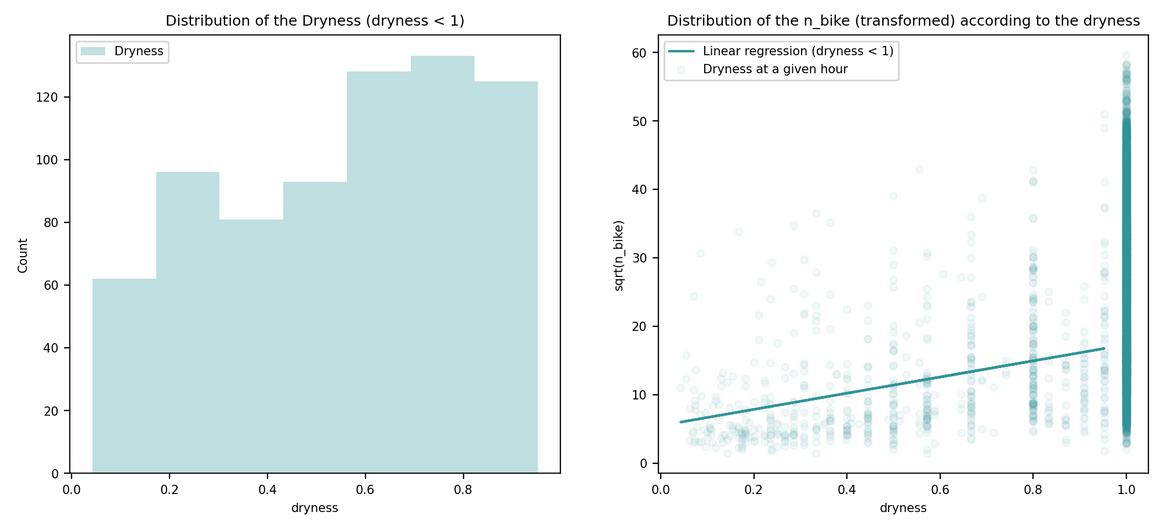

Rainfall: changed to dryness over the last two hours

Snowfall: changed to a snowing condition over the last 8 hours

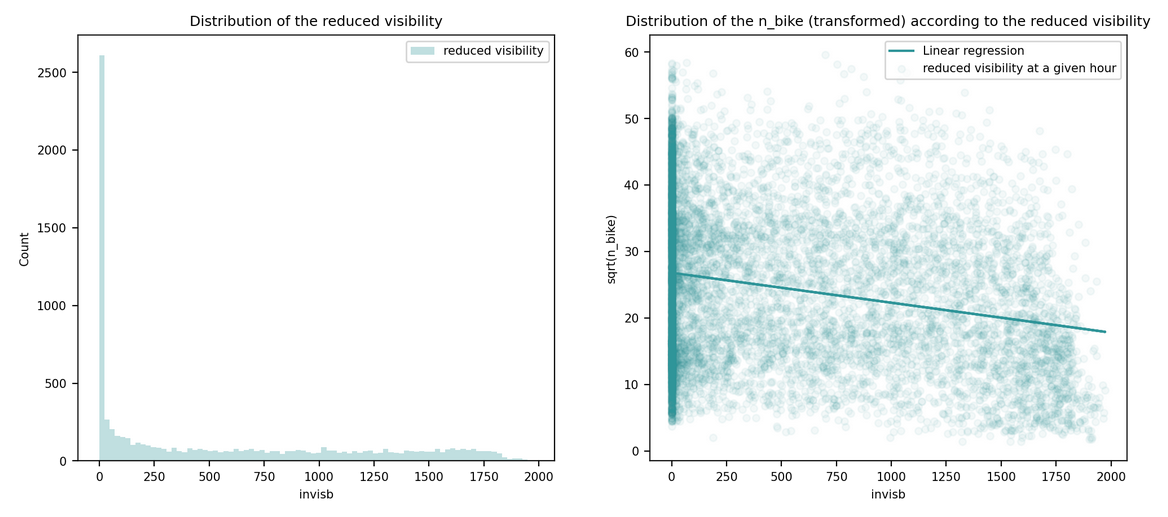

Visibility: changed to lost visibility compared to the default value
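The exact formulas behind these three transformations are not reproduced in this summary, so the sketch below is only one plausible reading: dryness as "no rain in the last two hourly rows", snowing as "some snow in the last 8 hourly rows", and lost visibility as the gap to the largest visibility observed. The function name, column names and these definitions are all assumptions.

```python
import pandas as pd


def add_weather_features(df, rain_window=2, snow_window=8):
    """Derive dryness, snowing and lost-visibility columns from hourly rows."""
    out = df.copy()
    # Largest rainfall seen in the trailing window (rows are assumed to be hourly and sorted).
    recent_rain = out["rainfall"].rolling(rain_window, min_periods=1).max()
    out["dryness"] = (recent_rain == 0).astype(int)
    # Largest snowfall seen in the trailing window.
    recent_snow = out["snowfall"].rolling(snow_window, min_periods=1).max()
    out["snowing"] = (recent_snow > 0).astype(int)
    # Assumption: the "default" visibility is the maximum value observed in the data.
    out["lost_visibility"] = out["visibility"].max() - out["visibility"]
    return out


hourly = pd.DataFrame({
    "rainfall": [0.0, 1.5, 0.0, 0.0, 0.0],
    "snowfall": [0.0, 0.0, 2.0, 0.0, 0.0],
    "visibility": [2000, 1500, 2000, 800, 2000],
})
weather = add_weather_features(hourly)
```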

hour, season, month, day, week_day: converted to dummy variables

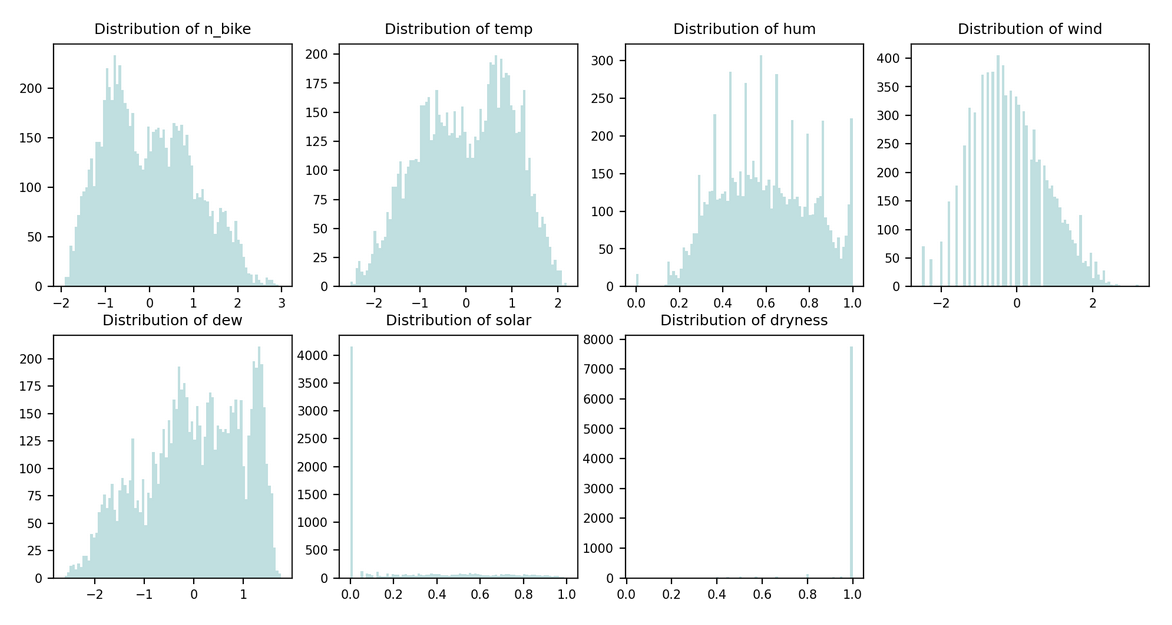

n_bike, temp, wind, dew: Standardization

hum, solar, dryness: Normalization
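One way to express this encoding and scaling step is with a scikit-learn `ColumnTransformer`, sketched below on a toy frame. It assumes that "Normalization" means min-max scaling to [0, 1] and uses only a subset of the categorical columns to stay short; the notebook may implement the step differently.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.preprocessing import MinMaxScaler, OneHotEncoder, StandardScaler

categorical = ["hour", "season"]          # subset of the dummy-encoded columns
standardized = ["temp", "wind", "dew"]    # rescaled to zero mean, unit variance
normalized = ["hum", "solar", "dryness"]  # rescaled to [0, 1] (min-max, an assumption)

preprocessor = ColumnTransformer(
    transformers=[
        ("dummies", OneHotEncoder(handle_unknown="ignore"), categorical),
        ("standardize", StandardScaler(), standardized),
        ("normalize", MinMaxScaler(), normalized),
    ],
    sparse_threshold=0,  # always return a dense array
)

# Toy data with invented values, only to exercise the transformer.
toy = pd.DataFrame({
    "hour": [0, 1, 2, 0],
    "season": ["Winter", "Winter", "Summer", "Summer"],
    "temp": [-2.0, 0.0, 25.0, 27.0],
    "wind": [1.0, 2.0, 3.0, 4.0],
    "dew": [-10.0, -8.0, 15.0, 17.0],
    "hum": [30.0, 40.0, 60.0, 80.0],
    "solar": [0.0, 0.5, 2.0, 1.0],
    "dryness": [1, 1, 0, 1],
})

# Columns come out in transformer order: dummies, standardized, normalized.
X_ready = preprocessor.fit_transform(toy)
```

The target `n_bike` is also standardized according to the list above; since it is not a feature, it would be scaled separately from this transformer.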

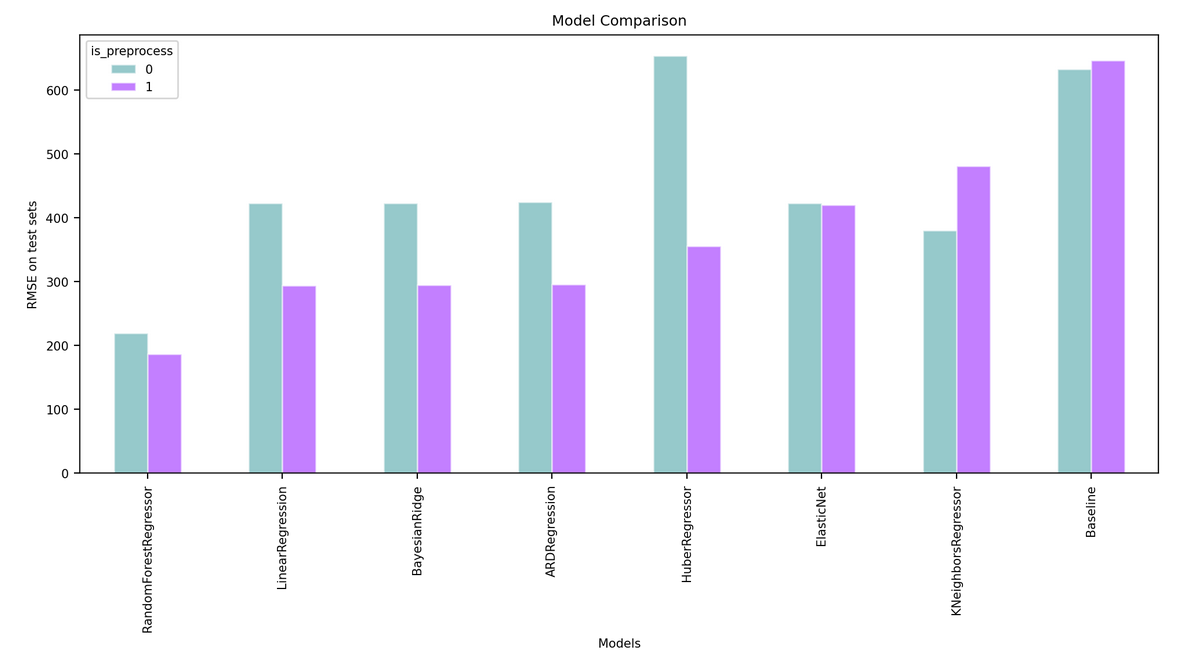

For each of the following models, I performed the same process:

- LinearRegression

- ElasticNet

- HuberRegressor

- BayesianRidge

- ARDRegression

- KNeighborsRegressor

- RandomForestRegressor

For each dataset in {transformed dataset, untransformed dataset} and for each model:

- Grid search on hyperparameters (if needed) ON THE TRAINING SET

- Get the best hyperparameters for the model

- Compute the RMSE on the prediction ON THE TEST SET
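The loop above can be sketched as follows. This is a minimal illustration on synthetic data with only three of the models and small, arbitrary grids; the grids, the split ratio and the number of folds are assumptions, not the values used in the notebook.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import ElasticNet, LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.neighbors import KNeighborsRegressor

# Synthetic stand-in for one of the two datasets.
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=0)

# Model name -> (estimator, hyperparameter grid); an empty grid means no search is needed.
candidates = {
    "LinearRegression": (LinearRegression(), {}),
    "ElasticNet": (ElasticNet(), {"alpha": [0.01, 0.1, 1.0], "l1_ratio": [0.2, 0.8]}),
    "KNeighborsRegressor": (KNeighborsRegressor(), {"n_neighbors": [3, 5, 9]}),
}

results = {}
for name, (model, grid) in candidates.items():
    if grid:
        # Grid search with cross-validation, on the training set only.
        search = GridSearchCV(model, grid, scoring="neg_root_mean_squared_error", cv=3)
        search.fit(X_train, y_train)
        best_model, best_params = search.best_estimator_, search.best_params_
    else:
        best_model, best_params = model.fit(X_train, y_train), {}
    # RMSE of the selected model, on the held-out test set.
    rmse = float(np.sqrt(mean_squared_error(y_test, best_model.predict(X_test))))
    results[name] = {"best_params": best_params, "rmse": rmse}
```

Keeping the test set out of the search means the reported RMSE is not biased by the hyperparameter selection.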

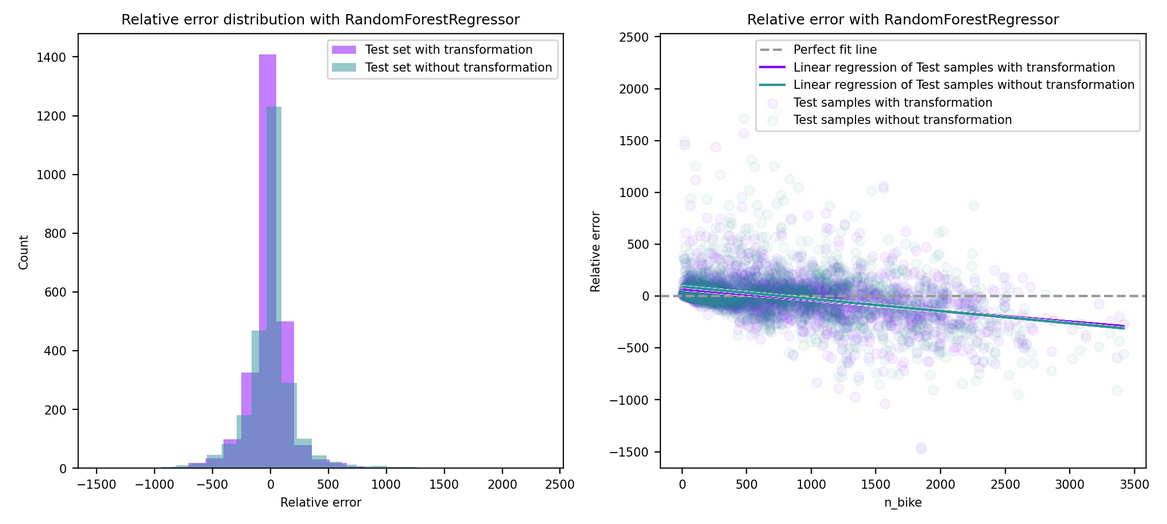

Model Name: RandomForestRegressor

Description: A random forest is a meta estimator that fits a number of decision tree regressors on various sub-samples of the dataset and uses averaging to improve the predictive accuracy and control over-fitting.

Prevents Overfitting: yes

Handles Outliers: yes

Handles several features: yes

Adaptive Regularization: no

Large Dataset: yes

Non linear: yes

Interpretability Score: 3 / 5

When to Use: Nonlinear data groups in buckets

When to Use Expanded:

Advantages:

- Can export tree structure to see which features the tree is splitting on

- Handles sparse and correlated data well

- Able to tune the model to help with overfitting problem

Disadvantages:

- Prediction accuracy on complex problems is usually inferior to gradient-boosted trees.

- A forest is less interpretable than a single decision tree.

Sklearn Package: ensemble

Required Args: None

Helpful Args: criterion and max_depth

Variations: gradient-boosted trees

Grid Search:

n_estimators: int, default=100

The number of trees in the forest.

criterion: str, default="mse"

The function to measure the quality of a split. Supported criteria are "mse" for the mean squared error, which is equal to variance reduction as feature selection criterion, and "mae" for the mean absolute error.

max_depth: int, default=None

The maximum depth of the tree. If None, then nodes are expanded until all leaves are pure or until all leaves contain less than min_samples_split samples.

max_features: str, int or float, default="auto"

The number of features to consider when looking for the best split.
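A grid search over these hyperparameters might be set up as in the sketch below, run here on synthetic data. The grid values are arbitrary examples rather than the ones used in the notebook, and `criterion` is left at its default because the accepted names depend on the installed scikit-learn version.

```python
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import GridSearchCV

# Synthetic stand-in for the training set.
X_demo, y_demo = make_regression(n_samples=150, n_features=6, noise=5.0, random_state=0)

# Example grid (values are illustrative, not the notebook's).
param_grid = {
    "n_estimators": [10, 30],
    "max_depth": [None, 5],
    "max_features": ["sqrt", 1.0],  # 1.0 means "consider all features"
}

rf_search = GridSearchCV(
    RandomForestRegressor(random_state=0),
    param_grid,
    scoring="neg_root_mean_squared_error",
    cv=3,
)
rf_search.fit(X_demo, y_demo)

# Best combination found by cross-validation on the training data.
best_rf_params = rf_search.best_params_
```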