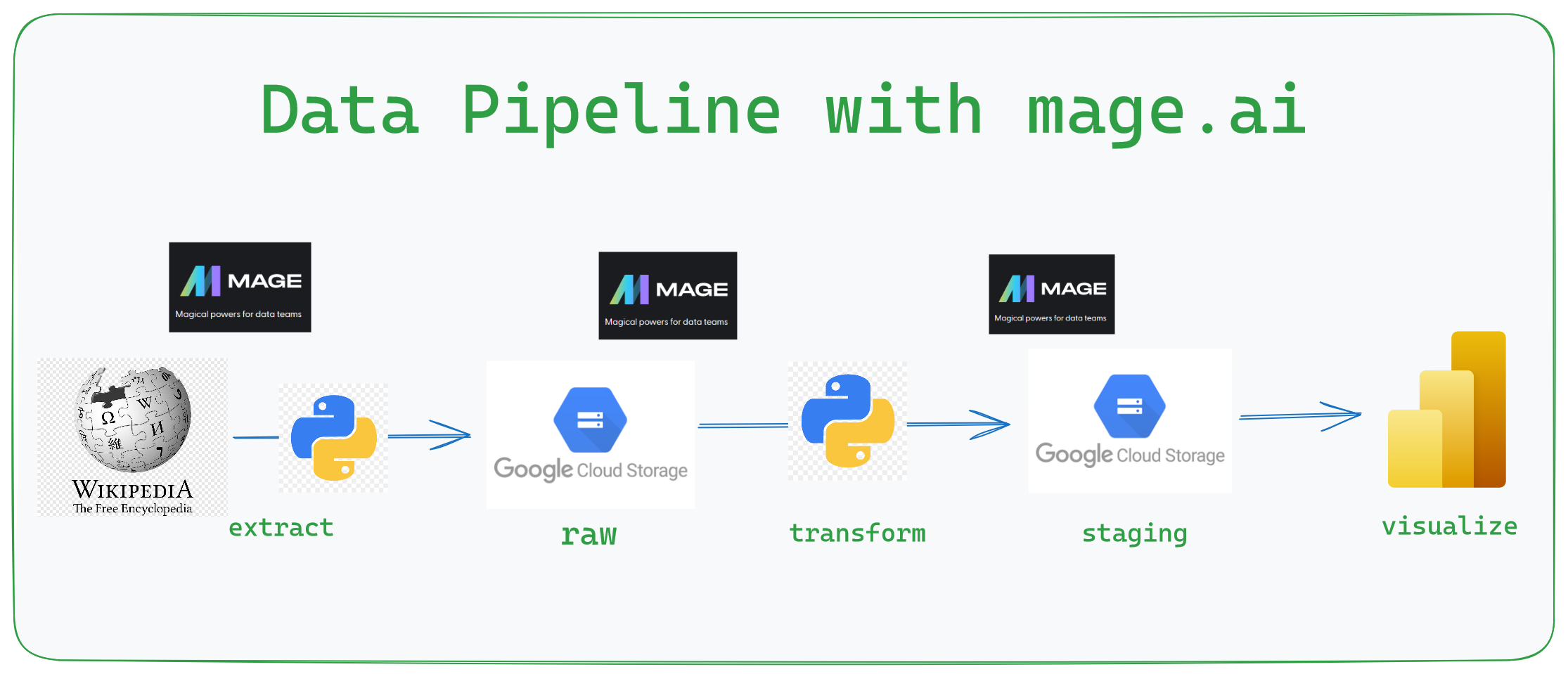

This data project shows how mage.ai can be used to automate a data pipeline.

The dataset is extracted from a website and covers the historical deaths of hip hop and rap artists around the world.

- Extract data from the source URL using Python

- Load raw data into Google Cloud Storage data lake

- Connect to data lake storage and perform transformation on raw data

- Load transformed data into staging area on Google Cloud Storage

- Connect Power BI tool to enable visualization and insights
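
The transformation step between the raw and staging areas can be pictured with a minimal sketch like the one below. It is not the project's actual transformer: the column names (`name`, `date_of_death`), the date format, and the helper names `clean_rows` and `to_csv` are all assumptions made for illustration, and the upload to Google Cloud Storage is left out.

```python
import csv
import io
from datetime import datetime


def clean_rows(raw_rows):
    """Strip whitespace, drop rows without a name, and normalize dates to ISO format.

    Assumes each raw row is a dict with hypothetical keys "name" and
    "date_of_death", and that dates look like "January 5, 2001".
    """
    cleaned = []
    for row in raw_rows:
        name = row.get("name", "").strip()
        if not name:
            continue  # skip rows with no artist name
        date_text = row.get("date_of_death", "").strip()
        try:
            iso_date = datetime.strptime(date_text, "%B %d, %Y").date().isoformat()
        except ValueError:
            iso_date = ""  # keep the row but leave unparseable dates empty
        cleaned.append({"name": name, "date_of_death": iso_date})
    return cleaned


def to_csv(rows):
    """Serialize cleaned rows to CSV text, ready to be written to the staging area."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=["name", "date_of_death"], lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

Keeping the cleaning logic in plain functions like these makes it easy to test outside Mage before wiring it into a transformer block.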

- Clone the GitHub repository: `git clone repo-url`

- cd into the etl_mage directory: `cd etl_mage`

- Locate the io_config_copy.yaml file and rename it to io_config.yaml

- Inside the io_config.yaml file, locate the GOOGLE_SERVICE_ACCOUNT_KEY section and fill in your Google service account credentials

- Alternatively, you can give a path to your service account key using the GOOGLE_SERVICE_ACC_KEY_FILEPATH option

- cd into the etl_mage directory

- Type `mage start` in the terminal to start the development environment
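
Before starting Mage, it can help to sanity-check the service account key you plan to reference in io_config.yaml. The sketch below is an optional helper, not part of the repository: the function name `missing_key_fields` is hypothetical, and it only checks for a few fields that a Google service account key JSON file normally contains.

```python
import json

# Fields normally present in a Google service account key file.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def missing_key_fields(key_json_text):
    """Return a list of problems found in a service account key JSON document."""
    data = json.loads(key_json_text)
    # Report every required field that is absent or empty.
    problems = [field for field in REQUIRED_FIELDS if not data.get(field)]
    # A key for another credential type will not work as a service account key.
    if data.get("type") and data["type"] != "service_account":
        problems.append("type (expected 'service_account')")
    return problems
```

To use it, read the key file (for example `open("path/to/key.json").read()`, where the path is a placeholder) and pass the text in; an empty list means the basic fields are present.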

- Code refactoring and optimization

- Data visualization

- Setting up a coding environment using Google Cloud Compute Engine

- SSH into the Compute Engine instance using VS Code

- Setting up Google Cloud Service account key

- Setting up a mage.ai instance and developing its data pipeline

- Data extraction from a web source using Python web scraping tools (BeautifulSoup, requests)
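
To illustrate the extraction idea, here is a small sketch that turns an HTML table into a list of records. It deliberately uses the standard library's `html.parser` as a dependency-free stand-in for BeautifulSoup, so it is not the project's scraper. It assumes the source page holds a single, well-formed table whose first row is the header; `TableParser` and `parse_table` are hypothetical names.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect every <tr> in the page as a list of cell strings."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None


def parse_table(html):
    """Return one dict per body row, keyed by the header row's cell text."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return []
    header, *body = parser.rows
    return [dict(zip(header, cells)) for cells in body]
```

In a real run the HTML would first be downloaded, for instance with `requests.get(url).text`, and then passed to `parse_table`. With BeautifulSoup the same rows could be collected through `soup.find_all("tr")`.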