This paper has been accepted to ECCV 2022.

By Ziyue Feng, Liang Yang, Longlong Jing, Haiyan Wang, Yingli Tian, and Bing Li.

arXiv: link | YouTube: link | Project site: link

You can install the dependencies with:

```bash
conda create -n dynamicdepth python=3.6.6
conda activate dynamicdepth
conda install pytorch torchvision torchaudio cudatoolkit=11.1 -c pytorch -c conda-forge
pip install tensorboardX==1.4
conda install opencv=3.3.1 # only needed for evaluation
pip install open3d
pip install wandb
pip install scikit-image
python -m pip install cityscapesscripts
```

We ran our experiments with PyTorch 1.8.0, CUDA 11.1, Python 3.6.6 and Ubuntu 18.04.
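A quick way to confirm the environment is a small script that tries to locate each package installed above. This is a sketch, not part of the repository: the import names (for example cv2 for opencv and skimage for scikit-image) are assumptions, so adjust them if your install differs. It only checks that the modules can be found, not their versions.

```python
"""Check that the packages installed above can be found (hypothetical helper).

Assumption: the top-level import names below are the usual ones for these
conda/pip packages; edit REQUIRED_IMPORTS if your setup differs.
"""
import importlib.util
import sys

# Package name as installed above -> top-level import name (assumed).
REQUIRED_IMPORTS = {
    "pytorch": "torch",
    "torchvision": "torchvision",
    "torchaudio": "torchaudio",
    "tensorboardX": "tensorboardX",
    "opencv": "cv2",
    "open3d": "open3d",
    "wandb": "wandb",
    "scikit-image": "skimage",
    "cityscapesscripts": "cityscapesscripts",
}


def missing_modules(import_names):
    """Return the import names that cannot be located on the current sys.path."""
    return [name for name in import_names
            if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_IMPORTS.values())
    print("Python", sys.version.split()[0])
    print("Missing:", ", ".join(missing) if missing else "none")
```

Run it inside the activated dynamicdepth environment. find_spec locates a module without executing it, so the check is fast even for heavy packages such as torch.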

Clone the repository and create a folder named CS_RAW for the raw Cityscapes data:

```bash
git clone https://github.com/AutoAILab/DynamicDepth.git
cd DynamicDepth
cd data
mkdir CS_RAW
```

From the official Cityscapes website, download the following packages into the CS_RAW folder: 1) leftImg8bit_sequence_trainvaltest.zip and 2) camera_trainvaltest.zip.
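Before unzipping, it can help to confirm that both archives arrived intact, since a truncated download of a large archive often fails only later, during extraction. The hypothetical helper below (not part of the repository) assumes it is run from the DynamicDepth repository root. It uses zipfile.is_zipfile, which only looks for a valid zip end-of-archive record; it does not verify every member.

```python
"""Check the two Cityscapes archives in data/CS_RAW (hypothetical helper).

Assumption: run from the DynamicDepth repository root.
"""
import os
import zipfile

RAW_ARCHIVES = (
    "leftImg8bit_sequence_trainvaltest.zip",
    "camera_trainvaltest.zip",
)


def check_archives(cs_raw_dir):
    """Map each required archive to 'ok', 'missing', or 'not a zip'."""
    status = {}
    for name in RAW_ARCHIVES:
        path = os.path.join(cs_raw_dir, name)
        if not os.path.isfile(path):
            status[name] = "missing"
        elif not zipfile.is_zipfile(path):
            # No readable end-of-archive record: likely truncated or wrong file.
            status[name] = "not a zip"
        else:
            status[name] = "ok"
    return status


if __name__ == "__main__":
    for name, state in check_archives(os.path.join("data", "CS_RAW")).items():
        print(name, state)
```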

Preprocess the Cityscapes dataset with the prepare_train_data.py script (from SfMLearner) using the following commands:

```bash
cd CS_RAW
unzip leftImg8bit_sequence_trainvaltest.zip
unzip camera_trainvaltest.zip
cd ..
python prepare_train_data.py \
    --img_height 512 \
    --img_width 1024 \
    --dataset_dir CS_RAW \
    --dataset_name cityscapes \
    --dump_root CS \
    --seq_length 3 \
    --num_threads 8
```

Download the Cityscapes depth ground truth (provided by ManyDepth) for evaluation:

```bash
cd ..
cd splits/cityscapes/
wget https://storage.googleapis.com/niantic-lon-static/research/manydepth/gt_depths_cityscapes.zip
unzip gt_depths_cityscapes.zip
cd ../..
```

(Recommended) Download the ManyDepth pretrained model from here and put it in the log folder. Training from these weights converges much faster.

```bash
mkdir log
cd log
# Download CityScapes_MR.zip to here
unzip CityScapes_MR.zip
cd ..
```

Download the dynamic object masks for the Cityscapes dataset (from Google Drive or OneDrive) and extract the train_mask and val_mask folders to DynamicDepth/data/CS/ (train_mask.zip is 232 MB and val_mask.zip is 5 MB).
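After these steps the repository should contain the folders created above. The hypothetical check below (not part of the repository) lists which of them are missing; it assumes you run it from the DynamicDepth root and only tests that the directories exist, not what is inside them. The log folder only matters if you use the recommended ManyDepth weights.

```python
"""List expected data/log folders that do not exist yet (hypothetical helper).

Assumption: run from the DynamicDepth repository root.
"""
import os

EXPECTED_DIRS = (
    os.path.join("data", "CS"),                # --dump_root of prepare_train_data.py
    os.path.join("data", "CS", "train_mask"),  # extracted from train_mask.zip
    os.path.join("data", "CS", "val_mask"),    # extracted from val_mask.zip
    "log",                                     # ManyDepth weights (optional)
)


def missing_dirs(repo_root, expected=EXPECTED_DIRS):
    """Return the relative paths in `expected` that are not directories."""
    return [rel for rel in expected
            if not os.path.isdir(os.path.join(repo_root, rel))]


if __name__ == "__main__":
    missing = missing_dirs(".")
    print("Missing folders:", ", ".join(missing) if missing else "none")
```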

By default, models and log event files are saved to log/dynamicdepth/models.

```bash
python -m dynamicdepth.train # the configs are defined in options.py
```

The val() function in trainer.py evaluates the model on the Cityscapes test set.

You can download our pretrained model from the following link:

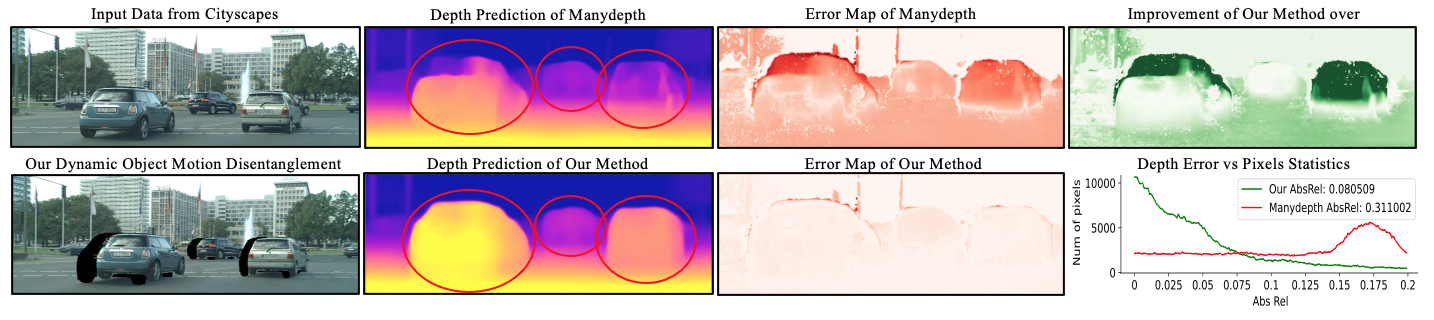

| CNN Backbone | Input size | Cityscapes AbsRel | Link |
|---|---|---|---|
| ResNet 18 | 640 x 192 | 0.104 | Download 🔗 |
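For reference, AbsRel is the mean absolute relative depth error, AbsRel = (1/N) Σ_i |d_i − d_i*| / d_i*, taken over the N pixels with valid ground truth d_i* (lower is better). The sketch below illustrates that standard definition in plain Python; it is not the repository's evaluation code, which may additionally crop the image, restrict depths to a range or scale predictions before computing the metric. The function name abs_rel is hypothetical.

```python
"""Absolute relative error (AbsRel) under its standard definition (a sketch)."""


def abs_rel(pred, gt):
    """Mean of |pred - gt| / gt over pixels whose ground-truth depth is positive.

    pred, gt: equal-length sequences of depths in the same units.
    Pixels with gt <= 0 are treated as invalid (no ground truth) and skipped.
    """
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    if not pairs:
        raise ValueError("no valid ground-truth depths")
    return sum(abs(p - g) / g for p, g in pairs) / len(pairs)


if __name__ == "__main__":
    # The third pixel has no ground truth, so only two pixels are averaged.
    print(abs_rel([9.0, 22.0, 5.0], [10.0, 20.0, 0.0]))  # prints 0.1
```

Both valid pixels are off by 10% of their true depth, so the mean relative error is 0.1.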

@article{feng2022disentangling,

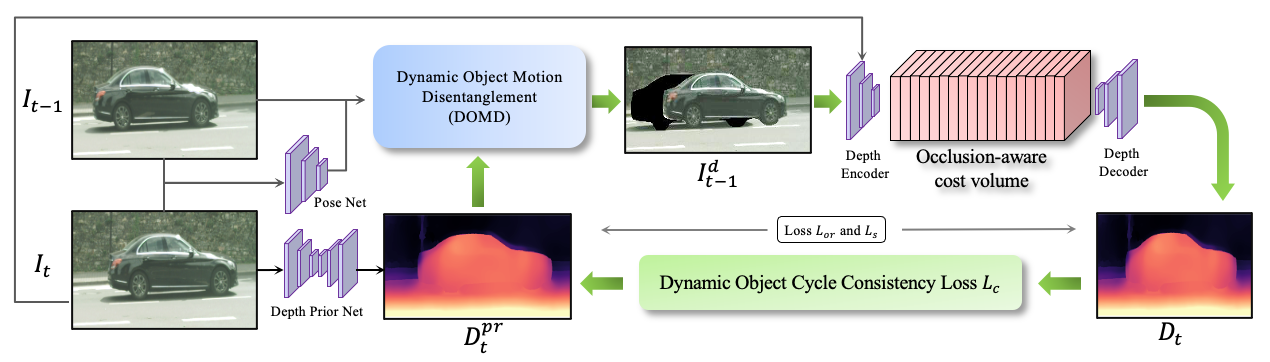

title={Disentangling Object Motion and Occlusion for Unsupervised Multi-frame Monocular Depth},

author={Feng, Ziyue and Yang, Liang and Jing, Longlong and Wang, Haiyan and Tian, YingLi and Li, Bing},

journal={arXiv preprint arXiv:2203.15174},

year={2022}

}

InstaDM: https://github.com/SeokjuLee/Insta-DM

ManyDepth: https://github.com/nianticlabs/manydepth

If you have any questions about this paper or the implementation, feel free to open an issue or email me at zfeng@clemson.edu.