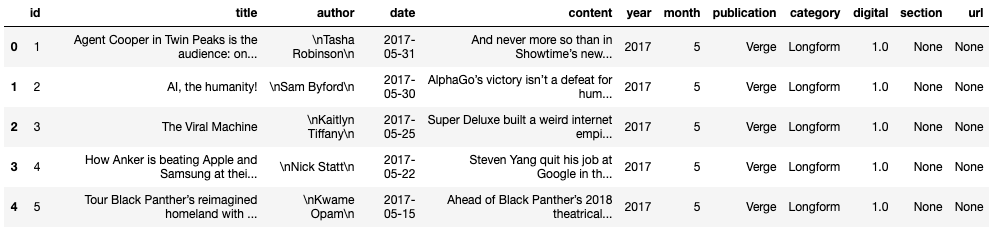

- Obtain news articles to analyze text data

- Use the Natural Language Toolkit (NLTK) to preprocess text

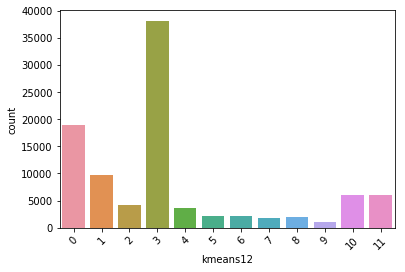

- Train K-Means Clustering algorithm to segment groups of news articles based on similarities in text content

- Train supervised classification algorithms to predict which cluster a new article would fall closest to

- Compute pairwise cosine similarity between the sentences of a user-supplied article to produce an extractive summary that gives the reader a quick view of the article's main points

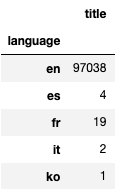

A look at the languages of each article

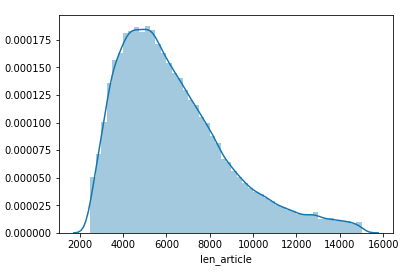

Distribution of article length (in characters)

After cutting out extremely large and small articles along with non-English articles, I ended up with a dataset of just over 97k articles, still a pretty good size to train with.
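As a rough illustration of this kind of filtering, here is a minimal pandas sketch. The column names `content` and `language`, the `filter_articles` helper, and the length thresholds are all placeholders chosen for the example, not the values used in this project.

```python
import pandas as pd

def filter_articles(df, min_chars=500, max_chars=20_000, language="en"):
    """Keep articles in one language whose character length is within bounds.

    The thresholds and column names are hypothetical placeholders.
    """
    lengths = df["content"].str.len()
    # Series.between is inclusive on both ends by default.
    mask = (df["language"] == language) & lengths.between(min_chars, max_chars)
    return df.loc[mask].reset_index(drop=True)

# Tiny made-up frame: one too short, one kept, one too long, one non-English.
articles = pd.DataFrame({
    "content": ["short", "x" * 1000, "y" * 50_000, "z" * 1000],
    "language": ["en", "en", "en", "de"],
})
filtered = filter_articles(articles)
print(len(filtered))
```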

Preprocessing

In order to preprocess the text data for K-Means, a number of initial steps have to be taken:

- Tokenize words

- Lemmatize words

- Stem words

- Concatenate words of each record into a string and convert the entire pandas series into a list of strings

- Perform Count Vectorization and remove stop words

- Transform list of strings with TF-IDF transformation

A preview of the top words in each cluster is below:

- Cluster 0: state, new, year, president, people, nation, one, unit, country, govern

- Cluster 1: Trump, president, Donald, would, white, house, campaign, American, Washington, administration, nation

- Cluster 2: Trump, republican, party, Donald, democrat, presidential, senate, candidate, GOP, voter, nominee

- Cluster 3: one, year, new, first, world, game, live, week, people, make, get, say, work, show

- Cluster 4: Clinton, Hillary, Trump, democrat, campaign, Sanders, presidential, election, emails, Bernie, support

- Cluster 5: Trump, Russia, investigation, intelligence, Comey, election, director, Putin, Flynn

- Cluster 6: school, student, university, education, year, teacher, class, week, graduate

- Cluster 7: court, supreme, justice, judge, rule, federal, senate, law, appeal, Obama, legal

- Cluster 8: republican, care, health, house, bill, Trump, act, senate, Obamacare, president, insurance, reform

- Cluster 9: please, story, great, need, write, continue, step, block, display, extend, part, idea

- Cluster 10: company, year, percent, U.S., market, billion, bank, price, rate, stock, investor, share, report, oil

- Cluster 11: police, state, attack, kill, North Korea, Islam, Syria, president, military, force

- Clusters 0, 1 and 2 seem to be mainly political, and clusters 1 and 2 lean towards articles about the Republican election campaign. Cluster 0 seems to be quite broad politically.

- Cluster 3 looks extremely broad as well, and it is also the largest cluster by far. This could be because the dataset contains a large number of articles covering a wide range of topics. After testing my classification model, it looks like most sports articles end up being classified as cluster 3.

- Cluster 4 is quite strong and is mainly based on articles about the Democratic Party and Hillary Clinton

- Cluster 5 is specifically related to articles written about Russian meddling in the 2016 election

- Cluster 6 shows a strong relation to articles written about schooling

- Cluster 7 is highly related to the federal court system

- Cluster 8 looks to be primarily about political issues such as health care, tax reform, etc.

- Cluster 9 is another cluster with a wide range of topics that don't seem to generalize to a small number of ideas

- Cluster 10 is clearly made up of articles regarding financial markets

- Cluster 11 is, to me, the most impressive: this cluster seems to be built around police, military and foreign conflicts

Further Clustering:

After noticing the size and proportion of cluster #3, I decided to re-cluster the rows within it. As it turns out, each cluster created from cluster #3 was solely devoted to Donald Trump and the election. Because of this, I decided not to include the re-clustering in my model and left cluster #3 as it is. The top words for cluster #3 were misleading, although digging deeper gave me an answer: this cluster remains broad, but it appears to be politically centered as well.

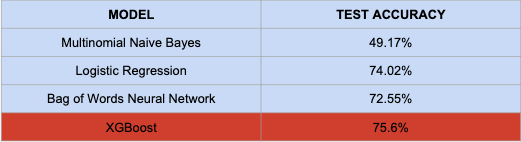

Using the clusters created by the K-Means algorithm as labels, I trained multiple models to classify which cluster a new article would be put into. The overall top performer was XGBoost, which gave a test accuracy of 75.6%. Somewhat surprisingly, the bag-of-words neural network that I trained was outperformed by both logistic regression and XGBoost.
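The idea of treating K-Means assignments as labels for a supervised model can be sketched as below. This toy example uses logistic regression as a stand-in for the models compared above (XGBoost is not shown). The corpus and variable names are made up, and a real run would hold out a test set to measure accuracy.

```python
from sklearn.cluster import KMeans
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression

# Toy training corpus with two obvious topics (made up for this example).
train_docs = [
    "court judge ruling appeal justice",
    "supreme court justice judge law",
    "federal judge court ruling law",
    "stock market price investor bank",
    "bank stock share market oil price",
    "investor market share price rate",
]

vectorizer = TfidfVectorizer(stop_words="english")
X_train = vectorizer.fit_transform(train_docs)

# Step 1: unsupervised cluster assignments become the class labels.
cluster_labels = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(X_train)

# Step 2: a supervised classifier learns to reproduce those assignments.
clf = LogisticRegression(max_iter=1000).fit(X_train, cluster_labels)

# Step 3: a new article goes through the same vectorizer before prediction.
new_article = "the judge issued a ruling in federal court"
predicted = clf.predict(vectorizer.transform([new_article]))[0]
print(predicted)
```

Reusing the fitted vectorizer for new text matters here: the classifier only understands the vocabulary and weighting it was trained on.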

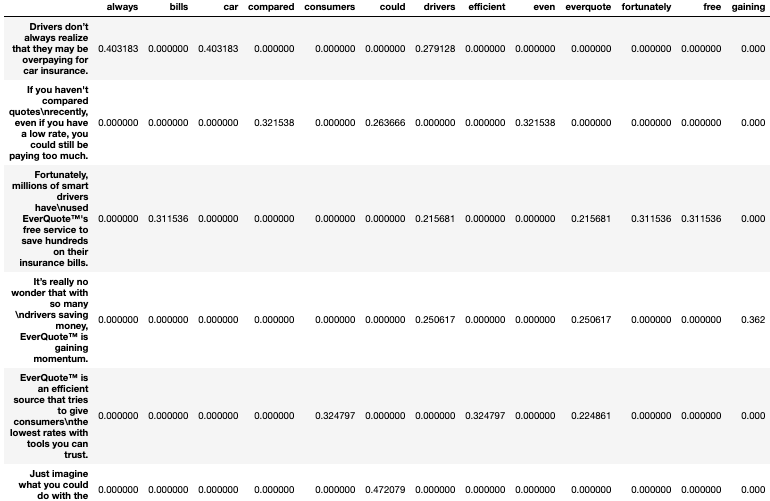

TF-IDF (Term Frequency - Inverse Document Frequency) weights each word by how often it appears in one piece of text relative to how common it is across the whole collection. Words with higher weights (more unique) often carry more importance or meaning. To use this for summarization, I treat each sentence of an article as its own document: I built a function that takes in an article's text, tokenizes it into sentences (dataframe rows), creates a vocabulary without stopwords for the article (dataframe columns) and finally gives a TF-IDF weight to each word in the vocabulary for each sentence. A preview is given below for a short text snippet.

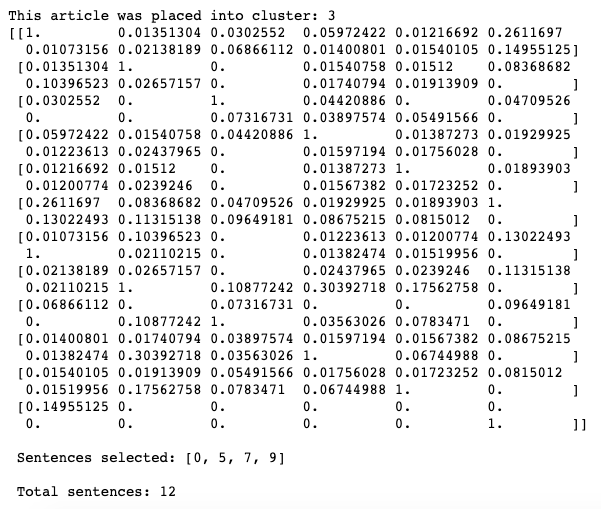

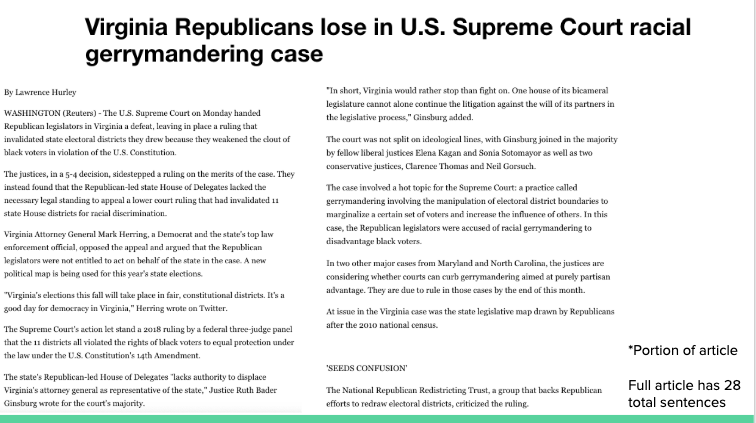

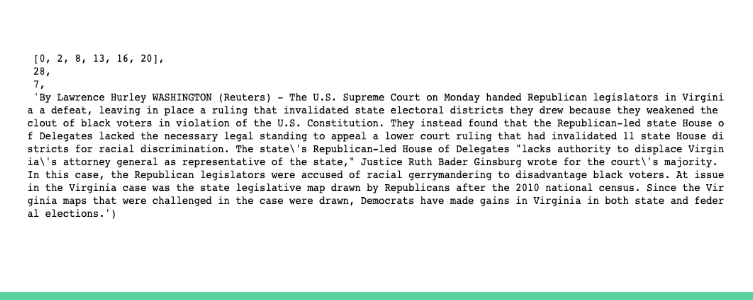

Using the TF-IDF weights for each sentence, I convert each row into a vector and store the vectors in a matrix. Next, I find the cosine similarity of each pair of TF-IDF vectorized sentences. An example of this is shown below for a news article:

Finally, after finding the cosine similarity for all vectorized pairs, I average the similarity scores of each vector and return the indexes of the vectors with the highest averages. These indexes are then used to pull the sentences out of the original text for the summarization. To see the full function, head over to the new_user_functions notebook.

For simplicity, the number of sentences returned for the summarization is equal to the square root (rounded up to the nearest integer) of the number of sentences in the article.
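The selection step can be sketched with NumPy as below. This reflects one reading of the description above: average each sentence's cosine similarity to every sentence, then keep the top ceil(sqrt(n)). The helper name `summary_indexes`, the decision to include each sentence's similarity with itself in the average, and the toy matrix are all assumptions. The full function in the notebook may differ.

```python
import math

import numpy as np

def summary_indexes(tfidf_matrix):
    """Pick sentence indexes with the highest average cosine similarity."""
    X = np.asarray(tfidf_matrix, dtype=float)
    norms = np.linalg.norm(X, axis=1, keepdims=True)
    norms[norms == 0] = 1.0              # guard against all-zero sentence vectors
    unit = X / norms
    similarity = unit @ unit.T           # pairwise cosine similarity matrix
    scores = similarity.mean(axis=1)     # average similarity per sentence
    k = math.ceil(math.sqrt(len(X)))     # square root of n, rounded up
    top = np.argsort(scores)[::-1][:k]
    return sorted(int(i) for i in top)   # original sentence order

# Toy weights: 5 sentences x 4 vocabulary terms (made up for this example).
example = [
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]
selected = summary_indexes(example)
print(selected)
```

Under this rule, a 28-sentence article yields a 6-sentence summary, since the square root of 28 is about 5.29.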

The output shows a list of the sentence indexes that the summarization tool selected for extraction from the original text. The number 28 is the total number of sentences in the original article. The number 7 indicates that this article was placed into cluster #7 (court articles). Finally, the extractive summary that was produced seems to capture the article quite well.

- Adjust number of clusters to find "optimal" K-Means

- Add more articles from non-political topics to the dataset to adjust class imbalance

- Use summarizations to find cosine-similarity with other articles in the dataset

- Add functionality to article scraper to support more news websites