Harbeth is a tiny set of utils and extensions over Apple's Metal framework, dedicated to making your Swift GPU code much cleaner and letting you prototype your pipelines faster.


🟣 At the moment, the most important features of the Metal module can be summarized as follows:

- Support for iOS and macOS.
- Support for operator-chained filters.
- Support for adding filters to `UIImage`, `CIImage`, `CGImage`, `CMSampleBuffer` and `CVPixelBuffer`.
- Support for quickly designing filters.
- Support for merging multiple filter effects.
- Support for fast expansion of output sources.
- Support for camera capture effects.
- Support for adding filter effects to video.
- Support for matrix convolution.
- Support for `MetalPerformanceShaders` filters.
- Compatibility with `CoreImage`.
- The filters are roughly divided into the following modules:
  - Blend: image blend filters.
  - Blur: blur effect filters.
  - Pixel: basic pixel processing of images.
  - Effect: effect processing.
  - Lookup: lookup table filters.
  - Matrix: matrix convolution filters.
  - Shape: filters related to image shape and size.
  - Visual: visual dynamic effect filters.
  - MPS: `MetalPerformanceShaders` filters.

- Original code:

```swift
lazy var ImageView: UIImageView = {
    let imageView = UIImageView(image: originImage)
    //imageView.contentMode = .scaleAspectFit
    imageView.translatesAutoresizingMaskIntoConstraints = false
    imageView.layer.borderColor = R.color("background2")?.cgColor
    imageView.layer.borderWidth = 0.5
    return imageView
}()

ImageView.image = originImage
```

- 🫡 Add filters with zero intrusion into your existing code:

```swift
let filter1 = C7ColorMatrix4x4(matrix: Matrix4x4.Color.sepia)
let filter2 = C7Granularity(grain: 0.8)
let filter3 = C7SoulOut(soul: 0.7)

// The filters have different types, so the array needs an explicit element type.
let filters: [C7FilterProtocol] = [filter1, filter2, filter3]

// Use:
let dest = BoxxIO.init(element: originImage, filters: filters)
ImageView.image = try? dest.output()

// OR use:
ImageView.image = try? originImage.makeGroup(filters: filters)

// OR use the operator:
ImageView.image = originImage -->>> filters
```

- 📸 Generate filtered pictures from camera capture:

```swift
// Add an edge detection filter:
let filter = C7EdgeGlow(lineColor: .red)

// Add a particle filter:
let filter2 = C7Granularity(grain: 0.8)

// Create the camera collector:
let camera = C7CollectorCamera.init(delegate: self)
camera.captureSession.sessionPreset = AVCaptureSession.Preset.hd1280x720
camera.filters = [filter, filter2]

extension CameraViewController: C7CollectorImageDelegate {
    func preview(_ collector: C7Collector, fliter image: C7Image) {
        // Update the UI on the main thread.
        DispatchQueue.main.async {
            self.originImageView.image = image
        }
    }
}
```

- Filters can be applied just as easily to local or network video.

- 🙄 For details, see `PlayerViewController`.
- You can also extend this by using `BoxxIO` to filter the collected `CVPixelBuffer`.

```swift
lazy var video: C7CollectorVideo = {
    // "Link" is a placeholder for a real video URL.
    let videoURL = URL.init(string: "Link")!
    let asset = AVURLAsset.init(url: videoURL)
    let playerItem = AVPlayerItem.init(asset: asset)
    let player = AVPlayer.init(playerItem: playerItem)
    let video = C7CollectorVideo.init(player: player, delegate: self)
    video.filters = [C7ColorMatrix4x4(matrix: Matrix4x4.Color.sepia)]
    return video
}()

self.video.play()

extension PlayerViewController: C7CollectorImageDelegate {
    func preview(_ collector: C7Collector, fliter image: C7Image) {
        self.originImageView.image = image
        // Simulated dynamic effect.
        if let filter = self.tuple?.callback?(self.nextTime) {
            self.video.filters = [filter]
        }
    }
}
```

- Operator chain processing:

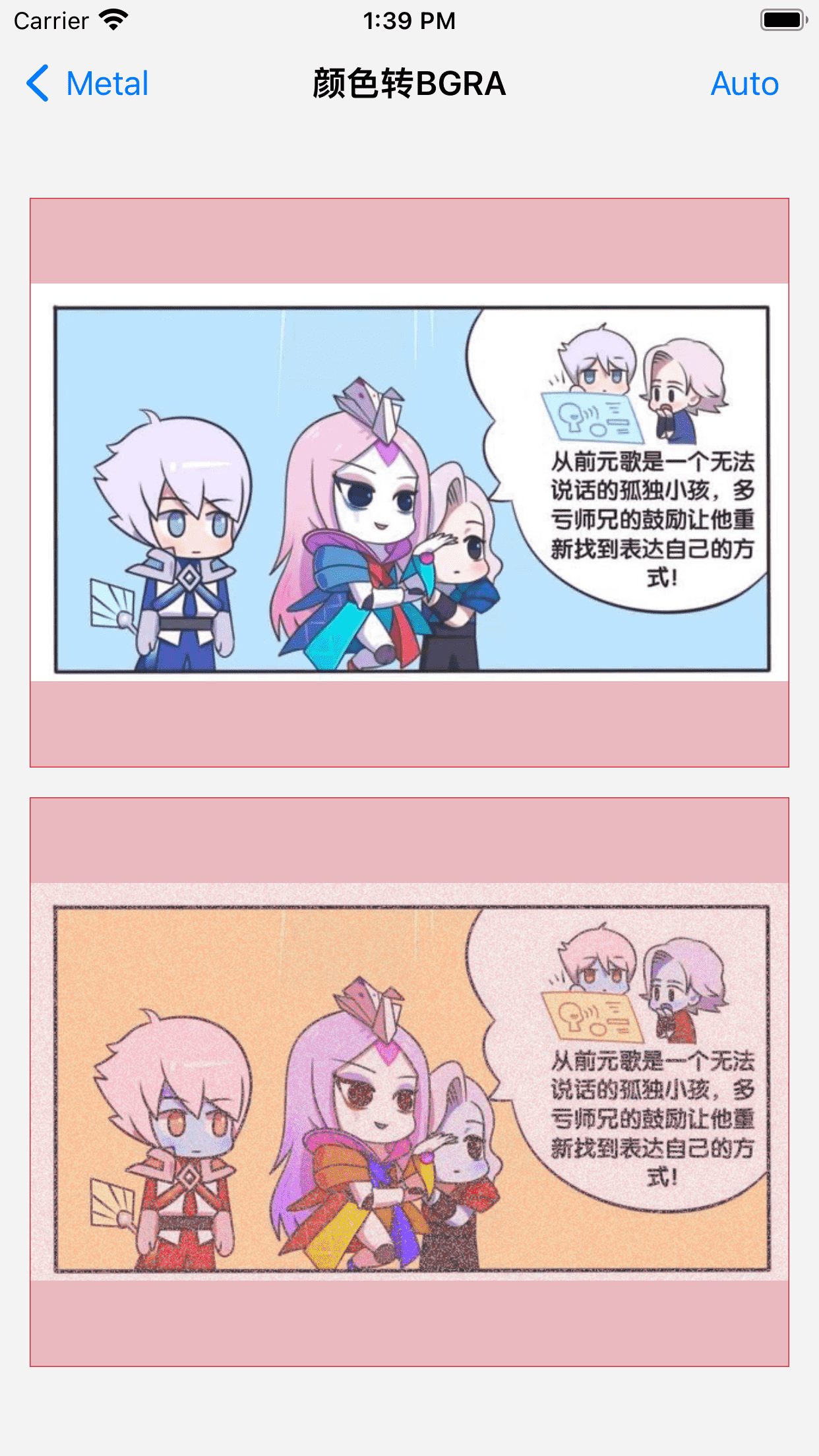

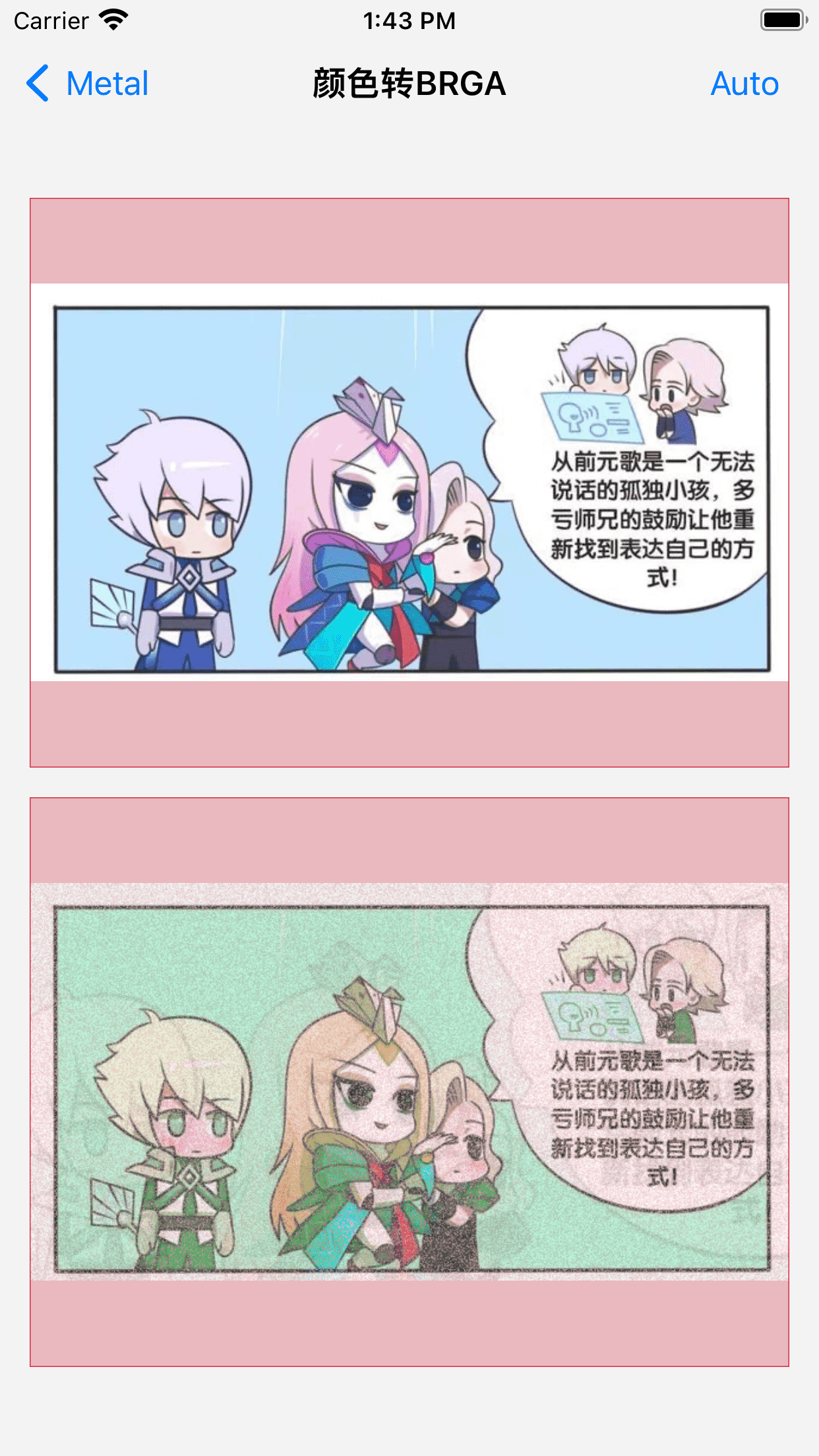

```swift
/// 1. Convert to BGRA
let filter1 = C7ColorConvert(with: .bgra)

/// 2. Adjust the granularity
let filter2 = C7Granularity(grain: 0.8)

/// 3. Adjust the white balance
let filter3 = C7WhiteBalance(temperature: 5555)

/// 4. Adjust the highlights and shadows
let filter4 = C7HighlightShadow(shadows: 0.4, highlights: 0.5)

/// 5. Combine the operations
filterImageView.image = originImage ->> filter1 ->> filter2 ->> filter3 ->> filter4
```

- Batch processing:

```swift
/// 1. Convert to RBGA
let filter1 = C7ColorConvert(with: .rbga)

/// 2. Adjust the granularity
let filter2 = C7Granularity(grain: 0.8)

/// 3. Soul effect
let filter3 = C7SoulOut(soul: 0.7)

/// 4. Combine the operations
let group: [C7FilterProtocol] = [filter1, filter2, filter3]

/// 5. Get the result
filterImageView.image = try? originImage.makeGroup(filters: group)
```

Both methods can apply multiple filters; use whichever you prefer. ✌️
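Both styles boil down to the same idea: apply the filters one after another, in order. The sketch below illustrates that with a hypothetical `ToyFilter` protocol over plain `[Float]` arrays instead of Harbeth's real image and filter types; the `->>` declaration and the `makeGroup` helper shown here are illustrative assumptions, not Harbeth's implementation.

```swift
// Hypothetical stand-in for an image: grayscale values in [0, 1].
typealias ToyImage = [Float]

// Hypothetical filter protocol: one image in, one image out.
protocol ToyFilter {
    func apply(_ image: ToyImage) -> ToyImage
}

// Adds a constant to every value and clamps the result to [0, 1].
struct Brightness: ToyFilter {
    var delta: Float
    func apply(_ image: ToyImage) -> ToyImage {
        image.map { min(max($0 + delta, 0), 1) }
    }
}

// Replaces every value v with 1 - v.
struct Invert: ToyFilter {
    func apply(_ image: ToyImage) -> ToyImage {
        image.map { 1 - $0 }
    }
}

// Left-associative, so `img ->> f1 ->> f2` is evaluated as `(img ->> f1) ->> f2`.
precedencegroup ToyChainPrecedence {
    associativity: left
    higherThan: AssignmentPrecedence
}
infix operator ->> : ToyChainPrecedence

// Chain form: apply a single filter to an image.
func ->> (image: ToyImage, filter: any ToyFilter) -> ToyImage {
    filter.apply(image)
}

// Batch form: fold the filters over the image in array order.
func makeGroup(_ image: ToyImage, filters: [any ToyFilter]) -> ToyImage {
    filters.reduce(image) { partial, filter in filter.apply(partial) }
}

let image: ToyImage = [0.0, 0.25, 0.5, 1.0]
let chained = image ->> Brightness(delta: 0.25) ->> Invert()
let grouped = makeGroup(image, filters: [Brightness(delta: 0.25), Invert()])
print(chained)             // [0.75, 0.5, 0.25, 0.0]
print(chained == grouped)  // true
```

Making the operator left-associative is what lets a chain read left to right in the same order as the array passed to the batch form.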

- For example, here is how to design a soul filter. 🎷
- Implement `C7FilterProtocol`:

```swift
public struct C7SoulOut: C7FilterProtocol {

    public static let range: ParameterRange<Float, Self> = .init(min: 0.0, max: 1.0, value: 0.5)

    /// The adjusted soul, from 0.0 to 1.0, with a default of 0.5
    @ZeroOneRange public var soul: Float = range.value
    public var maxScale: Float = 1.5
    public var maxAlpha: Float = 0.5

    // Name of the Metal compute kernel this filter runs.
    public var modifier: Modifier {
        return .compute(kernel: "C7SoulOut")
    }

    // Parameter factors, in the same order as buffer(0), buffer(1), buffer(2) in the kernel.
    public var factors: [Float] {
        return [soul, maxScale, maxAlpha]
    }

    public init(soul: Float = range.value, maxScale: Float = 1.5, maxAlpha: Float = 0.5) {
        self.soul = soul
        self.maxScale = maxScale
        self.maxAlpha = maxAlpha
    }
}
```

- Configure any additional textures the filter requires.
- Configure the parameter factors passed to the kernel; only the `Float` type is supported. This filter requires three parameters:
  - `soul`: the adjusted soul, from 0.0 to 1.0, with a default of 0.5.
  - `maxScale`: the maximum soul scale.
  - `maxAlpha`: the transparency of the maximum soul.

- Write a kernel function shader based on parallel computing:

```metal
kernel void C7SoulOut(texture2d<half, access::write> outputTexture [[texture(0)]],
                      texture2d<half, access::sample> inputTexture [[texture(1)]],
                      constant float *soulPointer [[buffer(0)]],
                      constant float *maxScalePointer [[buffer(1)]],
                      constant float *maxAlphaPointer [[buffer(2)]],
                      uint2 grid [[thread_position_in_grid]]) {
    constexpr sampler quadSampler(mag_filter::linear, min_filter::linear);
    const half4 inColor = inputTexture.read(grid);

    // Normalized coordinates of the current pixel.
    const float x = float(grid.x) / outputTexture.get_width();
    const float y = float(grid.y) / outputTexture.get_height();

    const half soul = half(*soulPointer);
    const half maxScale = half(*maxScalePointer);
    const half maxAlpha = half(*maxAlphaPointer);

    // The enlarged copy fades out and grows as soul goes from 0 to 1.
    const half alpha = maxAlpha * (1.0h - soul);
    const half scale = 1.0h + (maxScale - 1.0h) * soul;

    // Sample the input zoomed around the center to build the "soul" mask.
    const half soulX = 0.5h + (x - 0.5h) / scale;
    const half soulY = 0.5h + (y - 0.5h) / scale;
    const half4 soulMask = inputTexture.sample(quadSampler, float2(soulX, soulY));

    // Blend the original color with the mask.
    const half4 outColor = inColor * (1.0h - alpha) + soulMask * alpha;
    outputTexture.write(outColor, grid);
}
```

- Simple to use: since the design is based on a parallel computing pipeline, images can be generated directly.

```swift
/// Add a soul-out-of-body filter.
let filter = C7SoulOut(soul: 0.5, maxScale: 2.0)

/// Display directly in ImageView.
ImageView.image = originImage ->> filter
```

- The animation above is just as simple: add a timer and change the value of `soul` on each tick.
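To see why changing `soul` alone is enough to animate the effect, the kernel's per-pixel math can be mirrored on the CPU. This is a minimal sketch that uses `Float` instead of `half`; the names `SoulOutMath` and `soulValue(elapsed:period:)` are hypothetical helpers for illustration and are not part of Harbeth.

```swift
// CPU mirror (in Float rather than half) of the per-pixel math in the C7SoulOut kernel.
struct SoulOutMath {
    var maxScale: Float = 1.5
    var maxAlpha: Float = 0.5

    // Opacity of the enlarged copy: maxAlpha at soul = 0, fading to 0 at soul = 1.
    func alpha(soul: Float) -> Float {
        maxAlpha * (1 - soul)
    }

    // Zoom factor of the enlarged copy: 1 at soul = 0, growing to maxScale at soul = 1.
    func scale(soul: Float) -> Float {
        1 + (maxScale - 1) * soul
    }

    // Normalized coordinate sampled for the mask; dividing by scale pulls it
    // toward the center (0.5), which magnifies the image around its center.
    func sampleCoordinate(_ x: Float, soul: Float) -> Float {
        0.5 + (x - 0.5) / scale(soul: soul)
    }

    // Linear blend of the original value with the sampled mask value.
    func blend(input: Float, mask: Float, soul: Float) -> Float {
        let a = alpha(soul: soul)
        return input * (1 - a) + mask * a
    }
}

// Hypothetical timer helper: a sawtooth that sweeps soul from 0 up to 1
// once every `period` seconds of elapsed time.
func soulValue(elapsed: Double, period: Double = 1.0) -> Float {
    Float((elapsed / period).truncatingRemainder(dividingBy: 1))
}

let math = SoulOutMath()
for t in [0.0, 0.25, 0.5, 0.75] {
    let soul = soulValue(elapsed: t)
    print("soul:", soul, "alpha:", math.alpha(soul: soul), "scale:", math.scale(soul: soul))
}
```

In an app, a `Timer` or `CADisplayLink` callback could compute `soulValue(elapsed:)`, assign it to the `soul` property of a `var` copy of the filter, and re-render with `originImage ->> filter`; that wiring is an assumption, not the Demo's code.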

- To import the Metal module, add this to your Podfile:

```ruby
pod 'Harbeth'
```

- To import the OpenCV image module, add this to your Podfile:

```ruby
pod 'OpencvQueen'
```

Swift Package Manager is a tool for managing the distribution of Swift code. It’s integrated with the Swift build system to automate the process of downloading, compiling, and linking dependencies.

Xcode 11+ is required to build Harbeth using Swift Package Manager.

To integrate Harbeth into your Xcode project using Swift Package Manager, add it to the `dependencies` value of your `Package.swift`:

```swift
dependencies: [
    .package(url: "https://github.com/yangKJ/Harbeth.git", branch: "master"),
]
```

That is roughly the whole process. The Demo is also written in great detail, so you can check it out for yourself. 🎷

Tip: If you find this helpful, please give it a star. If you have any questions or requests, feel free to open an issue.

Thanks.🎇

- 🎷 E-mail address: yangkj310@gmail.com 🎷

- 🎸 GitHub address: yangKJ 🎸

Harbeth is available under the MIT license. See the LICENSE file for more info.