====

The R package RandomForestsGLS: Random Forests for dependent data fits non-linear regression models on dependent data with Generalized Least Square (GLS) based Random Forest (RF-GLS). Classical Random forests ignore the correlation structure in the data for purpose of greedy partition, mean estimation and resampling for each regression tree. The package implements a RF-GLS proposed in Saha et al. (2021) which circumvents the aforementioned problems by incorporating a working correlation structure of the data for partition, mean estimation and resampling. In this article, it is shown that the greedy split criterion of classical regression trees can be written as an Ordinary Least Square (OLS) optimization with membership in current leaf nodes forming the design matrix. The article extends RF to RF-GLS in a similar fashion to how OLS is extended to GLS by incorporating the covariance structure of the data in the cost function. This ensures that the node splitting and node representatives involve contribution from points belonging to other nodes, weighed by their respective spatial correlations. In classical Random Forest (RF), data points are resampled/subsampled for each of the regression trees, without accounting for their inherent correlation structure. RF-GLS circumvents this problem by resampling/subsampling uncorrelated contrasts instead of original data points. RandomForestsGLS implements a fast version of RF-GLS which approximates the working correlation structure using Nearest Neighbor Gaussian Process (NNGP) (Datta et al., 2016) which makes it suitable for larger datasets.

In order to install the development version of the package, please run the following command in R:

if (!require("devtools")) install.packages("devtools")

devtools::install_github("ArkajyotiSaha/RandomForestsGLS", ref ="HEAD")

For installation of the CRAN version of the package, please use the following command in R:

install.packages("RandomForestsGLS")

The package vignette, available at https://cran.rstudio.com/web/packages/RandomForestsGLS/vignettes/RandomForestsGLS_user_guide.pdf demonstrates with example how the functions available in RandomForestsGLS can be used for non-linear regression analysis of dependent data. Specific functions are discussed in much detail in the code documentation of the package.

For detailed help on the functions in RandomForestsGLS please use the following:

?RFGLS_estimate_spatial #(for estimation in spatial data)

?RFGLS_estimate_timeseries #(for estimation in timeseries data)

?RFGLS_predict #(for prediction of mean function)

?RFGLS_predict_spatial #(for prediction of Spatial Response)

The function input and outputs are described in detail in the reference manual documentation, available in https://cran.rstudio.com/web/packages/RandomForestsGLS/RandomForestsGLS.pdf .

More often than not, the true covariance parameters of the data are not known apriori. Given a choice from a prespecified list of covariance functions (software presently allows for exponential, spherical, Matérn and Gaussian covariances), the software accommodates parameter estimation with the argument param_estimate = TRUE. For this, first we fit a classical RF ignoring the correlation structure. Next, we fit a zero mean Gaussian process with the covariance structure of choice on the residuals to obtain the estimate of the model parameters through maximum likelihood estimation. This initial RF fitting and estimation of the spatial parameters from the RF residuals is justified by theoretical results in Saha et al. (2021) proving that even under dependence, naive RF is consistent (although empirically suboptimal). The estimated model parameters are then used to fit RF-GLS. As demonstrated in Saha et al. (2021), this leads to significant improvement over classical RF both in terms of estimation and prediction accuracy. This procedure is again analogous to the linear model setup, where fitting a feasible GLS model often involves pre-estimating the covariance parameters from residuals from an OLS fit.

It has also been demonstrated (Section 4.4 in Saha et al. (2021)) that even under misspecification in covariance structure (i.e. when the true spatial effects are generated from a different covariance structure than the specified covariance for estimation or arbitrary smooth function), using RF-GLS with exponential covariance (the default choice of covariance function in the software) leads to noticeable improvement over simply using classical RF.

One additional consideration for model parameter estimation involves the computational complexity of the maximum likelihood estimation of Gaussian process. In its original form, this involves

For time-series data, most often the model parameters for the autoregressive process (order of autoregression and the autocorrelation coefficients) are unknown. These are estimated using a strategy similar to the spatial case. With the option param_estimate = TRUE, the software estimates the model coefficients by fitting a zero mean autoregressive process of user defined order on the residuals from a naive RF fit. The autoregressive parameter fitting is done using arima from base R package stats.

For RFGLS_estimate_spatial, RFGLS_estimate_timeseries, RFGLS_predict and RFGLS_predict_spatial one can also take the advantage of parallelization, contingent upon the availability of multiple cores. One aspect of the parallelizatiion is through the multithreaded implementation of derivation of the NNGP (Datta et al., 2016) components following BRISC package (which helps when we are dealing with a large dataset). We also run the regression trees on parallel, which can be beneficial when the number of trees is large. With very small dataset and small number of trees, communication overhead between the nodes for parallelization outweighs the benefits of the parallel computing. Hence it is recommended to parallelize only for moderately large dataset and/or large number of trees.

-

Implementation: The source code of the package are written in C/C++ for sake of optimizing execution time. The functions available to the user are wrappers around the source code, built with

R's foreign language interface. For the basic structure of the code, we make use of the open-source code of the regression trees inRbased implementation of classical RF inrandomForestpackage. As the split criterion in RF-GLS involves computationally intensive linear algebra operation in nested loops, we useFortran's Basic Linear Algebra Subprograms (BLAS) and Linear Algebra Package (LAPACK). This is achieved by storing all matrices in contiguous memory column-major format. We also offer multicore computation by building each regression tree independently. -

NNGP approximation: Node splitting in RF-GLS requires optimizing a cost function involving the Cholesky factor of the precision matrix. Use of the full dense precision matrix in spatial processes becomes taxing on typical personal computing resources both in terms of computational cost ($O(n^3)$) and storage cost ($O(n^2)$). In order to circumvent this problem, we use NNGP (Datta et al., 2016) to replace the dense graph among spatial locations with a nearest neighbor graphical model. NNGP components can be combined to obtain a sparse Cholesky factor, which closely approximates the decorrelation performance of the true Cholesky. We implement a convenient nearest neighbor search following spNNGP (Finley et al., 2020) and efficient sparse matrix multiplication as in BRISC (Saha and Datta, 2018). The structure of the loops used in the process facilitates parallelization using

openMP(Dagum and Menon, 1998) for this stage of calculation. In time series analysis, the sparsity in the precision matrix is inherently induced by AR covariance structure. -

Scalable node splitting: Another aspect of optimization of the proposed algorithm involves resourceful implementation of the cost function optimization. Provided candidate cut direction (

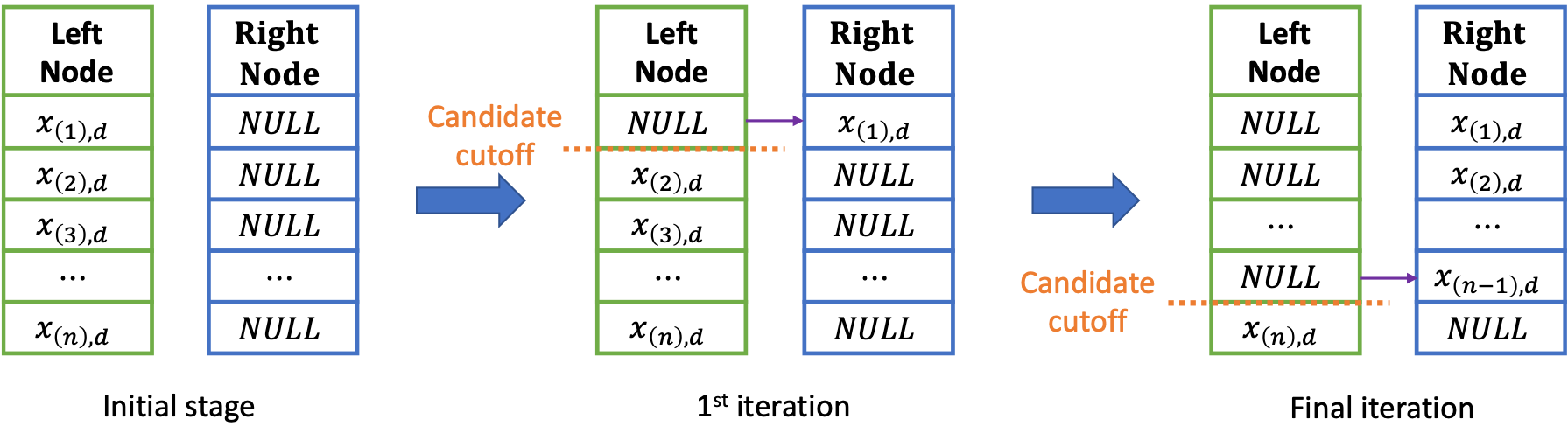

$d$ ), the optimal cutoff point ($c$ ) is chosen by searching through the "gaps" in the corresponding covariate. Following the implementation of classical Regression Forest in randomForest, we start with a list of ordered covariate values corresponding to the prefixed candidate direction and assign the associated data points to one of the nodes initially. This helps with searching through the "gaps" in the data, as searching the next "gap" is equivalent to switch of membership of the existing smallest member (w.r.t the covariate value in the prefixed direction) of the initially assigned node. In order to determine the cost function corresponding to each of the potential cutoff points, in each iteration, we serially switch the membership of the data points from the initial node. The process is graphically demonstrated in the following figure.

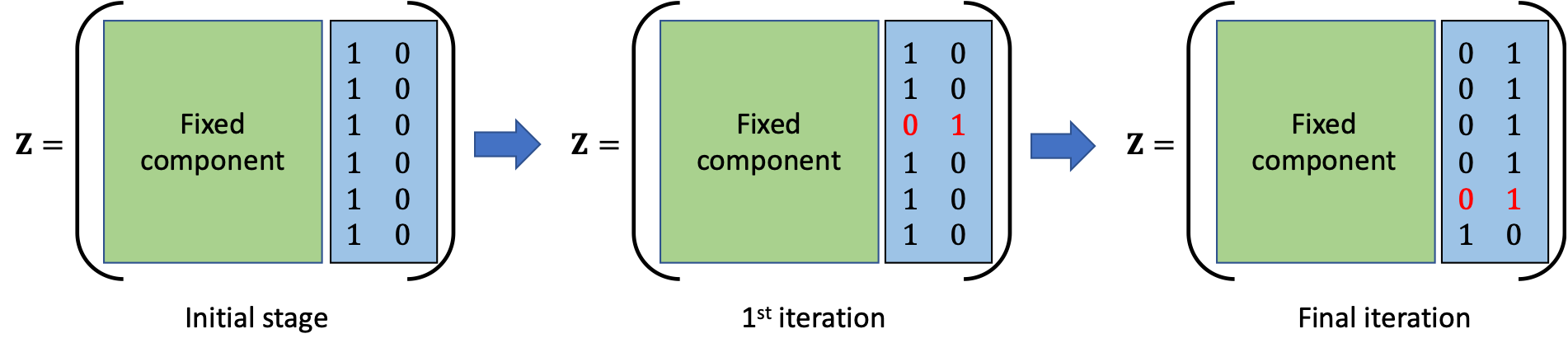

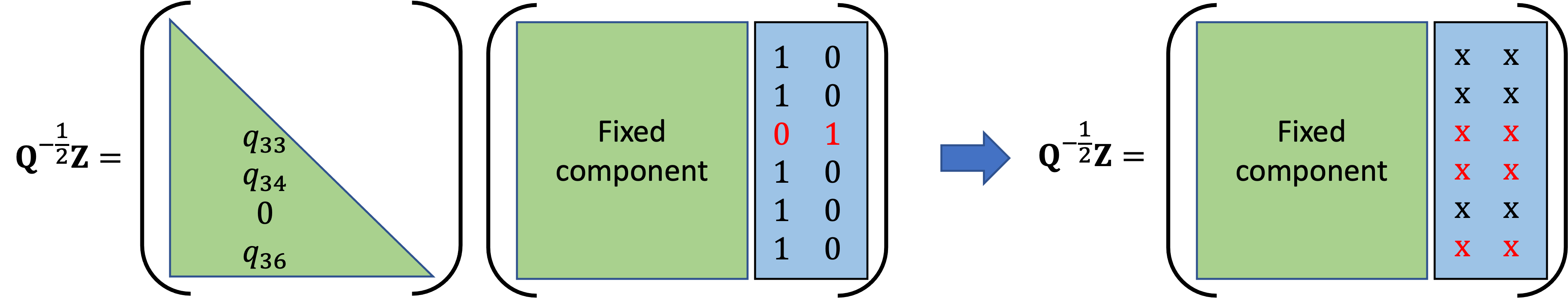

Since a serial update only affects one row in two columns of design matrix Z (corresponding to the newly formed nodes, an example of changes in Z corresponding to the changes in the figure above is demonstrated in the following figure, here we note that the covariate values corresponding to the matrix components are unordered, hence the serial update will not follow the natural ordering), the resulting correlation adjusted effective design matrix (Q1/2 Z) used in GLS loss, only experiences changes in the corresponding two columns and the rows corresonding to the points, which have this specific point in their nearest neighbor set. If we consider the specific example in the following figure, location "3" is a nearest neighbor of only locations "4" and "6". Hence the update rule only effects rows 3, 4 and 6. We efficiently implement this in the package which provides efficiency over brute force recomputation of the effective design matrix for each serial update. The process is graphically demonstrated in the following figure.

Please report issues, bugs or problem with the software at https://github.com/ArkajyotiSaha/RandomForestsGLS/issues . For contribution to the software and support please get in touch with the maintainer Arkajyoti Saha (arkajyotisaha93@gmail.com).

Some code blocks are borrowed from the R packages: spNNGP: Spatial Regression Models for Large Datasets using Nearest Neighbor Gaussian Processes https://CRAN.R-project.org/package=spNNGP and randomForest: Breiman and Cutler's Random Forests for Classification and Regression https://CRAN.R-project.org/package=randomForest .

RF-GLS uses nearest neighbor Gaussian process (NNGP) to approximate the covariance structure in the data, tools necessary to implement the NNGP are borrowed from the spNNGP package, which include util.cpp and parts of updateBF_org and RFGLS_BFcpp in RFGLS.cpp. The basic building blocks for Random Forest is borrowed from randomForest which include parts of RFGLStree_cpp, findBestSplit RFGLSpredicttree_cpp in RFGLS.cpp.

Please cite the following paper when you use RF-GLS

Saha, Arkajyoti, Sumanta Basu, and Abhirup Datta. "Random forests for spatially dependent data." Journal of the American Statistical Association (2021): 1-19. https://www.tandfonline.com/doi/full/10.1080/01621459.2021.1950003 .

Dagum, Leonardo, and Ramesh Menon. "OpenMP: an industry standard API for shared-memory programming." IEEE computational science and engineering 5, no. 1 (1998): 46-55.

Datta, Abhirup, Sudipto Banerjee, Andrew O. Finley, and Alan E. Gelfand. "Hierarchical nearest-neighbor Gaussian process models for large geostatistical datasets." Journal of the American Statistical Association 111, no. 514 (2016): 800-812. https://www.tandfonline.com/doi/full/10.1080/01621459.2015.1044091 .

Saha, Arkajyoti, and Abhirup Datta. "BRISC: bootstrap for rapid inference on spatial covariances." Stat 7, no. 1 (2018): e184. https://onlinelibrary.wiley.com/doi/10.1002/sta4.184 .

Finley, Andrew O., Abhirup Datta, and Sudipto Banerjee. "spNNGP R package for nearest neighbor Gaussian process models." arXiv preprint arXiv:2001.09111 (2020).

Saha, Arkajyoti, Sumanta Basu, and Abhirup Datta. "Random forests for spatially dependent data." Journal of the American Statistical Association (2021): 1-19. https://www.tandfonline.com/doi/full/10.1080/01621459.2021.1950003 .