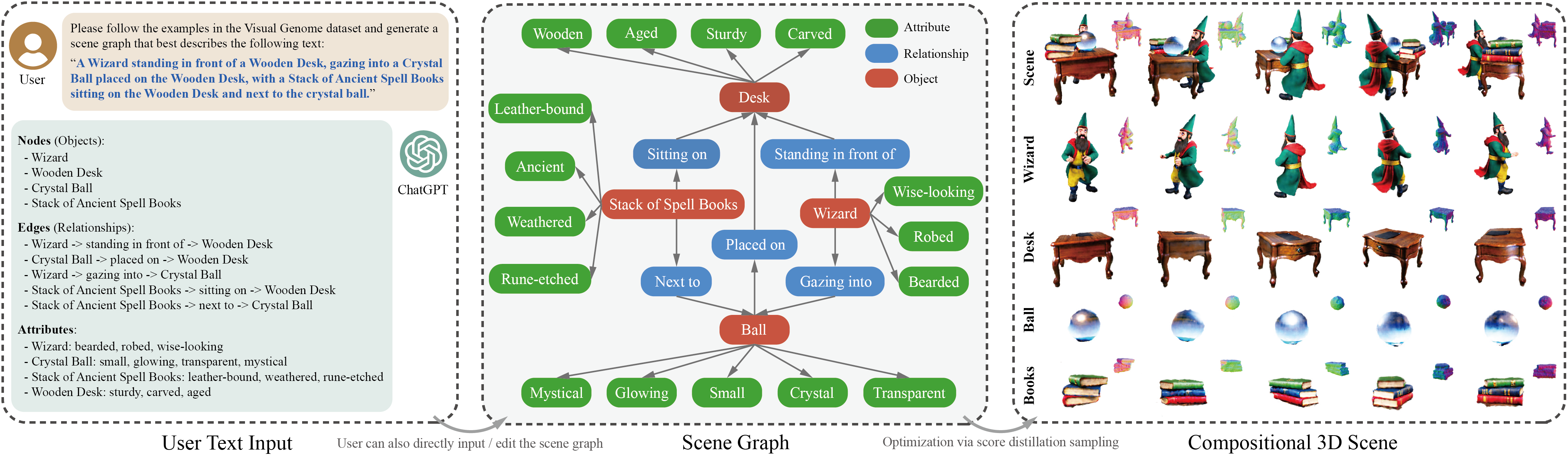

GraphDreamer takes scene graphs as input and generates object compositional 3D scenes.

This repository contains a PyTorch implementation for the paper GraphDreamer: Compositional 3D Scene Synthesis from Scene Graphs. Our work presents the first framework capable of generating compositional 3D scenes from scene graphs, where objects are represented as nodes and their interactions as edges. See the demo below to get a general idea.

git clone https://github.com/GGGHSL/GraphDreamer.git

cd GraphDreamer

Create environment:

python3.10 -m venv venv/GraphDreamer

source venv/GraphDreamer/bin/activate # Repeat this step for every new terminal

Install dependencies:

pip install -r requirements.txt

Install tiny-cuda-nn for running Hash Grid based representations:

pip install git+https://github.com/NVlabs/tiny-cuda-nn/#subdirectory=bindings/torch

Install NerfAcc for NeRF acceleration:

pip install git+https://github.com/KAIR-BAIR/nerfacc.git

The guidance model DeepFloyd IF currently requires you to accept its usage conditions. To do so, you need a Hugging Face account (log in from the terminal with huggingface-cli login) and must accept the license on the model card of DeepFloyd/IF-I-XL-v1.0.

Generate a compositional scene of "a blue jay standing on a large basket of rainbow macarons":

bash scripts/blue_jay.sh

Results of the first (coarse) and second (fine) stages will be saved to examples/gd-if/blue_jay/ and examples/gd-sd-refine/blue_jay/, respectively.

Try different seeds by setting seed=YOUR_SEED in the script.

Use different tags to name different trials by setting export TG=YOUR_TAG to avoid overwriting. More examples can be found under scripts/.

Generating a compositional scene with GraphDreamer is as easy as with other dreamers. Here are the steps:

Give each object you want to create in the scene a prompt by setting

export P1=YOUR_TEXT_FOR_OBJECT_1

export P2=YOUR_TEXT_FOR_OBJECT_2

export P3=YOUR_TEXT_FOR_OBJECT_3

and system.prompt_obj=[["$P1"],["$P2"],["$P3"]] in the bash script.

By default, object SDFs are initialized as spheres with randomly placed centers. The spread of the centers is scaled by the hyperparameter system.geometry.sdf_center_dispersion, which is set to 0.2.

Compose your objects into a scene by giving each object a prompt on its relationship to another object

export P12=RELATIONSHIP_BETWEEN_OBJECT_1_AND_2

export P13=RELATIONSHIP_BETWEEN_OBJECT_1_AND_3

export P23=RELATIONSHIP_BETWEEN_OBJECT_2_AND_3

and add system.prompt_global=[["$P12"],["$P23"],["$P13"]] to your script. Based on these relationships, a graph is created with the corresponding edges by setting export E=[[0,1],[1,2],[0,2]] and system.edge_list=$E.
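The pairing between relationship prompts and edges can be built mechanically rather than by hand. The following is a minimal Python sketch, not part of the repository: the helper `build_graph` and the dictionary layout are hypothetical, and it assumes (as the ordering in the example above suggests) that the k-th entry of system.prompt_global describes the k-th edge in system.edge_list, with 0-based object indices.

```python
import json


def build_graph(relationships):
    """Return (edge_list, prompt_global) from a {(i, j): text} mapping.

    Keys are 0-based object index pairs. Insertion order is preserved so that
    edge k and global prompt k refer to the same pair (an assumption here).
    """
    edges, prompts = [], []
    for (i, j), text in relationships.items():
        if i == j:
            raise ValueError("an edge must connect two different objects")
        edges.append([min(i, j), max(i, j)])
        prompts.append([text])
    return edges, prompts


# Placeholder texts, listed in the same order as in the README example.
relationships = {
    (0, 1): "RELATIONSHIP_BETWEEN_OBJECT_1_AND_2",
    (1, 2): "RELATIONSHIP_BETWEEN_OBJECT_2_AND_3",
    (0, 2): "RELATIONSHIP_BETWEEN_OBJECT_1_AND_3",
}
edges, prompt_global = build_graph(relationships)

# Compact JSON reproduces the bracket syntax used for E in the script.
edge_override = "system.edge_list=" + json.dumps(edges, separators=(",", ":"))
print(edge_override)  # system.edge_list=[[0,1],[1,2],[0,2]]
```

Keeping prompts and edges in one mapping makes it harder for the two lists to drift out of order when objects are added or removed.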

Prompt the global scene by combining P12, P13, and P23 into a sentence

export P=GLOBAL_TEXT_FOR_THE_SCENE

and add system.prompt_processor.prompt="$P" into the script.

In this compositional scenario, we found a simple way to create the "negative" prompt for individual objects. For each object, all other objects plus their relationships can be used as a negative prompt,

export N1=$P23

export N2=$P13

export N3=$P12

and setting system.prompt_obj_neg=[["$N1"],["$N2"],["$N3"]].

You can further refine each negative prompt based on this general rule.
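The rule above (the negative prompt of an object is built from the relationships that do not involve it) can be written down in a few lines. This is a hedged Python sketch, not code from the repository: `negative_prompts` is a hypothetical helper, and joining several relationships with a comma when there are more than three objects is an assumption for illustration.

```python
def negative_prompts(num_objects, relationships):
    """For each object, collect the relationship texts that do not involve it.

    `relationships` maps 0-based index pairs (i, j) to relationship prompts.
    """
    negatives = []
    for obj in range(num_objects):
        others = [
            text for (i, j), text in relationships.items() if obj not in (i, j)
        ]
        # Assumption: multiple remaining relationships are joined with ", ".
        negatives.append([", ".join(others)])
    return negatives


# Short stand-ins for the prompt variables P12, P13, and P23.
relationships = {(0, 1): "P12", (0, 2): "P13", (1, 2): "P23"}
prompt_obj_neg = negative_prompts(3, relationships)
print(prompt_obj_neg)  # [['P23'], ['P13'], ['P12']]
```

For three objects this reproduces the assignment N1=$P23, N2=$P13, N3=$P12 given above.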

Start a new training run simply by

export TG=YOUR_OWN_TAG

# Use different tags to avoid overwriting

python launch.py --config CONFIG_FILE --train --gpu 0 exp_root_dir="examples" system.geometry.num_objects=3 use_timestamp=false tag=$TG OTHER_CONFIGS

Set your own tag for the saving folder with export TG=YOUR_OWN_TAG and tag=$TG. To include a timestamp in the folder name, set use_timestamp=true.

The training configurations for the coarse stage are stored in configs/gd-if.yaml and the fine stage in configs/gd-sd-refine.yaml.
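If you prefer to drive training from Python instead of a bash script, the command line above can be assembled as an argument list. This is a minimal sketch under stated assumptions: `launch_args` is a hypothetical helper, only flags and overrides that appear in this README are used, and the command is printed rather than executed.

```python
import json
import shlex


def launch_args(config, tag, prompt_obj, gpu=0, exp_root_dir="examples"):
    """Build the launch.py argument list for one training run."""

    def compact(value):
        # Compact JSON matches the [["..."],["..."]] syntax used in the scripts.
        return json.dumps(value, separators=(",", ":"))

    return [
        "python", "launch.py", "--config", config, "--train", "--gpu", str(gpu),
        f"exp_root_dir={exp_root_dir}",
        f"system.geometry.num_objects={len(prompt_obj)}",
        "use_timestamp=false",
        f"tag={tag}",
        f"system.prompt_obj={compact(prompt_obj)}",
    ]


prompt_obj = [
    ["YOUR_TEXT_FOR_OBJECT_1"],
    ["YOUR_TEXT_FOR_OBJECT_2"],
    ["YOUR_TEXT_FOR_OBJECT_3"],
]
args = launch_args("configs/gd-if.yaml", "YOUR_OWN_TAG", prompt_obj)

# shlex.join adds the shell quoting needed to paste the command into a terminal.
print(shlex.join(args))
```

Passing the list to `subprocess.run(args, check=True)` would start the coarse stage; deriving num_objects from the prompt list keeps the two settings from disagreeing.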

To resume from a previous checkpoint, e.g., to start the fine stage from a coarse-stage training run, set

resume=examples/gd-if/$TG/ckpts/last.ckpt

GraphDreamer can be used to invert the semantics in a given image into a 3D scene, by extracting a scene graph directly from an input image with ChatGPT-4.

To generate more objects and accelerate convergence, you may provide rough center coordinates for initializing each object by setting in the script:

export C=[[X1,Y1,Z1],[X2,Y2,Z2],...,[Xm,Ym,Zm]]

This will initialize the SDF-based objects as spheres centered at your given coordinates. The initial size of each object SDF sphere can also be customized by setting the radius:

export R=[R1,R2,...,Rm]

Check ./threestudio/models/geometry/gdreamer_implicit_sdf.py for more details on this implementation.
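To build intuition for what sphere initialization means, here is a small, self-contained Python sketch of a sphere signed distance function. It is an illustration only and not the repository's implementation: the sign convention (negative inside the sphere), the helper names, and the uniform sampling of random centers scaled by a dispersion factor are all assumptions.

```python
import math
import random


def sphere_sdf(point, center, radius):
    """Signed distance to a sphere: negative inside, zero on the surface."""
    return math.dist(point, center) - radius


def random_centers(num_objects, dispersion=0.2, seed=0):
    """Sample centers uniformly in [-1, 1]^3, then scale by the dispersion.

    The sampling distribution is an assumption made for illustration.
    """
    rng = random.Random(seed)
    return [
        [dispersion * rng.uniform(-1.0, 1.0) for _ in range(3)]
        for _ in range(num_objects)
    ]


# User-provided centers and radii, analogous to C and R in the script.
centers = [[0.0, 0.0, 0.0], [0.5, 0.0, 0.0]]
radii = [0.3, 0.2]
origin = [0.0, 0.0, 0.0]
for center, radius in zip(centers, radii):
    # The origin lies inside the first sphere and outside the second.
    print(sphere_sdf(origin, center, radius))
```

A small dispersion keeps the random centers close together, so explicit centers are the more predictable choice when a scene contains many objects.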

The authors extend their thanks to Zehao Yu and Stefano Esposito for their invaluable feedback on the initial draft. Our thanks also go to Yao Feng, Zhen Liu, Zeju Qiu, Yandong Wen, and Yuliang Xiu for their proofreading of the final draft and for their insightful suggestions which enhanced the quality of this paper. Additionally, we appreciate the assistance of those who participated in our user study.

Weiyang Liu and Bernhard Schölkopf were supported by the German Federal Ministry of Education and Research (BMBF): Tübingen AI Center, FKZ: 01IS18039B, and by the Machine Learning Cluster of Excellence, the German Research Foundation (DFG): SFB 1233, Robust Vision: Inference Principles and Neural Mechanisms, TP XX, project number: 276693517. Andreas Geiger and Anpei Chen were supported by the ERC Starting Grant LEGO-3D (850533) and the DFG EXC number 2064/1 - project number 390727645.

This codebase is developed upon threestudio. We appreciate its maintainers for their significant contributions to the community.

@Inproceedings{gao2024graphdreamer,

author = {Gege Gao and Weiyang Liu and Anpei Chen and Andreas Geiger and Bernhard Schölkopf},

title = {GraphDreamer: Compositional 3D Scene Synthesis from Scene Graphs},

booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2024},

}