This repository contains a CycleGAN implementation that converts landscape images into Monet-style paintings. The CycleGAN architecture uses a pair of generators and a pair of discriminators to perform image-to-image translation between the two domains.

The project involves training two generators and two discriminators:

- Generators: One generator translates landscape images into Monet-style paintings, and the other translates paintings back into landscapes.

- Discriminators: One discriminator per domain distinguishes real images from generated ones, for landscapes and paintings respectively.
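For reference, Zhu et al. (2017) write X for one domain (here, landscapes) and Y for the other (Monet paintings), with generators G: X → Y and F: Y → X and discriminators D_Y and D_X. Their full objective is reproduced below in the paper's notation; λ weights the cycle-consistency term (the paper uses λ = 10). How this repository's generators and critics map onto these symbols is an assumption, and the paper itself notes that in practice the log-likelihood adversarial term is replaced by a least-squares loss.

```math
\begin{aligned}
\mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) &= \mathbb{E}_{y \sim p_{\text{data}}(y)}\big[\log D_Y(y)\big] + \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log\big(1 - D_Y(G(x))\big)\big] \\
\mathcal{L}_{\text{cyc}}(G, F) &= \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\lVert F(G(x)) - x \rVert_1\big] + \mathbb{E}_{y \sim p_{\text{data}}(y)}\big[\lVert G(F(y)) - y \rVert_1\big] \\
\mathcal{L}(G, F, D_X, D_Y) &= \mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\text{GAN}}(F, D_X, Y, X) + \lambda\, \mathcal{L}_{\text{cyc}}(G, F) \\
G^*, F^* &= \arg\min_{G, F} \max_{D_X, D_Y} \mathcal{L}(G, F, D_X, D_Y)
\end{aligned}
```

The generators minimize this objective while the discriminators maximize it; the cycle term is what ties the two translation directions together.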

The following dependencies are required:

- Python 3.x

- PyTorch

- Albumentations

The repository structure is organized as follows:

- `generator_model.py`: Contains the generator architecture.

- `discriminator_model.py`: Holds the discriminator architecture.

- `dataset.py`: Defines the dataset class for loading landscape and painting images.

- `utils.py`: Contains utility functions for model checkpointing.

- `config.py`: Stores configuration parameters and the transforms used for data preprocessing.

- `train.py`: Main script for training the CycleGAN model.
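Because the training data is unpaired, a dataset for this task cannot simply zip landscapes with paintings. The sketch below is a hypothetical, dependency-free illustration of one common approach (it is not the repository's `dataset.py`): the dataset length is the size of the larger domain, and the smaller domain is wrapped around with a modulo. The class name `UnpairedImageDataset` is invented for illustration, and it returns file paths rather than decoded images; a PyTorch version would typically subclass `torch.utils.data.Dataset`, open each file with an image library, and apply the transforms from `config.py`.

```python
import os
from typing import List, Tuple


class UnpairedImageDataset:
    """Hypothetical sketch of an unpaired two-domain dataset.

    Landscape and painting files are indexed independently, so the two
    folders may contain different numbers of images.
    """

    def __init__(self, landscape_files: List[str], painting_files: List[str]):
        if not landscape_files or not painting_files:
            raise ValueError("both domains need at least one image")
        # Sort so that indexing is deterministic across runs.
        self.landscape_files = sorted(landscape_files)
        self.painting_files = sorted(painting_files)

    @classmethod
    def from_dirs(cls, landscape_dir: str, painting_dir: str) -> "UnpairedImageDataset":
        # Collect every entry in each (hypothetical) image directory.
        return cls(
            [os.path.join(landscape_dir, f) for f in os.listdir(landscape_dir)],
            [os.path.join(painting_dir, f) for f in os.listdir(painting_dir)],
        )

    def __len__(self) -> int:
        # One pass visits every image of the larger domain once.
        return max(len(self.landscape_files), len(self.painting_files))

    def __getitem__(self, index: int) -> Tuple[str, str]:
        # The modulo wraps the smaller domain, so the pairing is arbitrary
        # rather than a true one-to-one correspondence.
        landscape = self.landscape_files[index % len(self.landscape_files)]
        painting = self.painting_files[index % len(self.painting_files)]
        return landscape, painting
```

For example, with three landscapes and one painting, `len(ds)` is 3 and every item pairs a different landscape with the same painting.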

To get started, clone the repository and change into its directory:

```bash
git clone https://github.com/Anirvan-Krishna/landscape-to-monet.git
cd landscape-to-monet
```

Adjust the configuration parameters in `config.py` to set the learning rate, batch size, and other settings.
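The README does not list the variable names or defaults in `config.py`, so the following is only a hypothetical sketch of how such settings can be grouped. The `TrainConfig` class and its field names are assumptions; the learning rate of 2e-4 and batch size of 1 follow the CycleGAN paper, `lambda_cycle = 10` is the paper's cycle-loss weight, `num_epochs = 15` mirrors the results reported in this README, and the checkpoint file names are the ones this README mentions. Albumentations transforms are left out to keep the sketch dependency-free.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical training settings; the real config.py may differ."""

    learning_rate: float = 2e-4   # initial Adam learning rate in the CycleGAN paper
    batch_size: int = 1           # the paper trains with a batch size of 1
    num_epochs: int = 15          # matches the 15-epoch results in this README
    lambda_cycle: float = 10.0    # weight of the cycle-consistency loss
    image_size: int = 256         # hypothetical resize target for both domains
    save_model: bool = True       # whether to write checkpoints during training
    # Checkpoint file names as listed in this README.
    checkpoint_gen_p: str = "genp.pth.tar"
    checkpoint_gen_l: str = "genl.pth.tar"
    checkpoint_critic_l: str = "criticl.pth.tar"
    checkpoint_critic_p: str = "criticp.pth.tar"
```

Overriding a value then looks like `TrainConfig(learning_rate=1e-4, batch_size=2)`. Making the dataclass frozen means a setting cannot be changed accidentally partway through a run.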

The trained generator models (`genp.pth.tar` and `genl.pth.tar`) and discriminator models (`criticl.pth.tar` and `criticp.pth.tar`) are saved periodically during training.

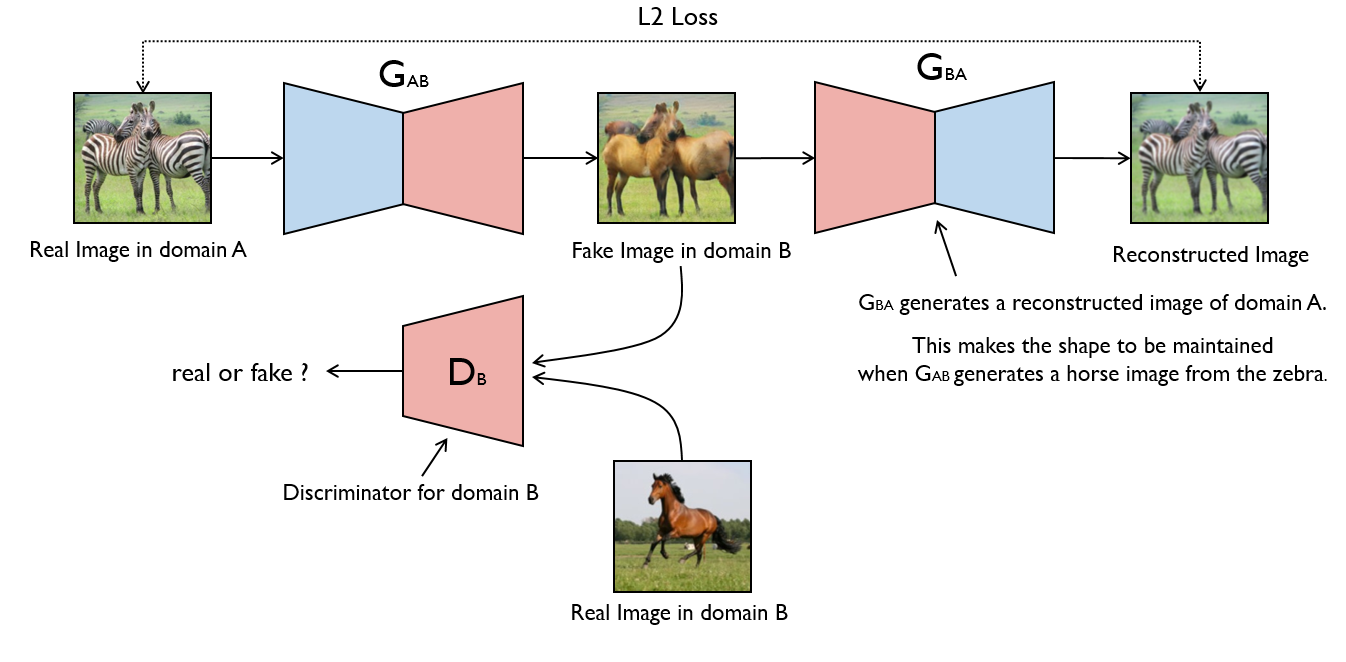

Key features of CycleGAN:

- Unpaired Image Translation: Enables translation between two domains without requiring paired (one-to-one) examples in the training data.

- Cycle Consistency: Preserves image content during translation by requiring that a translated image can be reconstructed back in its original domain.

- Generator-Discriminator Pairs: Uses two generators, one per translation direction, each paired with a discriminator that pushes its outputs toward realism in the target domain.

- Adversarial Training: The generators try to fool the discriminators, while the discriminators learn to tell real images from generated ones.

- Cycle Consistency Loss: Ensures that an image translated from domain A to domain B and then back to domain A stays close to the original image.
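The interplay of the adversarial and cycle-consistency losses can be sketched without any deep-learning library. In this hypothetical example, images are flat lists of floats and the networks are plain callables, so only the arithmetic is shown. It assumes a least-squares adversarial loss (which Zhu et al. use in their experiments), an L1 cycle loss, and λ = 10; the names `gen_p`, `gen_l`, `critic_p`, and `critic_l` are invented to echo the checkpoint file names and are not taken from the repository's code. In PyTorch, the same terms would typically be computed on tensors with `nn.MSELoss` and `nn.L1Loss`.

```python
from typing import Callable, List

Image = List[float]          # a flattened image, e.g. pixel values in [-1, 1]
Critic = Callable[[Image], List[float]]  # returns a map of realism scores


def mse(a: List[float], b: List[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def l1(a: List[float], b: List[float]) -> float:
    """Mean absolute error between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def generator_loss(
    landscape: Image,
    painting: Image,
    gen_p: Callable[[Image], Image],  # hypothetical: landscape -> painting
    gen_l: Callable[[Image], Image],  # hypothetical: painting -> landscape
    critic_p: Critic,                 # scores paintings
    critic_l: Critic,                 # scores landscapes
    lambda_cycle: float = 10.0,
) -> float:
    fake_painting = gen_p(landscape)
    fake_landscape = gen_l(painting)

    # Least-squares adversarial term: generators want every score to be 1 ("real").
    d_p = critic_p(fake_painting)
    d_l = critic_l(fake_landscape)
    adversarial = mse(d_p, [1.0] * len(d_p)) + mse(d_l, [1.0] * len(d_l))

    # Cycle term: translating to the other domain and back should reproduce the input.
    cycle = l1(gen_l(fake_painting), landscape) + l1(gen_p(fake_landscape), painting)
    return adversarial + lambda_cycle * cycle


def critic_loss(real: Image, fake: Image, critic: Critic) -> float:
    # A critic should score real images as 1 and generated images as 0.
    d_real = critic(real)
    d_fake = critic(fake)
    return mse(d_real, [1.0] * len(d_real)) + mse(d_fake, [0.0] * len(d_fake))
```

With identity generators and a critic that always outputs 1, both terms vanish and `generator_loss` returns 0.0. A generator that shifts every pixel breaks the round trip, and the cycle term then dominates because of the factor λ.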

CycleGAN has been widely applied to tasks such as style transfer, image-to-image translation, and artistic image synthesis.

| Input | Output |
|---|---|

These results were obtained after 15 epochs of training. The model's outputs improve significantly with further training.

For more details on the architecture, refer to: Zhu, J.-Y., Park, T., Isola, P., & Efros, A. A. (2017). Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In *Proceedings of the IEEE International Conference on Computer Vision (ICCV)*.