This project hosts the code for implementing the DenseCL algorithm for self-supervised representation learning.

Dense Contrastive Learning for Self-Supervised Visual Pre-Training,

Xinlong Wang, Rufeng Zhang, Chunhua Shen, Tao Kong, Lei Li

In: Proc. IEEE Conf. Computer Vision and Pattern Recognition (CVPR), 2021

arXiv preprint (arXiv 2011.09157)

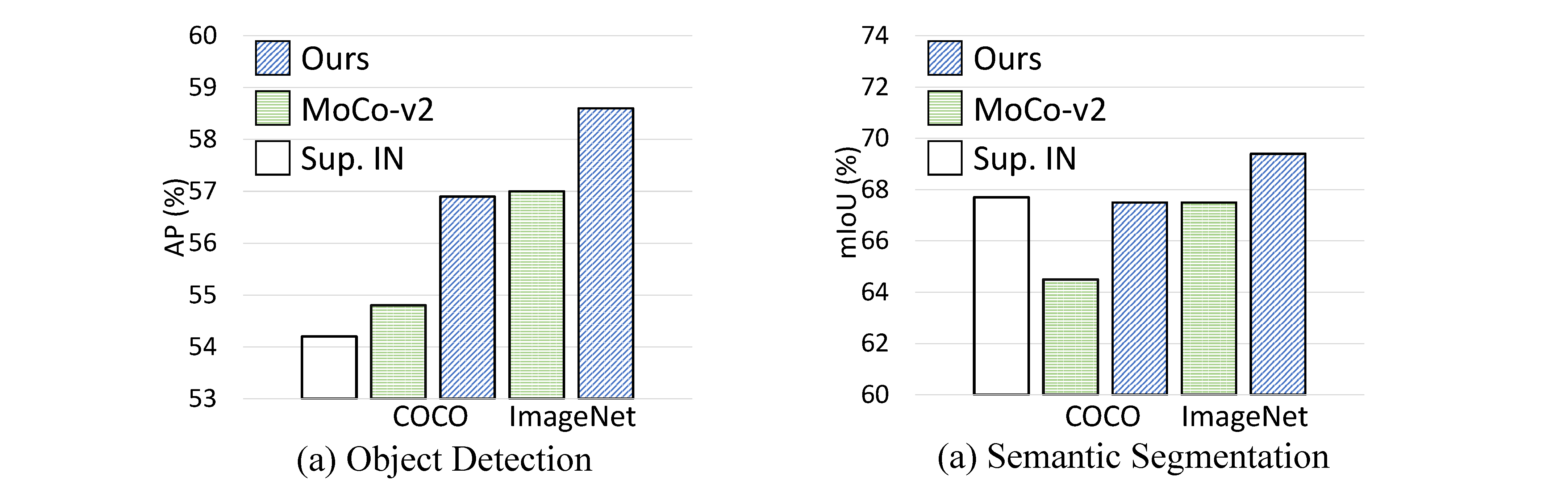

- Boosting dense predictions: DenseCL pre-trained models substantially benefit dense prediction tasks, including object detection and semantic segmentation (up to +2% AP and +3% mIoU).

- Simple implementation: The core part of DenseCL can be implemented in 10 lines of code, which makes it easy to use and modify.

- Flexible usage: DenseCL is decoupled from the data pre-processing, thus enabling fast and flexible training while being agnostic about what kind of augmentation is used and how the images are sampled.

- Efficient training: Our method introduces negligible computation overhead (less than 1% slower) compared to the baseline method.

- Code and pre-trained models of DenseCL are released. (02/03/2021)
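To make the "simple implementation" highlight above more concrete, here is a minimal NumPy sketch of a dense contrastive loss for a single image pair. It is not the repository's PyTorch code. The function and argument names, the array shapes, the use of backbone features for matching locations, and the temperature of 0.2 are assumptions made for illustration.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors along `axis` to unit length."""
    norm = np.linalg.norm(x, axis=axis, keepdims=True)
    return x / np.maximum(norm, eps)


def dense_contrastive_loss(q_backbone, k_backbone, q_dense, k_dense, queue,
                           temperature=0.2):
    """Illustrative dense InfoNCE loss for one image pair (hypothetical API).

    q_backbone, k_backbone: (N, C) backbone features at N spatial locations.
    q_dense, k_dense:       (N, D) dense projection outputs of the two views.
    queue:                  (K, D) negative keys taken from other images.
    """
    # Match each query location to its most similar key location.
    sim = l2_normalize(q_backbone) @ l2_normalize(k_backbone).T  # (N, N)
    match = sim.argmax(axis=1)                                   # (N,)

    q = l2_normalize(q_dense)
    k_pos = l2_normalize(k_dense)[match]  # positive key per query location
    negatives = l2_normalize(queue)

    # The positive logit sits in column 0, followed by the negatives.
    l_pos = np.sum(q * k_pos, axis=1, keepdims=True)             # (N, 1)
    l_neg = q @ negatives.T                                      # (N, K)
    logits = np.concatenate([l_pos, l_neg], axis=1) / temperature

    # Numerically stable cross-entropy with target class 0.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits[:, 0] - np.log(np.exp(logits).sum(axis=1))
    return -log_prob.mean(), match


# Toy usage with random features (7x7 grid, so N = 49 locations).
rng = np.random.default_rng(0)
feats_q = rng.standard_normal((49, 32))
feats_k = feats_q[rng.permutation(49)]
loss, match = dense_contrastive_loss(
    feats_q, feats_k,
    rng.standard_normal((49, 16)), rng.standard_normal((49, 16)),
    rng.standard_normal((128, 16)),
)
print(float(loss))
```

The sketch averages the per-location losses. In a full training setup this dense term would typically be combined with a global contrastive loss; see the paper for the exact formulation.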

Please refer to INSTALL.md for installation and dataset preparation.

For your convenience, we provide the following models pre-trained on COCO or ImageNet.

| pre-train method | pre-train dataset | backbone | #epoch | training time | VOC det (AP) | VOC seg (mIoU) | Link |
|---|---|---|---|---|---|---|---|
| Supervised | ImageNet | ResNet-50 | - | - | 54.2 | 67.7 | download |
| MoCo-v2 | COCO | ResNet-50 | 800 | 1.0d | 54.7 | 64.5 | download |
| DenseCL | COCO | ResNet-50 | 800 | 1.0d | 56.7 | 67.5 | download |
| DenseCL | COCO | ResNet-50 | 1600 | 2.0d | 57.2 | 68.0 | download |
| MoCo-v2 | ImageNet | ResNet-50 | 200 | 2.3d | 57.0 | 67.5 | download |
| DenseCL | ImageNet | ResNet-50 | 200 | 2.3d | 58.7 | 69.4 | download |
| DenseCL | ImageNet | ResNet-101 | 200 | 4.3d | 61.3 | 74.1 | download |

Note:

- The metrics for VOC det and seg are AP (COCO-style) and mIoU. The results are averaged over 5 trials.

- The training time is measured on 8 V100 GPUs.

- See our paper for more results on different benchmarks.

To train DenseCL (here with the 800-epoch COCO config, launched on 8 GPUs):

    ./tools/dist_train.sh configs/selfsup/densecl/densecl_coco_800ep.py 8

To extract the backbone weights from the resulting checkpoint:

    WORK_DIR=work_dirs/selfsup/densecl/densecl_coco_800ep/
    CHECKPOINT=${WORK_DIR}/epoch_800.pth
    WEIGHT_FILE=${WORK_DIR}/extracted_densecl_coco_800ep.pth
    python tools/extract_backbone_weights.py ${CHECKPOINT} ${WEIGHT_FILE}
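As a rough idea of what the extraction step above does conceptually, the sketch below filters a checkpoint's parameter dictionary down to the backbone entries and strips their prefix. This is an assumption-laden illustration, not the contents of `tools/extract_backbone_weights.py`: the `backbone.` prefix, the `state_dict` key, and the parameter names are hypothetical, and plain strings stand in for weight tensors.

```python
def extract_backbone_weights(state_dict, prefix="backbone."):
    """Keep only entries under `prefix` and strip the prefix from their keys.

    The default prefix is an assumption about how the checkpoint is laid out.
    """
    return {
        key[len(prefix):]: value
        for key, value in state_dict.items()
        if key.startswith(prefix)
    }


# Toy checkpoint: strings stand in for weight tensors (hypothetical names).
checkpoint = {
    "state_dict": {
        "backbone.conv1.weight": "w0",
        "backbone.layer1.0.conv1.weight": "w1",
        "head.mlp.0.weight": "w2",
    }
}

extracted = {"state_dict": extract_backbone_weights(checkpoint["state_dict"])}
print(sorted(extracted["state_dict"]))
```

Stripping the prefix matters because downstream detection and segmentation code usually expects parameter names that match a standalone backbone, without the wrapper module's name in front.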

Please refer to README.md for transferring to object detection and semantic segmentation.

- After extracting the backbone weights, the model can be used to replace the original ImageNet pre-trained model as initialization for many dense prediction tasks.

- If data loading is slow on your machine, especially for ImageNet, we suggest converting ImageNet to lmdb format with folder2lmdb_imagenet.py and using this config for training.

We would like to thank OpenSelfSup for its open-source project and PyContrast for its detection evaluation configs.

Please consider citing our paper in your publications if the project helps your research. The BibTeX reference is as follows.

    @inproceedings{wang2020DenseCL,
      title={Dense Contrastive Learning for Self-Supervised Visual Pre-Training},
      author={Wang, Xinlong and Zhang, Rufeng and Shen, Chunhua and Kong, Tao and Li, Lei},
      booktitle={Proc. IEEE Conf. Computer Vision and Pattern Recognition (CVPR)},
      year={2021}
    }