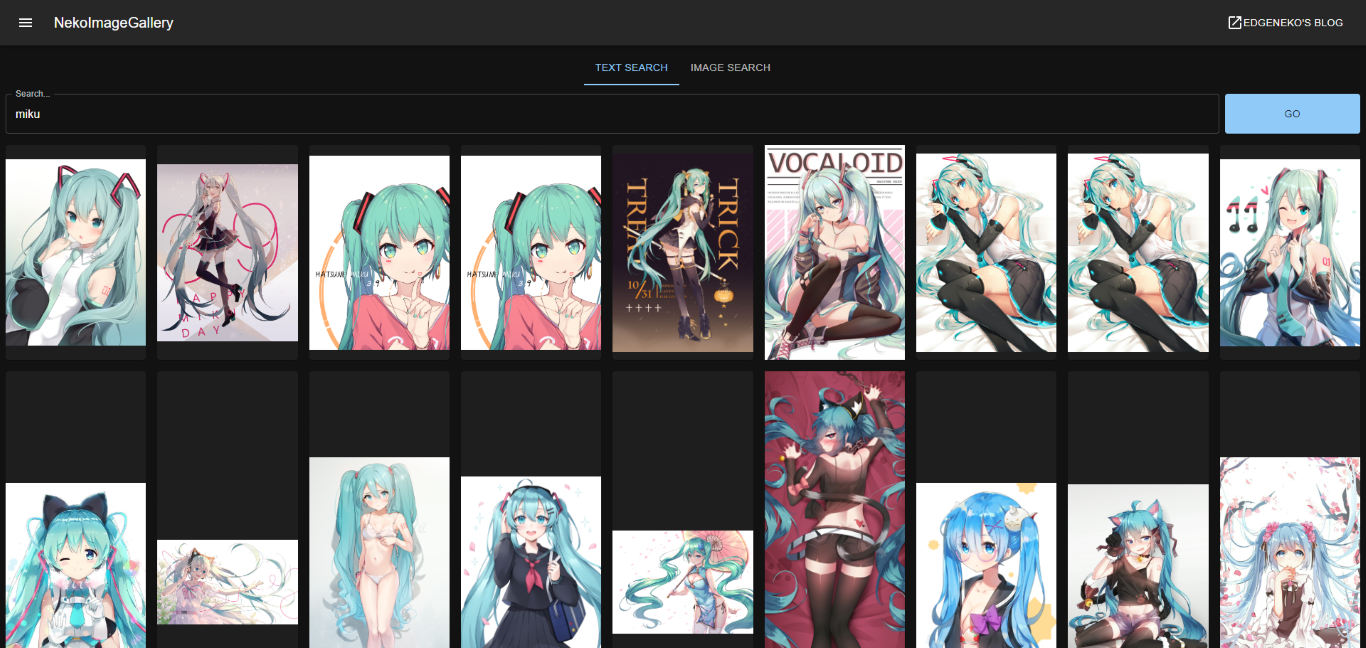

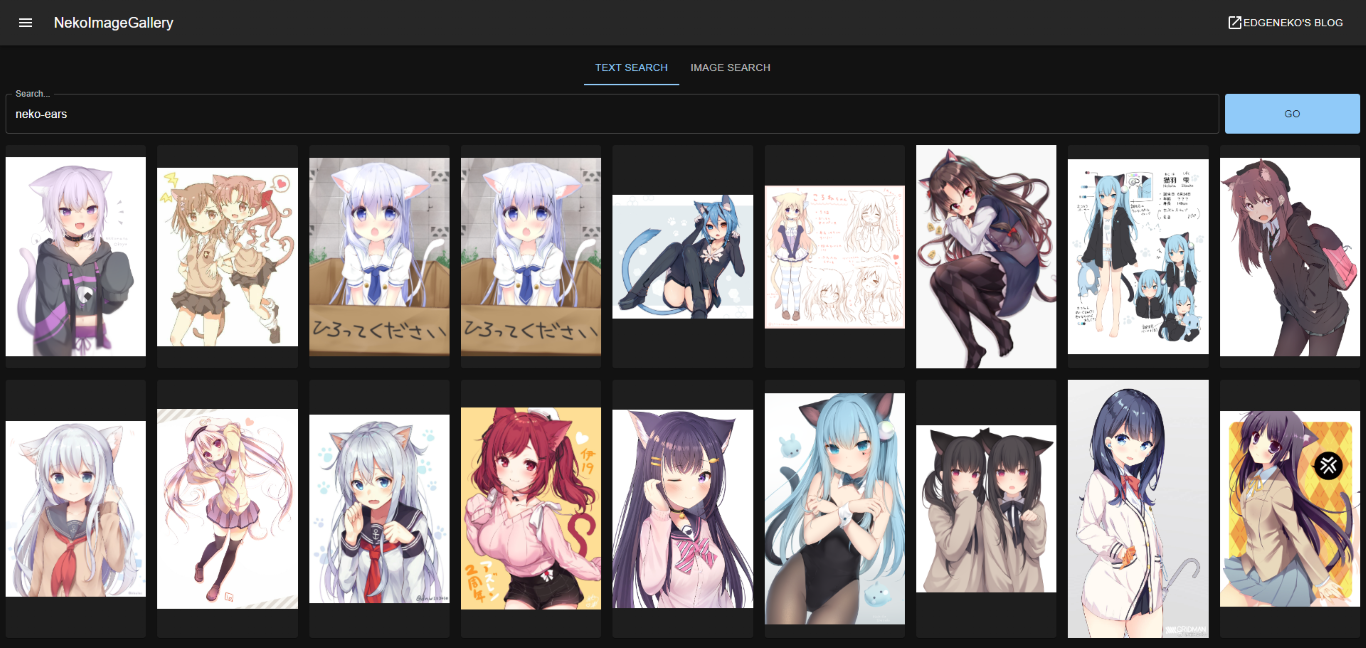

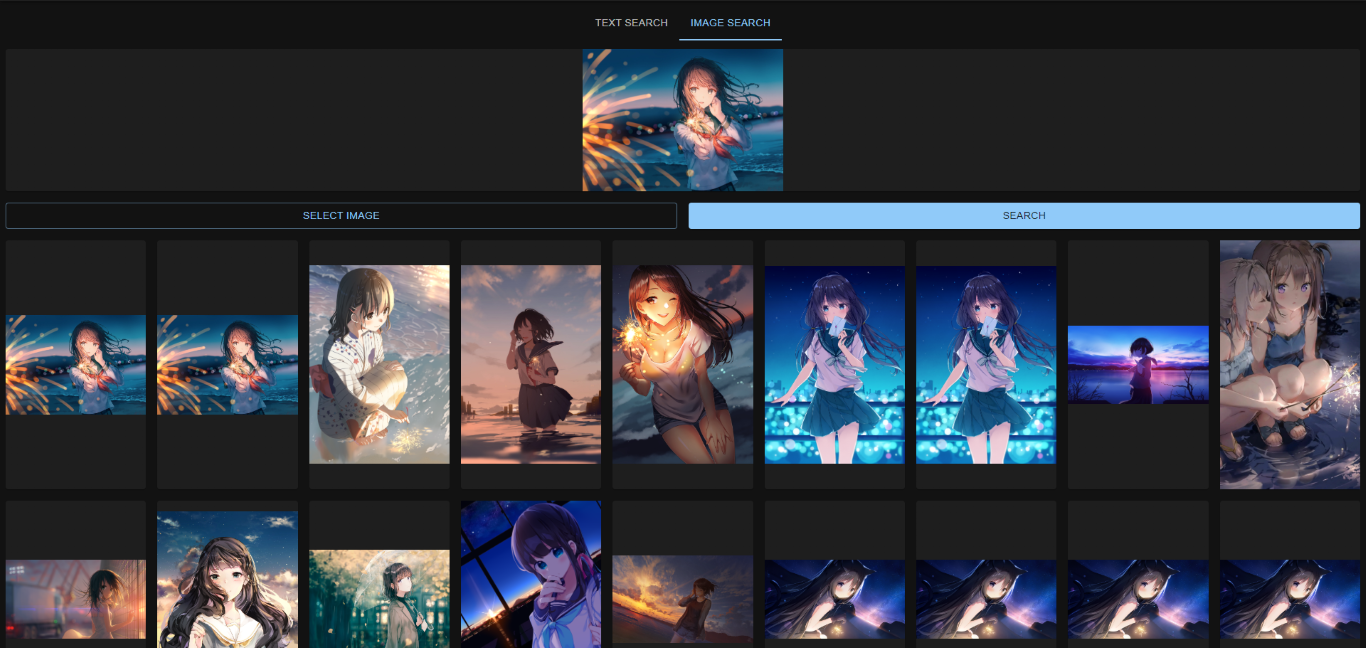

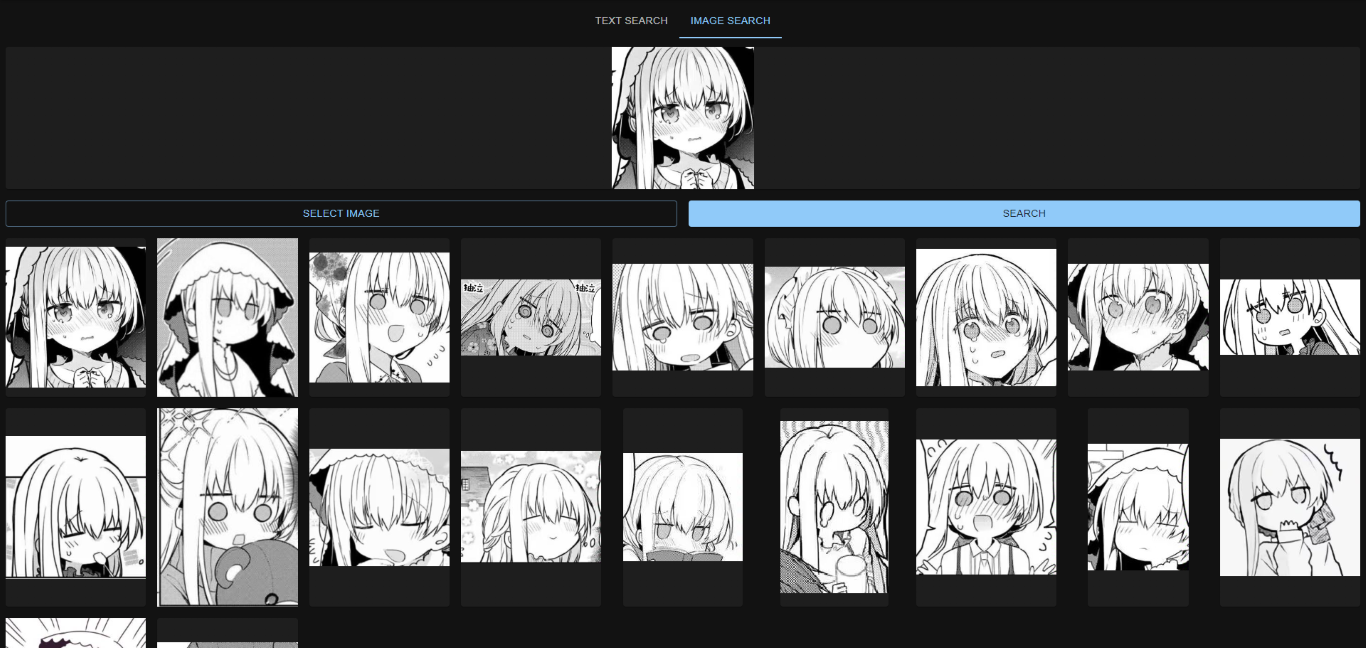

An online AI image search engine based on the CLIP model and the Qdrant vector database. It supports keyword search and similar-image search.

- Use the CLIP model to generate a 768-dimensional vector for each image as the basis for search. No manual annotation or classification is needed, and the set of categories is unlimited.

- OCR text search is supported: PaddleOCR extracts text from images, and BERT generates text vectors for search.

- Use Qdrant vector database for efficient vector search.
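To make the idea of vector search concrete, here is a minimal sketch that ranks stored vectors by cosine similarity to a query vector. It is an illustration only: the random vectors stand in for real CLIP embeddings, the `top_k` helper is hypothetical, the choice of cosine similarity is an assumption, and Qdrant uses an index rather than the brute-force scan shown here.

```python
import math
import random

DIM = 768  # vector size stated in the feature list above


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, indexed, k=3):
    """Return the names of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in indexed.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]


rng = random.Random(0)
# Stand-in vectors; real ones would come from the CLIP image encoder.
indexed = {
    f"image-{i}.png": [rng.gauss(0, 1) for _ in range(DIM)] for i in range(5)
}
# Querying with a copy of a stored vector should rank that image first.
query = list(indexed["image-2.png"])
print(top_k(query, indexed, k=1))
```

A keyword search would follow the same ranking step, with the query vector produced from text instead of from an image.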

The above screenshots may contain copyrighted images from different artists; please do not use them for other purposes.

Please deploy the Qdrant database according to the Qdrant documentation. It is recommended to use Docker for deployment.

If you don't want to deploy Qdrant yourself, you can use the online service provided by Qdrant.
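Once Qdrant is running, a quick reachability check can save debugging time later. The sketch below assumes Qdrant's REST API is exposed at `http://localhost:6333` (its usual default) without an API key; adjust the URL for your deployment. The helper names are hypothetical and not part of this project.

```python
import http.client
import urllib.request


def collections_url(base_url="http://localhost:6333"):
    """Build the URL of Qdrant's collection listing endpoint."""
    return base_url.rstrip("/") + "/collections"


def is_qdrant_reachable(base_url="http://localhost:6333", timeout=2.0):
    """Return True if the endpoint answers with HTTP 200, False otherwise."""
    try:
        with urllib.request.urlopen(collections_url(base_url), timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException):
        # Connection refused, timeout, DNS failure, or an HTTP error status
        # (for example when an API key is required).
        return False


if __name__ == "__main__":
    print("Qdrant reachable:", is_qdrant_reachable())
```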

- Clone the project directory to your own PC or server.

- It is highly recommended to install the dependencies required for this project in a Python venv virtual environment. Run the following commands:

      python -m venv .venv
      . .venv/bin/activate

- Install PyTorch. Follow the PyTorch documentation to install the torch version suitable for your system using pip.

  If you want to use CUDA acceleration for inference, be sure to install a CUDA-enabled PyTorch build in this step. After installation, you can call `torch.cuda.is_available()` to confirm whether CUDA is available.
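The CUDA check mentioned above can be run as a short script inside the activated virtual environment. This is a minimal sketch; the `describe_device` helper is hypothetical, and it also reports the case where PyTorch has not been installed yet.

```python
def describe_device():
    """Report which device PyTorch inference would be able to use."""
    try:
        import torch
    except ImportError:
        return "torch-not-installed"
    # torch.cuda.is_available() is True only with a CUDA build and a usable GPU.
    return "cuda" if torch.cuda.is_available() else "cpu"


print(describe_device())
```

If this prints `cpu` on a machine with an NVIDIA GPU, the installed PyTorch build is probably a CPU-only one.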

- Install the other dependencies required for this project:

      pip install -r requirements.txt

- Modify the project configuration files inside `config/`. You can edit `default.env` directly, but it is recommended to create a new file named `local.env` and override the settings from `default.env` there.
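The override behaviour can be pictured as two layers, where values from `local.env` replace values with the same key from `default.env`. The sketch below is not the project's loader: it assumes plain `KEY=VALUE` lines, and the keys and values in the example are illustrative placeholders.

```python
from pathlib import Path


def parse_env(text):
    """Parse KEY=VALUE lines, skipping blanks and '#' comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def merge_env_texts(*texts):
    """Merge env file contents in order; later texts override earlier ones."""
    merged = {}
    for text in texts:
        merged.update(parse_env(text))
    return merged


def load_config(config_dir="config"):
    """Read default.env, then local.env if present, from config_dir."""
    texts = []
    for name in ("default.env", "local.env"):
        path = Path(config_dir) / name
        if path.is_file():
            texts.append(path.read_text(encoding="utf-8"))
    return merge_env_texts(*texts)


# Illustrative contents only; the real files may use different keys.
default_text = "QDRANT_COLL=default_collection\nSTATIC_FILE_PATH=./static\n"
local_text = "# local overrides\nQDRANT_COLL=my_collection\n"
print(merge_env_texts(default_text, local_text))
```

Keeping local changes in `local.env` means `default.env` can be updated from upstream without merge conflicts.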

- Initialize the Qdrant database by running the following command:

      python main.py --init-database

  This operation creates a collection in the Qdrant database, named by the value of `config.QDRANT_COLL`, to store image vectors.
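For readers curious about what creating a collection involves, the sketch below builds (but does not send) a request against Qdrant's REST API for a collection holding 768-dimensional vectors. It is not the code behind `--init-database`: the collection name, base URL and `Cosine` distance are assumptions, and the real command may configure additional vectors, for example for OCR text.

```python
import json
import urllib.request


def build_create_collection_request(
    base_url, collection_name, vector_size=768, distance="Cosine"
):
    """Build a PUT /collections/{name} request without sending it."""
    body = {"vectors": {"size": vector_size, "distance": distance}}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/collections/{collection_name}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


# Placeholder values; use your own Qdrant URL and collection name.
req = build_create_collection_request("http://localhost:6333", "example_collection")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```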

- (Optional) For development and small-scale deployments, you can use the built-in static file indexing and serving functions of this application. Use the following command to index your local image directory:

      python main.py --local-index <path-to-your-image-directory>

  This operation copies all image files in `<path-to-your-image-directory>` to the `config.STATIC_FILE_PATH` directory (default is `./static`) and writes the image information to the Qdrant database.

  Then run the following command to generate thumbnails for all images in the static directory:

      python main.py --local-create-thumbnail

  If you want to deploy on a large scale, you can use an OSS storage service such as `MinIO` to store the image files and then write the image information to the Qdrant database.
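The copy half of the local indexing step can be sketched as follows. This is an illustration under assumptions, not the project's implementation: the list of image suffixes is a guess, the `copy_images` helper is hypothetical, the real command may rename files, and writing vectors to Qdrant is left out entirely.

```python
import shutil
import tempfile
from pathlib import Path

# Assumed set of image suffixes; the project may accept a different set.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".webp", ".gif"}


def copy_images(source_dir, static_dir="./static"):
    """Copy image files from source_dir into static_dir; return copied names."""
    source, target = Path(source_dir), Path(static_dir)
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for path in sorted(source.iterdir()):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            shutil.copy2(path, target / path.name)
            copied.append(path.name)
    return copied


# Small demonstration in a temporary directory, so nothing real is touched.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp, "src")
    src.mkdir()
    (src / "a.png").write_bytes(b"")
    (src / "notes.txt").write_text("not an image")
    demo_copied = copy_images(src, Path(tmp, "static"))
print(demo_copied)
```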

- Run this application:

      python main.py

  You can use `--host` to specify the IP address to bind to (default is 0.0.0.0) and `--port` to specify the port to bind to (default is 8000).
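As an illustration of how the two options and their defaults fit together, here is a minimal `argparse` sketch. It mirrors only the documented `--host` and `--port` flags; how `main.py` actually parses its arguments is not shown in this document, so treat the parser below as hypothetical.

```python
import argparse


def build_parser():
    """Parser with the two documented options and their documented defaults."""
    parser = argparse.ArgumentParser(description="Example server options")
    parser.add_argument("--host", default="0.0.0.0", help="IP address to bind to")
    parser.add_argument("--port", type=int, default=8000, help="port to bind to")
    return parser


# With no arguments the documented defaults apply.
args = build_parser().parse_args([])
print(args.host, args.port)
```

Binding to 0.0.0.0 makes the server listen on all network interfaces; running `python main.py --host 127.0.0.1 --port 9000` would restrict it to local connections on port 9000.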

- (Optional) Deploy the front-end application: NekoImageGallery.App is a simple web front-end application for this project. If you want to deploy it, please refer to its deployment documentation.

Warning

Docker Compose support is in an alpha state and may not work for everyone (especially with CUDA acceleration). Please make sure you are familiar with the Docker documentation before using this deployment method. If you encounter any problems during deployment, please submit an issue.

If you want to use CUDA acceleration, you need to install nvidia-container-runtime on your system. Please refer to the official documentation for installation.


- Modify the docker-compose.yml file as needed

- Run the following command to start the server:

      # start in foreground
      docker compose up
      # start in background (detached mode)
      docker compose up -d

There are many ways to contribute to the project: logging bugs, submitting pull requests, reporting issues, and creating suggestions.

Even if you have push access to the repository, you should create personal feature branches when you need them. This keeps the main repository clean and your workflow cruft out of sight.

We're also interested in your feedback on the future of this project. You can submit a suggestion or feature request through the issue tracker. To make this process more effective, we're asking that these include more information to help define them more clearly.

Copyright 2023 EdgeNeko

Licensed under the GPLv3 license.