This work proposes a speech emotion recognition model. Four different features are extracted from the RAVDESS audio files, each resulting matrix is averaged along the time axis, and the mean vectors are stacked into a single one-dimensional array. This array is then fed as input to a 1-D CNN model.
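The pooling and stacking step described above can be sketched with NumPy alone. This is a minimal illustration, not the code from `train_and_test.py`: the function name `stack_time_averaged`, the random placeholder matrices, and their row counts (40, 12, 128, 7) are all assumptions made for the example. The actual four features and their sizes are defined in the repo.

```python
import numpy as np


def stack_time_averaged(feature_matrices):
    """Average each (n_coeffs, n_frames) matrix over time, then concatenate.

    Hypothetical helper: it only illustrates the pooling idea.
    """
    pooled = [np.asarray(m).mean(axis=1) for m in feature_matrices]
    return np.concatenate(pooled)


# Placeholder matrices standing in for four extracted features.
# Row counts are arbitrary assumptions, not the repo's real sizes.
rng = np.random.default_rng(0)
n_frames = 120
dummy_features = [rng.standard_normal((n, n_frames)) for n in (40, 12, 128, 7)]

vector = stack_time_averaged(dummy_features)

# A 1-D CNN typically expects (batch, length, channels),
# so a single sample gets a batch axis and a channel axis.
cnn_input = vector[np.newaxis, :, np.newaxis]

print(vector.shape, cnn_input.shape)
```

Averaging over time makes the vector length independent of the clip duration, so every audio file yields an input of the same size.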

The project is finished, but open to future improvements and updates.

You can run this code entirely on Colab, without installing any libraries through pip.

All the libraries used are easy to install, but I recommend creating a conda environment from the .yml file that you can find in the repo. It contains everything you need.

- Create the conda environment

conda env create -f SEC_environment.yml

- Activate the conda environment

conda activate SEC_environment

- For training and testing, just run:

python train_and_test.py

The first line of the .yml file sets the name of the new environment, which is already set to SEC_environment.

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

If you have a suggestion that would make this better, please fork the repo and create a pull request. You can also simply open an issue with the tag "enhancement". Don't forget to give the project a star! Thanks again!

- Fork the Project
- Create your Feature Branch (`git checkout -b feature/AmazingFeature`)
- Commit your Changes (`git commit -m 'Add some AmazingFeature'`)
- Push to the Branch (`git push origin feature/AmazingFeature`)
- Open a Pull Request

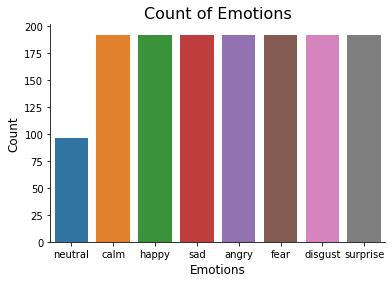

The dataset used for this work is the Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS). This dataset contains audio and visual recordings of 12 male and 12 female actors pronouncing English sentences with eight different emotional expressions. For this task, we utilize only speech samples from the database with the following eight different emotion classes: sad, happy, angry, calm, fearful, surprised, neutral and disgust.

In total, the audio files contain 1440 utterances.
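RAVDESS file names encode their labels as seven hyphen-separated numeric fields, with the emotion in the third field and the actor in the seventh (odd actor numbers are male, even are female). The sketch below shows one way to read the emotion class from a file name. The helper `parse_ravdess_name` is hypothetical and is not taken from this repo, which may load labels differently.

```python
from pathlib import Path

# Emotion codes as documented by the RAVDESS file-naming convention.
EMOTIONS = {
    "01": "neutral",
    "02": "calm",
    "03": "happy",
    "04": "sad",
    "05": "angry",
    "06": "fearful",
    "07": "disgust",
    "08": "surprised",
}


def parse_ravdess_name(filename):
    """Return emotion, actor id, and gender from a RAVDESS file name.

    Hypothetical helper for illustration only.
    """
    parts = Path(filename).stem.split("-")
    if len(parts) != 7:
        raise ValueError(f"unexpected RAVDESS file name: {filename}")
    actor = int(parts[6])
    return {
        "emotion": EMOTIONS[parts[2]],
        "actor": actor,
        "gender": "female" if actor % 2 == 0 else "male",
    }


example = parse_ravdess_name("03-01-06-01-02-01-12.wav")
print(example)
```

The speech subset has 60 recordings for each of the 24 actors, which gives the 1440 utterances mentioned above.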

The results of this work were compared with those of other papers that use both deep and non-deep approaches. All of these works use the same dataset, RAVDESS.

| Paper | Accuracy |
|---|---|
| Shegokar and Sircar | 60.10% |
| Zeng et al. | 65.97% |
| Livingstone and Russo | 67.00% |
| Dias Issa et al. | 71.61% |
| This work | 80.11% |

Andrea Lombardi - @LinkedIn