```shell
conda create -n marl python==3.6.1
conda activate marl
pip install torch==1.5.1+cu101 torchvision==0.6.1+cu101 -f https://download.pytorch.org/whl/torch_stable.html
```
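After `conda activate marl`, a quick sanity check can confirm that the interpreter and the PyTorch build match the versions pinned above. This is a minimal sketch: the file name `check_env.py` and the function `check_env` are hypothetical and not part of the repository.

```python
# check_env.py: hypothetical helper, not part of the repository.
import importlib
import sys


def check_env(expected_python=(3, 6), expected_torch="1.5.1"):
    """Report whether the active interpreter matches the pinned versions."""
    report = {
        "python": tuple(sys.version_info[:3]),
        "python_ok": tuple(sys.version_info[:2]) == tuple(expected_python),
        "torch": None,
        "torch_ok": False,
        "cuda": False,
    }
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        return report  # PyTorch is not installed in this environment
    report["torch"] = torch.__version__
    # Wheels from the cu101 index typically report versions like "1.5.1+cu101",
    # so only the part before "+" is compared.
    report["torch_ok"] = torch.__version__.split("+")[0] == expected_torch
    report["cuda"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for key, value in check_env().items():
        print("{}: {}".format(key, value))
```

Run it with `python check_env.py` inside the `marl` environment. If `cuda` is `False` on a GPU machine, the installed driver may not support CUDA 10.1, or a CPU-only wheel may have been picked up.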

- To install the Google Research Football environment, please refer to the official Google Research Football repository.

- We use training on Cooperative Navigation as an example:

```shell
cd CooperativeNavigation
python train.py
```

- We use training on Google Football as an example:

```shell
# 3vs1 scenario (run from the repository root)
cd GoogleFootball/3vs1
python train.py

# 2vs6 scenario (run from the repository root)
cd GoogleFootball/2vs6
python train.py
```
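To run the three example trainings one after another without changing directories by hand, a small launcher can call each `train.py` from its own scenario directory. This is a hypothetical sketch (`run_all.py`, `build_commands`, and `run_all` are not part of the repository); it assumes it is run from the repository root, that the directory names are exactly those used in the commands above, and that `train.py` needs no arguments, as shown.

```python
# run_all.py: hypothetical launcher, not part of the repository.
import os
import subprocess
import sys

# Scenario directories taken from the commands above (assumed layout).
SCENARIOS = [
    "CooperativeNavigation",
    os.path.join("GoogleFootball", "3vs1"),
    os.path.join("GoogleFootball", "2vs6"),
]


def build_commands(root, scenarios=SCENARIOS):
    """Return (working_directory, command) pairs, one per scenario."""
    return [(os.path.join(root, s), [sys.executable, "train.py"]) for s in scenarios]


def run_all(root, dry_run=True):
    """Run train.py in each scenario directory sequentially (or just print)."""
    commands = build_commands(root)
    for cwd, cmd in commands:
        print("[{}] {}".format(cwd, " ".join(cmd)))
        if not dry_run:
            # check=True stops the loop if a training run exits with an error.
            subprocess.run(cmd, cwd=cwd, check=True)
    return commands


if __name__ == "__main__":
    # Only prints the commands unless "--run" is passed.
    run_all(os.getcwd(), dry_run="--run" not in sys.argv)
```

Passing the working directory to `subprocess.run` is equivalent to `cd <scenario> && python train.py`, so each run starts from the right directory regardless of the previous one.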

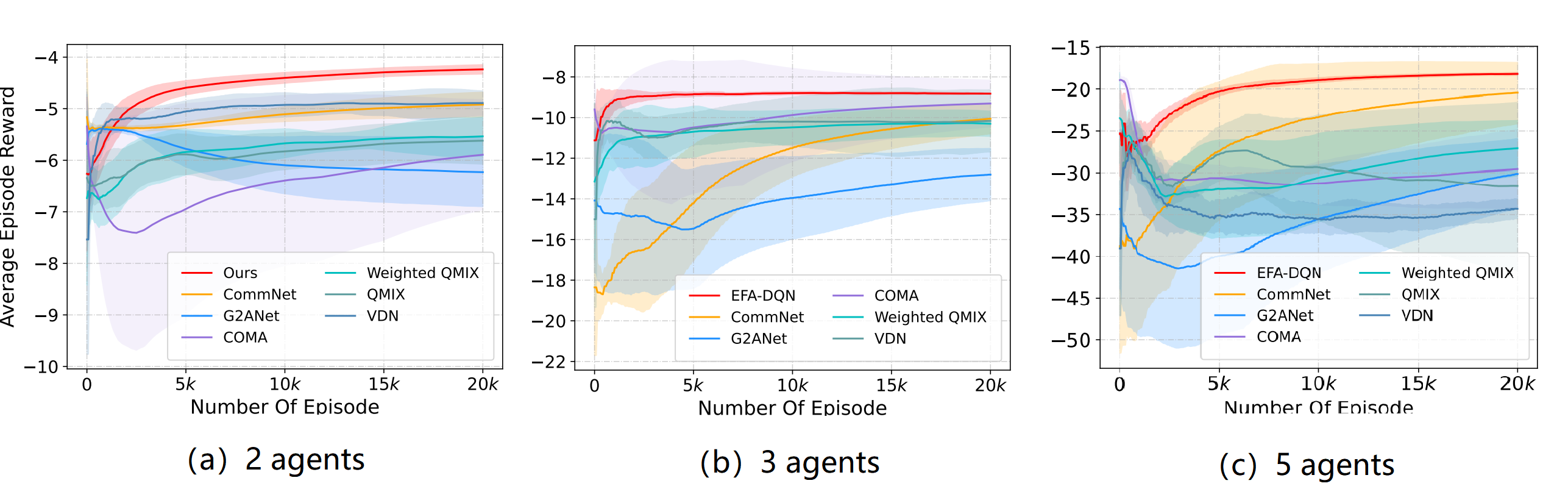

- Section 4.1: The comparison with VDN, QMIX, Weighted QMIX, COMA, CommNet and G2ANet on Cooperative Navigation.

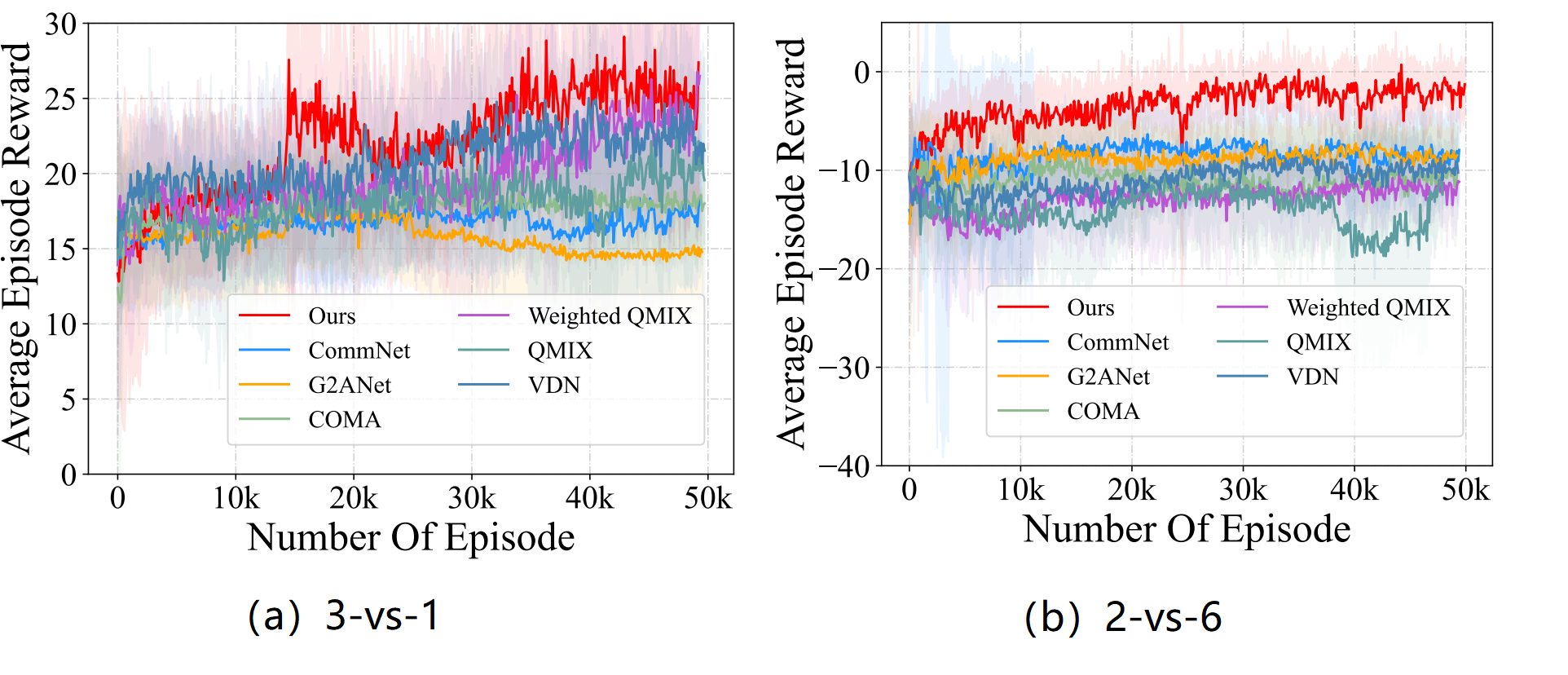

- Section 4.2: The comparison with VDN, QMIX, Weighted QMIX, COMA, CommNet and G2ANet on Google Football.

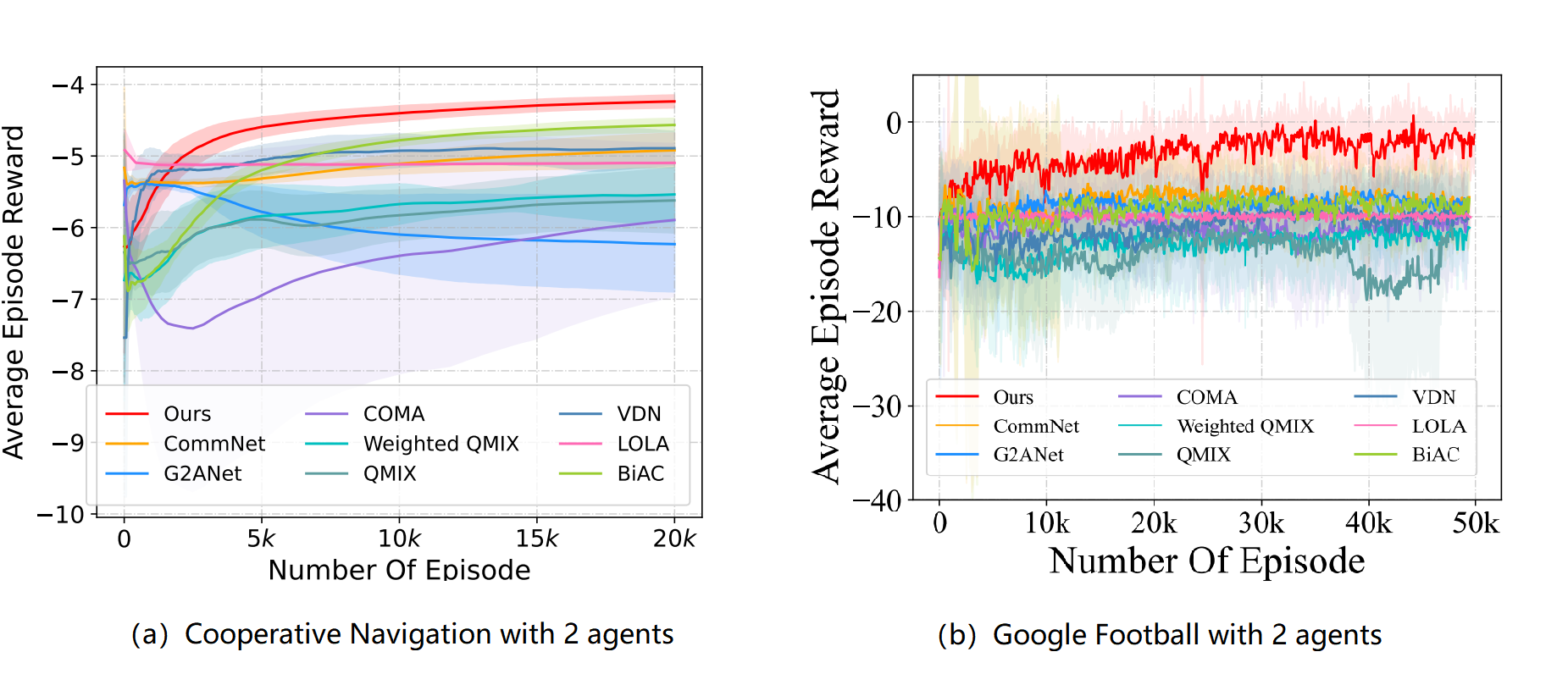

- Section 4.3: The comparison with LOLA (2 agents).

- Section 4.4: The comparison with BiAC (2 agents).

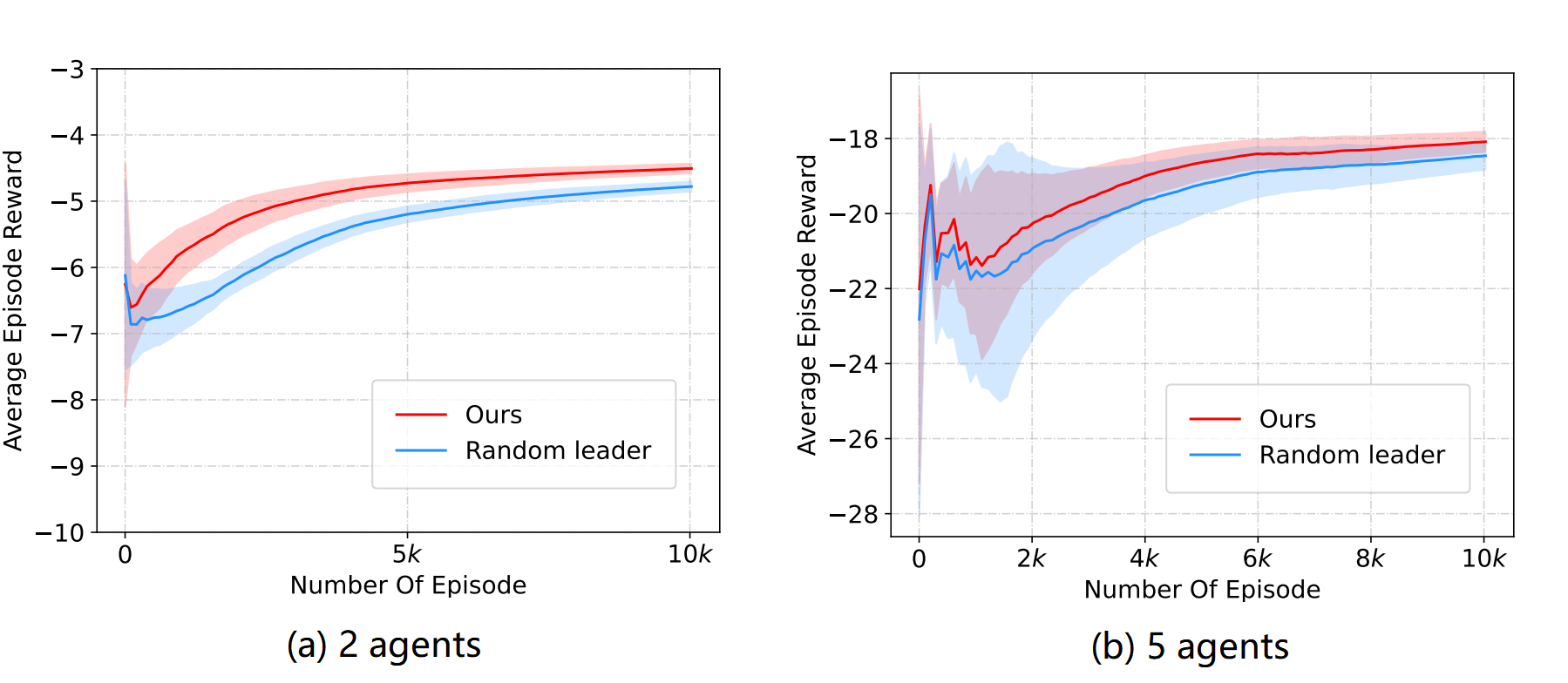

- Section 4.5: The importance of optimally electing the leader

Tips:

- Our reproduction of LOLA is available in this repository: AC_LOLA

- LOLA has an elegant theoretical guarantee for 2 agents in general-sum games, but no such guarantee for more than 2 agents. Because of this limitation, we only evaluate LOLA with 2 agents.

- In future work, we could investigate the gradient effects among more than 2 agents, for example by averaging the gradients from the other agents or by modeling the gradient effects between each pair of agents.

- Results with 2 agents on Cooperative Navigation and Google Football.

- Empirical results with 2 agents and 5 agents on Cooperative Navigation.
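For readers unfamiliar with LOLA, the toy sketch below shows the first-order, two-agent LOLA update of Foerster et al. (2018): each agent follows its own value gradient plus a shaping term $\eta\,(\nabla_{\theta_2} V_1)^\top \nabla_{\theta_1}\nabla_{\theta_2} V_2$ that accounts for how its parameters influence the opponent's anticipated gradient step. It is not the AC_LOLA code; the bilinear game, the step sizes `alpha` and `eta`, and all function names are illustrative assumptions chosen so the derivatives can be written by hand.

```python
import math

# Hypothetical two-player zero-sum bilinear game (illustration only):
#   V1(t1, t2) = t1 * t2   (agent 1 maximises V1)
#   V2(t1, t2) = -t1 * t2  (agent 2 maximises V2)


def derivatives(t1, t2):
    """Analytic first and mixed second derivatives of V1 and V2."""
    return {
        "d1V1": t2,     # dV1/dt1
        "d2V1": t1,     # dV1/dt2
        "d1V2": -t2,    # dV2/dt1
        "d2V2": -t1,    # dV2/dt2
        "d12V1": 1.0,   # d2V1/(dt1 dt2)
        "d12V2": -1.0,  # d2V2/(dt1 dt2)
    }


def naive_step(t1, t2, alpha):
    """Simultaneous gradient ascent: each agent ignores the other's learning."""
    d = derivatives(t1, t2)
    return t1 + alpha * d["d1V1"], t2 + alpha * d["d2V2"]


def lola_step(t1, t2, alpha, eta):
    """First-order LOLA: own gradient plus the opponent-shaping term,
    where eta is the assumed size of the opponent's next gradient step."""
    d = derivatives(t1, t2)
    g1 = d["d1V1"] + eta * d["d2V1"] * d["d12V2"]
    g2 = d["d2V2"] + eta * d["d1V2"] * d["d12V1"]
    return t1 + alpha * g1, t2 + alpha * g2


def final_norm(step, steps=100, start=(1.0, 1.0)):
    """Distance from the equilibrium (0, 0) after repeated updates."""
    t1, t2 = start
    for _ in range(steps):
        t1, t2 = step(t1, t2)
    return math.hypot(t1, t2)


if __name__ == "__main__":
    print("initial:", math.hypot(1.0, 1.0))
    print("naive:  ", final_norm(lambda a, b: naive_step(a, b, alpha=0.1)))
    print("LOLA:   ", final_norm(lambda a, b: lola_step(a, b, alpha=0.1, eta=1.0)))
```

In this game, plain simultaneous gradient ascent scales the distance to the equilibrium by $\sqrt{1+\alpha^2}$ per step and spirals outward, while the LOLA update scales it by $\sqrt{(1-\alpha\eta)^2+\alpha^2}$, which is below 1 for these settings. With more than 2 agents, each agent would receive one such shaping term per opponent, and how to combine them is the open question raised in the tips above.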