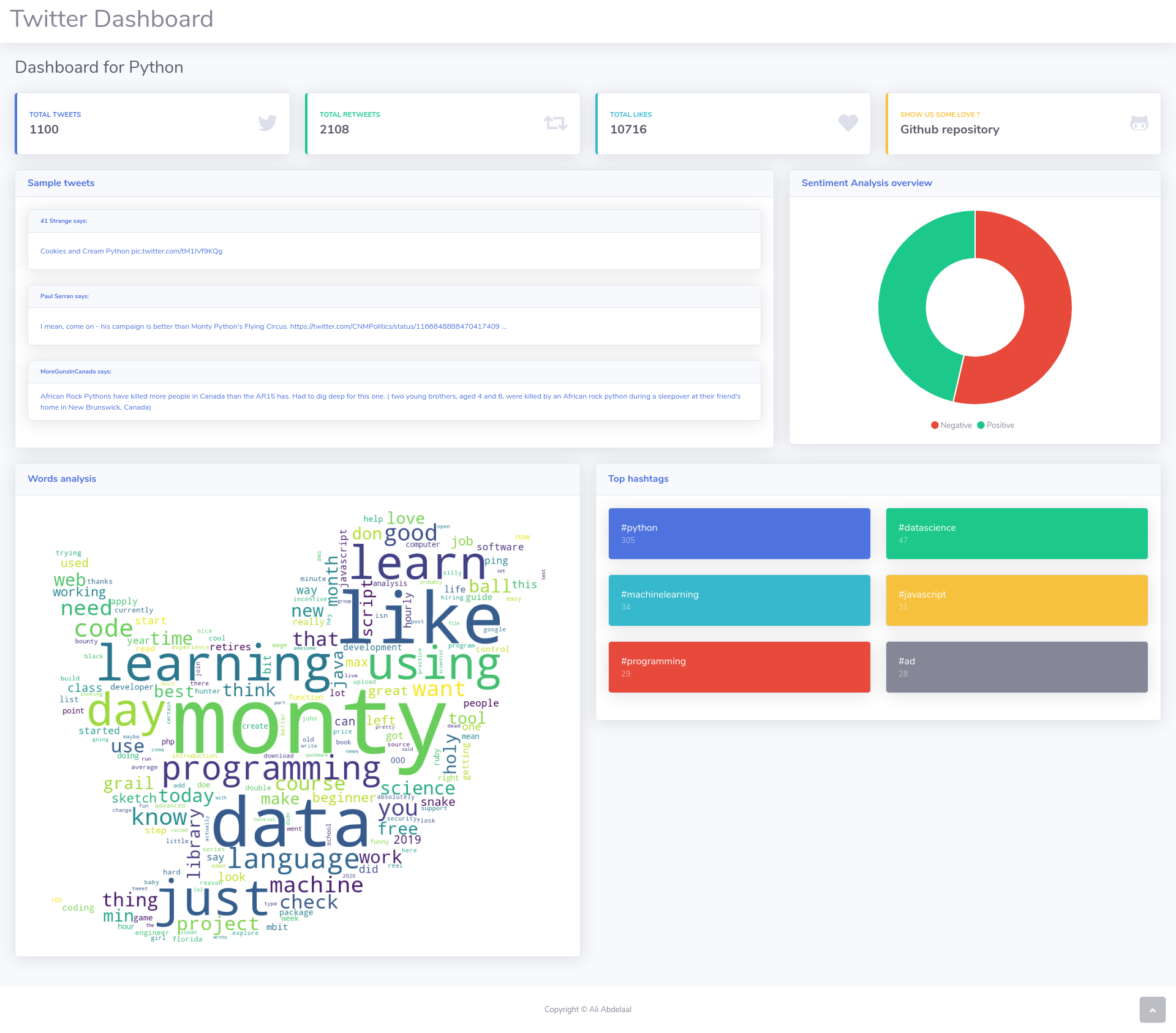

A dashboard for any custom query with simple analysis tools

The dashboard runs on localhost. It is still a little slow, but this is an early version, and I will most probably be working on its performance.

To use the dashboard, follow these steps:

- Create a virtualenv and activate it:

`$ python -m venv venv`

`$ source venv/bin/activate`

- Install all the requirements:

`$ pip install -r requirements.txt`

Now you are ready to go!

- Start the app from `app.py`:

`$ python app.py`

You will find a message ending with the URL, like so:

`* Running on http://127.0.0.1:5000/ (Press CTRL+C to quit)`

Follow the link and you will be directed to the landing page.

You can type your query and press analyse to get the dashboard. It is a little slow, but it works :D

- The maximum number of tweets is set to 1000, going back only one month. You can override these options in the `twitterdash/scraper.py` file.

- Changing the `poolsize` in `twitterdash/scraper.py` will affect the speed of scraping but also the performance of your machine, so don't push it too far.

- The page designs were made possible by Bootstrap Studio.

- For Twitter scraping I used the twitterscraper package, which is pretty useful!

- The word cloud is pretty easy using the word_cloud package.

- Sentiment analysis here was done using the TextBlob library.
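To make the scraping limits mentioned above a bit more concrete, here is a minimal sketch of how the 1000-tweet cap, the one-month window and the pool size could be bundled together. This is not the actual content of `twitterdash/scraper.py`: the names `MAX_TWEETS`, `DAYS_BACK`, `POOLSIZE` and `build_scrape_kwargs` are made up for illustration, a "month" is assumed to be 30 days, and the pool size of 20 is just a placeholder value. The keyword names are chosen to match what twitterscraper's `query_tweets` is expected to accept, so check them against the version you have installed.

```python
import datetime as dt

# Hypothetical settings; the real names and values in
# twitterdash/scraper.py may differ.
MAX_TWEETS = 1000   # upper bound on the number of tweets to fetch
DAYS_BACK = 30      # assumption: "one month back" means 30 days
POOLSIZE = 20       # placeholder; more workers is faster but heavier


def build_scrape_kwargs(today=None, max_tweets=MAX_TWEETS,
                        days_back=DAYS_BACK, poolsize=POOLSIZE):
    """Return keyword arguments describing the scraping window and limits."""
    today = today or dt.date.today()
    return {
        "limit": max_tweets,
        "begindate": today - dt.timedelta(days=days_back),
        "enddate": today,
        "poolsize": poolsize,
    }


if __name__ == "__main__":
    kwargs = build_scrape_kwargs()
    print(kwargs)
    # With twitterscraper installed, these could be passed on like this
    # (assumed usage, not taken from this project):
    #   from twitterscraper import query_tweets
    #   tweets = query_tweets("python", **kwargs)
```

Raising `max_tweets` or `days_back` makes the dashboard slower to build, and a larger `poolsize` puts more load on your machine, so it is probably best to change one value at a time.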