
🔍 Clinical case search by similarity, specialized in COVID-19

Note: The public backend does not return the best possible results because of the limits of Heroku's free tier. More details below.

We are both big fans of similarity projects, and when we saw this challenge we couldn't miss the opportunity to work on it.

We could also imagine how unstructured clinical case data is, so we wanted to contribute something that improves the daily work of professionals in this field.

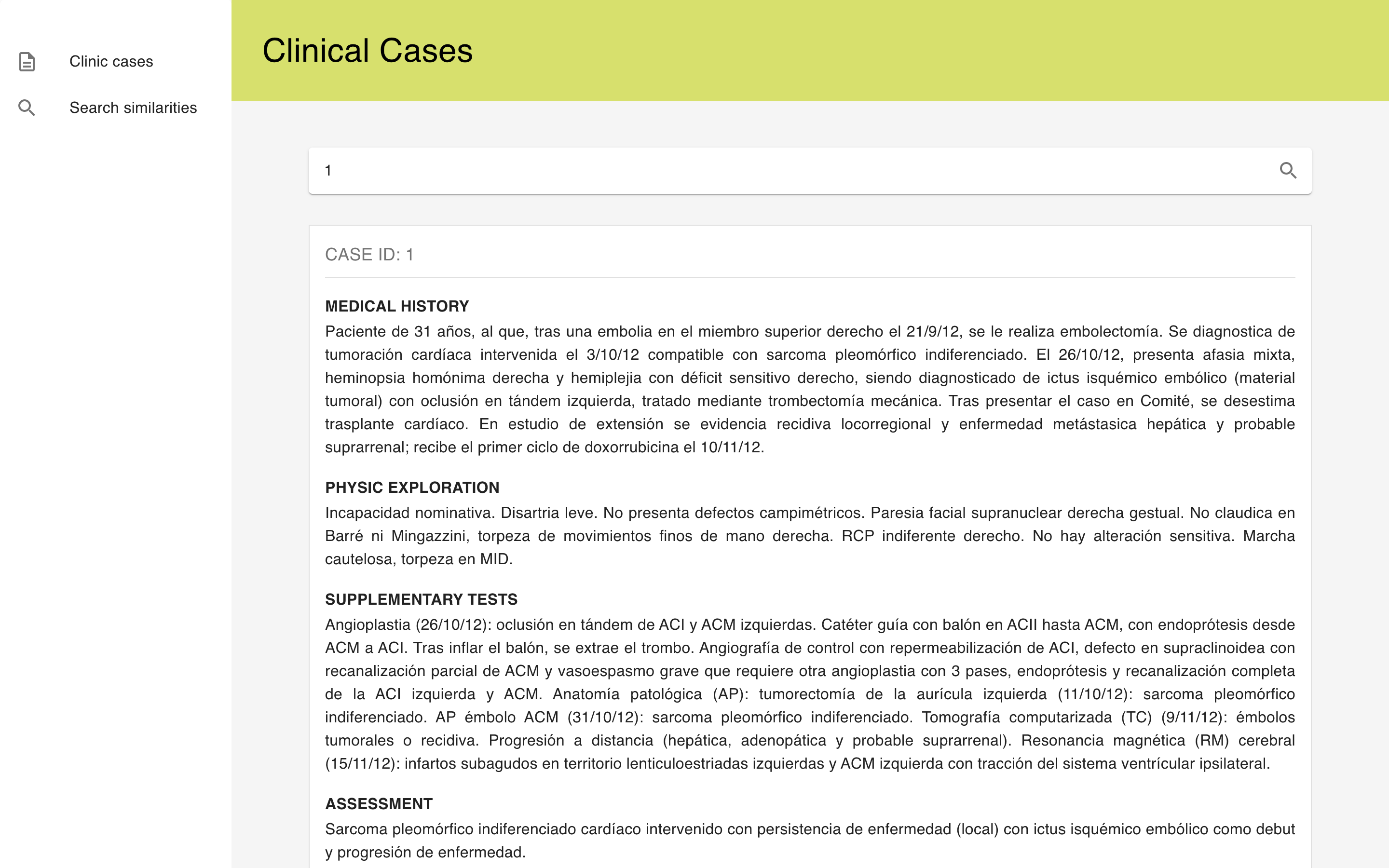

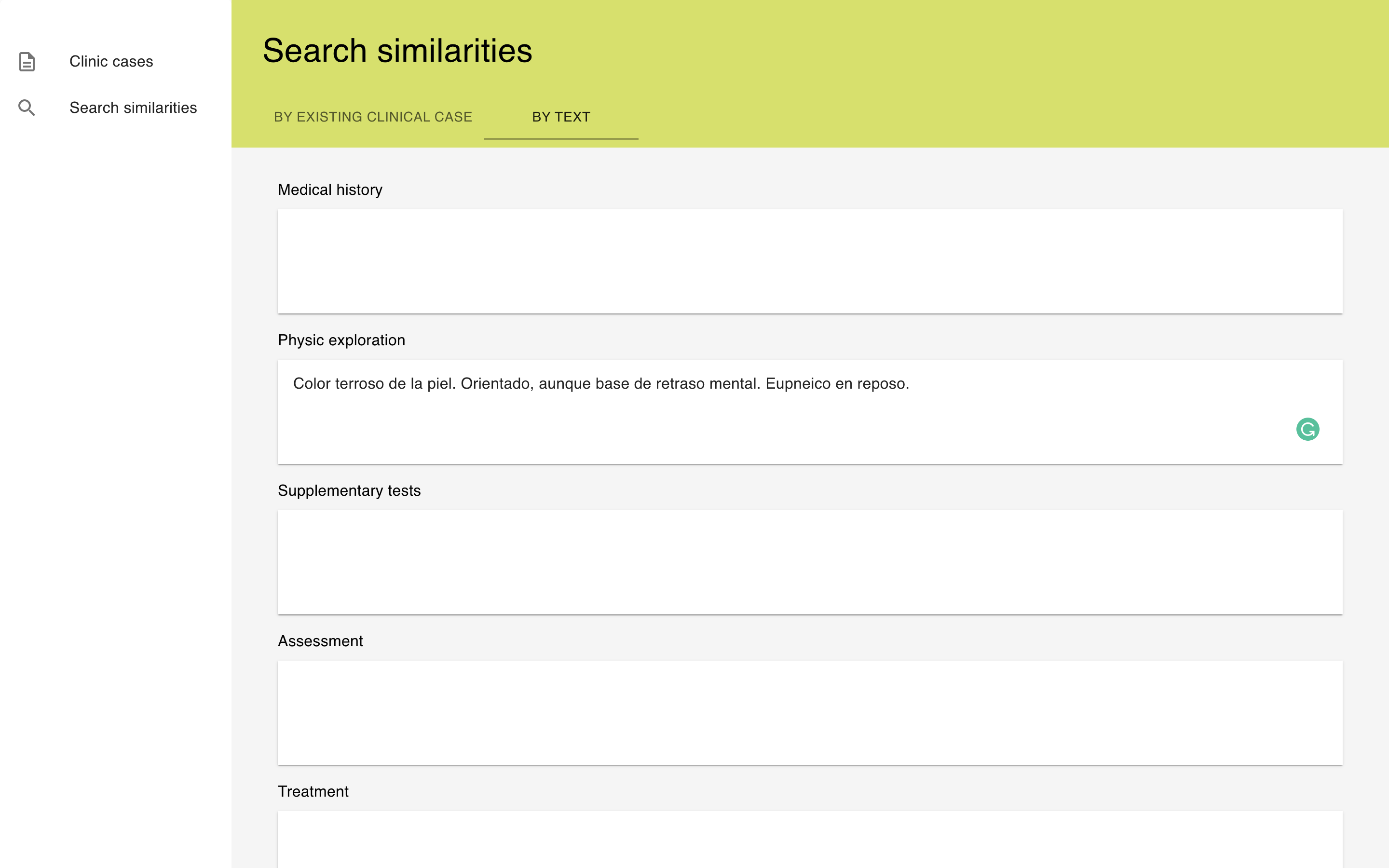

Casescan is a small web application for searching a database of clinical cases based on semantic text similarity.

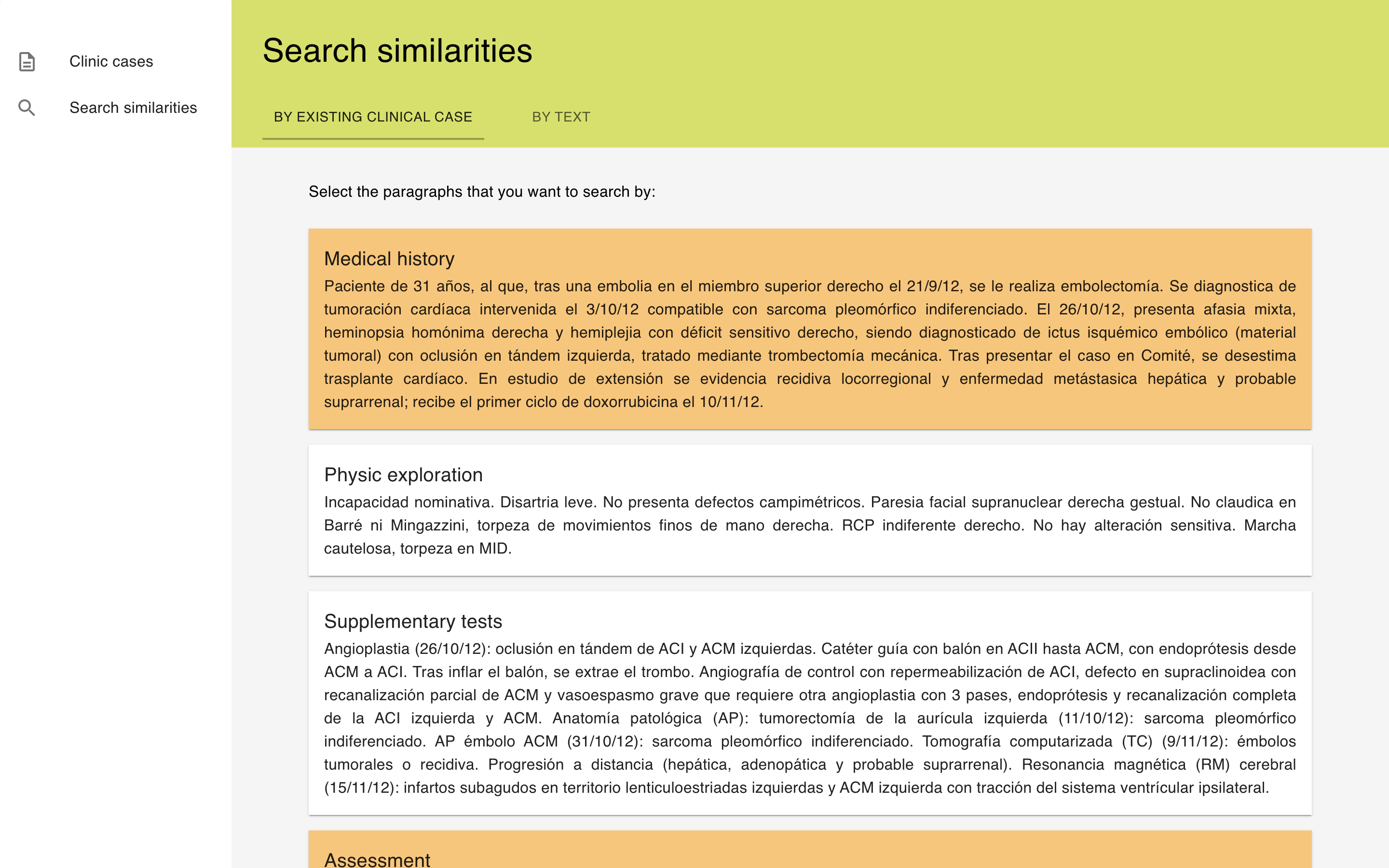

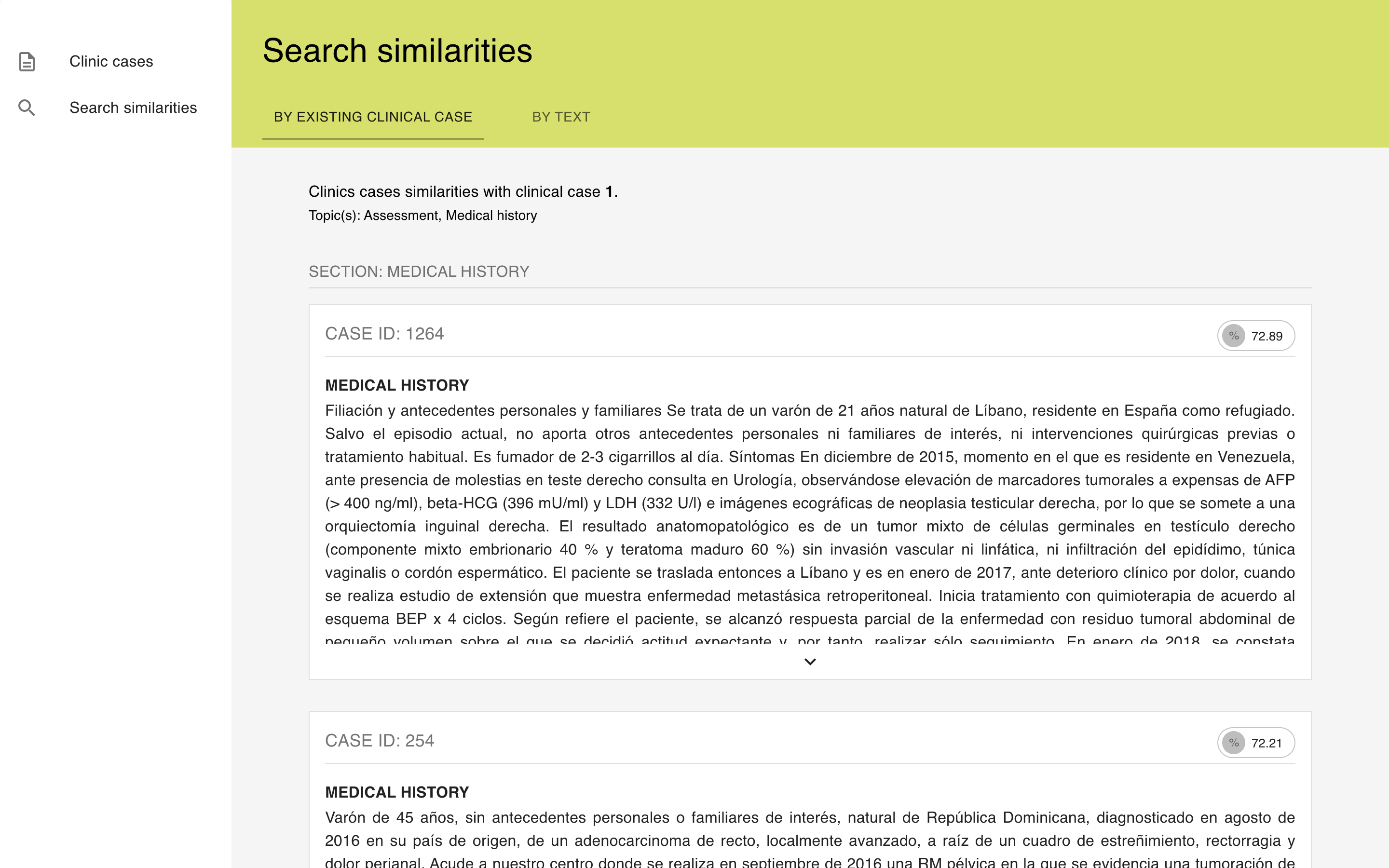

Searches can be performed in two different ways:

- Given an existing clinical case from the database and a selection of its sections (medical history, assessment, etc.).

- Given a free-text input split by section.

The output is a list of the clinical cases most similar to the query, each with its similarity percentage.

On the frontend, Casescan is a React web application. It is built as a small dashboard designed for professionals to use on a daily basis, and its styles follow the Material UI guidelines.

On the backend, a Python API built with Flask and OpenAPI (wired together by the Connexion library) serves everything the frontend needs, from retrieving clinical cases to the different types of search.

The procedure we followed to extract similarities from the database is described in the Similarity analysis procedure section.

We both had some NLP experience from previous personal and university projects. Even so, it was a challenge to tackle the topic again and work out how to extract the most valuable information from the clinical case texts.

Given that we couldn't work on this project around the clock during the hackathon, we are very proud of the result and of how polished and functional the web application is.

Similarity search is a well-known concept in the industry, but there is still a lot left to discover.

We only tried one model to extract the text embeddings, so other models may work better for this use case. Researching this in depth will be key to improving the solution.

First, looking at the initial dataset of 2,500 clinical cases, we saw that a large portion of them contained headers within the clinical narrative (such as Assessment, Evolution, etc.). Given the length of each document, we decided to split every clinical case into sections, which gives shorter text fragments with more concise information.

We made a first pass over the different headers found in the dataset and decided to group them into these 6 sections:

- `medical_history`
- `physic_exploration`
- `supplementary_tests`
- `assessment`
- `treatment`
- `evolution`
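A minimal sketch of the splitting step, assuming each header sits on its own line (optionally followed by a colon) and that every recognised header maps to one of the six sections. The `HEADER_TO_SECTION` mapping and its English header names are hypothetical examples; the actual header variants grouped by `extract_data.py` are not listed in this README.

```python
import re
from typing import Dict, List, Optional

# The six target sections used by Casescan.
SECTIONS = (
    "medical_history",
    "physic_exploration",
    "supplementary_tests",
    "assessment",
    "treatment",
    "evolution",
)

# Hypothetical mapping from a raw (lower-cased) header to its target section.
# The real header variants live in extract_data.py and are not shown here.
HEADER_TO_SECTION: Dict[str, str] = {
    "medical history": "medical_history",
    "physical examination": "physic_exploration",
    "supplementary tests": "supplementary_tests",
    "assessment": "assessment",
    "treatment": "treatment",
    "evolution": "evolution",
}

# Assumption: a header is a line of letters/spaces, optionally ending in ':'.
HEADER_RE = re.compile(r"^\s*([A-Za-z ]+?)\s*:?\s*$")


def split_sections(text: str) -> Dict[str, str]:
    """Split a clinical case into {section: text} using known headers only."""
    sections: Dict[str, List[str]] = {}
    current: Optional[str] = None
    for line in text.splitlines():
        match = HEADER_RE.match(line)
        key = match.group(1).lower() if match else None
        if key in HEADER_TO_SECTION:
            # A recognised header starts (or resumes) a section.
            current = HEADER_TO_SECTION[key]
            sections.setdefault(current, [])
        elif current is not None and line.strip():
            # Any other non-empty line belongs to the current section;
            # text before the first recognised header is ignored.
            sections[current].append(line.strip())
    return {name: " ".join(lines) for name, lines in sections.items() if lines}


case = """Medical history:
Smoker, 54 years old.
Assessment
Fever and cough
Suspected viral pneumonia.
Treatment:
Oxygen therapy."""
print(split_sections(case))
```

Note that "Fever and cough" looks like a header to the regex but is kept as content because it is not in the mapping; restricting splits to a known header list is what keeps free text from being cut into spurious sections.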

Then, using the `extract_data.py` script, we generated a pickle file called `db.pkl` with enough structured data to start working with. From the initial 2,500 clinical cases, we ended up with the following:

Clinical cases extracted: 1306 of 2,500 (52.24%)

| Section | Cases with section | Share of extracted cases |
| --- | --- | --- |
| medical_history | 1294 | 99.08% |
| physic_exploration | 1282 | 98.16% |
| supplementary_tests | 1285 | 98.39% |
| assessment | 1274 | 97.55% |
| treatment | 1255 | 96.09% |
| evolution | 1289 | 98.7% |
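The two kinds of percentages above use different denominators: the extracted-cases figure is relative to the 2,500 original cases, while each section figure is relative to the 1,306 extracted cases. This small check reproduces them from the reported counts:

```python
TOTAL_CASES = 2500
EXTRACTED_CASES = 1306

# Extracted cases that contain each section (counts reported above).
SECTION_COUNTS = {
    "medical_history": 1294,
    "physic_exploration": 1282,
    "supplementary_tests": 1285,
    "assessment": 1274,
    "treatment": 1255,
    "evolution": 1289,
}


def pct(part: int, whole: int) -> float:
    """Percentage of `whole` represented by `part`, rounded to two decimals."""
    return round(100 * part / whole, 2)


extracted_pct = pct(EXTRACTED_CASES, TOTAL_CASES)
section_pct = {name: pct(n, EXTRACTED_CASES) for name, n in SECTION_COUNTS.items()}

print(f"Clinical cases extracted: [{EXTRACTED_CASES} - {extracted_pct}%]")
for name, value in section_pct.items():
    print(f"> {name}: [{SECTION_COUNTS[name]} - {value}%]")
```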

As the figures above show, nearly all of the extracted clinical cases contain structured data for each section.

Once the `db.pkl` file contained the clinical cases' data, the next step was to extract the meaning of these texts. There are several possible approaches; in this scenario we decided to go with a transformer.

We decided not to train our own model, mainly because of time constraints, since this was a hackathon project. Instead, we researched which pre-trained model would work best.

We discovered the sentence-transformers library, which specializes in computing embeddings for sentences and paragraphs: exactly what we wanted. After reviewing the pre-trained models listed in its documentation, we chose an Average Word Embeddings model, specifically the 840B version of GloVe, which we considered the state of the art among them.
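An Average Word Embeddings model represents a text as the mean of the vectors of its words. The toy sketch below illustrates that pooling step with made-up 3-dimensional vectors; `WORD_VECTORS` and the regex tokenizer are illustrative assumptions, not the GloVe or Levy vocabularies, and the real models apply the same idea to 300-dimensional vectors with their own tokenization.

```python
import re
from typing import Dict, List

# Toy 3-dimensional word vectors (illustrative only; the real GloVe and
# Levy dependency vectors have 300 dimensions and large vocabularies).
WORD_VECTORS: Dict[str, List[float]] = {
    "fever": [1.0, 0.0, 0.5],
    "cough": [0.5, 0.5, 0.0],
    "fracture": [0.0, 1.0, 0.0],
}
DIMENSIONS = 3


def average_embedding(text: str) -> List[float]:
    """Mean of the word vectors of the in-vocabulary tokens of `text`."""
    tokens = [t for t in re.findall(r"[a-z]+", text.lower()) if t in WORD_VECTORS]
    if not tokens:
        # No known words: fall back to a zero vector.
        return [0.0] * DIMENSIONS
    return [
        sum(WORD_VECTORS[t][i] for t in tokens) / len(tokens)
        for i in range(DIMENSIONS)
    ]


print(average_embedding("Fever and cough."))  # [0.75, 0.25, 0.25]
```

Because word order is discarded, this family of models is very fast, which matches the speed observed with GloVe, at the cost of ignoring sentence structure.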

The GloVe model returned very good results and was remarkably fast, but we ultimately switched to the `average_word_embeddings_levy_dependency` weights so that the API would fit on the free-tier Heroku machine, so the results may not be the best possible. To compute the embeddings with a different model, change the `MODEL_NAME` variable in the `api/src/__init__.py` file:

```python
# Transformer
MODEL_NAME = 'average_word_embeddings_levy_dependency'
MODEL_DIMENSIONS = 300
```

This model outputs 300-dimensional embeddings. For each of the 6 sections described in the previous step, we created a dataset in an H5 file called `embeddings_full.h5` that stores the clinical cases' text features. A 7th dataset aggregates all sections by concatenating their embeddings, giving 6 × 300 = 1800 dimensions.

| Dataset | Shape (cases, dimensions) |
| --- | --- |
| aggregated | (1306, 1800) |
| assessment | (1274, 300) |
| evolution | (1289, 300) |
| medical_history | (1294, 300) |
| physic_exploration | (1282, 300) |
| supplementary_tests | (1285, 300) |
| treatment | (1255, 300) |
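The aggregated dataset can be pictured as the six section embeddings concatenated in a fixed order. This sketch assumes the section order of the list of 6 sections and zero-fills missing sections; both are assumptions rather than documented behavior, although the aggregated dataset does have one row per extracted case (1306) while each section dataset has fewer.

```python
from typing import Dict, List, Optional

# Section order assumed for the concatenation.
SECTIONS = (
    "medical_history",
    "physic_exploration",
    "supplementary_tests",
    "assessment",
    "treatment",
    "evolution",
)
MODEL_DIMENSIONS = 300


def aggregate(section_embeddings: Dict[str, Optional[List[float]]],
              dims: int = MODEL_DIMENSIONS) -> List[float]:
    """Concatenate per-section embeddings into one vector of 6 * dims values.

    Assumption: a missing section is filled with zeros so that every case
    gets a vector of the same length.
    """
    vector: List[float] = []
    for name in SECTIONS:
        embedding = section_embeddings.get(name)
        if embedding is None:
            embedding = [0.0] * dims
        if len(embedding) != dims:
            raise ValueError(f"{name}: expected {dims} dimensions, got {len(embedding)}")
        vector.extend(embedding)
    return vector


# A case with only an assessment section still yields 1800 values.
vec = aggregate({"assessment": [1.0] * MODEL_DIMENSIONS})
print(len(vec))  # 1800
```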

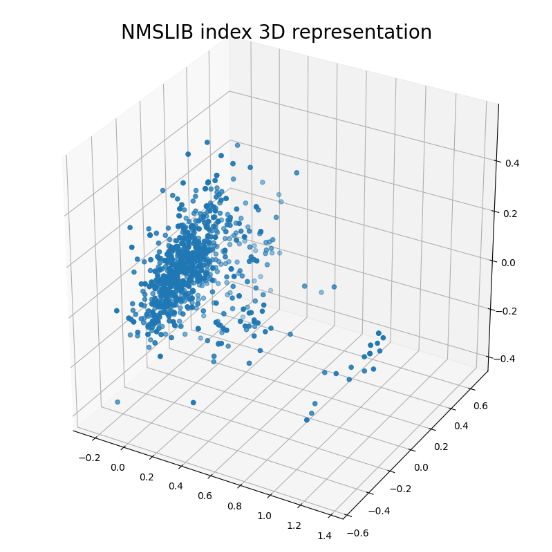

With the `embeddings_full.h5` file in place, the last step was to build one NMSLIB index per dataset (one per section plus the aggregated one). NMSLIB (Non-Metric Space Library) is an efficient similarity search library and a toolkit for evaluating k-NN methods in generic non-metric spaces.

Once the indexes are created in the `indexes` folder, querying is just a matter of sending an embedding as input and getting back the closest points in the space along with their distances.

| Index file | Size |
| --- | --- |
| evolution.nmslib | 1.67 MB |
| supplementary_tests.nmslib | 1.66 MB |
| treatment.nmslib | 1.62 MB |
| assessment.nmslib | 1.65 MB |
| physic_exploration.nmslib | 1.66 MB |
| medical_history.nmslib | 1.67 MB |
| aggregated.nmslib | 9.16 MB |

Note: Index representation generated with nmslib-viz.
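Conceptually, each index answers a k-nearest-neighbor query. NMSLIB does this approximately and much faster (its Python bindings expose `knnQuery(vector, k=...)`, which returns the ids and distances of the nearest points). The sketch below is an exact brute-force stand-in using cosine distance; cosine is an assumption, since the space configured for Casescan's indexes is not stated here, and `to_percentage` is a hypothetical way to turn a distance into the similarity percentage shown in the UI.

```python
import heapq
import math
from typing import List, Sequence, Tuple


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """1 - cosine similarity; a zero vector is treated as orthogonal (1.0)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm if norm else 1.0


def knn_query(points: Sequence[Sequence[float]], query: Sequence[float],
              k: int = 10) -> List[Tuple[int, float]]:
    """Exact k-NN: (row id, distance) pairs for the k closest points."""
    scored = ((i, cosine_distance(p, query)) for i, p in enumerate(points))
    return heapq.nsmallest(k, scored, key=lambda pair: pair[1])


def to_percentage(distance: float) -> float:
    """Hypothetical mapping from cosine distance to a similarity percentage."""
    return round(max(0.0, 1.0 - distance) * 100, 2)


points = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row_id, dist in knn_query(points, [1.0, 0.0], k=2):
    print(row_id, to_percentage(dist))
```

Brute force scans every row on each query, which is fine for a few thousand cases; an approximate index such as NMSLIB's keeps queries fast as the dataset grows.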

Requirements:

- Python 3.7+

- React 17.0+

Using virtualenv is recommended to isolate the API (backend) packages and runtime.

Run the following from the `api` directory:

- Set up a virtual environment.

- Install dependencies.

```sh
pip3 install -r requirements.lock
```

Run the following from the `client` directory:

- Install dependencies.

```sh
npm install
```

Run the following from the root directory:

- Run the API container using docker-compose.

```sh
docker-compose up -d --build
```

Run the following from the `client` directory:

- Serve the React application.

```sh
npm start
```

MIT © Casescan