Building a Local Coding Assistant with Code Llama, Cody AI, and Continue

Local Coding Assistants: Making coding more efficient, one model at a time!

- If you are just starting out with local LLMs, refer to my guide on setting up your own local LLM using Ollama here: https://github.com/Akshay-Dongare/Ollama-Local-LLM

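- Open a command prompt (cmd) and run `ollama pull codellama:7b-code`.

- Note: `codellama` (Code Llama) is an LLM fine-tuned for the coding domain; the `7b-code` tag selects the 7-billion-parameter variant intended for code completion.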
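To confirm the pull succeeded, you can ask the Ollama server which models it has. Below is a minimal Python sketch. It assumes Ollama is serving its HTTP API on the default `http://localhost:11434` (the same address used for Cody later on) and that `GET /api/tags` returns a JSON object with a `models` list. The helper names `fetch_tags` and `installed_model_names` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's HTTP API is on its default address.
OLLAMA_URL = "http://localhost:11434"


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Return the parsed JSON body of GET /api/tags (the locally pulled models)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return json.load(resp)


def installed_model_names(tags_response: dict) -> list[str]:
    """Pull the "name" field (e.g. "codellama:7b-code") out of each model entry."""
    return [model["name"] for model in tags_response.get("models", [])]


# Usage (needs a running Ollama server):
#   names = installed_model_names(fetch_tags())
#   print("codellama:7b-code" in names)
```

Running `ollama list` in the terminal gives the same information without any code.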

- To get your own personal, free, and local coding assistant running, follow these steps:

- Open Visual Studio Code

- Go to the Extensions tab (`Ctrl+Shift+X`).

- Install the Cody AI extension by Sourcegraph and enable it globally.

- Open the Cody extension settings by pressing `Ctrl+Shift+P` and typing `Cody: Extension Settings`.

- Navigate to `Cody › Autocomplete › Advanced: Provider` and select `experimental-ollama`.

- Navigate to `Cody › Autocomplete › Advanced: Server Endpoint` and enter `http://localhost:11434`.

- Your local coding assistant is up and running!
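If autocomplete stays silent, a quick first check is whether anything is listening at the Server Endpoint you entered. This is a minimal sketch. It assumes Ollama's usual behaviour of answering a plain `GET /` with the text `Ollama is running`. `ollama_is_up` is a hypothetical helper, not part of Cody.

```python
import http.client
import urllib.request


def ollama_is_up(endpoint: str = "http://localhost:11434", timeout: float = 3.0) -> bool:
    """Return True if `endpoint` answers GET / the way an Ollama server does."""
    url = endpoint.rstrip("/") + "/"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return resp.status == 200 and "Ollama is running" in body
    except (OSError, http.client.HTTPException):
        # URLError, refused connections and timeouts are all OSError subclasses;
        # HTTPException covers servers that reply with something that isn't valid HTTP.
        return False


# Usage: pass the same URL you entered as the Server Endpoint, e.g.
#   print(ollama_is_up("http://localhost:11434"))
```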

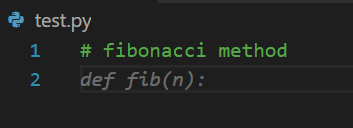

- To check its performance, create a new Python file and type `#fibonacci method`; `codellama:7b-code` will start autocompleting the code for you!

- Example:

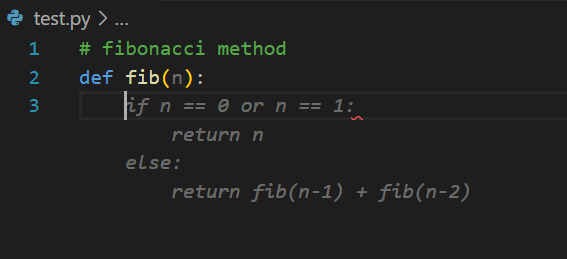
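Under the hood, an autocomplete provider sends the code around your cursor to the model and asks it to fill the gap. The sketch below approximates that by calling Ollama's `/api/generate` endpoint directly with Code Llama's fill-in-the-middle prompt format (`<PRE> prefix <SUF>suffix <MID>`, as shown on Ollama's codellama model page). It is an illustration under those assumptions, not necessarily how Cody builds its prompts. The `temperature` and `num_predict` values and the helper names are arbitrary choices.

```python
import json
import urllib.request


def build_infill_prompt(prefix: str, suffix: str = "") -> str:
    """Code Llama fill-in-the-middle format: the model writes what belongs at <MID>."""
    return f"<PRE> {prefix} <SUF>{suffix} <MID>"


def build_generate_payload(prefix: str, suffix: str = "",
                           model: str = "codellama:7b-code") -> dict:
    return {
        "model": model,
        "prompt": build_infill_prompt(prefix, suffix),
        "raw": True,      # send the prompt verbatim, bypassing the model's prompt template
        "stream": False,  # one JSON reply instead of a stream of partial chunks
        "options": {"temperature": 0.2, "num_predict": 128},  # arbitrary example values
    }


def complete(prefix: str, suffix: str = "",
             base_url: str = "http://localhost:11434") -> str:
    """POST the payload to Ollama and return the generated middle section."""
    data = json.dumps(build_generate_payload(prefix, suffix)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=data,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as resp:
        return json.load(resp)["response"]
```

For example, `complete("#fibonacci method\ndef fib(n):\n")` asks the model for a function body; the exact text depends on the model and sampling settings.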

- To check the logs, open the Output panel (`View > Output`) and select `Cody by Sourcegraph` from its dropdown.

- You should see something similar to:

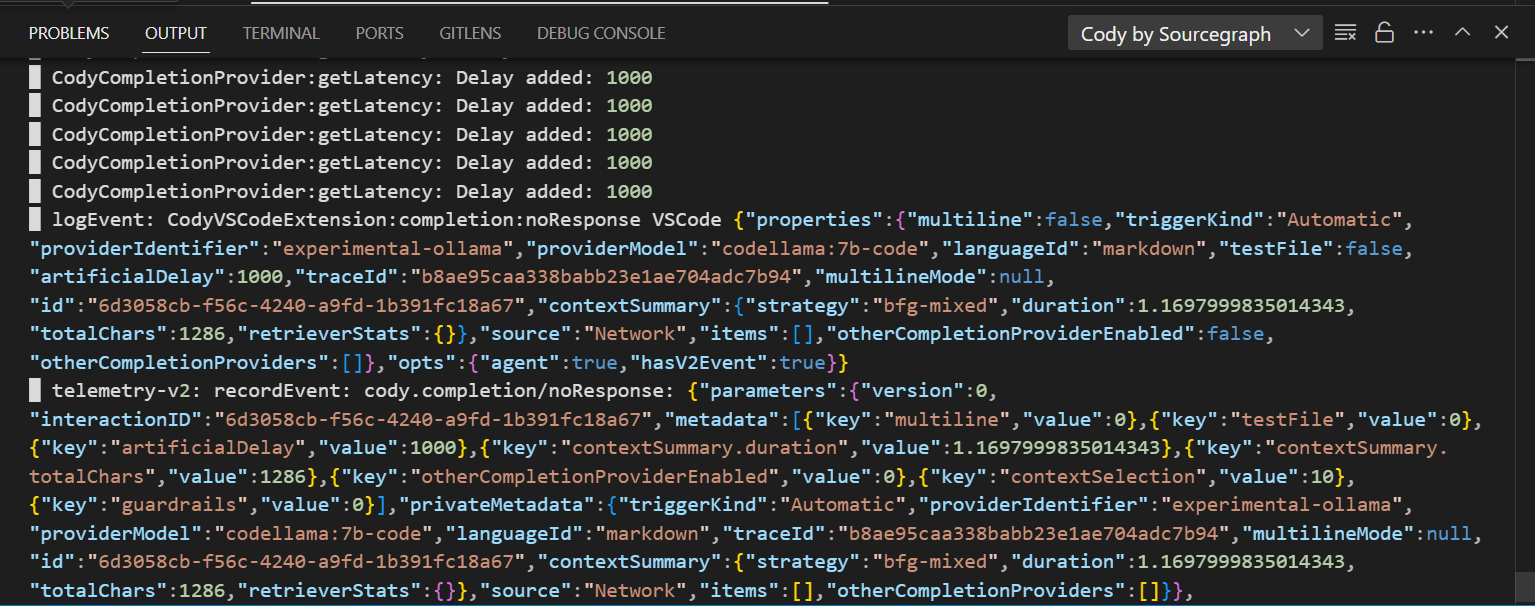

- To use local models with Continue and VS Code, first open Visual Studio Code.

- Go to the Extensions tab (`Ctrl+Shift+X`).

- Install the `Continue - CodeLlama, GPT-4, and more` extension and enable it globally.

- Open the Continue extension window.

- Open the model selection menu at the bottom left; here you can see all the models that are freely available for trial.

- Click the `+` icon; this takes you to the `Add new model` menu.

- Select Ollama.

- This takes you to the `Configure Model` menu.

- Select `Autodetect`. This adds every model you have installed on your system with `ollama pull {model_name}` to Continue.

- To double-check, open the `config.json` file by clicking the settings icon in the Continue extension and check whether the following entry has been added to the `"models"` list automatically:

```json
{
  "model": "AUTODETECT",
  "title": "Ollama",
  "completionOptions": {},
  "apiBase": "http://localhost:11434",
  "provider": "ollama"
}
```
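If you prefer to check the file programmatically, this Python sketch loads the config and looks for that entry. It assumes Continue keeps its JSON configuration at `~/.continue/config.json`, which is the file the settings icon opens in the JSON-config versions of the extension. Adjust `CONFIG_PATH` if your installation stores it elsewhere. The helper names are hypothetical.

```python
import json
from pathlib import Path

# Assumption: Continue's JSON config lives here; change it if yours differs.
CONFIG_PATH = Path.home() / ".continue" / "config.json"


def load_config(path: Path = CONFIG_PATH) -> dict:
    """Read and parse Continue's config.json."""
    return json.loads(path.read_text(encoding="utf-8"))


def has_ollama_autodetect(config: dict) -> bool:
    """True if "models" contains an Ollama entry whose model is AUTODETECT."""
    return any(
        entry.get("provider") == "ollama" and entry.get("model") == "AUTODETECT"
        for entry in config.get("models", [])
    )


# Usage: print(has_ollama_autodetect(load_config()))
```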

- Now you can chat with the files in your codebase.
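Continue gathers context for you, but the basic idea of chatting with a file can be sketched directly against Ollama's `/api/chat` endpoint: read the file, put its contents into the user message, and ask a question. This is a simplified illustration, not how Continue assembles context internally. It assumes a chat-tuned model such as `codellama:7b-instruct` has been pulled, since the completion-oriented `codellama:7b-code` is not meant for chat. The helper names are hypothetical.

```python
import json
import urllib.request
from pathlib import Path

# Assumption: a chat-tuned model has been pulled, e.g. `ollama pull codellama:7b-instruct`.
CHAT_MODEL = "codellama:7b-instruct"


def build_chat_payload(question: str, filename: str, source: str,
                       model: str = CHAT_MODEL) -> dict:
    """Put one file's contents and a question into a single /api/chat request."""
    user_message = f"Here is the file {filename}:\n\n{source}\n\nQuestion: {question}"
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You answer questions about the user's code."},
            {"role": "user", "content": user_message},
        ],
        "stream": False,  # ask for one complete JSON reply
    }


def ask_about_file(path: str, question: str,
                   base_url: str = "http://localhost:11434") -> str:
    """Send the file and question to the local model and return its answer."""
    source = Path(path).read_text(encoding="utf-8")
    data = json.dumps(build_chat_payload(question, path, source)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=data,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=300) as resp:
        return json.load(resp)["message"]["content"]
```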

- All your local models are now available within the Continue interface, and you can use them to chat with your code without an internet connection: fully private, free of cost, and local!

- This way, even custom models you have created by editing a Modelfile or system prompt (via `ollama create {custom_model_name} --file {path_to_modelfile}`) will also be available in Continue!
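As an illustration of that last step, the sketch below writes a small Modelfile and registers it with the `ollama create ... --file ...` command shown above. `FROM`, `PARAMETER`, and `SYSTEM` are standard Modelfile instructions. The base model, temperature, system prompt, and helper names are hypothetical placeholders.

```python
import subprocess
from pathlib import Path


def render_modelfile(base_model: str, system_prompt: str,
                     temperature: float = 0.2) -> str:
    """Compose a Modelfile: base model, one sampling parameter, a system prompt."""
    return (
        f"FROM {base_model}\n"
        f"PARAMETER temperature {temperature}\n"
        f'SYSTEM """{system_prompt}"""\n'
    )


def create_custom_model(name: str, modelfile_text: str,
                        path: str = "Modelfile") -> None:
    """Write the Modelfile, then run `ollama create <name> --file <path>`."""
    Path(path).write_text(modelfile_text, encoding="utf-8")
    subprocess.run(["ollama", "create", name, "--file", path], check=True)
```

For example, `create_custom_model("py-reviewer", render_modelfile("codellama:7b-instruct", "You review Python code concisely."))` would create a model named `py-reviewer`. After that, Continue's Autodetect should list it alongside your other models.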