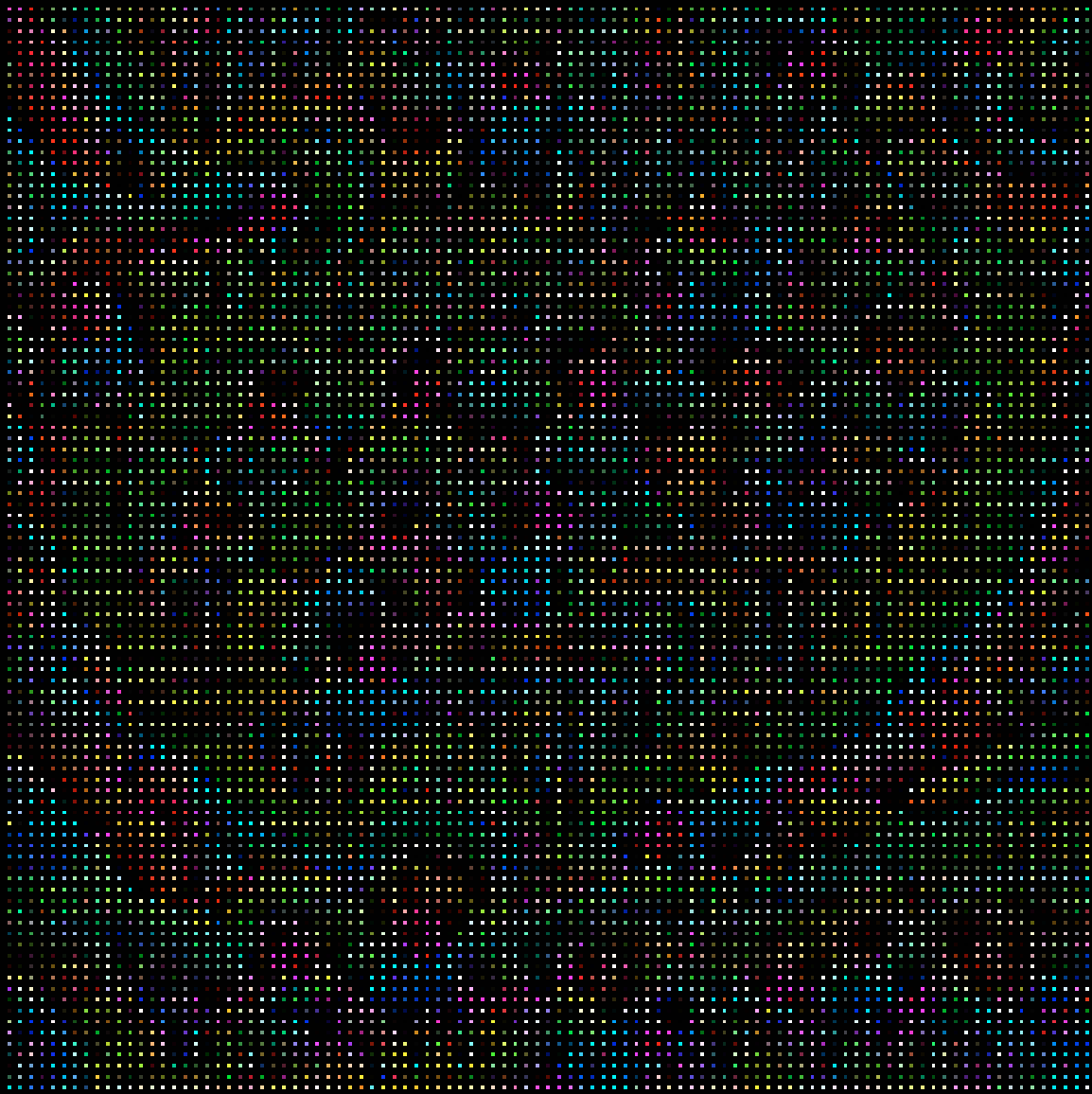

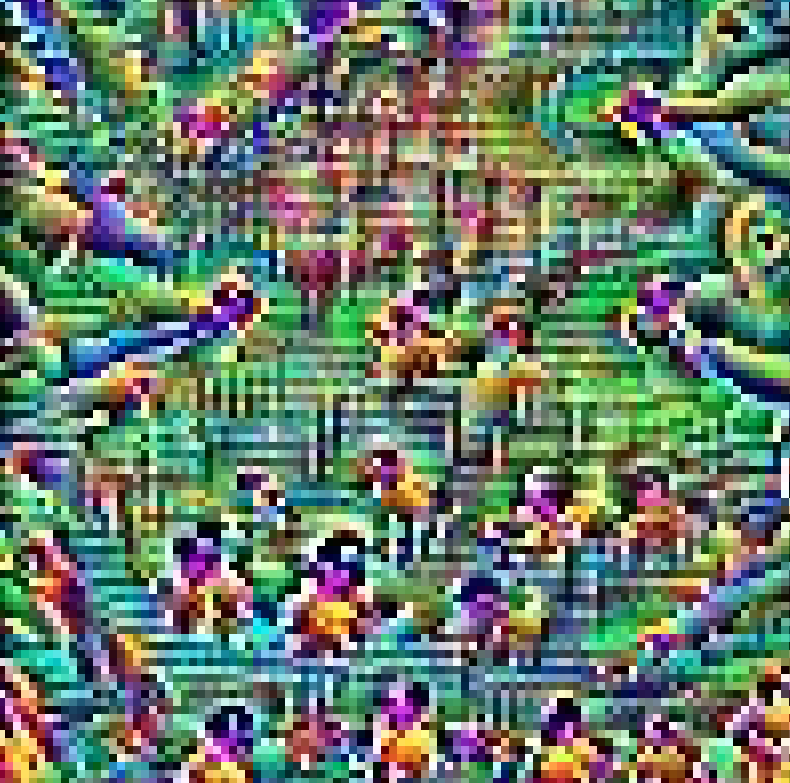

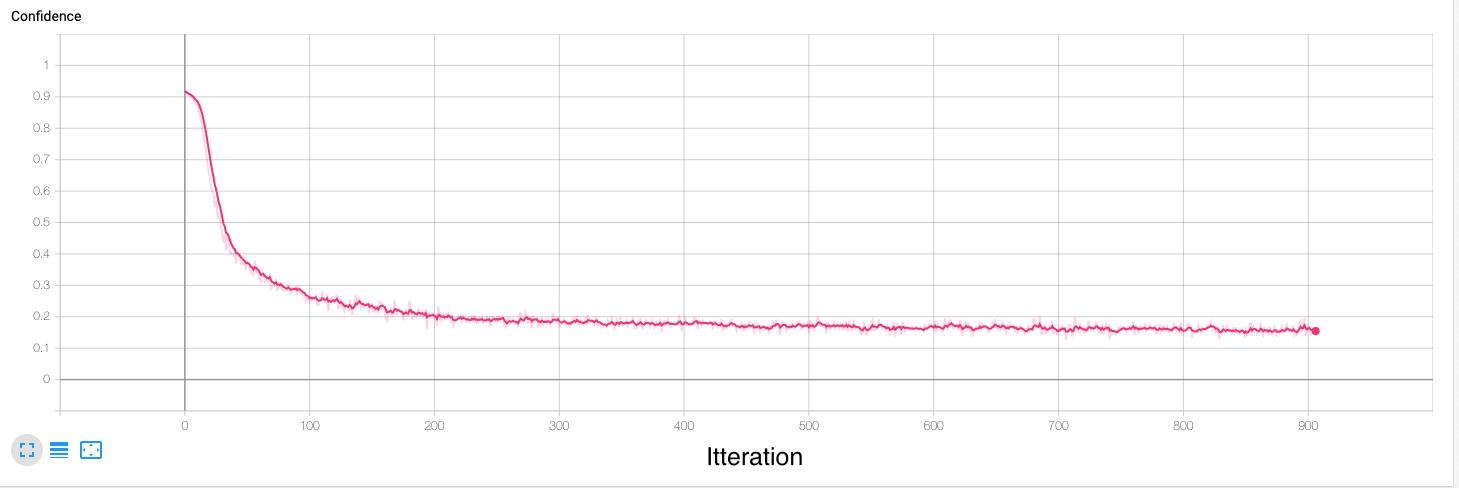

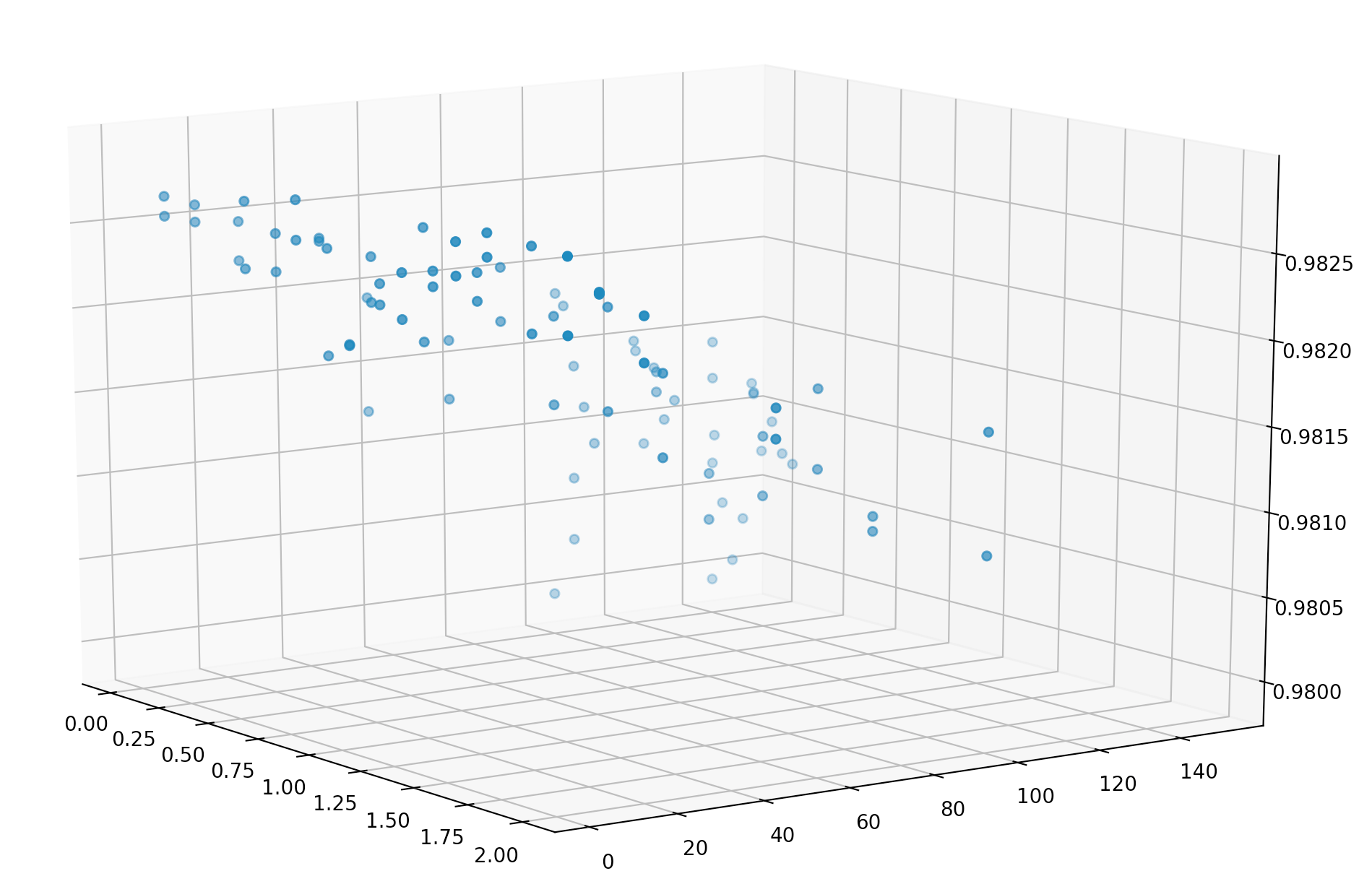

This repository contains Python code to generate, evaluate, and visualize a patch-based adversarial example for the YOLOv3 object detector, a copy of which is included here. Some of the code in this repository has been pulled directly from other repositories, as cited in my research report.

This is research code, funded by an 8-week Summer Research Fellowship Grant (SOAR-NSE) from Swarthmore College. The associated paper can be found at https://arxiv.org/abs/2008.10106

- The requirements.txt file contains a listing of all required packages

- The script adversarial_attack.py is the adversarial attack generator

- The config.gin file contains the options for adversarial_attack.py

- The data directory contains training data

- The results directory contains results from past trainings and will be populated as adversarial_attack.py is run

- The tf_logs directory is set up for TensorBoard logging

- The yolov3 directory contains the YOLOv3 object detector that is attacked
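Before running anything, it can help to confirm that a checkout has the layout listed above. The following is a minimal sketch, not part of the repository: the helper name `missing_entries` is hypothetical, and the file and directory names are taken directly from the list above.

```python
from pathlib import Path

# Names taken from the list above; files and directories are checked separately.
EXPECTED_FILES = ("requirements.txt", "adversarial_attack.py", "config.gin")
EXPECTED_DIRS = ("data", "results", "tf_logs", "yolov3")


def missing_entries(root="."):
    """Return the expected files and directories that are absent under root.

    Hypothetical helper for illustration; directories are reported with a
    trailing slash so they are easy to tell apart from files.
    """
    root = Path(root)
    missing = [name for name in EXPECTED_FILES if not (root / name).is_file()]
    missing += [name + "/" for name in EXPECTED_DIRS if not (root / name).is_dir()]
    return missing


if __name__ == "__main__":
    absent = missing_entries(".")
    if absent:
        print("Missing from this checkout:", ", ".join(absent))
    else:
        print("All expected files and directories are present.")
```

Run from the repository root, the script prints any entries that are missing, which usually points to an incomplete clone.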

This code supports multi-threading and is compatible with both CPUs and GPUs. In config.gin, specify 'cpu' to run on a CPU, 'cuda' to run on any available GPU, or 'cuda:[gpu number]' to run on a specific GPU. The code runs on macOS and Linux.
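The three device forms described above can be checked before a long run starts. This is a minimal sketch under stated assumptions: `parse_device` is a hypothetical helper, not a function from this repository, and the way adversarial_attack.py actually reads the device setting from config.gin may differ.

```python
import re

# Accepts exactly the three forms described above: 'cpu', 'cuda', 'cuda:N'.
_DEVICE_PATTERN = re.compile(r"cpu|cuda(:\d+)?")


def parse_device(spec):
    """Split a device string into (kind, index).

    Hypothetical helper for illustration. The index is None when no
    specific GPU number is given.
    """
    spec = spec.strip()
    if _DEVICE_PATTERN.fullmatch(spec) is None:
        raise ValueError(f"unsupported device string: {spec!r}")
    kind, _, index = spec.partition(":")
    return kind, (int(index) if index else None)


if __name__ == "__main__":
    for candidate in ("cpu", "cuda", "cuda:1"):
        print(candidate, "->", parse_device(candidate))
```

Validating the string up front means a typo such as 'gpu' fails immediately with a clear message instead of partway through setup.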

Requires Python 3.7 or later with all requirements.txt dependencies installed. To install all dependencies, run:

$ pip install -U -r requirements.txt

- Set the main logic command in config.gin to 'train'

- Set optional configuration options in config.gin

$ python adversarial_attack.py config.gin

- Set the main logic command in config.gin to 'visualize'

- Set optional configuration options in config.gin

$ python adversarial_attack.py config.gin

- Set the main logic command in config.gin to 'evaluate'

- Set the secondary evaluation logic command if desired

- Set optional configuration options in config.gin

$ python adversarial_attack.py config.gin

- Set the main logic command in config.gin to 'optimize'

- Set the optimization parameters in config.gin

$ python adversarial_attack.py config.gin

Got questions? Email me at ianmcdiarmidsterling at gmail dot com

Special thank you for discussion, guidance, and support to:

- Professor Allan Moser

- Dr. David Sterling

This repository will not be updated after 8/1/2020.

Thank you to all grant donors at Swarthmore College who make undergraduate research possible.