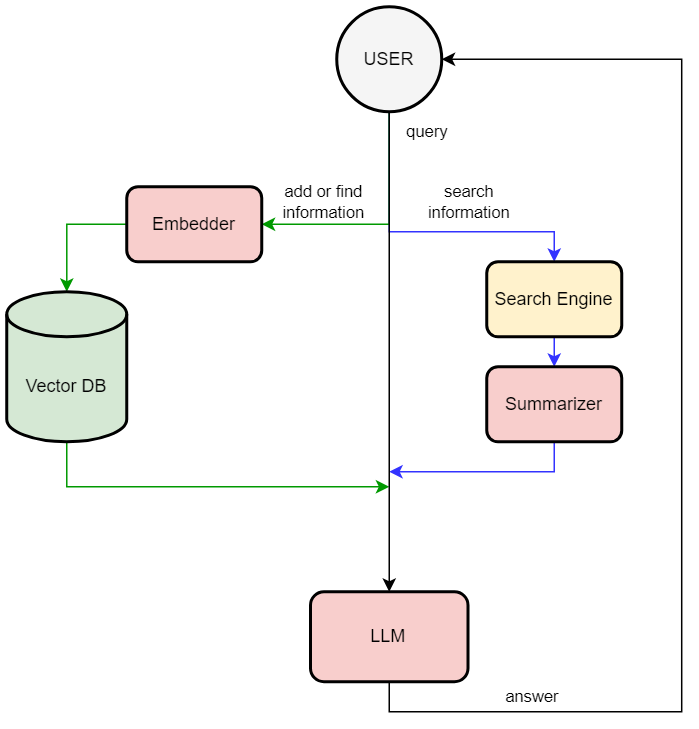

RAG-based LLM with long-term memory through a vector database

This repository enables a large language model (LLM) to use long-term memory through a vector database. The method is called RAG (Retrieval-Augmented Generation): a technique that lets an LLM retrieve facts from an external database and use them when generating an answer. The application is built with mistral-7b-instruct-v0.2.Q4_K_M.gguf (via the llama-cpp-python binding) and chromadb. Request handling uses guidance, so the user can ask in natural language to add information to the database, or to find information in the database or on the Internet.
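To make the retrieve-then-generate idea concrete, here is a minimal sketch of the RAG flow. It is not the repository's code: a toy bag-of-words vector with cosine similarity stands in for the embeddings and similarity search that chromadb provides, and the names embed, MemoryStore and build_prompt are hypothetical. The final prompt would then be passed to the Mistral model (for example through llama-cpp-python); that call is omitted so the sketch runs with the standard library alone.

```python
import math
import re
from collections import Counter

# Toy "embedding": a bag-of-words term-count vector.
# Assumption: a stand-in for the real embeddings stored in chromadb.
def embed(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class MemoryStore:
    """Hypothetical in-memory stand-in for a vector database collection."""

    def __init__(self) -> None:
        self.docs: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Store the text together with its vector.
        self.docs.append((text, embed(text)))

    def query(self, question: str, k: int = 2) -> list[str]:
        # Retrieve: rank stored texts by similarity to the question.
        q = embed(question)
        ranked = sorted(self.docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [text for text, _ in ranked[:k]]

def build_prompt(question: str, memories: list[str]) -> str:
    # Augment: place the retrieved facts in the prompt the LLM will complete.
    context = "\n".join(f"- {m}" for m in memories)
    return f"Use these memories to answer.\nMemories:\n{context}\nQuestion: {question}\nAnswer:"

store = MemoryStore()
store.add("The user name is Rustam Akimov")
store.add("The capital of France is Paris")
prompt = build_prompt("What is the user name?", store.query("What is the user name?", k=1))
print(prompt)
```

Keeping retrieval separate from generation is what lets the model answer from facts it was never trained on: only the store changes when new memories are added.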

- add new memory: store a piece of information in the database; write the request in natural language and put the information itself in quotes

- query memory: ask in natural language for information stored in the database

- web search (experimental): ask in natural language for information from the Internet

You > Hi

LOG: [Response]

Bot < Hello! How can I assist you today?

You > Please add information to db "The user name is Rustam Akimov"

LOG: [Adding to memory]

Bot < Done!

You > Can you find on the Internet who is Pavel Durov

LOG: [Extracting question]

LOG: [Searching]

LOG: [Summarizing]

Bot < According to the search results provided, Pavel Durov is a Russian entrepreneur who co-founded Telegram Messenger Inc.

You > Please find information in db who is Rustam Akimov

LOG: [Extracting question]

LOG: [Querying memory]

Bot < According to the input memories, your name is Rustam Akimov.
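The LOG lines in the session above show each message being sent to exactly one action. The app makes that choice with the LLM and guidance; the sketch below swaps in a plain keyword heuristic (an assumption, not the repository's logic) only to show the shape of the dispatch. The helper names route, extract_quoted and LOG_LABELS are hypothetical, and only the primary step of each request is labeled (the intermediate [Extracting question] and [Summarizing] steps are not modeled).

```python
import re
from typing import Optional

# Hypothetical keyword heuristic standing in for the LLM + guidance decision.
def route(message: str) -> str:
    text = message.lower()
    if "add" in text and re.search(r'"[^"]+"', message):
        return "add_memory"    # store the quoted fact
    if "internet" in text or "web" in text:
        return "web_search"    # experimental web search
    if "db" in text or "database" in text or "memory" in text:
        return "query_memory"  # look up stored facts
    return "respond"           # ordinary chat reply

def extract_quoted(message: str) -> Optional[str]:
    # "add new memory" expects the fact itself inside double quotes.
    match = re.search(r'"([^"]+)"', message)
    return match.group(1) if match else None

# Map each action to the log label shown in the example session.
LOG_LABELS = {
    "respond": "[Response]",
    "add_memory": "[Adding to memory]",
    "web_search": "[Searching]",
    "query_memory": "[Querying memory]",
}

for msg in [
    "Hi",
    'Please add information to db "The user name is Rustam Akimov"',
    "Can you find on the Internet who is Pavel Durov",
    "Please find information in db who is Rustam Akimov",
]:
    print(f"LOG: {LOG_LABELS[route(msg)]}")
```

A fixed keyword rule is brittle (for example, any message that mentions "add" and contains quoted text is treated as a memory write); letting the model choose the action, as the app does, copes with phrasings no keyword list anticipates.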

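- Install the dependencies listed in requirements.txt (for example, pip install -r requirements.txt)

- Download mistral-7b-instruct-v0.2.Q4_K_M.gguf (other models can be used as well)

- Get a Google API key and a Search Engine ID (needed for web search)

- Specify the required variables in .env

- Run chat.py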
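The credential and .env steps can be sketched as follows, assuming the .env holds the Google credentials under the hypothetical names GOOGLE_API_KEY and SEARCH_ENGINE_ID (check chat.py for the names it actually reads). The parser is a minimal stand-in for a library such as python-dotenv, and search_url builds a request for the Google Custom Search JSON API, which takes the API key as key, the Search Engine ID as cx, and the query as q.

```python
import os
from urllib.parse import urlencode

def parse_env(text: str) -> dict[str, str]:
    """Minimal KEY=VALUE parser (assumption: the repo may use python-dotenv instead)."""
    values: dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines, comments and lines without an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values

def load_env(path: str = ".env") -> dict[str, str]:
    # Return an empty mapping if the file does not exist yet.
    if not os.path.exists(path):
        return {}
    with open(path, encoding="utf-8") as f:
        return parse_env(f.read())

def search_url(query: str, api_key: str, engine_id: str) -> str:
    # Google Custom Search JSON API: key = API key, cx = Search Engine ID, q = query.
    params = urlencode({"key": api_key, "cx": engine_id, "q": query})
    return f"https://www.googleapis.com/customsearch/v1?{params}"

# Hypothetical variable names; adjust to whatever chat.py expects.
env = load_env(".env")
url = search_url("who is Pavel Durov", env.get("GOOGLE_API_KEY", ""), env.get("SEARCH_ENGINE_ID", ""))
```

Fetching the URL (for example with urllib.request.urlopen) returns JSON whose items list holds the title, link and snippet of each result; a summarizing step like the [Summarizing] log in the example session would pass those snippets to the model.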