We have uploaded an offline version of the documentation here. You can download and unzip it to view the documentation.

Most of the dataset links have now been restored. The datasets confirmed to have no backup are as follows:

- Classification
  - COCO70
  - EuroSAT
  - PACS
  - PatchCamelyon
  - [Partial Domain Adaptation]
    - CaltechImageNet
- Keypoint Detection
  - Hand3DStudio
  - LSP
  - SURREAL
- Object Detection
  - Comic
- Re-Identification
  - PersonX
  - UnrealPerson

If you previously downloaded any of these datasets locally, please contact us via email. We greatly appreciate everyone's support.

Dear users,

We regret to inform you that the dataset links of Transfer-Learning-Library recently became invalid due to a cloud storage failure, leaving many users unable to download the datasets.

We are working to resolve this issue and plan to restore the links as soon as possible. We have already restored some dataset links, and the updates are on the master branch. You can obtain the latest version by running "git pull".

As the version on PyPI has not been updated yet, please first uninstall the old version by running "pip uninstall tllib" before installing the new one.

In the future, we plan to store the datasets on both Baidu Cloud and Google Cloud to provide more stable download links.

Additionally, the backups of a small number of datasets on our local server were also lost due to a hard disk failure. We need to re-download and verify these datasets, so restoring their links may take longer.

Within this week, we will release the list of updated datasets and the list of datasets confirmed to have no backup. For datasets without a backup, if you have previously downloaded them locally, please contact us via email. Your support is highly appreciated.

Once again, we apologize for any inconvenience caused and thank you for your understanding.

Sincerely,

The Transfer-Learning-Library Team

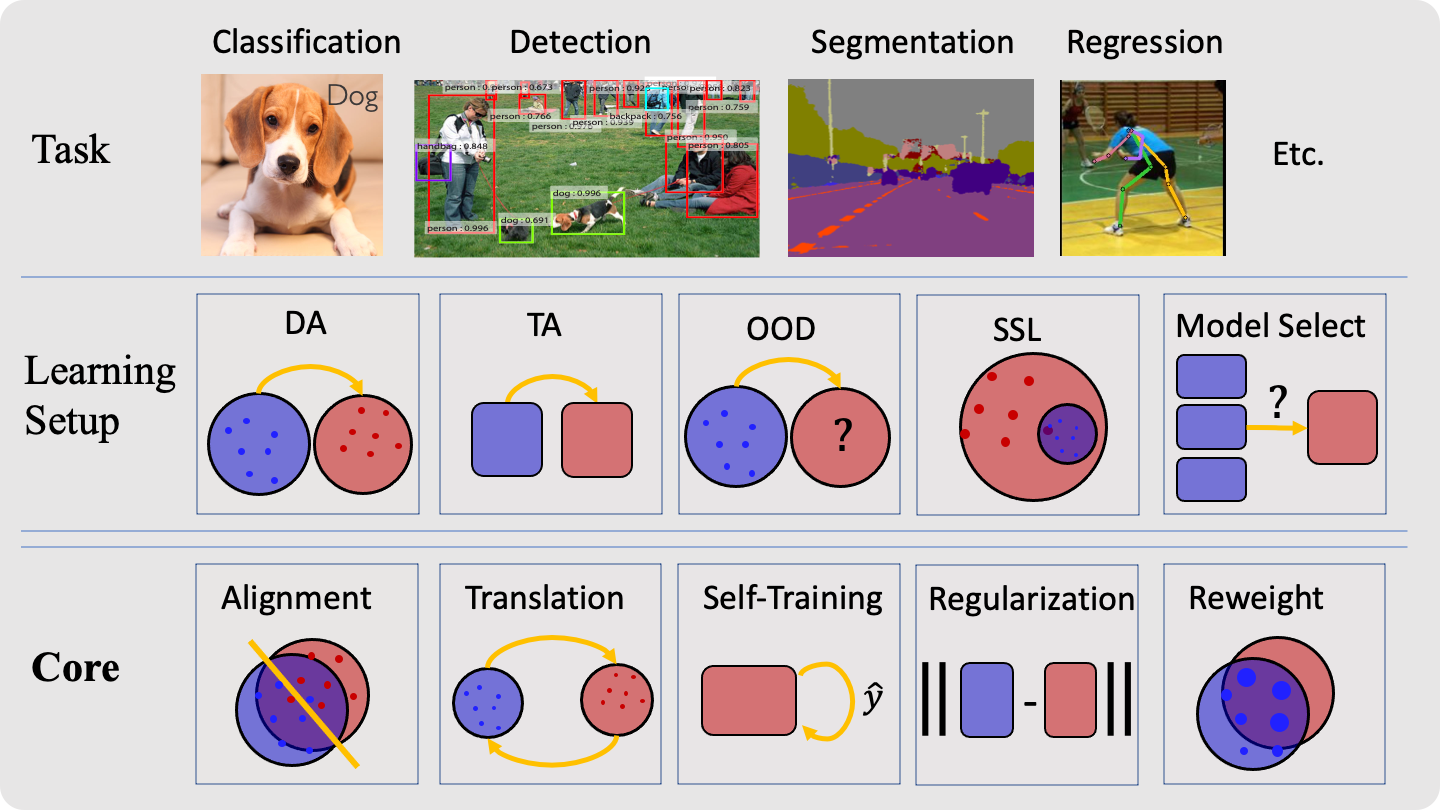

TLlib is an open-source and well-documented library for Transfer Learning. It is built on pure PyTorch and offers high performance and a friendly API. Our code is Pythonic, and its design is consistent with torchvision. You can easily develop new algorithms or readily apply existing ones.

Our API is divided by methods, which include:

- domain alignment methods (tllib.alignment)

- domain translation methods (tllib.translation)

- self-training methods (tllib.self_training)

- regularization methods (tllib.regularization)

- data reweighting/resampling methods (tllib.reweight)

- model ranking/selection methods (tllib.ranking)

- normalization-based methods (tllib.normalization)
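As a quick sanity check after installation, the module list above can be turned into a small importability probe. This is only a sketch: the module paths are taken from the list above (with the first one spelled `tllib.alignment`), while the names `TLLIB_MODULES` and `available_modules` are hypothetical helpers introduced here and are not part of TLlib.

```python
import importlib

# Method families and module paths as listed above.
# The dictionary and function names are illustrative, not part of TLlib.
TLLIB_MODULES = {
    "domain alignment": "tllib.alignment",
    "domain translation": "tllib.translation",
    "self-training": "tllib.self_training",
    "regularization": "tllib.regularization",
    "data reweighting/resampling": "tllib.reweight",
    "model ranking/selection": "tllib.ranking",
    "normalization-based": "tllib.normalization",
}


def available_modules(modules=TLLIB_MODULES):
    """Return {family: bool} telling whether each module can be imported."""
    status = {}
    for family, name in modules.items():
        try:
            importlib.import_module(name)
            status[family] = True
        except ImportError:
            # Covers ModuleNotFoundError, e.g. when tllib is not installed.
            status[family] = False
    return status


if __name__ == "__main__":
    for family, ok in available_modules().items():
        print(f"{family:30s} {'ok' if ok else 'missing'}")
```

If every family is reported as missing, the package itself is most likely not installed in the current environment.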

We provide many examples in the directory examples, which are organized by learning setup. Currently, the supported learning setups include:

- DA (domain adaptation)

- TA (task adaptation, also known as fine-tuning)

- OOD (out-of-distribution generalization, also known as DG / domain generalization)

- SSL (semi-supervised learning)

- Model Selection

Our supported tasks include: classification, regression, object detection, segmentation, keypoint detection, and so on.

We support installing TLlib via pip, which is currently experimental.

pip install -i https://test.pypi.org/simple/ tllib==0.4

We have released v0.4 of TLlib. Previous versions of TLlib can be found here. In v0.4, we added implementations of the following methods:

- Domain Adaptation for Object Detection [Code] [API]

- Pre-trained Model Selection [Code] [API]

- Semi-supervised Learning for Classification [Code] [API]

In addition, we maintain a collection of awesome Transfer Learning papers in another repo, A Roadmap for Transfer Learning.

We adjusted our API following our survey Transferability in Deep Learning.

The currently supported algorithms include:

Domain Adaptation for Classification [Code]

- DANN - Unsupervised Domain Adaptation by Backpropagation [ICML 2015] [Code]

- DAN - Learning Transferable Features with Deep Adaptation Networks [ICML 2015] [Code]

- JAN - Deep Transfer Learning with Joint Adaptation Networks [ICML 2017] [Code]

- ADDA - Adversarial Discriminative Domain Adaptation [CVPR 2017] [Code]

- CDAN - Conditional Adversarial Domain Adaptation [NIPS 2018] [Code]

- MCD - Maximum Classifier Discrepancy for Unsupervised Domain Adaptation [CVPR 2018] [Code]

- MDD - Bridging Theory and Algorithm for Domain Adaptation [ICML 2019] [Code]

- BSP - Transferability vs. Discriminability: Batch Spectral Penalization for Adversarial Domain Adaptation [ICML 2019] [Code]

- MCC - Minimum Class Confusion for Versatile Domain Adaptation [ECCV 2020] [Code]

Domain Adaptation for Object Detection [Code]

- CycleGAN - Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks [ICCV 2017] [Code]

- D-adapt - Decoupled Adaptation for Cross-Domain Object Detection [ICLR 2022] [Code]

Domain Adaptation for Semantic Segmentation [Code]

- CycleGAN - Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks [ICCV 2017] [Code]

- CyCADA - Cycle-Consistent Adversarial Domain Adaptation [ICML 2018] [Code]

- ADVENT - Adversarial Entropy Minimization for Domain Adaptation in Semantic Segmentation [CVPR 2019] [Code]

- FDA - Fourier Domain Adaptation for Semantic Segmentation [CVPR 2020] [Code]

Domain Adaptation for Keypoint Detection [Code]

- RegDA - Regressive Domain Adaptation for Unsupervised Keypoint Detection [CVPR 2021] [Code]

Domain Adaptation for Person Re-identification [Code]

- IBN-Net - Two at Once: Enhancing Learning and Generalization Capacities via IBN-Net [ECCV 2018]

- MMT - Mutual Mean-Teaching: Pseudo Label Refinery for Unsupervised Domain Adaptation on Person Re-identification [ICLR 2020] [Code]

- SPGAN - Similarity Preserving Generative Adversarial Network [CVPR 2018] [Code]

Partial Domain Adaptation [Code]

- IWAN - Importance Weighted Adversarial Nets for Partial Domain Adaptation [CVPR 2018] [Code]

- AFN - Larger Norm More Transferable: An Adaptive Feature Norm Approach for Unsupervised Domain Adaptation [ICCV 2019] [Code]

Open-set Domain Adaptation [Code]

- OSBP - Open Set Domain Adaptation by Backpropagation [ECCV 2018] [Code]

Domain Generalization for Classification [Code]

- IBN-Net - Two at Once: Enhancing Learning and Generalization Capacities via IBN-Net [ECCV 2018]

- MixStyle - Domain Generalization with MixStyle [ICLR 2021] [Code]

- MLDG - Learning to Generalize: Meta-Learning for Domain Generalization [AAAI 2018] [Code]

- IRM - Invariant Risk Minimization [ArXiv] [Code]

- VREx - Out-of-Distribution Generalization via Risk Extrapolation [ICML 2021] [Code]

- GroupDRO - Distributionally Robust Neural Networks for Group Shifts: On the Importance of Regularization for Worst-Case Generalization [ArXiv] [Code]

- Deep CORAL - Correlation Alignment for Deep Domain Adaptation [ECCV 2016] [Code]

Domain Generalization for Person Re-identification [Code]

- IBN-Net - Two at Once: Enhancing Learning and Generalization Capacities via IBN-Net [ECCV 2018]

- MixStyle - Domain Generalization with MixStyle [ICLR 2021] [Code]

Task Adaptation (Fine-Tuning) for Image Classification [Code]

- L2-SP - Explicit inductive bias for transfer learning with convolutional networks [ICML 2018] [Code]

- BSS - Catastrophic Forgetting Meets Negative Transfer: Batch Spectral Shrinkage for Safe Transfer Learning [NIPS 2019] [Code]

- DELTA - DEep Learning Transfer using Feature Map with Attention for convolutional networks [ICLR 2019] [Code]

- Co-Tuning - Co-Tuning for Transfer Learning [NIPS 2020] [Code]

- StochNorm - Stochastic Normalization [NIPS 2020] [Code]

- LWF - Learning Without Forgetting [ECCV 2016] [Code]

- Bi-Tuning - Bi-tuning of Pre-trained Representations [ArXiv] [Code]

Pre-trained Model Selection [Code]

- H-Score - An Information-theoretic Approach to Transferability in Task Transfer Learning [ICIP 2019] [Code]

- NCE - Negative Conditional Entropy in "Transferability and Hardness of Supervised Classification Tasks" [ICCV 2019] [Code]

- LEEP - LEEP: A New Measure to Evaluate Transferability of Learned Representations [ICML 2020] [Code]

- LogME - Log Maximum Evidence in "LogME: Practical Assessment of Pre-trained Models for Transfer Learning" [ICML 2021] [Code]

Semi-Supervised Learning for Classification [Code]

- Pseudo Label - Pseudo-Label: The Simple and Efficient Semi-Supervised Learning Method for Deep Neural Networks [ICML 2013] [Code]

- Pi Model - Temporal Ensembling for Semi-Supervised Learning [ICLR 2017] [Code]

- Mean Teacher - Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results [NIPS 2017] [Code]

- Noisy Student - Self-Training With Noisy Student Improves ImageNet Classification [CVPR 2020] [Code]

- UDA - Unsupervised Data Augmentation for Consistency Training [NIPS 2020] [Code]

- FixMatch - Simplifying Semi-Supervised Learning with Consistency and Confidence [NIPS 2020] [Code]

- Self-Tuning - Self-Tuning for Data-Efficient Deep Learning [ICML 2021] [Code]

- FlexMatch - FlexMatch: Boosting Semi-Supervised Learning with Curriculum Pseudo Labeling [NIPS 2021] [Code]

- DebiasMatch - Debiased Learning From Naturally Imbalanced Pseudo-Labels [CVPR 2022] [Code]

- DST - Debiased Self-Training for Semi-Supervised Learning [NIPS 2022 Oral] [Code]

- Please git clone the library first. Then, run the following commands to install tllib and all its dependencies.

python setup.py install

pip install -r requirements.txt

- Installing via pip is currently experimental.

pip install -i https://test.pypi.org/simple/ tllib==0.4

You can find the API documentation on the website: Documentation.
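Before reinstalling with the commands above, it can help to check which version of tllib, if any, is already present in the environment. The following is a minimal sketch using only the Python standard library; the function names `installed_version` and `needs_reinstall` are hypothetical, and the target version string "0.4" is simply taken from the pip command above.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package="tllib"):
    """Return the installed version string of `package`, or None if absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


def needs_reinstall(package="tllib", wanted="0.4"):
    """True when a different version is installed and should be removed first."""
    current = installed_version(package)
    return current is not None and current != wanted


if __name__ == "__main__":
    print("installed tllib version:", installed_version())
    print("uninstall old version first:", needs_reinstall())
```

When `needs_reinstall()` returns True, uninstall the old package before installing the new one.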

You can find examples in the directory examples. A typical usage is

# Train a DANN on the Office-31 Amazon -> Webcam task using ResNet-50.

# Assume you have put the datasets under the path `data/office31`,

# or that you are willing to download the datasets automatically from the Internet to this path

python dann.py data/office31 -d Office31 -s A -t W -a resnet50 --epochs 20

We appreciate all contributions. If you plan to contribute bug fixes, please do so without any further discussion. If you plan to contribute new features, utility functions or extensions, please first open an issue and discuss the feature with us.
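To make the flags in the `dann.py` example command easier to read, here is a rough argparse sketch that accepts the same command line. It is not the actual parser from the examples directory: only the positional path and the flags `-d`, `-s`, `-t`, `-a` and `--epochs` come from the command itself, while the destination names, help texts, and the `build_parser` helper are assumptions made for illustration.

```python
import argparse
import shlex

# Arguments of the example command, without the leading "python dann.py".
EXAMPLE = "data/office31 -d Office31 -s A -t W -a resnet50 --epochs 20"


def build_parser():
    """Hypothetical parser mirroring the flags seen in the example command."""
    parser = argparse.ArgumentParser(description="DANN example (illustrative sketch)")
    parser.add_argument("root", help="dataset root directory")
    parser.add_argument("-d", dest="data", help="dataset name, e.g. Office31")
    parser.add_argument("-s", dest="source", help="source domain, e.g. A (Amazon)")
    parser.add_argument("-t", dest="target", help="target domain, e.g. W (Webcam)")
    parser.add_argument("-a", dest="arch", help="backbone architecture, e.g. resnet50")
    parser.add_argument("--epochs", type=int, help="number of training epochs")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(shlex.split(EXAMPLE))
    print(f"train on {args.data}: {args.source} -> {args.target}, "
          f"backbone={args.arch}, epochs={args.epochs}, root={args.root}")
```

Reading the command this way, `-s A -t W` selects the Amazon source domain and the Webcam target domain, matching the comment in the usage example.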

This is a utility library that downloads and prepares public datasets. We do not host or distribute these datasets, vouch for their quality or fairness, or claim that you have licenses to use the dataset. It is your responsibility to determine whether you have permission to use the dataset under the dataset's license.

If you're a dataset owner and wish to update any part of it (description, citation, etc.), or do not want your dataset to be included in this library, please get in touch through a GitHub issue. Thanks for your contribution to the ML community!

If you have any problems with our code or have suggestions, including for future features, feel free to contact

- Baixu Chen (cbx_99_hasta@outlook.com)

- Junguang Jiang (JiangJunguang1123@outlook.com)

- Mingsheng Long (longmingsheng@gmail.com)

or describe it in Issues.

For Q&A in Chinese, you can ask questions here before sending an email: 迁移学习算法库答疑专区 (Transfer Learning Library Q&A section)

If you use this toolbox or benchmark in your research, please cite this project.

@misc{jiang2022transferability,

title={Transferability in Deep Learning: A Survey},

author={Junguang Jiang and Yang Shu and Jianmin Wang and Mingsheng Long},

year={2022},

eprint={2201.05867},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

@misc{tllib,

author = {Junguang Jiang and Baixu Chen and Bo Fu and Mingsheng Long},

title = {Transfer-Learning-library},

year = {2020},

publisher = {GitHub},

journal = {GitHub repository},

howpublished = {\url{https://github.com/thuml/Transfer-Learning-Library}},

}

We would like to thank the School of Software, Tsinghua University and the National Engineering Laboratory for Big Data Software for providing such an excellent ML research platform.