Stable Diffusion headless and web interface

Version 0.1.6 alpha

Changelog

- Accelerated Torch 2.0

- Lightweight layer on top of Diffusers

- Fast startup and rendering

- GPU and CPU (slower)

- Easy to use web interface

- Headless console for experts

- WebSockets server

- Safetensors-only checkpoints

- JSON configuration file

- Schedulers (ddpm, ddim, pndm, lms, euler, euler_anc)

- Text to image

- Image to image

- Image inpainting

- Image outpainting

- Face restoration

- Upscaling 4x

- Batch rendering

- PNG metadata

- Automatic pipeline switching

- File and base64 image inputs (PNG)

- Works offline, proxy supported

- No safety checker

- No miners, trackers, or telemetry

- Optimized for affordable hardware

- Optimized for slow internet connections

- Supports custom VAE

- Supports LoRA

- Supports ControlNet

- Supports Hypernetwork

- At least 16 GB RAM

- NVIDIA GPU with CUDA support (at least 4 GB of video memory)

Usage: headless.py --arg "value"

Example: headless.py -p "prompt" -out base64

Upscale: headless.py -sr 0.1 -up true -i "/path-to-image.png" -of true

Metadata: headless.py -meta "/path-to-image.png"

Batch: headless.py -p "prompt" -st 25 -b 10 -w 1200 -h 400

CKPT: headless.py -p "prompt" -ck "deliberate_v2"
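To drive headless.py from another Python script, something like the sketch below can work. It assumes headless.py is in the current directory and that, with `-out base64`, the last non-empty stdout line holds the base64 PNG of a single image (possibly with a `data:image/png;base64,` prefix). `build_argv`, `decode_png` and `render` are hypothetical helper names; the flag mapping and the divisible-by-8 and 10-50 step checks follow the option list in this README.

```python
import base64
import subprocess
import sys

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

# Long option name -> short flag, as listed in this README.
FLAGS = {
    "prompt": "-p", "negative": "-n", "checkpoint": "-ck", "scheduler": "-sc",
    "width": "-w", "height": "-h", "seed": "-s", "steps": "-st",
    "guidance": "-g", "strength": "-sr", "image": "-i", "mask": "-m",
    "batch": "-b", "facefix": "-ff", "upscale": "-up", "onefile": "-of",
    "out": "-out",
}


def build_argv(script="headless.py", **options):
    """Build a headless.py command line from keyword options."""
    argv = [sys.executable, script]
    for name, value in options.items():
        if name not in FLAGS:
            raise ValueError(f"unknown option: {name}")
        if name in ("width", "height") and value % 8 != 0:
            raise ValueError(f"{name} must be divisible by 8, got {value}")
        if name == "steps" and not 10 <= value <= 50:
            raise ValueError(f"steps must be between 10 and 50, got {value}")
        if isinstance(value, bool):
            value = "true" if value else "false"  # headless expects true/false strings
        argv += [FLAGS[name], str(value)]
    return argv


def decode_png(stdout_text):
    """Decode base64 PNG output (assumption: it is the last non-empty stdout line)."""
    lines = [line.strip() for line in stdout_text.splitlines() if line.strip()]
    if not lines:
        raise ValueError("headless.py printed nothing")
    data = lines[-1]
    if data.startswith("data:") and "," in data:
        data = data.split(",", 1)[1]  # strip an optional data-URL prefix
    png = base64.b64decode(data, validate=True)
    if not png.startswith(PNG_SIGNATURE):
        raise ValueError("output is not a PNG image")
    return png


def render(prompt, **options):
    """Run headless.py once and return the PNG bytes (single image assumed)."""
    argv = build_argv(prompt=prompt, out="base64", **options)
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return decode_png(result.stdout)
```

For example, `png = render("a cozy cabin", steps=25)` followed by `open("cabin.png", "wb").write(png)` would save the result. Validating in Python first gives a clearer error than a failed render.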

--help show this help message and exit

--get -get fetch data from server by record id (int)

--batch -b number of images to render (def: 1)

--checkpoint -ck set checkpoint by file name, null = default checkpoint (def: null)

--scheduler -sc ddpm, ddim, pndm, lms, euler, euler_anc (def: euler_anc)

--prompt -p positive prompt input

--negative -n negative prompt input

--width -w image width, must be divisible by 8 (def: 512)

--height -h image height, must be divisible by 8 (def: 512)

--seed -s seed number, 0 to randomize (def: 0)

--steps -st number of steps, 10 to 50; 20-25 is usually enough (def: 20)

--guidance -g guidance scale, how closely the image follows the prompt (def: 7.5)

--strength -sr how much to change the input image, 0.0 to 1.0; lower values stay closer to the original (def: 0.5)

--image -i PNG file path or base64 PNG (def: '')

--mask -m PNG file path or base64 PNG (def: '')

--facefix -ff true/false, face restoration using GFPGAN (def: false)

--upscale -up true/false, 4x upscale using Real-ESRGAN (def: false)

--savefile -sv true/false, save the image as PNG with embedded metadata (def: true)

--onefile -of true/false, save the final result only (def: false)

--outpath -o /path-to-directory (def: ./.temp)

--filename -f filename prefix (.png extension is not required)

--metadata -meta /path-to-image.png, extract metadata from PNG

--out -out what to print to stdout: 'metadata' or 'base64' (def: metadata)
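The metadata that `-meta` extracts is presumably stored in PNG text chunks, the usual place for this kind of data. As a rough, dependency-free sketch, those chunks can be read with the standard library alone. The keys Mental Diffusion writes are not documented here, so the function returns every tEXt, zTXt and iTXt entry it finds; CRCs are not verified. `parse_png_text` and `read_png_text` are hypothetical names.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def parse_png_text(data):
    """Return {keyword: text} for every tEXt, zTXt and iTXt chunk in PNG bytes."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    meta = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        pos += 12 + length  # 4 length + 4 type + data + 4 CRC (CRC not checked)
        key, _, rest = body.partition(b"\x00")
        if ctype == b"tEXt":
            meta[key.decode("latin-1")] = rest.decode("latin-1")
        elif ctype == b"zTXt":
            # rest[0] is the compression method (0 = zlib), the rest is compressed text
            meta[key.decode("latin-1")] = zlib.decompress(rest[1:]).decode("latin-1")
        elif ctype == b"iTXt":
            compressed = rest[0]  # rest[1] is the compression method
            _lang, _, rest = rest[2:].partition(b"\x00")
            _translated, _, text = rest.partition(b"\x00")
            if compressed:
                text = zlib.decompress(text)
            meta[key.decode("latin-1")] = text.decode("utf-8")
        elif ctype == b"IEND":
            break
    return meta


def read_png_text(path):
    """Read a PNG file from disk and return its text metadata."""
    with open(path, "rb") as fh:
        return parse_png_text(fh.read())
```

If Pillow is installed, `Image.open(path).text` returns a similar dictionary.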

* When the image is not empty, the pipeline switches to image-to-image

* When the image and mask are not empty, the pipeline switches to inpainting

* Check server.log for previous records
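The switching rules above, plus the `-sc` scheduler names, map onto Diffusers classes roughly as follows. This is a sketch, not the project's code: the class names are real Diffusers classes, but the exact mapping Mental Diffusion uses is an assumption, and a mask given without an image is simply ignored here. `select_pipeline` and `scheduler_class_name` are hypothetical helpers.

```python
# -sc value -> Diffusers scheduler class name (assumed mapping)
SCHEDULERS = {
    "ddpm": "DDPMScheduler",
    "ddim": "DDIMScheduler",
    "pndm": "PNDMScheduler",
    "lms": "LMSDiscreteScheduler",
    "euler": "EulerDiscreteScheduler",
    "euler_anc": "EulerAncestralDiscreteScheduler",
}


def select_pipeline(image="", mask=""):
    """Pick a pipeline class name from which inputs are non-empty."""
    if image and mask:
        return "StableDiffusionInpaintPipeline"
    if image:
        return "StableDiffusionImg2ImgPipeline"
    return "StableDiffusionPipeline"  # a mask without an image is ignored


def scheduler_class_name(name="euler_anc"):
    """Translate a -sc value into a Diffusers scheduler class name."""
    try:
        return SCHEDULERS[name]
    except KeyError:
        raise ValueError(f"unknown scheduler {name!r}; choose from {sorted(SCHEDULERS)}") from None
```

With Diffusers installed, a name can be resolved with `getattr(diffusers, name)`, and a scheduler can be swapped on a loaded pipeline with `SchedulerClass.from_config(pipe.scheduler.config)`.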

Incorrect and correct mask image

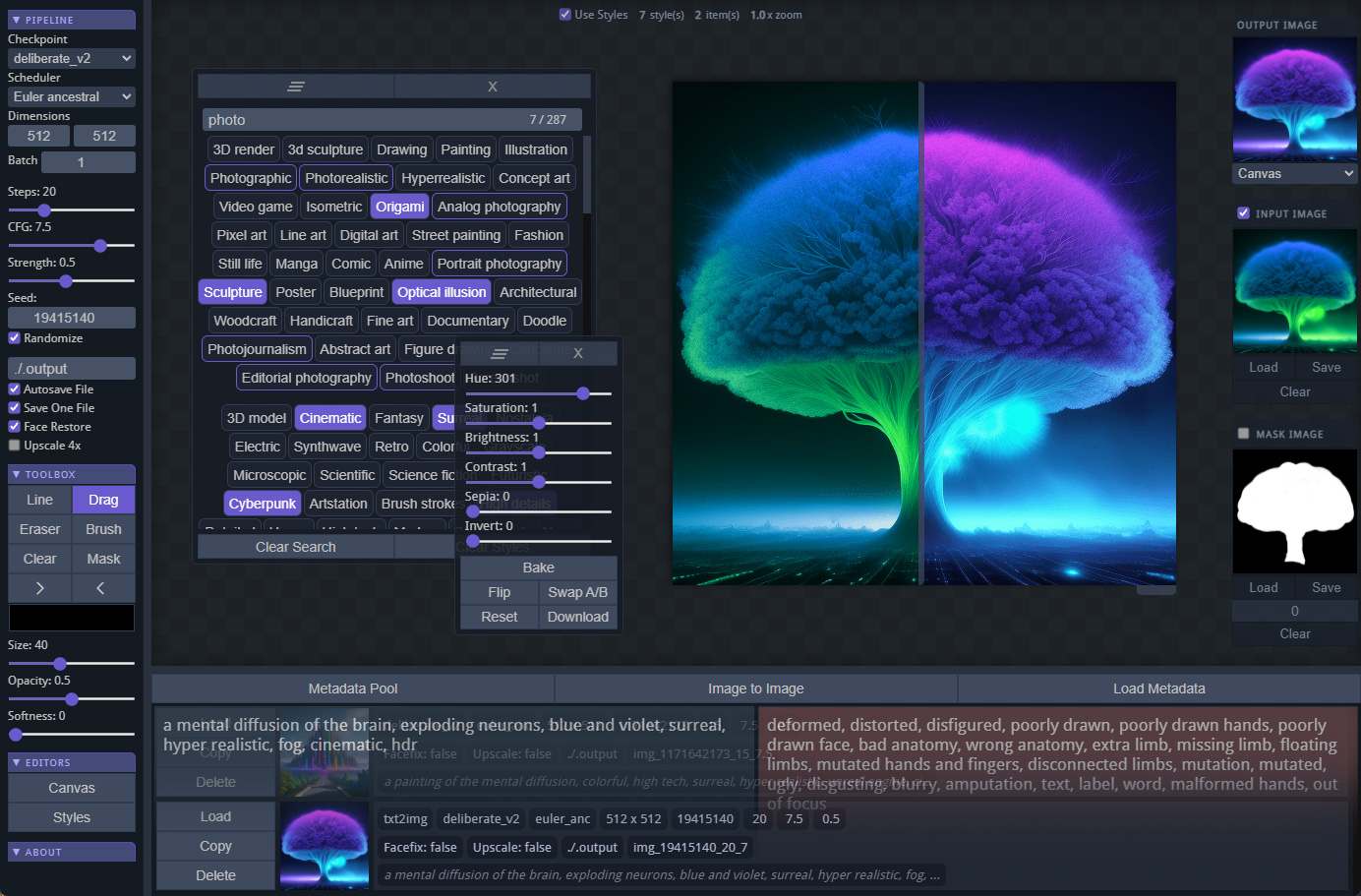

The web interface is a prototype with only minor bugs

- Online and offline web UI

- Drag and drop workflow

- Image comparison A/B

A - Front canvas (left)

B - Background image (right)

- Painting canvas (brush, line, eraser, mask, color picker)

- Canvas editor (flip, hue, saturation, brightness, contrast, sepia, invert)

- Styles editor (predefined keywords, included in the metadata)

- Guide the AI using text, brush strokes and color adjustments

- Quick mask painting

- Generates input and mask images for outpainting

- Autosave prompts

- Autosave PNG with metadata

- Metadata pool (single or multiple PNG import)

- Bake canvas to image

- Pan and zoom canvas

- Undo/redo for painting tools (brush, line, eraser, mask)

- Your data is safe and can be loaded again as long as "Autosave File" is checked

- To merge your painting with the image pixels, bake the canvas

- To outpaint, set the "Outpaint Padding" size and set Strength to 1.0; the initial image and mask are generated for you
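The outpaint setup can be pictured with a small sketch. It assumes the padding is added on all four sides and uses the Diffusers inpainting convention (white mask pixels are regenerated, black pixels are kept); the web UI's actual canvas fill and mask layout may differ. Strength 1.0 fits because the padded border has no content worth preserving. `outpaint_layout` and `outpaint_mask` are hypothetical helpers.

```python
def outpaint_layout(width, height, padding):
    """Padded canvas size and the box (left, top, right, bottom) of the original."""
    if padding < 0:
        raise ValueError("padding must not be negative")
    new_w, new_h = width + 2 * padding, height + 2 * padding
    if new_w % 8 or new_h % 8:
        raise ValueError(f"padded size {new_w}x{new_h} is not divisible by 8")
    return new_w, new_h, (padding, padding, padding + width, padding + height)


def outpaint_mask(width, height, padding):
    """Mask rows for the padded canvas: 255 = generate (border), 0 = keep (original)."""
    new_w, new_h, (left, top, right, bottom) = outpaint_layout(width, height, padding)
    return [
        [0 if left <= x < right and top <= y < bottom else 255 for x in range(new_w)]
        for y in range(new_h)
    ]
```

For a 512x512 image with padding 128, the canvas becomes 768x768 and the original sits at (128, 128, 640, 640).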

Schedulers compared at equal steps; the step count is not enough for PNDM/LMS

GFPGAN was applied to the LMS rendering above

Painting blood to guide the AI (GFPGAN result)

About 10 inpainting renders; the top image is the original

Outpaint examples, padding 128 and 256

Outpaint padding 128; use inpainting to clean up errors

| Mouse | Action |
|---|---|
| Left Button | Drag, draw, select |
| Middle Button | Reset zoom |
| Right Button | Pan canvas |
| Wheel | Zoom canvas in/out |

| Key | Action |
|---|---|
| Space | Toggle metadata pool |
| D | Drag tool |
| B | Brush tool |
| L | Line tool |
| E | Eraser tool |
| M | Mask tool |
| ] | Increase tool size |
| [ | Decrease tool size |
| + | Increase tool opacity |
| - | Decrease tool opacity |
| CTRL + Enter | Render/Generate |
| CTRL + L | Load PNG metadata |
| CTRL + Z | Undo painting |
| CTRL + X | Redo painting |

curl -o md-installer.py https://raw.githubusercontent.com/nimadez/mental-diffusion/main/installer/md-installer.py

- Download python-3.10.11-embed-amd64.zip

curl https://bootstrap.pypa.io/get-pip.py -k --ssl-no-revoke -o get-pip.py

python get-pip.py

python -m pip install torch==2.0.1+cu118 torchvision==0.15.2+cu118 --extra-index-url https://download.pytorch.org/whl/cu118

python -m pip install accelerate==0.20.3

python -m pip install diffusers==0.18.2

python -m pip install transformers==4.30.2

python -m pip install omegaconf==2.3.0

python -m pip install safetensors==0.3.1

python -m pip install realesrgan==0.3.0

python -m pip install gfpgan==1.3.8

python -m pip install websockets==11.0.3
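After a manual install, a short script using `importlib.metadata` (standard library since Python 3.8) can confirm the pinned versions. The pin list just mirrors the pip commands above; the helper name `check_versions` and the output format are arbitrary choices for this sketch.

```python
from importlib import metadata

# Mirrors the pip commands above.
PINNED = {
    "torch": "2.0.1+cu118",
    "torchvision": "0.15.2+cu118",
    "accelerate": "0.20.3",
    "diffusers": "0.18.2",
    "transformers": "4.30.2",
    "omegaconf": "2.3.0",
    "safetensors": "0.3.1",
    "realesrgan": "0.3.0",
    "gfpgan": "1.3.8",
    "websockets": "11.0.3",
}


def check_versions(pinned=PINNED):
    """Map each package to (installed version or None, matches the pin)."""
    report = {}
    for name, wanted in pinned.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        report[name] = (installed, installed == wanted)
    return report


if __name__ == "__main__":
    for name, (installed, ok) in check_versions().items():
        status = "OK" if ok else "!!"
        print(f"{status} {name}: installed {installed}, pinned {PINNED[name]}")
```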

git clone https://github.com/nimadez/mental-diffusion.git

run.bat -> start server (url: http://localhost:8011)

headless.py -> use headless if you are familiar with consoles

* edit "config.json" to define model paths

- 200 MB gfpgan weights (root directory)

- 1.7 GB openai/clip-vit-large-patch14 (huggingface cache)

To avoid re-downloading the Hugging Face cache, set the HF_HOME environment variable to your cache directory:

> setx HF_HOME path-to-dir\.cache\huggingface
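`setx` only affects console windows opened afterwards, so restart the console (and run.bat) after setting it. To check where the cache will land, the sketch below approximates the default lookup in recent huggingface_hub releases: HF_HOME, else `$XDG_CACHE_HOME/huggingface`, else `~/.cache/huggingface`, with downloaded models under its `hub` subfolder. Other releases may also honor additional variables, so treat it as an approximation; `hf_home` is a hypothetical helper.

```python
import os
from pathlib import Path


def hf_home(env=None):
    """Approximate the default Hugging Face cache root used by huggingface_hub."""
    env = os.environ if env is None else env
    if env.get("HF_HOME"):
        return Path(env["HF_HOME"]).expanduser()
    xdg = env.get("XDG_CACHE_HOME")
    base = Path(xdg) if xdg else Path.home() / ".cache"
    return (base / "huggingface").expanduser()


if __name__ == "__main__":
    root = hf_home()
    print("HF_HOME:", root)
    print("model downloads:", root / "hub")
```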

Some popular checkpoints:

v1-5-pruned-emaonly.safetensors

sd-v1-5-inpainting.ckpt

Deliberate_v2.safetensors

Deliberate-inpainting.safetensors

Reliberate.safetensors

Reliberate-inpainting.safetensors

dreamlike-diffusion-1.0.safetensors

dreamlike-photoreal-2.0.safetensors

Download at least one checkpoint to "models/checkpoints"

vae-ft-mse-840000-ema-pruned.safetensors (optional - to "models/vae")

GFPGANv1.4.pth (required - to "models/gfpgan")

RealESRGAN_x4plus.pth (required - to "models/realesrgan")

- Convert all .ckpt checkpoints to .safetensors (for security)

- Convert all checkpoints to fp16 with prune.py (smaller file size)

- All inpainting checkpoints must have "inpainting" in their filename

- VAE is optional but recommended for best results

- Back to the future, SD v1.x only!

- I do not officially support any models

- Visit Civitai.com for more SD 1.5 checkpoints
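A small sketch that applies these naming rules to a checkpoint folder can catch mistakes before loading. It assumes the folder is models/checkpoints, that only .safetensors files are loadable, and that the "inpainting" match is case-sensitive; `classify_checkpoints` and `scan_checkpoints` are hypothetical names, not part of Mental Diffusion.

```python
from pathlib import Path


def classify_checkpoints(names):
    """Split file names into (regular, inpainting, other) by the rules above."""
    regular, inpainting, other = [], [], []
    for name in sorted(names):
        path = Path(name)
        if path.suffix != ".safetensors":
            other.append(name)  # e.g. .ckpt files still to be converted
        elif "inpainting" in path.stem:
            inpainting.append(name)
        else:
            regular.append(name)
    return regular, inpainting, other


def scan_checkpoints(folder="models/checkpoints"):
    """Classify every file in the checkpoint folder."""
    return classify_checkpoints(p.name for p in Path(folder).iterdir() if p.is_file())
```

Anything in the third list is a file the safetensors-only loader would not pick up.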

Mental Diffusion works offline when internet access is interrupted. When a connection is available, some data is sent and received while loading a checkpoint (Hugging Face libraries try to compare local and remote files).

How to speed up rendering?

- Avoid switching checkpoints constantly; let the loaded checkpoint be cached and reused

- Open NVIDIA Control Panel, enable "Adaptive" power management mode
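The first tip boils down to a one-slot cache: reload only when the requested checkpoint changes. In this sketch `loader` is a hypothetical function that turns a checkpoint name into a loaded pipeline (for example a Diffusers pipeline); whether Mental Diffusion caches exactly this way is an assumption.

```python
from typing import Any, Callable, Optional


class PipelineCache:
    """One-slot cache: reuse the loaded pipeline until a different checkpoint is requested."""

    def __init__(self, loader: Callable[[str], Any]):
        self._loader = loader  # hypothetical: checkpoint name -> loaded pipeline
        self._name: Optional[str] = None
        self._pipe: Any = None

    def get(self, checkpoint: str) -> Any:
        if checkpoint != self._name:
            # Drop our reference to the old pipeline before loading the new one,
            # so two models are not held at once (with torch you may also want
            # torch.cuda.empty_cache() here). Clear the name too, so a failed
            # load is retried instead of returning None.
            self._pipe = None
            self._name = None
            self._pipe = self._loader(checkpoint)
            self._name = checkpoint
        return self._pipe
```

A single slot keeps memory use predictable on 4 GB cards, at the cost of a reload whenever you switch back and forth between checkpoints.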

Why does it give a connection error when loading the checkpoint?

Use a VPN, enable "use_proxy" in config.json, or disable your network connection. (If you have disabled your network connection, do not set "use_proxy" to 1.)

Is SDXL supported?

SDXL requires 12 GB of video memory, so it is not currently supported.

0.1.5 -> back to the roots, major performance gain #1

- Mental Diffusion started with "sdkit" and later moved to Diffusers

- Created for my personal use

Code released under the MIT license.