- [2024.01.09] Updated all v1.0 models trained on Objaverse. Please refer to HF Models and overwrite previous model weights.

- [2023.12.21] Hugging Face Demo is online. Have a try!

- [2023.12.20] Released weights of the base and large models trained on Objaverse.

- [2023.12.20] We release OpenLRM, an open-source implementation of the paper LRM.

```
git clone https://github.com/3DTopia/OpenLRM.git
cd OpenLRM
pip install -r requirements.txt
```

- Model weights are released on Hugging Face.

- Weights will be downloaded automatically when you run the inference script for the first time.

- Please be aware of the license before using the weights.

| Model | Training Data | Layers | Feat. Dim. | Trip. Dim. | Render Res. | Link |
|---|---|---|---|---|---|---|
| openlrm-small-obj-1.0 | Objaverse | 12 | 768 | 32 | 192 | HF |
| openlrm-base-obj-1.0 | Objaverse | 12 | 1024 | 40 | 192 | HF |
| openlrm-large-obj-1.0 | Objaverse | 16 | 1024 | 80 | 384 | HF |
| openlrm-small | Objaverse + MVImgNet | 12 | 768 | 32 | 192 | To be released |
| openlrm-base | Objaverse + MVImgNet | 12 | 1024 | 40 | 192 | To be released |
| openlrm-large | Objaverse + MVImgNet | 16 | 1024 | 80 | 384 | To be released |

Model cards with additional details can be found in model_card.md.
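For scripting against the model list, the table above can be mirrored as plain data. The following is only a sketch: the `ModelSpec` class, its field names, and the `released_models` helper are hypothetical and not part of OpenLRM, and `triplane_dim` assumes that "Trip. Dim." abbreviates the triplane dimension.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    """One row of the model table (hypothetical helper, not an OpenLRM class)."""
    name: str
    training_data: str
    layers: int
    feat_dim: int
    triplane_dim: int  # assumption: "Trip. Dim." means triplane dimension
    render_res: int
    released: bool     # True when the Link column points to Hugging Face


# Values copied from the table; only the *-obj-1.0 weights are released.
MODEL_ZOO = [
    ModelSpec("openlrm-small-obj-1.0", "Objaverse", 12, 768, 32, 192, True),
    ModelSpec("openlrm-base-obj-1.0", "Objaverse", 12, 1024, 40, 192, True),
    ModelSpec("openlrm-large-obj-1.0", "Objaverse", 16, 1024, 80, 384, True),
    ModelSpec("openlrm-small", "Objaverse + MVImgNet", 12, 768, 32, 192, False),
    ModelSpec("openlrm-base", "Objaverse + MVImgNet", 12, 1024, 40, 192, False),
    ModelSpec("openlrm-large", "Objaverse + MVImgNet", 16, 1024, 80, 384, False),
]


def released_models(zoo=MODEL_ZOO):
    """Return the names of models whose weights are already available."""
    return [spec.name for spec in zoo if spec.released]
```

Calling `released_models()` lists the names that can currently be passed to `--model_name`.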

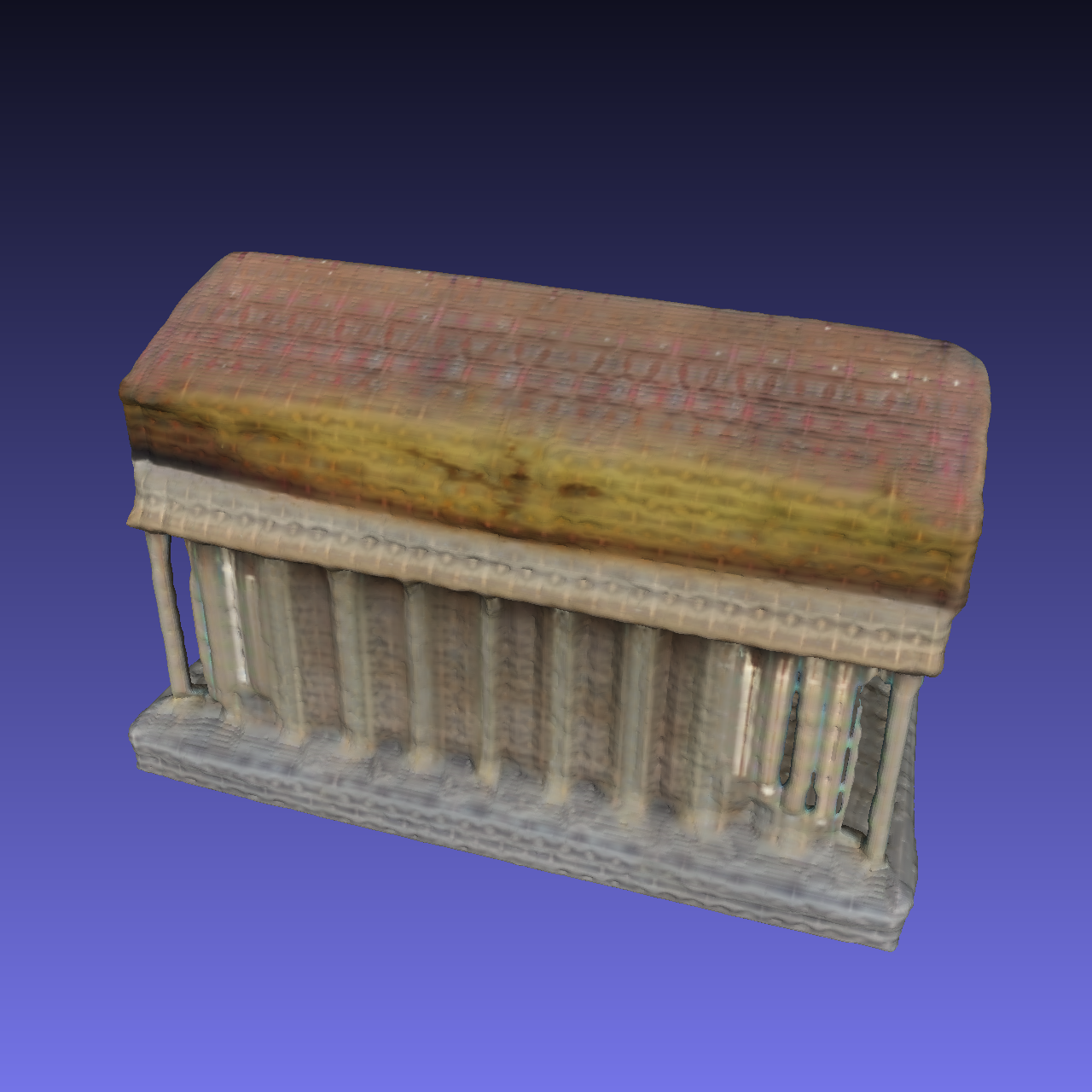

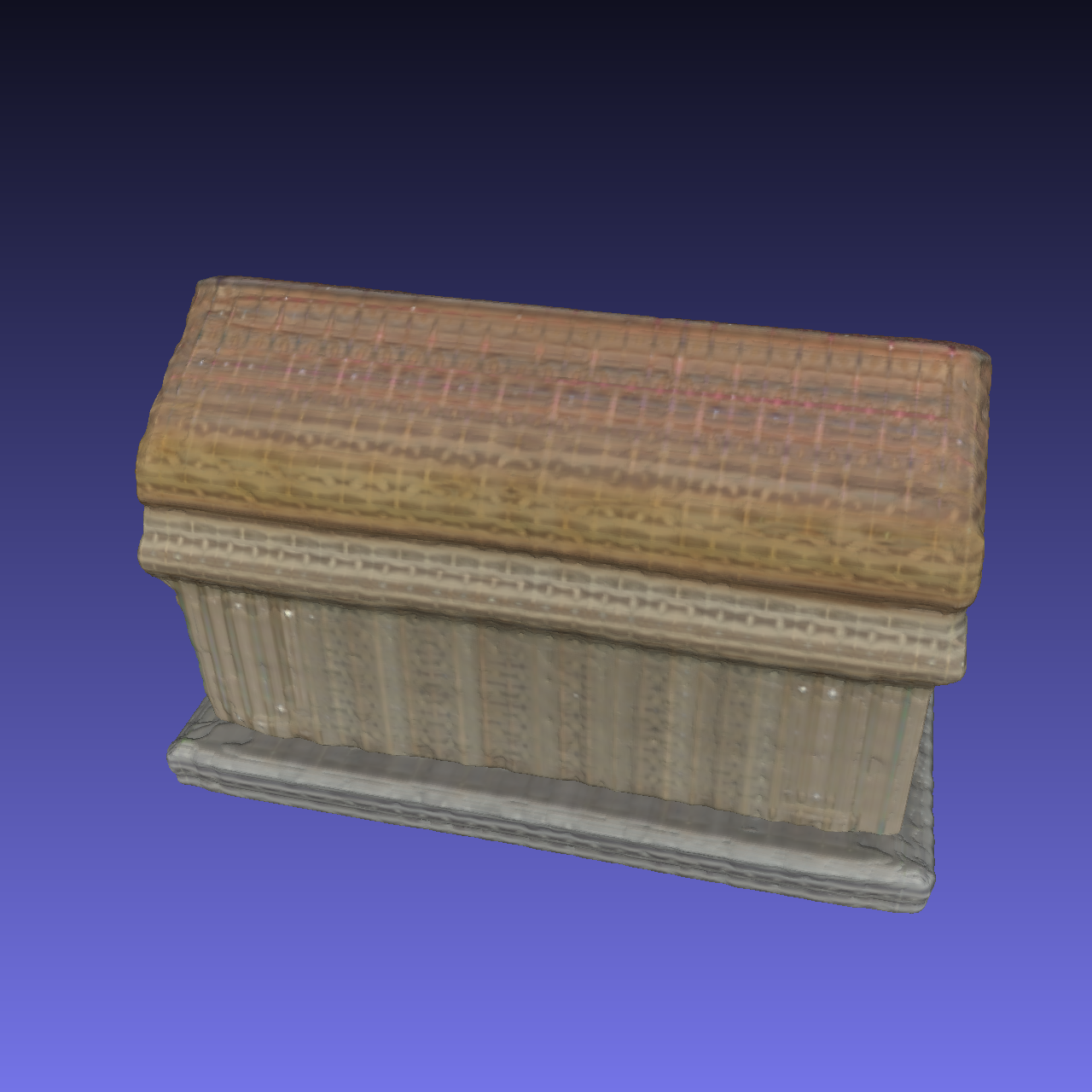

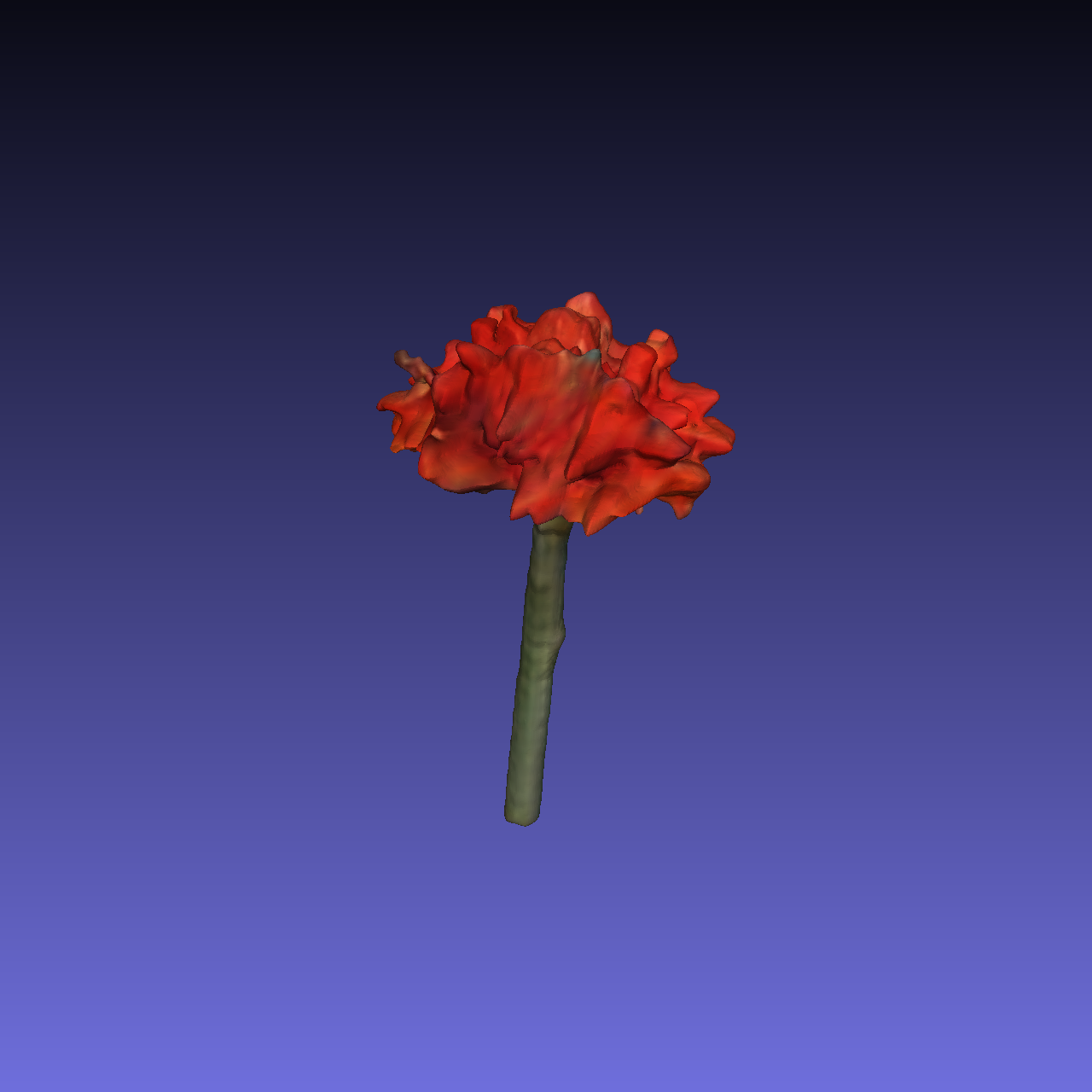

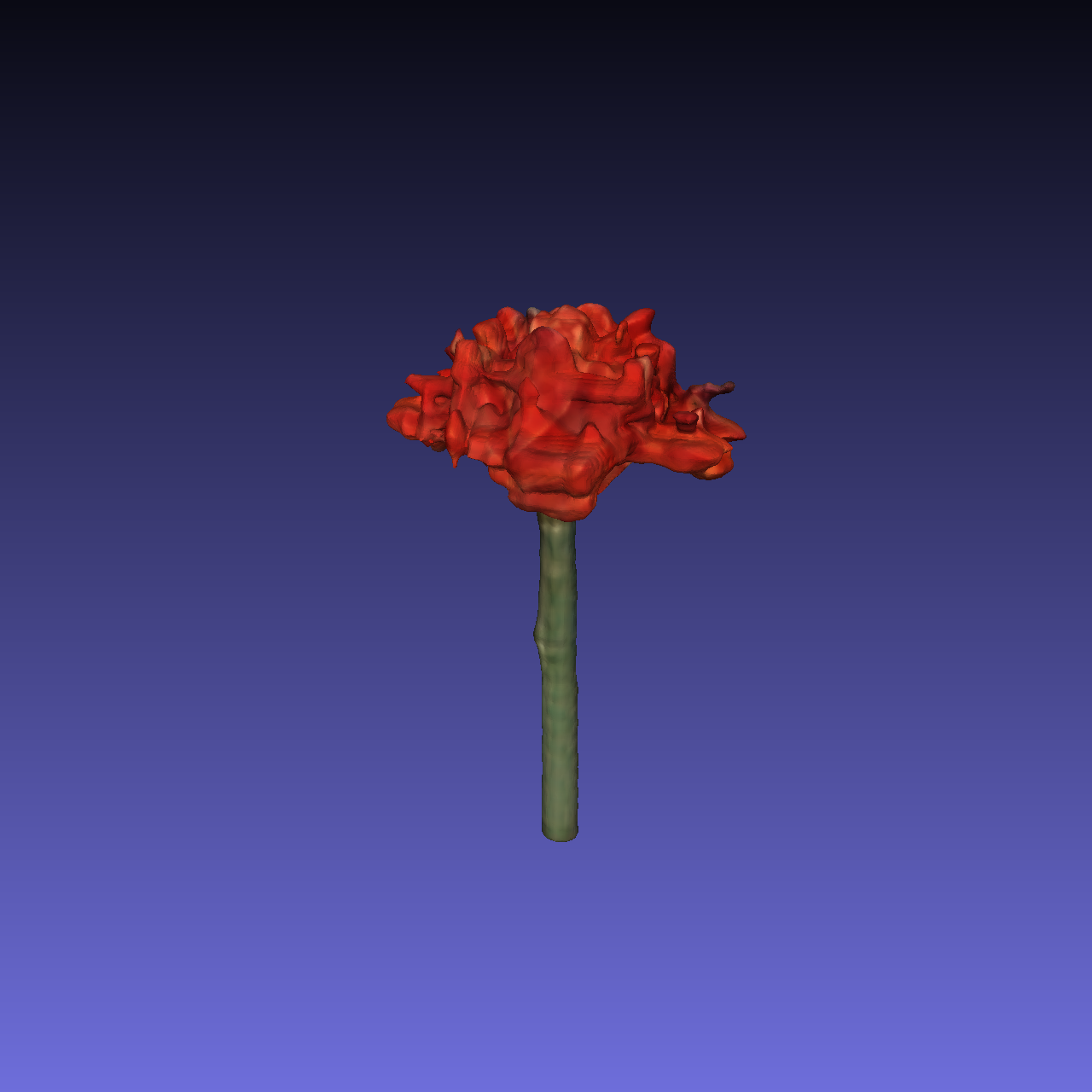

- We put some sample inputs under `assets/sample_input`, and you can quickly try them.
- Prepare RGBA images or RGB images with white background (with some background removal tools, e.g., Rembg, Clipdrop).
- Run the inference script to get 3D assets.
- You may specify which form of output to generate by setting the flags `--export_video` and `--export_mesh`.

```
# Example usages
# Render a video
python -m lrm.inferrer --model_name openlrm-base-obj-1.0 --source_image ./assets/sample_input/owl.png --export_video

# Export mesh
python -m lrm.inferrer --model_name openlrm-base-obj-1.0 --source_image ./assets/sample_input/owl.png --export_mesh
```
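To process every sample image rather than one file at a time, the example commands above can be wrapped in a small driver script. This is a minimal sketch, not part of the repository: the helper names `build_inference_command` and `run_on_directory` are hypothetical, it assumes the inputs are `.png` files, and it only uses the `--model_name`, `--source_image`, `--export_video`, and `--export_mesh` flags shown above, passing one export flag per call as in the examples.

```python
import subprocess
import sys
from pathlib import Path

# Maps an output form to the documented CLI flag.
EXPORT_FLAGS = {"video": "--export_video", "mesh": "--export_mesh"}


def build_inference_command(image_path, model_name="openlrm-base-obj-1.0",
                            export="video"):
    """Build the argument list for one `python -m lrm.inferrer` call."""
    if export not in EXPORT_FLAGS:
        raise ValueError(f"export must be one of {sorted(EXPORT_FLAGS)}")
    return [
        sys.executable, "-m", "lrm.inferrer",
        "--model_name", model_name,
        "--source_image", str(image_path),
        EXPORT_FLAGS[export],
    ]


def run_on_directory(input_dir="./assets/sample_input", dry_run=True, **kwargs):
    """Print (dry_run=True) or execute the command for every PNG in input_dir."""
    commands = [
        build_inference_command(path, **kwargs)
        for path in sorted(Path(input_dir).glob("*.png"))
    ]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops the batch at the first failing image.
            subprocess.run(cmd, check=True)
    return commands
```

Run from the repository root, `run_on_directory(export="mesh", dry_run=False)` would export a mesh for each sample input. Leaving `dry_run=True` only prints the commands, which is a cheap way to check them before the weights are downloaded.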

To be released soon.

- We thank the authors of the original paper for their great work! Special thanks to Kai Zhang and Yicong Hong for assistance during the reproduction.

- Shanghai AI Lab supports this project by providing computing resources.

- This project is advised by Ziwei Liu and Jiaya Jia.

If you find this work useful for your research, please consider citing:

```bibtex
@article{hong2023lrm,
  title={Lrm: Large reconstruction model for single image to 3d},
  author={Hong, Yicong and Zhang, Kai and Gu, Jiuxiang and Bi, Sai and Zhou, Yang and Liu, Difan and Liu, Feng and Sunkavalli, Kalyan and Bui, Trung and Tan, Hao},
  journal={arXiv preprint arXiv:2311.04400},
  year={2023}
}

@misc{openlrm,
  title = {OpenLRM: Open-Source Large Reconstruction Models},
  author = {Zexin He and Tengfei Wang},
  year = {2023},
  howpublished = {\url{https://github.com/3DTopia/OpenLRM}},
}
```

- OpenLRM as a whole is licensed under the Apache License, Version 2.0, while certain components are covered by NVIDIA's proprietary license. Users are responsible for complying with the respective licensing terms of each component.

- Model weights are licensed under the Creative Commons Attribution-NonCommercial 4.0 International License. They are provided for research purposes only, and CANNOT be used commercially.