Despite the relevance of federated learning (FL) to IoT, most existing FL work is evaluated on well-known datasets such as CIFAR-10 and CIFAR-100. These datasets do not originate from authentic IoT devices, so they fail to capture the unique modalities and inherent challenges of real-world IoT data. This discrepancy underscores the need for an IoT-oriented FL benchmark.

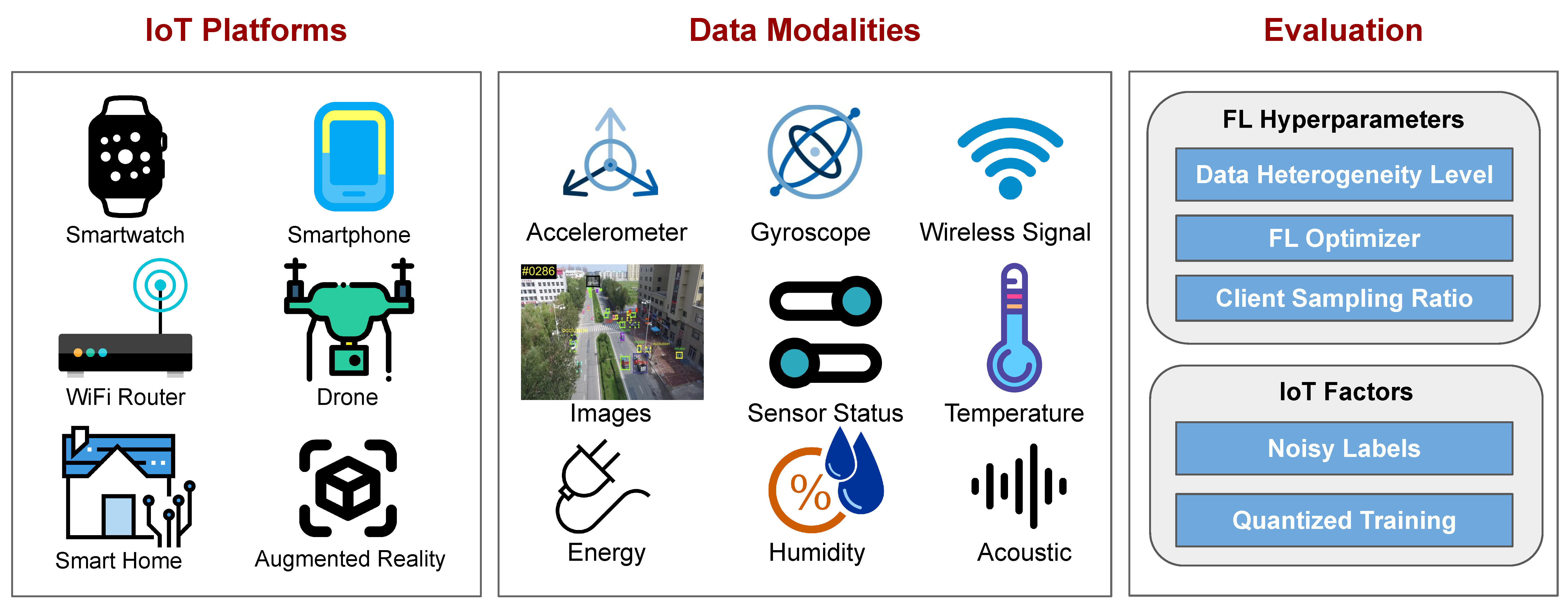

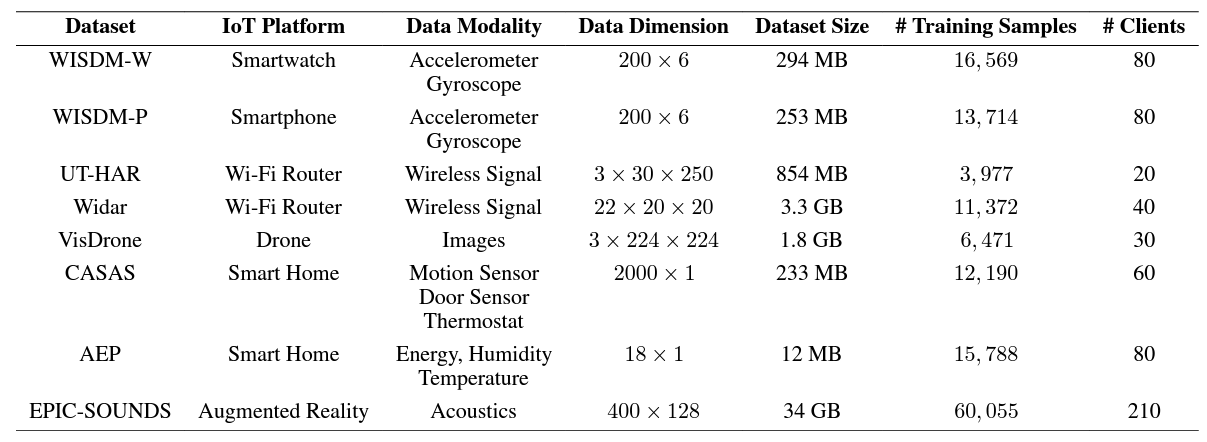

This repository holds the source code for FedAIoT: A Federated Learning Benchmark for Artificial Intelligence of Things. FedAIoT is a benchmarking tool for evaluating FL algorithms against real IoT datasets. It contains eight well-chosen datasets collected from a wide range of authentic IoT devices, from smartwatches, smartphones, and Wi-Fi routers to drones, smart home sensors, and head-mounted devices, that either have already become an indispensable part of people's daily lives or are driving emerging applications. These datasets cover a variety of IoT-specific data modalities, such as wireless data, drone images, and smart home sensor data (e.g., motion, energy, humidity, temperature), that have not been explored in existing FL benchmarks.

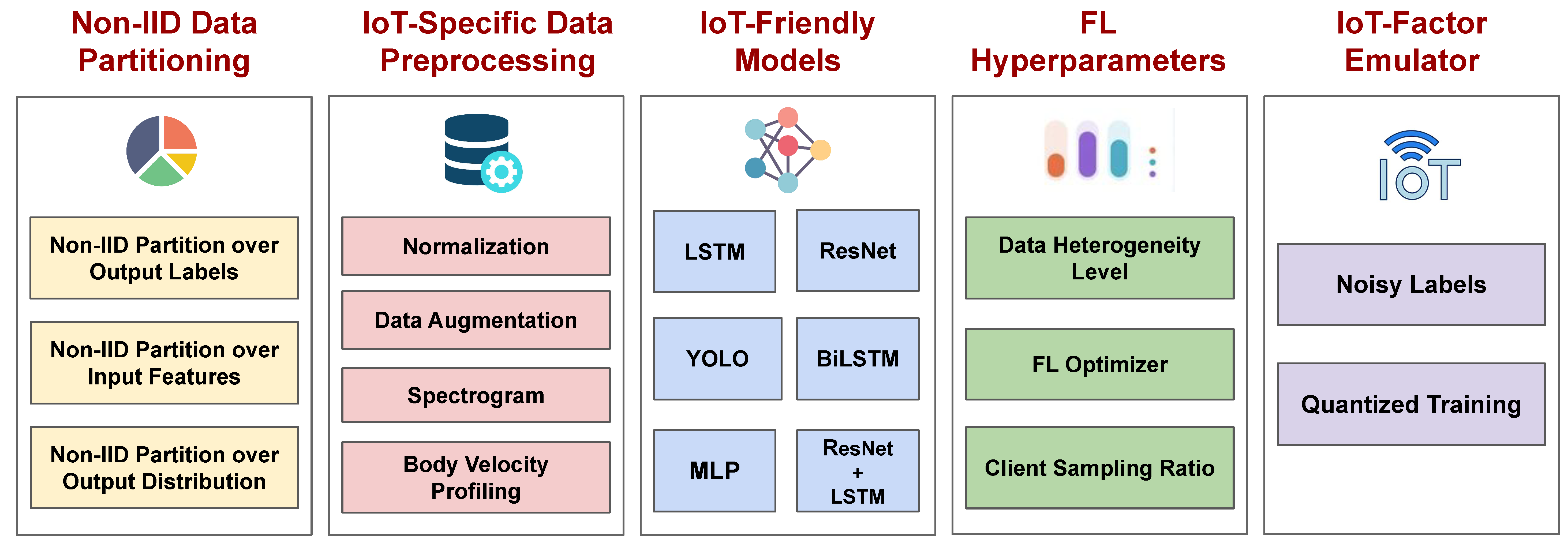

To help the community benchmark performance on these datasets and ensure reproducibility, FedAIoT includes a unified end-to-end FL framework for AIoT. It covers the complete FL-for-AIoT pipeline: non-independent and identically distributed (non-IID) data partitioning, IoT-specific data preprocessing, IoT-friendly models, FL hyperparameters, and an IoT-factor emulator.

pip install -r requirements.txt

FedAIoT currently includes the following eight IoT datasets:

Each dataset folder contains a `download.py` script that downloads the dataset.

Before running, set the environment variables `num_gpus` and `num_trainers_per_gpu`. Together they set the total number of workers for the distributed system. To use only a subset of the GPUs available on the machine, specify them with the `CUDA_VISIBLE_DEVICES` variable.
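When launching runs from a script rather than a shell, the same three variables can be assembled in Python. The following is a minimal sketch, not part of FedAIoT: the helper name `build_worker_env` is hypothetical, and the worker count computed at the end assumes the total is the product of the two variables, which this README does not state explicitly.

```python
import os


def build_worker_env(num_gpus, num_trainers_per_gpu, visible_devices=None, base_env=None):
    """Return a copy of the environment with the launcher variables set.

    Hypothetical helper for illustration; only the variable names come
    from this README.
    """
    env = dict(os.environ if base_env is None else base_env)
    env["num_gpus"] = str(num_gpus)
    env["num_trainers_per_gpu"] = str(num_trainers_per_gpu)
    if visible_devices is not None:
        # CUDA_VISIBLE_DEVICES takes a comma-separated list of device indices.
        env["CUDA_VISIBLE_DEVICES"] = ",".join(str(d) for d in visible_devices)
    return env


# Mirror the single-GPU setting: one GPU, one trainer, device 0.
env = build_worker_env(1, 1, visible_devices=[0], base_env={})

# Assumption: total workers = num_gpus * num_trainers_per_gpu.
total_workers = int(env["num_gpus"]) * int(env["num_trainers_per_gpu"])
```

The resulting dictionary can be passed as the `env` argument of `subprocess.run` when invoking `distributed_main.py`; in that case, omit `base_env` so the rest of the current environment (such as `PATH`) is inherited.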

Take WISDM-W as an example. To train a centralized model on WISDM-W:

num_gpus=1 num_trainers_per_gpu=1 CUDA_VISIBLE_DEVICES=0 python distributed_main.py main --dataset_name wisdm_watch --model LSTM_NET --client_num_in_total 1 --client_num_per_round 1 --partition_type central --alpha 0.1 --lr 0.01 --server_optimizer sgd --server_lr 1 --test_frequency 5 --comm_round 200 --batch_size 128 --analysis baseline --trainer BaseTrainer --watch_metric accuracy

To train a federated model on WISDM-W with FedAvg and 10% client sampling rate under high data heterogeneity:

num_gpus=1 num_trainers_per_gpu=1 CUDA_VISIBLE_DEVICES=0 python distributed_main.py main --dataset_name wisdm_watch --model LSTM_NET --client_num_in_total 80 --client_num_per_round 8 --partition_type dirichlet --alpha 0.1 --lr 0.01 --server_optimizer sgd --server_lr 1 --test_frequency 5 --comm_round 400 --batch_size 32 --analysis baseline --trainer BaseTrainer --watch_metric accuracy
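To give some intuition for `--partition_type dirichlet --alpha 0.1` and for sampling 8 of 80 clients per round, here is a minimal standard-library sketch of label-wise Dirichlet partitioning followed by uniform client sampling. It illustrates the general technique and is not necessarily how FedAIoT implements it; the function name `dirichlet_partition` and the synthetic six-class labels are assumptions made for the example.

```python
import random


def dirichlet_partition(labels, num_clients, alpha, seed=0):
    """Split sample indices across clients, class by class.

    For each class, client shares are drawn from a symmetric
    Dirichlet(alpha) distribution; a smaller alpha gives more skew.
    """
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    clients = [[] for _ in range(num_clients)]
    for label in sorted(by_class):
        idxs = by_class[label]
        rng.shuffle(idxs)
        # A Dirichlet sample is a vector of Gamma(alpha, 1) draws, normalized.
        weights = [rng.gammavariate(alpha, 1.0) for _ in range(num_clients)]
        total = sum(weights)
        if total == 0.0:  # guard against numerical underflow
            weights, total = [1.0] * num_clients, float(num_clients)
        start, cumulative = 0, 0.0
        for client, weight in enumerate(weights):
            cumulative += weight / total
            if client == num_clients - 1:
                end = len(idxs)  # last client takes any remainder
            else:
                end = min(len(idxs), round(cumulative * len(idxs)))
            end = max(end, start)
            clients[client].extend(idxs[start:end])
            start = end
    return clients


# Synthetic labels (hypothetical): 6000 samples over 6 classes.
labels = [i % 6 for i in range(6000)]
parts = dirichlet_partition(labels, num_clients=80, alpha=0.1)

# 10% client sampling: choose 8 of the 80 clients for one round.
round_clients = random.Random(1).sample(range(80), 8)
```

With `alpha` as low as 0.1, most of each class tends to land on a handful of clients, which is the high-heterogeneity regime the command above targets. Larger values of `alpha` spread each class more evenly.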

For the full list of parameters, run:

python distributed_main.py main --help

@article{alam2024fedaiot,
  title={Fed{AI}oT: A Federated Learning Benchmark for Artificial Intelligence of Things},
  author={Samiul Alam and Tuo Zhang and Tiantian Feng and Hui Shen and Zhichao Cao and Dong Zhao and Jeonggil Ko and Kiran Somasundaram and Shrikanth Narayanan and Salman Avestimehr and Mi Zhang},
  journal={Journal of Data-centric Machine Learning Research (DMLR)},
  year={2024},
  url={https://openreview.net/forum?id=fYNw9Ukljz},
}